| Version | Summary | Created by | Modification | Content Size | Created at | Operation |
|---|---|---|---|---|---|---|
| 1 | | Rafael Vaño | -- | 4291 | 2023-02-24 17:07:22 | |
| 2 | | Lindsay Dong | -2 word(s) | 4289 | 2023-02-27 03:40:54 | |
| 3 | | Lindsay Dong | Meta information modification | 4289 | 2023-02-27 03:42:03 | |
| 4 | | Rafael Vaño | + 6 word(s) | 4296 | 2023-02-27 18:56:36 | |


Cloud-native computing principles such as virtualization and orchestration are key to the transition to the promising paradigm of edge computing. The challenges of containerization, the variety of operational models, and the scarcity of established tools make a thorough review indispensable. Container virtualization and its orchestration through Kubernetes (K8s) have dominated the cloud computing domain, and major recent efforts have focused on adapting these technologies to the edge. Such initiatives have addressed either the slimming of container engines and the development of purpose-built operating systems, or the development of smaller K8s distributions and edge-focused adaptations (such as KubeEdge). Finally, new workload virtualization approaches, such as WebAssembly modules, together with the joint orchestration of these heterogeneous workloads, appear to be the topics to watch in the short to medium term.

1. Introduction

2. Virtualization: From Cloud Computing to Constrained Environments

3. Current Commercial Approaches from Public Cloud Providers

4. Container Virtualization: Deploying Container Workloads on the Edge

4.1. Container Engines

4.2. Purpose-Built Operating Systems

5. Future Directions: The Horizon beyond Containers-Only

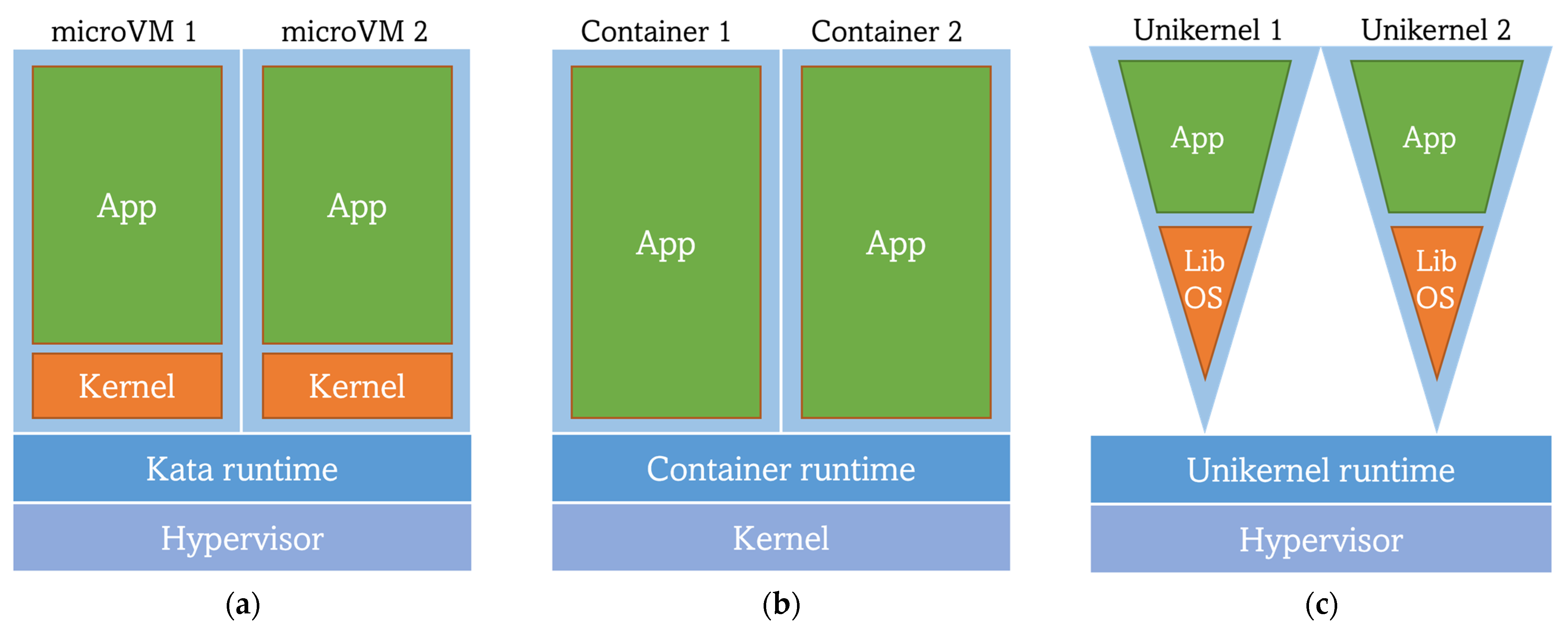

A new approach to workload virtualization has arisen that keeps the isolation of VMs while avoiding some of their drawbacks. It does so by reducing the size and requirements of VMs, yielding so-called microVMs or lightweight VMs, which intend to be even lighter and faster than containers. Kata Containers [39], a project hosted by the Open Infrastructure Foundation (formerly the OpenStack Foundation), aims to run lightweight VMs instead of containers while remaining compliant with the OCI runtime and image specifications. By adhering to OCI, Kata allows Docker images previously built from Dockerfiles to be deployed as microVMs.

Alongside microVMs, Unikernels are another approach to replacing or complementing containers. According to A. Madhavapeddy and D.J. Scott [40], Unikernels are “single-purpose appliances that are compile-time specialized into standalone kernels and sealed against modification when deployed to a cloud platform”. The main idea behind Unikernels is to use only the strictly necessary parts of the user and kernel space of an operating system to obtain a customized OS that is run by a type 1 hypervisor. This customized OS is built using a library operating system (library OS or libOS), which removes the need for a whole guest OS [41]. This translates into smaller images and shorter boot times, as well as a reduced footprint and attack surface. However, this virtualization technology has notable disadvantages. The most prominent is the lack of standardization compared with containers, followed by the need to rebuild the complete library operating system for every new application or minimal change, and by limited debugging and monitoring capabilities. Unikernel applications are also language-specific (Unikernel development systems exist for only a few languages), which is a further considerable limitation for developers.

Unikraft [42][43] is a Linux-based “automated system for building Unikernels” under the umbrella of the Linux Foundation (inside its Xen Project) and partially funded by the EU H2020 project UNICORE [44]. Unikraft shows improved performance compared with its peers and with other virtualization technologies such as Docker (in boot time, image size, and memory consumption). In addition, Unikraft leverages an OCI-compliant runtime (runu) along with libvirt (a toolkit for interacting with the hypervisor) to run Unikernels. The novelty is that runu natively supports the execution of workloads previously packaged according to the OCI Image Specification (unlike Nabla), enabling Unikraft-built Unikernels to interact with containers and cloud-native platforms such as K8s.

Figure 3. Comparison of the architectures of different virtualization techniques: (a) MicroVMs (lightweight VMs); (b) Containers; (c) Unikernels.

One step beyond microVMs and Unikernels is the most recent and most promising trend in workload virtualization: WebAssembly (Wasm). Wasm is “a binary instruction format for a stack-based virtual machine”, developed as an open standard by the World Wide Web Consortium (W3C), that seeks to establish itself as a strong alternative to containers. Wasm allows software written in several high-level languages (C++, Go, Kotlin, …) to be compiled and executed with near-native performance in web applications designed to run in web browsers [45][46]. At a high level, Wasm works simply: native code functions are compiled into Wasm modules, which are then loaded and used by a web application (in JavaScript code) in much the same way as ES6 modules.
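
To make the idea of a stack-based instruction format more concrete, the following minimal sketch evaluates the body of one hand-assembled function that adds two 32-bit integers. It is a toy under stated assumptions, not a Wasm runtime: the names `run_body` and `ADD_BODY` are hypothetical, only three opcodes are handled, and validation, module sections, and multi-byte index encoding are skipped.

```python
# Toy illustration only: evaluates one hand-assembled function body.
# It is NOT a WebAssembly runtime (no validation, no module sections).

LOCAL_GET = 0x20  # push the value of a local (here: a parameter)
I32_ADD = 0x6A    # pop two i32 values, push their sum (wraps at 32 bits)
END = 0x0B        # marks the end of the function body

# Instruction bytes equivalent to the text form:
#   local.get 0, local.get 1, i32.add, end
ADD_BODY = bytes([LOCAL_GET, 0x00, LOCAL_GET, 0x01, I32_ADD, END])


def run_body(body: bytes, params: list) -> int:
    """Evaluate a function body built from the three opcodes above."""
    stack = []
    pc = 0  # position of the next byte to decode
    while pc < len(body):
        opcode = body[pc]
        pc += 1
        if opcode == LOCAL_GET:
            index = body[pc]  # assumption: the index fits in a single byte
            pc += 1
            stack.append(params[index])
        elif opcode == I32_ADD:
            right = stack.pop()
            left = stack.pop()
            stack.append((left + right) & 0xFFFFFFFF)
        elif opcode == END:
            break
        else:
            raise ValueError(f"unsupported opcode: {opcode:#04x}")
    return stack.pop()


print(run_body(ADD_BODY, [2, 40]))
```

Operands are pushed onto and popped from an implicit stack rather than named registers, which is part of what keeps the binary encoding compact.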

More recently, developers have sought to move Wasm outside web browsers, in other words, from the (web) client side to the server side, for a standalone mode of operation. The tiny size, low memory footprint, strong isolation, fast startup (up to 100 times faster than containers), and short response times of Wasm binaries make Wasm well suited to running workloads on edge and IoT devices using Wasm runtimes such as WasmEdge, Wasmer, and Wasmtime. Adopting Wasm in edge environments (where resource-constrained nodes struggle to run container workloads) could increase the number of services running simultaneously compared with what containers currently achieve in the same environment [47][48]. This line of work led to the creation of the WebAssembly System Interface (WASI), a “modular system interface for WebAssembly” whose main purpose is to enable the execution of Wasm on the server side through the creation and standardization of APIs.

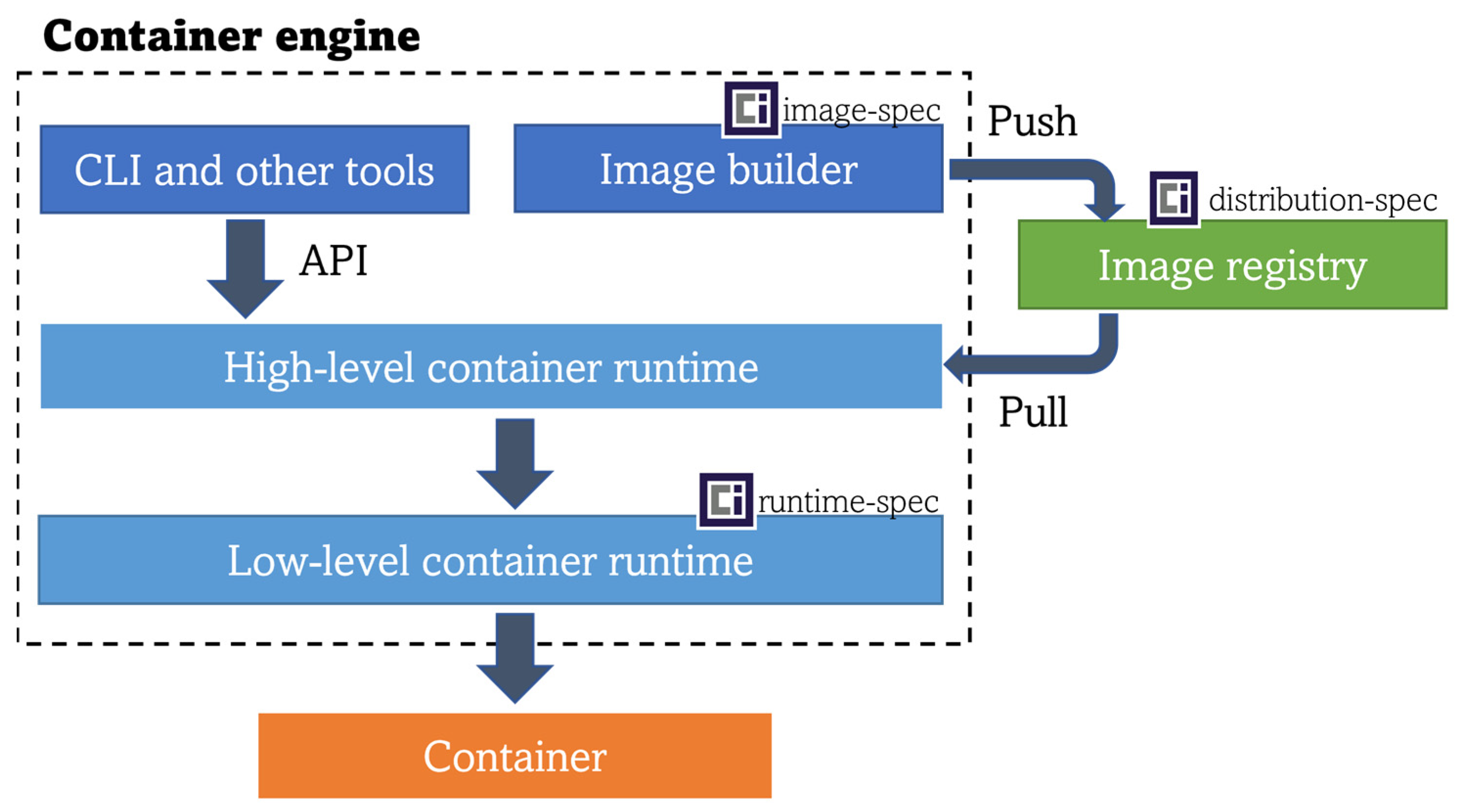

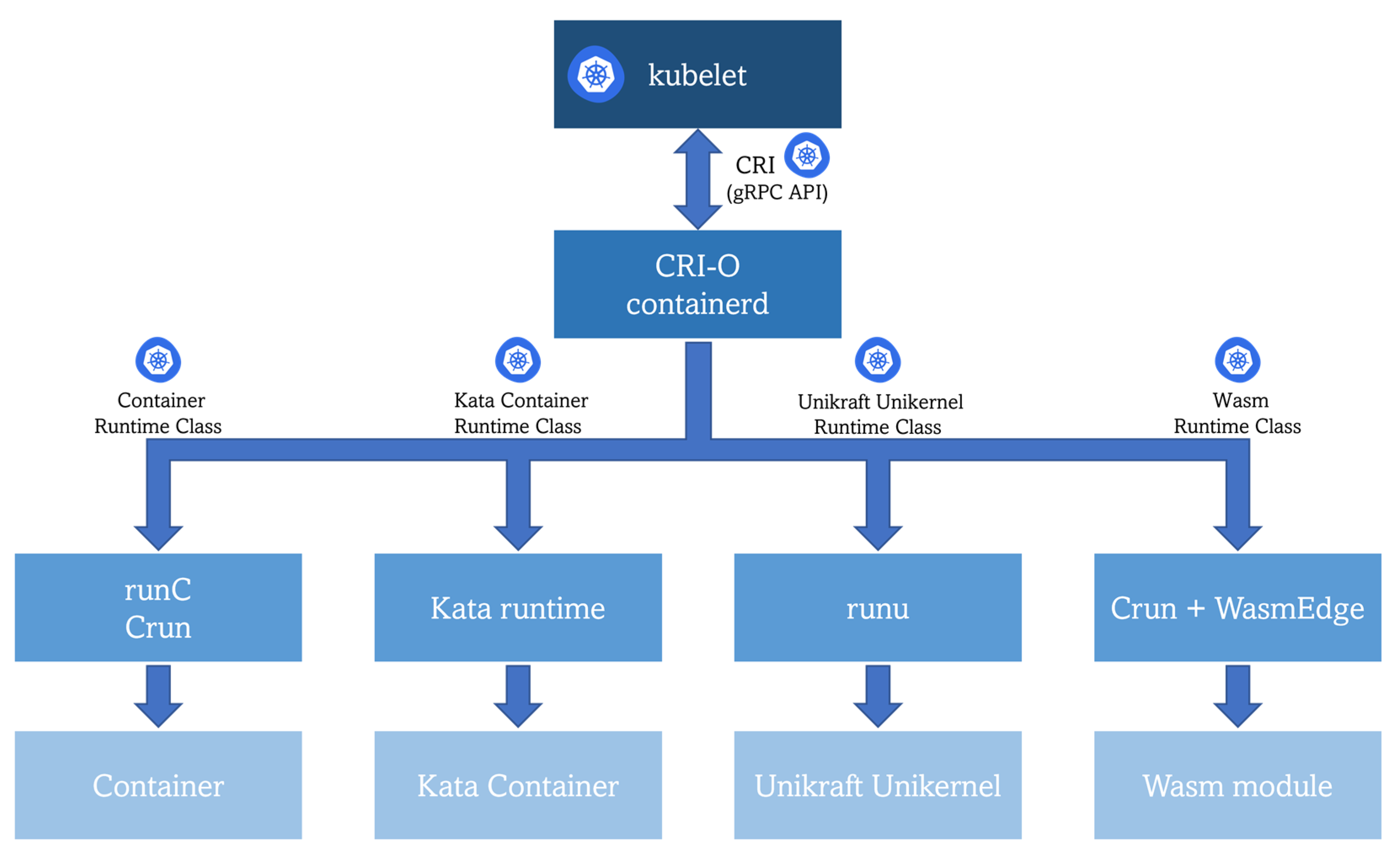

The enthusiasm for this technology is such that Docker cofounder Solomon Hykes stated in 2019 that, if Wasm along with WASI had existed in 2008, they would not have needed to create Docker [49]. However, this does not mean that the two types of workloads are incompatible or that one technology is expected to replace the other; both can be combined, and the choice between them should depend on the final business use case. The coexistence of different workload types therefore calls for a common orchestration mechanism, and Kubernetes stands out as the most interesting candidate.

In the general K8s architecture, the kubelet component acts as a gRPC API client of a high-level container runtime that implements the Kubernetes Container Runtime Interface (CRI), through which it launches pods and their containers. Looking deeper into how K8s works, a resource named RuntimeClass is the key underlying component. This resource is “a feature for selecting the container runtime configuration” [50], which can be leveraged to map different workload types to their corresponding low-level runtime (the same architectural spot as the low-level runtime in Figure 1). The high-level container runtime installed in the cluster, for instance CRI-O or containerd, can thus send a request to the proper low-level runtime to actually run the requested workload, as shown in Figure 4. The only requirement is that the application be packaged into an OCI-compliant image previously stored in an OCI-compliant image registry (the same precondition as for the Docker Engine).
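
The mapping from workload type to low-level runtime can be sketched as follows. This is a minimal illustration rather than a deployment recipe: it builds RuntimeClass and Pod manifests as Python dictionaries and mimics the lookup from a Pod's `runtimeClassName` to a handler. The handler names `kata` and `wasm`, the image URLs, the `runc` default, and the helper functions are all assumptions; real handler names must match the runtimes configured in the node's containerd or CRI-O installation.

```python
import json
from typing import Optional


def runtime_class(name: str, handler: str) -> dict:
    """Build a RuntimeClass manifest (API group node.k8s.io/v1)."""
    return {
        "apiVersion": "node.k8s.io/v1",
        "kind": "RuntimeClass",
        "metadata": {"name": name},
        # Assumption: this handler is configured in the node's CRI runtime.
        "handler": handler,
    }


def pod(name: str, image: str, runtime_class_name: Optional[str] = None) -> dict:
    """Build a single-container Pod manifest, optionally selecting a RuntimeClass."""
    spec = {"containers": [{"name": name, "image": image}]}
    if runtime_class_name is not None:
        spec["runtimeClassName"] = runtime_class_name
    return {"apiVersion": "v1", "kind": "Pod",
            "metadata": {"name": name}, "spec": spec}


def resolve_handler(pod_manifest: dict, classes: list,
                    default_handler: str = "runc") -> str:
    """Mimic the lookup Pod -> RuntimeClass -> low-level runtime handler."""
    wanted = pod_manifest["spec"].get("runtimeClassName")
    if wanted is None:
        # Assumption: the node's default low-level runtime is runc.
        return default_handler
    for rc in classes:
        if rc["metadata"]["name"] == wanted:
            return rc["handler"]
    raise LookupError(f"no RuntimeClass named {wanted!r}")


# Hypothetical classes for microVM and Wasm workloads.
CLASSES = [runtime_class("kata", "kata"), runtime_class("wasm", "wasm")]

# Hypothetical OCI image reference; any OCI-compliant registry would do.
wasm_pod = pod("hello-wasm", "registry.example.com/hello-wasm:latest", "wasm")

print(json.dumps(wasm_pod, indent=2))
print(resolve_handler(wasm_pod, CLASSES))
```

Because `kubectl` accepts JSON manifests as well as YAML, the printed objects could in principle be applied to a cluster whose nodes provide the corresponding handlers.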

References

- Satyanarayanan, M.; Klas, G.; Silva, M.; Mangiante, S. The Seminal Role of Edge-Native Applications. In Proceedings of the 2019 IEEE International Conference on Edge Computing, EDGE 2019—Part of the 2019 IEEE World Congress on Services, Milan, Italy, 1 July 2019; Institute of Electrical and Electronics Engineers Inc.: Milan, Italy, 2019; pp. 33–40.

- Raj, P.; Vanga, S.; Chaudhary, A. Delineating Cloud-Native Edge Computing. In Cloud-Native Computing: How to Design, Develop, and Secure Microservices and Event-Driven Applications; Wiley-IEEE Press: Piscataway, NJ, USA, 2023; pp. 171–201.

- LF Edge Projects. Available online: https://www.lfedge.org/projects/ (accessed on 9 January 2023).

- The Eclipse Foundation: Edge Native Working Group. Available online: https://edgenative.eclipse.org/ (accessed on 9 January 2023).

- CNCF Cloud Native Landscape. Available online: https://landscape.cncf.io/ (accessed on 9 January 2023).

- Shaping Europe’s Digital Future: IoT and the Future of Edge Computing in Europe. Available online: https://digital-strategy.ec.europa.eu/en/news/iot-and-future-edge-computing-europe (accessed on 9 January 2023).

- European Commission Funding & Tender Opportunities: “Future European Platforms for the Edge: Meta Operating Systems (RIA)”. Available online: https://ec.europa.eu/info/funding-tenders/opportunities/portal/screen/opportunities/topic-details/horizon-cl4-2021-data-01-05 (accessed on 9 January 2023).

- European Commission Funding & Tender Opportunities: “Cognitive Cloud: AI-Enabled Computing Continuum from Cloud to Edge (RIA)”. Available online: https://ec.europa.eu/info/funding-tenders/opportunities/portal/screen/opportunities/topic-details/horizon-cl4-2022-data-01-02 (accessed on 9 January 2023).

- EUCloudEdgeIOT: Building the European Cloud Edge IoT Continuum for Business and Research. Available online: https://eucloudedgeiot.eu/ (accessed on 9 January 2023).

- Malhotra, L.; Agarwal, D.; Jaiswal, A. Virtualization in Cloud Computing. J. Inf. Technol. Softw. Eng. 2014, 4, 1–3.

- Bhardwaj, A.; Krishna, C.R. Virtualization in Cloud Computing: Moving from Hypervisor to Containerization—A Survey. Arab. J. Sci. Eng. 2021, 46, 8585–8601.

- OpenFaaS: Serverless Functions Made Simple. Available online: https://www.openfaas.com/ (accessed on 9 January 2023).

- Knative: An Open-Source Enterprise-Level Solution to Build Serverless and Event Driven Applications. Available online: https://knative.dev/docs/ (accessed on 9 January 2023).

- CloudEvents: A Specification for Describing Event Data in a Common Way. Available online: https://cloudevents.io/ (accessed on 9 January 2023).

- Maenhaut, P.J.; Volckaert, B.; Ongenae, V.; de Turck, F. Resource Management in a Containerized Cloud: Status and Challenges. J. Netw. Syst. Manag. 2020, 28, 197–246.

- Yadav, A.K.; Garg, M.L.; Ritika. Docker Containers versus Virtual Machine-Based Virtualization. Adv. Intell. Syst. Comput. 2019, 814, 141–150.

- Canonical Ltd. Linux Containers: LXC. Available online: https://linuxcontainers.org/lxc/introduction/ (accessed on 9 January 2023).

- Duan, Q. Intelligent and Autonomous Management in Cloud-Native Future Networks—A Survey on Related Standards from an Architectural Perspective. Future Internet 2021, 13, 42.

- Alonso, J.; Orue-Echevarria, L.; Casola, V.; Torre, A.I.; Huarte, M.; Osaba, E.; Lobo, J.L. Understanding the Challenges and Novel Architectural Models of Multi-Cloud Native Applications—A Systematic Literature Review. J. Cloud Comput. 2023, 12, 1–34.

- AWS IoT Greengrass. Available online: https://aws.amazon.com/greengrass/features/ (accessed on 9 January 2023).

- What Is AWS Snowball Edge?—AWS Snowball Edge Developer Guide. Available online: https://docs.aws.amazon.com/snowball/latest/developer-guide/whatisedge.html (accessed on 9 January 2023).

- Microsoft Azure IoT Edge. Available online: https://azure.microsoft.com/en-us/products/iot-edge/#iotedge-overview (accessed on 9 January 2023).

- Certifying IoT Devices: Azure Certified Device Program. Available online: https://www.microsoft.com/azure/partners/azure-certified-device (accessed on 9 January 2023).

- Google Distributed Cloud. Available online: https://cloud.google.com/distributed-cloud (accessed on 9 January 2023).

- Open Container Initiative. Available online: https://opencontainers.org/ (accessed on 9 January 2023).

- containerd: An Industry-Standard Container Runtime with an Emphasis on Simplicity, Robustness and Portability. Available online: https://containerd.io/ (accessed on 9 January 2023).

- runC: CLI Tool for Spawning and Running Containers According to the OCI Specification. Available online: https://github.com/opencontainers/runc (accessed on 9 January 2023).

- Moby Project. Available online: https://mobyproject.org/ (accessed on 9 January 2023).

- EVE—LF Edge. Available online: https://www.lfedge.org/projects/eve/ (accessed on 9 January 2023).

- EVE Kubernetes Control Plane Integration—Draft. Available online: https://wiki.lfedge.org/display/EVE/EVE+Kubernetes+Control+Plane+Integration+-+Draft (accessed on 9 January 2023).

- BalenaOS—Run Docker Containers on Embedded IoT Devices. Available online: https://www.balena.io/os/ (accessed on 9 January 2023).

- Pantavisor: A Framework for Containerized Embedded Linux. Available online: https://pantavisor.io/ (accessed on 9 January 2023).

- Truyen, E.; van Landuyt, D.; Preuveneers, D.; Lagaisse, B.; Joosen, W. A Comprehensive Feature Comparison Study of Open-Source Container Orchestration Frameworks. Appl. Sci. 2019, 9, 931.

- Paszkiewicz, A.; Bolanowski, M.; Ćwikła, C.; Ganzha, M.; Paprzycki, M.; Palau, C.E.; Lacalle Úbeda, I. Network Load Balancing for Edge-Cloud Continuum Ecosystems. In Innovations in Electrical and Electronic Engineering; Mekhilef, S., Shaw, R.N., Siano, P., Eds.; Springer Science and Business Media Deutschland GmbH: Antalya, Turkey, 2022; Volume 894 LNEE, pp. 638–651.

- Balouek-Thomert, D.; Renart, E.G.; Zamani, A.R.; Simonet, A.; Parashar, M. Towards a Computing Continuum: Enabling Edge-to-Cloud Integration for Data-Driven Workflows. Int. J. High Perform. Comput. Appl. 2019, 33, 1159–1174.

- K3s: Lightweight Kubernetes. Available online: https://k3s.io/ (accessed on 9 January 2023).

- K3OS: The Kubernetes Operating System. Available online: https://k3os.io/ (accessed on 9 January 2023).

- KubeEdge: A Kubernetes Native Edge Computing Framework. Available online: https://kubeedge.io/en/ (accessed on 9 January 2023).

- Kata Containers: Open-Source Container Runtime, Building Lightweight Virtual Machines That Seamlessly Plug into the Containers Ecosystem. Available online: https://katacontainers.io/ (accessed on 9 January 2023).

- Madhavapeddy, A.; Scott, D.J. Unikernels: Rise of the Virtual Library Operating System. Queue 2013, 11, 30–44.

- Sarrigiannis, I.; Contreras, L.M.; Ramantas, K.; Antonopoulos, A.; Verikoukis, C. Fog-Enabled Scalable C-V2X Architecture for Distributed 5G and beyond Applications. IEEE Netw. 2020, 34, 120–126.

- Kuenzer, S.; Bădoiu, V.-A.; Lefeuvre, H.; Santhanam, S.; Jung, A.; Gain, G.; Soldani, C.; Lupu, C.; Răducanu, C.; Banu, C.; et al. Unikraft: Fast, Specialized Unikernels the Easy Way. In Proceedings of the Sixteenth European Conference on Computer Systems, Online Event, UK, 26–28 April 2021; pp. 376–394.

- Unikraft: A Fast, Secure and Open-Source Unikernel Development Kit. Available online: https://unikraft.org/ (accessed on 9 January 2023).

- UNICORE EU H2020 Project. Available online: https://unicore-project.eu/ (accessed on 9 January 2023).

- WebAssembly. Available online: https://webassembly.org/ (accessed on 9 January 2023).

- WebAssembly Community Group WebAssembly Specification Release 2.0 (Draft 2023-02-13); 2023. Available online: https://webassembly.github.io/spec/core/_download/WebAssembly.pdf (accessed on 13 February 2023).

- Ménétrey, J.; Pasin, M.; Felber, P.; Schiavoni, V. WebAssembly as a Common Layer for the Cloud-Edge Continuum. In Proceedings of the 2nd Workshop on Flexible Resource and Application Management on the Edge, FRAME 2022, Co-Located with HPDC 2022, Minneapolis, MN, USA, 1 July 2022; Association for Computing Machinery, Inc.: Minneapolis, MN, USA, 2022; pp. 3–8.

- Mäkitalo, N.; Mikkonen, T.; Pautasso, C.; Bankowski, V.; Daubaris, P.; Mikkola, R.; Beletski, O. WebAssembly Modules as Lightweight Containers for Liquid IoT Applications. In Proceedings of the Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), Biarritz, France, 18–21 May 2021; Springer Science and Business Media Deutschland GmbH: Berlin, Germany, 2021; Volume 12706 LNCS, pp. 328–336.

- Charboneau, T. Why Containers and WebAssembly Work Well Together. Available online: https://www.docker.com/blog/why-containers-and-webassembly-work-well-together/ (accessed on 9 January 2023).

- Kubernetes Reference Documentation: Runtime Class. Available online: https://kubernetes.io/docs/concepts/containers/runtime-class/ (accessed on 9 January 2023).