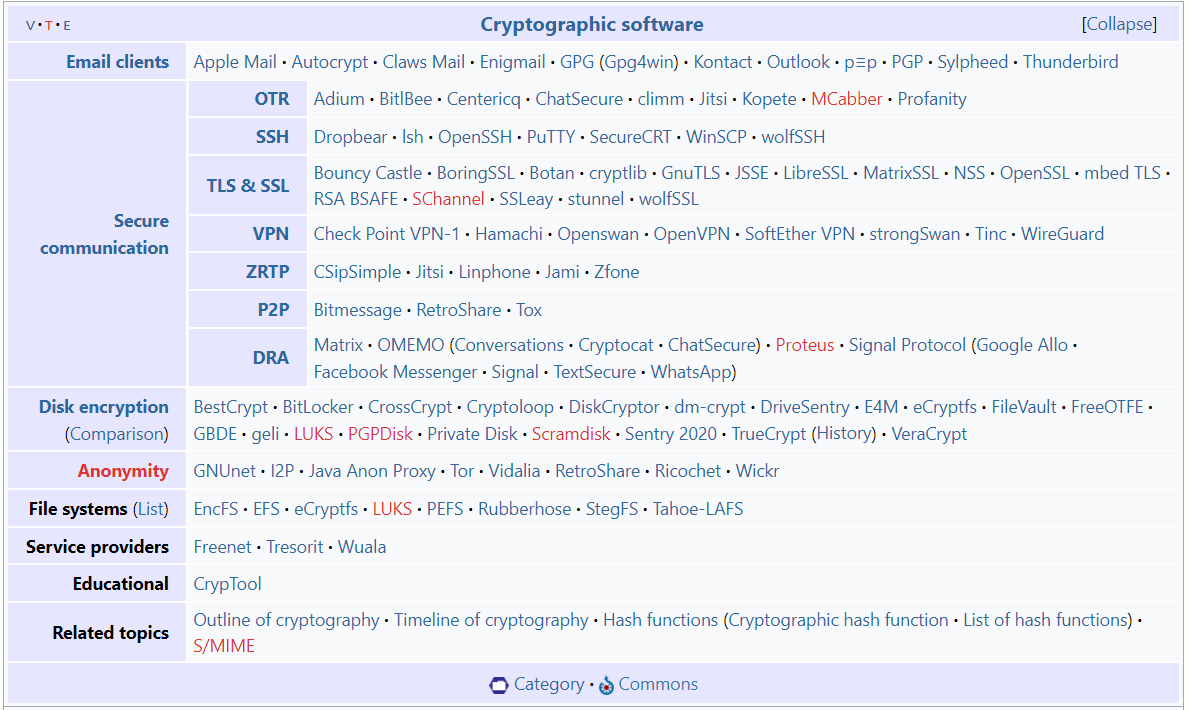

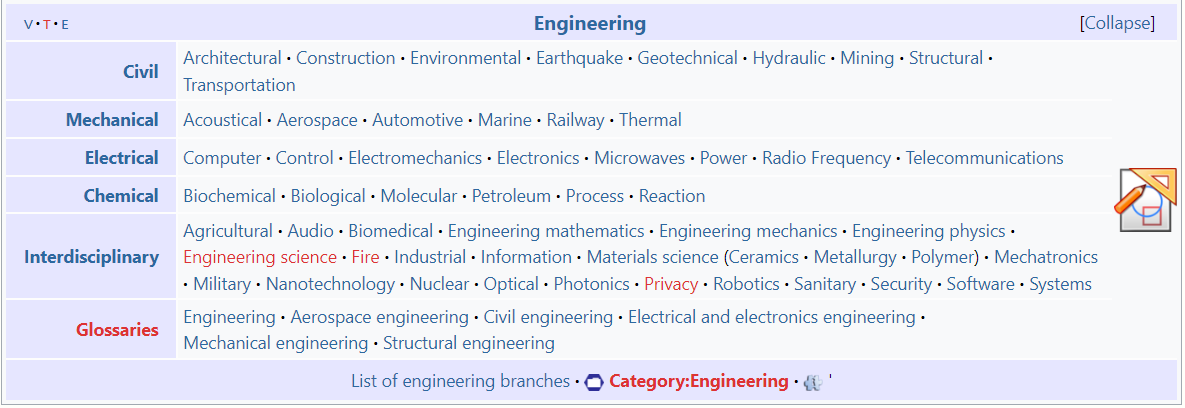

Cryptographic engineering is the discipline of using cryptography to solve human problems. Cryptography is typically applied to ensure data confidentiality, to authenticate people or devices, or to verify data integrity in risky environments. Cryptographic engineering is a complicated, multidisciplinary field. It encompasses mathematics (algebra, finite groups, rings, and fields), computer engineering (hardware design, ASICs, embedded systems, FPGAs), and computer science (algorithms, complexity theory, software design). To practice state-of-the-art cryptographic design, mathematicians, computer scientists, and electrical engineers need to collaborate. The main topics specifically related to cryptographic engineering are:

- cryptographic implementations

- attacks against implementations, and countermeasures against these attacks

- tools and methodologies

- applications

- interactions between cryptographic theory and implementation issues

1. Major Issues

In modern practice, cryptographic engineering is deployed in crypto systems.[1] Like most engineering designs, these are wholly human creations. Most crypto systems are computer software, either embedded in firmware or running as ordinary executable files under an operating system. In some system designs the cryptography runs under manual direction; in others it runs automatically, often in the background. As with other software, and unlike most other engineering, crypto system design faces few external constraints.

1.1. Active Opposition

In other fields of engineering, a successful design (or implementation of one) is one that 'works'. Thus, an aircraft whose aerodynamic design actually lets it fly without crashing is a successful design. How successful matters too, of course, and depends on how well the design meets its intended performance criteria. Continuing with the aircraft example, several World War I fighter designs barely flew, while others flew well (at least one design flew well, but its wings broke off with some regularity) yet had insufficient agility (turning, climbing, and similar rates) or insufficient stability (too-frequent inescapable spins and so on) to be useful or survivable. To a considerable extent, agility in aircraft is inversely related to stability, so fighter designs are, in this respect, inevitable compromises. The same considerations continue today, as in the need for computer 'fly-by-wire' control in some highly agile fighters.

Cryptographic designs also have performance goals (e.g., unbreakability of encryption), but they must perform in a more complex, and more intelligently hostile, environment than the one a fighter aircraft faces in the Earth's atmosphere under war conditions.

Some of the conditions under which crypto designs must work (to be successful, and so worth bothering with) have long been recognized. Sensible cipher designers (of whom there were fewer than their users would have wanted) must be assumed to have sought ways to defeat frequency analysis almost as soon as that cryptanalytic technique was first used. The most effective defense against frequency analysis was the polyalphabetic substitution cipher, invented by Alberti around 1465. For the next several hundred years, other designers also tried to evade frequency analysis, usually poorly, showing that few clearly understood the problem. Probably the best known (and likely the most widely used) of those attempts is the misnamed Vigenère cipher, a partial implementation of Alberti's idea. Edgar Allan Poe famously, and rashly, boasted that no cipher could defeat his cryptanalytic talents (essentially frequency analysis); that he was almost entirely correct about the ciphertexts submitted to him suggests how low cryptographic awareness remained some 400 (!) years after Alberti. As this history suggests, an important part of crypto engineering is understanding the techniques the Opposition may have available.
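The contrast between a monoalphabetic cipher and Alberti's polyalphabetic idea can be sketched in a few lines of Python. This is a toy illustration, assuming English text reduced to the letters A to Z; the function names, the sample sentence, and the key `LEMON` are hypothetical. A Caesar shift preserves the plaintext's letter frequencies, so guessing that the commonest ciphertext letter stands for E recovers the shift. A Vigenère key instead spreads each plaintext letter over several ciphertext letters.

```python
from collections import Counter
import string

ALPHABET = string.ascii_uppercase


def clean(text: str) -> str:
    """Keep only the letters A to Z, upper-cased; the toy ciphers ignore everything else."""
    return "".join(ch for ch in text.upper() if ch in ALPHABET)


def shift_encrypt(plaintext: str, shift: int) -> str:
    """Monoalphabetic (Caesar) cipher: every letter moves by the same amount."""
    return "".join(ALPHABET[(ALPHABET.index(ch) + shift) % 26] for ch in clean(plaintext))


def vigenere_encrypt(plaintext: str, key: str) -> str:
    """Polyalphabetic (Vigenere) cipher: the shift cycles through the letters of the key."""
    key = clean(key)
    return "".join(
        ALPHABET[(ALPHABET.index(ch) + ALPHABET.index(key[i % len(key)])) % 26]
        for i, ch in enumerate(clean(plaintext))
    )


def guess_shift(ciphertext: str) -> int:
    """Frequency analysis: assume the commonest ciphertext letter stands for 'E'."""
    commonest = Counter(ciphertext).most_common(1)[0][0]
    return (ALPHABET.index(commonest) - ALPHABET.index("E")) % 26


def top_share(text: str) -> float:
    """Fraction of the text taken up by its single most frequent letter."""
    return Counter(text).most_common(1)[0][1] / len(text)


sample = "Meet me here at seven; the enemy sees every letter we send."
caesar_ct = shift_encrypt(sample, 3)
vigenere_ct = vigenere_encrypt(sample, "LEMON")

print(guess_shift(caesar_ct))  # 3: the shift falls out of the letter counts
print(top_share(caesar_ct), top_share(vigenere_ct))  # the Vigenere share is lower
```

Flattening single-letter counts does not make the Vigenère cipher secure. With a short, repeating key, the ciphertext is a set of interleaved Caesar ciphers, and Kasiski-style analysis can separate them and attack each one with exactly the frequency count shown above.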

In addition, it has been explicitly recognized since the late 19th century that the Opposition must be credited with certain kinds of knowledge, lest one's design efforts address too little. Kerckhoffs' Law ("The security of a cipher must reside entirely in the key") and its equivalent, the somewhat better known Shannon's Maxim ("The enemy knows the system"), state this more or less clearly. A crypto design must achieve its goals (e.g., confidentiality or message integrity) not only despite active, intelligent Opposition, but despite uncomfortably well-informed Opposition.
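Kerckhoffs' Law is why modern systems use published, heavily analysed algorithms and keep only the key secret. The Python sketch below assumes message authentication with HMAC-SHA256 from the standard library (`hmac`, `hashlib`, `secrets`). The helper names `tag` and `verify` and the sample messages are hypothetical. An Opponent is assumed to know everything in the code except the 32-byte key.

```python
import hashlib
import hmac
import secrets


def tag(key: bytes, message: bytes) -> bytes:
    """HMAC-SHA256: a public, standardized algorithm. Only `key` is secret."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, received_tag: bytes) -> bool:
    """Recompute the tag and compare without a content-dependent early exit."""
    return hmac.compare_digest(tag(key, message), received_tag)


key = secrets.token_bytes(32)  # the only secret in the whole system
message = b"transfer 100 to account 42"
t = tag(key, message)

print(verify(key, message, t))                        # True
print(verify(key, b"transfer 900 to account 42", t))  # False: message altered in transit
# False (with overwhelming probability): the Opponent knows HMAC-SHA256 but not the key.
print(verify(secrets.token_bytes(32), message, t))
```

Publishing the design costs nothing here. Anyone may inspect `tag` and `verify`, and forging a tag still requires the key.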

1.2. Inherent Zero-Defect Requirement

Many failures in cryptographic engineering are catastrophic: success in breaking one message can lead to reading all messages. Most cryptographic algorithms and protocols rest on certain assumptions (random choice of keys or nonces, for example), and when those assumptions are violated, security can be lost entirely.
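The nonce assumption can be made concrete with a toy stream cipher. The keystream construction below (SHA-256 over key, nonce, and a counter) is a hypothetical stand-in, chosen so the sketch needs only Python's standard library. It is not a recommended cipher. The point applies generally to stream ciphers and counter-mode encryption. If the same key and nonce are used twice, XORing the two ciphertexts cancels the keystream and leaves the XOR of the two plaintexts. No key recovery is needed.

```python
import hashlib


def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy keystream (hypothetical, for illustration only): SHA-256(key || nonce || counter) blocks."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encrypt(key: bytes, nonce: bytes, plaintext: bytes) -> bytes:
    """Stream encryption: XOR with the keystream (decryption is the same operation)."""
    return xor(plaintext, keystream(key, nonce, len(plaintext)))


key = bytes(range(32))  # fixed demo key; a real key would come from a CSPRNG
nonce = bytes(12)       # the violated assumption: this nonce is used twice

p1 = b"ATTACK AT DAWN, GATE 3"
p2 = b"RETREAT TO THE BRIDGE!"
c1 = encrypt(key, nonce, p1)
c2 = encrypt(key, nonce, p2)

# The keystream cancels: c1 XOR c2 == p1 XOR p2, without any knowledge of the key.
assert xor(c1, c2) == xor(p1, p2)

# An Opponent who knows or guesses p1 (e.g. a standard header) now reads p2 outright.
print(xor(xor(c1, c2), p1))  # b'RETREAT TO THE BRIDGE!'
```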

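Examples include the predictable random-number seeding in early Netscape SSL implementations, discovered by Ian Goldberg and David Wagner at UC Berkeley, and the problems in Microsoft's implementation of the PPTP protocol found by Bruce Schneier (with Mudge).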
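The Netscape problem belongs to a broader class: session keys generated from a predictable seed, such as the time of day and process IDs. The Python sketch below is not Netscape's code. It assumes a hypothetical server that seeds Python's non-cryptographic `random.Random` with a Unix timestamp and derives a 16-byte key from it. It also assumes an Opponent who has captured an HMAC tag over a predictable message. Under those assumptions, trying every second in a two-hour window is enough to recover the key.

```python
import hashlib
import hmac
import random


def weak_session_key(seed: int) -> bytes:
    """The flaw being illustrated: a non-cryptographic PRNG with a guessable seed.
    (A sound version would simply call secrets.token_bytes(16).)"""
    rng = random.Random(seed)
    return bytes(rng.getrandbits(8) for _ in range(16))


def mac(key: bytes, message: bytes) -> bytes:
    return hmac.new(key, message, hashlib.sha256).digest()


# Hypothetical victim: seeds with the Unix time, in seconds, at which the key was made.
victim_time = 1_700_000_123
victim_key = weak_session_key(victim_time)
known_message = b"GET / HTTP/1.0"              # something the Opponent can predict
captured_tag = mac(victim_key, known_message)  # something the Opponent can observe


def brute_force(start: int, end: int):
    """Opponent: try every seed in a plausible time window against the captured tag."""
    for seed in range(start, end + 1):
        candidate = weak_session_key(seed)
        if hmac.compare_digest(mac(candidate, known_message), captured_tag):
            return seed, candidate
    return None


found = brute_force(victim_time - 3600, victim_time + 3600)  # a two-hour window
# True: a 128-bit key, but only about 13 bits of uncertainty in how it was made.
print(found is not None and found[1] == victim_key)
```

The key length is irrelevant here. What matters is how many seeds an Opponent must try, and nothing in the key's normal use reveals that number.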

1.3. Invisibility of Most Failure Modes

Success in cryptographic engineering is hard to judge at best. Not crashing is a prominent sine qua non in aircraft design. Denying the Opposition access (to protected message traffic, for instance) is the design goal in cryptography, but it is far less obvious whether this goal has been achieved than in other engineering. Essentially no Opponents will ever make their access to message content public, so neither the designers nor the implementors nor the users of crypto systems will ever learn from them that a design is insecure. It is certainly irrational to count on Opponents as a quality-control resource.

One tempting measure of security is 'I can't figure out how to break it, so I will assume Opponents will not be able to do so either'. This may be true, but there is no way to actually know your Opponents have the same limitations you do. In a modern environment, in which messages travel over public networks, it is not even possible to detect eavesdropping, much less to prevent it. Accordingly, most message traffic must be presumed to be entirely in an Opponent's possession.

Known cryptographic failures fall into several classes; future failures may fall into these classes or into new ones. Examples include:

Design errors:

- cryptographic protocol errors

- user operational procedure errors

- algorithm implementation errors

- associated system failures

User errors:

- misunderstanding of correct operations

- arbitrary user actions

Implementation errors:

- programming errors (bugs)

- precision arithmetic errors

- random data errors

- software library routine errors

Environment errors:

- operating system insecurities with effects on cryptographic software (e.g., keys retained in swap file data)

- operating system insecurities with regard to plaintext access

- operating system vulnerabilities (viruses, Trojan horses, etc.)

The effects of most of these will not be apparent to end users, generally not to the computer system's administrators, and often not even to the cryptographic system's designers. For instance, a buffer overflow vulnerability in a required operating system component may be absent from version 5.1 (used during crypto system testing) and appear only in version 5.3, released after the crypto system shipped. Or a vulnerability present in version 5.1 may have been removed in all later releases, while a particular installation of the cryptographic system is still running on version 5.1.

The invisibility of many such errors makes finding and removing them more difficult than in many other kinds of engineering.
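One of the "programming errors" listed above illustrates this invisibility well. In the Python sketch below, `naive_equal` and the test cases are hypothetical. It gives exactly the same answers as the standard library's `hmac.compare_digest`, so any functional test suite passes. However, it stops at the first differing byte. Its running time can therefore tell an Opponent how much of a forged MAC tag is already correct. Demonstrating the timing leak itself would need careful measurement and is beyond a short sketch.

```python
import hmac


def naive_equal(a: bytes, b: bytes) -> bool:
    """Functionally correct, but returns as soon as a byte differs, so its running
    time depends on how many leading bytes of a guess were right."""
    if len(a) != len(b):
        return False
    for x, y in zip(a, b):
        if x != y:
            return False
    return True


def safe_equal(a: bytes, b: bytes) -> bool:
    """Standard-library comparison designed to avoid content-based short-circuiting."""
    return hmac.compare_digest(a, b)


# A functional test suite cannot tell the two apart: both give identical answers.
cases = [(b"", b""), (b"abc", b"abc"), (b"abc", b"abd"), (b"abc", b"ab"), (b"xbc", b"abc")]
for a, b in cases:
    assert naive_equal(a, b) == safe_equal(a, b)
```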

References

- "Surreptitiously Weakening Cryptographic Systems". https://eprint.iacr.org/2015/097.pdf. Retrieved 1 August 2017.