Subjects:

Archaeology

The utilization of 3D digital technologies in the realm of cultural heritage is found to provide substantial support in the formulation of strategies aimed at mitigating the deterioration and loss of original materials. Their adoption is determined not only by their efficacy in facilitating the work of conservators while adhering to the principles of compatibility, reversibility, and non-invasiveness but also by the potential for preserving digital models and promoting dissemination in the scientific community.

- cultural heritage

- 3D digitization

- photogrammetry

1. 3D Digitalization Process

The main techniques for digitizing artifacts belonging to archaeological museum collections are close-range photogrammetry and short-range scanning. In recent years, the use of the former has become widespread because it is a relatively inexpensive method of digitization [21] and also allows for obtaining a high-quality color texture of the object represented. 3D scanners, on the other hand, are a less widespread solution, mainly due to their cost [22].

However, the correct virtualization of 3D models calls for technical skills that the people in charge of these tasks do not always possess. The simplification of digitization processes in recent decades has opened these technologies to many professionals in cultural heritage conservation who lack extensive knowledge of 3D modeling, so they tend to publish the model obtained by scanning or photogrammetry directly, without any post-processing to optimize the asset for publication. Adapting a digitized model to the medium in which it will be published is relatively complex. First, in most cases the number of polygons in the mesh must be drastically reduced, since both 3D scanning and photogrammetry now record even the smallest details of a figure with high precision and therefore produce very dense meshes. Polygon reduction is essential to ensure smooth real-time performance of the 3D model within the constraints of network data transmission speeds. Through this process, a high-poly model is transformed into a low-poly model. Low-poly models are optimized for real-time applications such as 3D object viewers, virtual reality, and augmented reality, where rendering speed and performance are critical: the reduced polygon count allows for faster rendering and smoother movement. In contrast, high-poly models contain more polygons and produce more realistic and detailed representations, at the cost of more computational resources and longer rendering times.
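As a rough illustration of polygon reduction, the sketch below uses vertex clustering, one of the simplest decimation strategies: vertices that fall into the same cell of a regular grid are merged, and triangles that collapse are discarded. This is not the algorithm of any particular scanning or photogrammetry package; the function name and the `cell_size` parameter are assumptions made for the example.

```python
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]
Triangle = Tuple[int, int, int]


def decimate_by_clustering(
    vertices: List[Vertex], triangles: List[Triangle], cell_size: float
) -> Tuple[List[Vertex], List[Triangle]]:
    """Merge vertices sharing a grid cell and drop degenerate triangles."""
    cell_to_index: Dict[Tuple[int, int, int], int] = {}
    sums: List[List[float]] = []  # running coordinate sums per cluster
    counts: List[int] = []        # number of vertices merged per cluster
    remap: List[int] = []         # old vertex index -> cluster index

    for x, y, z in vertices:
        cell = (int(x // cell_size), int(y // cell_size), int(z // cell_size))
        if cell not in cell_to_index:
            cell_to_index[cell] = len(sums)
            sums.append([0.0, 0.0, 0.0])
            counts.append(0)
        idx = cell_to_index[cell]
        sums[idx][0] += x
        sums[idx][1] += y
        sums[idx][2] += z
        counts[idx] += 1
        remap.append(idx)

    # Each cluster is represented by the average of its merged vertices.
    new_vertices = [(s[0] / c, s[1] / c, s[2] / c) for s, c in zip(sums, counts)]

    new_triangles: List[Triangle] = []
    seen = set()
    for a, b, c in triangles:
        tri = (remap[a], remap[b], remap[c])
        if len(set(tri)) < 3:
            continue  # two or more corners merged: the triangle collapsed
        key = tuple(sorted(tri))
        if key in seen:
            continue  # skip duplicates created by merging
        seen.add(key)
        new_triangles.append(tri)
    return new_vertices, new_triangles
```

A larger `cell_size` gives a lighter mesh but removes more surface detail, which is the trade-off that normal maps are later used to compensate for.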

In many cases, the resolution of the 3D model can be reduced with the software supplied with the scanner or with the photogrammetry software; however, these programs usually only reduce the number of triangles of the figure and rarely offer the possibility of converting them into quads, i.e., four-sided polygons, or of arranging the flow of polygons in a more logical way. The correct organization of the faces of the mesh, known as retopology, is important because a good distribution of the polygons maintains the shape of the figure with a smaller number of faces and also facilitates UV mapping operations. UV mapping is the process by which each polygon of the mesh is matched to a certain area of the image containing the color of the 3D model to be displayed on its surface. This process is essential for the colors of the virtualized object to be displayed correctly, and it can be carried out automatically or manually. Usually, the programs used to process the information recorded by the 3D scanner or by the camera in the photogrammetry process perform automatic mapping, which results in texture images without an order that a human can readily identify and can make manual editing difficult.
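The correspondence that UV mapping establishes can be sketched in a few lines: each triangle corner carries a (u, v) coordinate in the texture image, a point inside the triangle gets an interpolated (u, v), and that coordinate selects a pixel. The function names, the nearest-neighbour lookup, and the convention that v = 0 is the bottom row of the image are assumptions for this example; real viewers typically filter between pixels.

```python
from typing import List, Sequence, Tuple

Color = Tuple[int, int, int]
UV = Tuple[float, float]


def interpolate_uv(uv_a: UV, uv_b: UV, uv_c: UV, bary: Sequence[float]) -> UV:
    """Blend the three corner UVs with barycentric weights (summing to 1)."""
    wa, wb, wc = bary
    u = wa * uv_a[0] + wb * uv_b[0] + wc * uv_c[0]
    v = wa * uv_a[1] + wb * uv_b[1] + wc * uv_c[1]
    return u, v


def sample_texture(texture: List[List[Color]], u: float, v: float) -> Color:
    """Nearest-neighbour lookup; texture[0] is the top row of the image."""
    height, width = len(texture), len(texture[0])
    u = min(max(u, 0.0), 1.0)
    v = min(max(v, 0.0), 1.0)
    col = min(int(u * width), width - 1)
    row = min(int((1.0 - v) * height), height - 1)  # flip: v grows upwards
    return texture[row][col]
```

A clean, human-readable UV layout does not change this lookup; it only makes the texture image easier to edit by hand.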

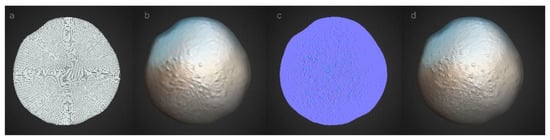

As we have seen, polygon reduction allows us to obtain a mesh that can be quickly transferred from the web, where it is published, to the device on which it is to be reproduced. However, the drastic reduction in the number of polygons of a mesh inexorably results in the loss of surface details, which is not recommended for publication. Fortunately, there is a method for ensuring that the reduced polygon count model retains the original appearance of the high-resolution model. The usual way to achieve this is to use a special texture image known as a normal map. This is an image, independent of the one used to color the virtual model, which is assigned to a 3D model so that it displays volume reliefs on screen that it does not actually have. To generate this image, the 3D modeling software calculates, at each point, the difference in volume between the low-resolution mesh and the high-resolution mesh, converting this information into chromatic values, cyan, magenta or green, depending on the orientation of the surface in that particular area. These values are stored in the normal map, in which a correspondence between each pixel of the image and a certain region of the polygonal mesh has been previously established through the mapping process. Once this image is created, it can be assigned to the low-resolution model, which will then be displayed on the screen with an appearance very similar to that of the high-resolution model (Figure 1). In addition, the mesh can be easily transferred to other programs or to an online platform for sharing 3D models because its file size will be very small.

Figure 1. Use of normal maps for the representation of fine reliefs in a 3D model: (a) low-resolution polygon mesh in wireframe mode; (b) low-resolution polygon mesh in solid mode without a normal map; (c) model showing the normal map; (d) low-resolution polygon mesh in solid mode with a normal map applied.
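To make the idea of storing surface orientation as color concrete, the sketch below uses one widely used convention, assumed here rather than taken from the text: the x, y, and z components of a unit normal, each in [-1, 1], are remapped to the red, green, and blue channels of an 8-bit pixel. The function names are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
RGB = Tuple[int, int, int]


def encode_normal(normal: Vec3) -> RGB:
    """Map a surface normal to an 8-bit RGB pixel (assumed convention)."""
    length = math.sqrt(sum(c * c for c in normal))
    unit = tuple(c / length for c in normal)
    # [-1, 1] -> [0, 255], rounding to the nearest integer
    r, g, b = (int((c * 0.5 + 0.5) * 255.0 + 0.5) for c in unit)
    return r, g, b


def decode_normal(pixel: RGB) -> Vec3:
    """Recover an approximate unit normal from an encoded pixel."""
    x, y, z = (c / 255.0 * 2.0 - 1.0 for c in pixel)
    length = math.sqrt(x * x + y * y + z * z)
    return x / length, y / length, z / length
```

Under this convention a surface that points straight out of the mesh encodes to a light blue-violet pixel, and tilted areas shift towards other hues, which is why such maps look tinted rather than gray.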

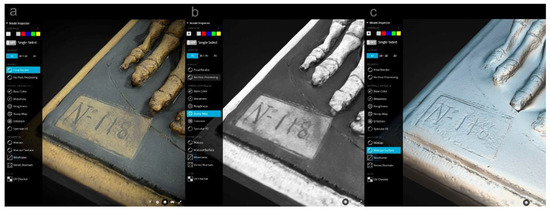

A similar system, although somewhat simpler and currently less employed, is the use of bump maps. The operation is similar, although images store the information with the difference in volume at each point between the two meshes only in grayscale, so they are less accurate, as they do not consider the orientation of the surface in each area. This method also has the disadvantage that it creates the sensation of volume depending on the tonal value of the object at each point, which leads to misinterpretation of the volume when the represented area has color. Thus, areas painted in black are represented as depressed areas, and light-colored ones will appear excessively elevated (Figure 2).

Figure 2. Problems due to the use of bump maps based on the color of the object: (a) final model in Sketchfab.com; (b) bump map; and (c) model without color texture. The label number looks sunken due to its dark color, but in the original model, it is flat.
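The misinterpretation described above can be reproduced with a toy example in which the bump height is taken directly from the brightness of the color texture. The Rec. 709 luminance weights are standard; the function names and the `strength` parameter are assumptions, and actual viewers may derive bump maps differently.

```python
from typing import List, Tuple

Color = Tuple[int, int, int]


def luminance(rgb: Color) -> float:
    """Rec. 709 relative luminance of an 8-bit color, scaled to [0, 1]."""
    r, g, b = rgb
    return (0.2126 * r + 0.7152 * g + 0.0722 * b) / 255.0


def bump_heights_from_color(
    texture: List[List[Color]], strength: float = 1.0
) -> List[List[float]]:
    """Treat brightness as height: dark pixels sink, light pixels rise."""
    return [[strength * luminance(pixel) for pixel in row] for row in texture]


# A flat, light surface with a dark painted label in the middle pixel.
flat_object = [[(230, 220, 200), (20, 20, 20), (230, 220, 200)]]
heights = bump_heights_from_color(flat_object)
```

Here `heights[0][1]` is much lower than its neighbours even though the real surface is flat, which is the sunken-label effect shown in Figure 2.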

Another task that must be performed for the correct visualization of the virtualized object in a 3D model viewer is the configuration of the materials. These so-called “shaders” are responsible for rendering the visual appearance of objects, reflecting the characteristics of the materials that compose them. Some of the most frequently considered properties when configuring materials are the following: the color, the amount of light reflected by the surface, the surface roughness, the metallic character, the transparency and translucency (subsurface scattering), and the ambient occlusion. Each of these characteristics is controlled by a section of the material called a channel. While some 3D models are composed of a single material that is simple to represent, others have a more complex composition and require more sophisticated tuning to faithfully represent the appearance of the real object. Often, it is necessary to create specific texture images, also called maps, which allow for different values of a certain feature to be assigned to each of the regions of the 3D object. Thus, specular maps are used to determine which area of the mesh will reflect more light and which will reflect less, depending on whether its pixels have lighter or darker values, respectively. Similarly, roughness maps indicate on a gray scale which areas of the mesh will appear glossier. In these maps, darker areas represent smoother regions that produce more concentrated highlights, and lighter areas indicate rougher regions and therefore more diffuse highlights. There are also maps to indicate which parts of the model are made of a metallic material or which are more transparent. In transparency maps, the darkest regions are those most easily penetrated by light, and the lightest are the most opaque; in metal maps, the whiter areas are more metallic, and the black ones have a less metallic character.

Another commonly employed image texture in computer graphics is the ambient occlusion map. This texture map encodes the soft shadows and shading that occur in crevices and corners of 3D objects, mimicking the behavior of light hitting surfaces close to each other and giving the object a more realistic and visually appealing appearance. On the other hand, transparent materials are usually represented by transmission maps, which indicate which area is opaque and which allows light to pass through the object. Finally, for objects composed of translucent materials, the subsurface scattering channel can be used, which reproduces the phenomenon known as translucence in the narrower areas of the model. This characteristic implies that the light incident on the object is scattered inside it, and when leaving, it shows a characteristic color depending on the material composition of the object. This phenomenon is typical of materials such as marble, wax, porcelain, and some plastics.

Likewise, the normal maps already mentioned are assigned to the material. These maps contain the volume details that the low-poly mesh lacks.

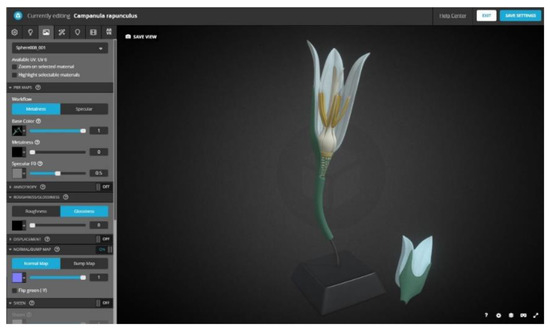

All the materials used in the model must be configured in the program or application in which the model will be reproduced. In each material channel, the appropriate intensity value must be set for the entire mesh if a global adjustment is desired, or a texture map is assigned if a different value is required for each area of the mesh (Figure 3).

Figure 3. Configuration of model materials on the Sketchfab.com website.
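The choice between a global value and a texture map for each channel can be modeled with a small data structure. The sketch below is purely illustrative: the class name, field names, and lookup convention are assumptions and do not reflect how Sketchfab or any other viewer stores materials internally.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class MaterialChannel:
    """One material channel: a global value, or a grayscale map in [0, 1]."""

    value: float = 0.0
    texture: Optional[List[List[float]]] = None

    def at(self, u: float, v: float) -> float:
        """Return the channel value seen at texture coordinate (u, v)."""
        if self.texture is None:
            return self.value  # global adjustment: same value everywhere
        height, width = len(self.texture), len(self.texture[0])
        u = min(max(u, 0.0), 1.0)
        v = min(max(v, 0.0), 1.0)
        col = min(int(u * width), width - 1)
        row = min(int((1.0 - v) * height), height - 1)
        return self.texture[row][col]


# Hypothetical material: uniform roughness, metalness varying across the mesh.
roughness = MaterialChannel(value=0.4)
metalness = MaterialChannel(texture=[[0.0, 1.0]])  # left half dull, right half metallic
```

With this structure, `roughness.at(u, v)` returns 0.4 for every point, while `metalness.at(u, v)` depends on where the point falls in the map.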

As this material configuration needs some technical knowledge of 3D modeling, it is not always correct in the models that are published on the Internet, so the final result is often not fully satisfactory.

In addition to model optimization and material configuration, the most popular online 3D model viewers allow for the customization of a large number of scene properties. Thus, it is possible to choose the spatial position, focal length, type of background, or scene illumination and apply a wide variety of filters to improve the appearance of the virtualized object. Among the most important, we can highlight the focus filter to increase the sharpness of the image, the chroma, brightness, and saturation adjustments to globally modify the color of the scene, or the ambient occlusion filter to darken the hollow areas of the model without the need to use a specific ambient occlusion map. Also widely used in this type of 3D model viewer are the depth-of-field filters for determining the amount of blur to be produced depending on the distance to the focus point or the vignetting filter, used to create a fading area towards the periphery of the scene, and also, although to a lesser extent, the bloom filter, for giving a brighter appearance to objects.
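As an example of how simple some of these scene filters are in principle, a vignetting effect can be approximated by darkening each pixel according to its distance from the image centre. The quadratic falloff and the `amount` parameter are assumptions for this sketch; the formulas used by actual viewers are not specified in the text.

```python
import math


def vignette_factor(
    x: int, y: int, width: int, height: int, amount: float = 0.5
) -> float:
    """Brightness multiplier: 1.0 at the centre, 1 - amount at the corners."""
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    max_dist = math.hypot(cx, cy)
    if max_dist == 0.0:
        return 1.0  # single-pixel image: nothing to fade
    dist = math.hypot(x - cx, y - cy) / max_dist  # 0 at centre, 1 at corners
    return 1.0 - amount * dist * dist
```

Multiplying each rendered pixel by this factor produces the fading towards the periphery described above.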

In short, multiple filters and effects can be used to represent artifacts more faithfully in 3D model viewers, but they require knowledge of material configuration that professionals in the conservation and restoration of cultural heritage usually do not have.

Another interesting technique for generating graphic documents with a three-dimensional appearance from cultural heritage artifacts is known as Reflectance Transformation Imaging (RTI), which provides a highly accurate image in which it is possible to modify the lighting interactively by moving the cursor on the screen to reveal reliefs that are not visible to the naked eye. The capture process consists of taking multiple photographs with a DSLR camera in a fixed position while varying the orientation of the light source between shots. Subsequently, the photographs are processed in specific software to obtain a file that can be visualized using an RTI viewer [23].
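The interactive relighting can be illustrated with polynomial texture maps (PTM), one common RTI representation; whether the software referred to above uses this form is not stated, so this is an assumption. In a PTM, each pixel stores six coefficients fitted from the photographs, and its brightness for a light direction with projected components (lu, lv) is a biquadratic polynomial. The function name is hypothetical.

```python
from typing import Sequence


def ptm_luminance(coeffs: Sequence[float], lu: float, lv: float) -> float:
    """Evaluate a per-pixel PTM biquadratic for light direction (lu, lv).

    coeffs holds (a0, ..., a5) for one pixel; the result is clamped to [0, 1].
    """
    a0, a1, a2, a3, a4, a5 = coeffs
    value = a0 * lu * lu + a1 * lv * lv + a2 * lu * lv + a3 * lu + a4 * lv + a5
    return max(0.0, min(1.0, value))


# Hypothetical pixel on a slope facing +x: brighter when lit from the right.
pixel = (0.0, 0.0, 0.0, 1.0, 0.0, 0.5)
lit_from_right = ptm_luminance(pixel, 0.5, 0.0)
lit_from_left = ptm_luminance(pixel, -0.5, 0.0)
```

Moving the cursor in an RTI viewer amounts to changing (lu, lv) and re-evaluating this expression for every pixel, which is why shallow reliefs appear and disappear as the virtual light moves.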

2. Evaluation Methods for the Optimization of 3D Models

Guidelines related to 3D models in the field of cultural heritage are essential for ensuring the responsible and effective use of digital technologies. These guidelines emphasize the accuracy, authenticity, standardization, documentation, and long-term preservation of digital data, including 3D models. In this regard, the 3D reconstruction and digitization of cultural heritage objects must comply with the London Charter and the Seville Principles [24]. One of the fundamental objectives of the London Charter is to “Promote intellectual and technical rigor in digital heritage visualizations”. However, this document also states that both the 3D reconstruction and the configuration of the generated three-dimensional models for visualization are processes in which interpretative or creative decisions are usually made. For this reason, these processes must be properly documented so that the relationship between the virtual models and the artifacts studied, as well as with the documentary sources and the inferences drawn from them, can be correctly interpreted [25]. In the same way, Principle 4 of the Seville Principles addresses authenticity, indicating that when reconstructing or recreating artifacts from the past, it should always be possible to know what is real and what is not [26]. It is also very important to develop methods for identifying degrees of uncertainty in 3D models, such as the use of icons, graphic styles, or metadata [27].

Historic England [28] and Cultural Heritage Imaging [29] also provide guidelines that align closely with the Seville Principles in the field of cultural heritage preservation. They emphasize the importance of repair and maintenance techniques that respect the authenticity and integrity of historic buildings and archaeological sites and the use of advanced technologies to document and digitally preserve cultural heritage artifacts and sites. Together, these frameworks provide a comprehensive approach to safeguarding and promoting cultural heritage in a manner that respects its authenticity, integrity, and social significance.

To ensure that the optimized 3D models are displayed correctly and without distortions that degrade the user experience, different systems have been developed to analyze the quality of the modified models. The most important are mesh quality assessment (MQA) metrics, based on the analysis of the polygonal mesh, and point cloud quality assessment (PCQA) metrics, focused on the distribution of the points recorded by the capture device, whether a camera or a scanner. Many of these methods derive from analysis techniques designed for 2D images and videos, although the complexity of 3D models makes the task considerably harder. Subjective quality assessment relies on the judgment of human observers, while objective quality metrics use automated calculation methods to determine the quality of models. Quality assessment is further classified according to whether a reference, that is, the unedited model, is used to measure the degree of distortion of the edited model. Currently, subjective analyses are mostly performed by means of full-reference quality assessment, which compares distorted models with undistorted reference models and only analyzes data related to the mesh geometry.

Some authors have proposed different methods for evaluating the quality of 3D models based on the comparison of 3D meshes (model-based metrics), generally using a high-quality reference model and another model distorted or subjected to polygon reduction [30,31]. Other systems are based on the comparison of render images obtained from reference models with those obtained from modified models (image-based metrics) [32,33,34,35], and comparisons between such evaluation methods have also been established [36,37]. However, these analysis systems were designed to compare altered meshes with the original unaltered ones, so they are not valid for establishing the quality of models published on the Internet, where there is usually no reference mesh to compare them with.
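To show what a model-based, full-reference comparison looks like in its simplest form, the sketch below computes the Hausdorff distance between the vertex sets of a reference mesh and a reduced mesh. It is a classic geometric measure chosen here for illustration, not necessarily the metric used in the cited works, and the brute-force search is only practical for small meshes.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def directed_hausdorff(source: Sequence[Point], target: Sequence[Point]) -> float:
    """Largest distance from any source point to its nearest target point."""
    return max(min(math.dist(p, q) for q in target) for p in source)


def hausdorff(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric Hausdorff distance between two point sets."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


# Hypothetical reference vertices and a slightly distorted copy.
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
distorted = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.5)]
deviation = hausdorff(reference, distorted)
```

The need for the `reference` argument is exactly the limitation noted above: without the unaltered mesh, a measure of this kind cannot be computed.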

Recent advances in machine learning have facilitated the design of different learning-based no-reference quality assessment metrics oriented fundamentally to the analysis of the geometric characteristics of 3D models [38,39,40]. Recently, a new no-reference quality assessment metric has been published that extracts geometry and color features from point clouds and 3D meshes based on natural scene statistics, an area of perception based on the fact that the human perceptual system is fundamentally adapted to the interpretation of the natural environment [41].

However, automated methods are difficult to apply in the analysis of models of cultural heritage artifacts published on Internet platforms. First, this difficulty is due to the fact that the systems designed at the moment to evaluate the quality of 3D meshes are rather complex to apply in practice and currently do not allow for evaluating a large sample of models in an acceptable time. Second, the methods of the representation of shape and color vary from one model to another and complicate the possible comparison between them. Finally, when evaluating virtual models published on Internet platforms, it is usually not possible to download the high-resolution model, and only the optimized model is available, so it is not possible to establish an evaluation based on the fidelity to a reference object for each of the meshes analyzed. Therefore, given the impossibility of having a high-quality reference model of each of the artifacts represented, it is necessary to evaluate the quality of the model solely from the data available in the virtual model.

Since there is not yet an automated method for analyzing the quality of optimized 3D models for which a high-resolution reference model is not available, this work has opted for a visual analysis performed by an expert in the 3D modeling and 3D digitization of cultural heritage. By the visual inspection of the colored mesh, and turning on and off the color of the 3D model, the details recorded in the photographic texture, such as cracks, pores, wrinkles, or small folds, were identified and compared with the existing reliefs in the mesh devoid of color located in the same place to see if there was an adequate correspondence between the two. This is not a comparison between models but rather a check that the details expected based on the color texture are present in the polygon mesh.

3. Cultural Heritage and 3D Model Visualization Platforms

Nowadays, many institutions have published part of their collections on the Internet, including the British Museum (London, UK), the Museo Arqueológico Nacional (Madrid, Spain), the Real Academia de Bellas Artes de San Fernando (Madrid, Spain), the Harvard Museum of the Ancient Near East (Cambridge, MA, USA), the Ministry of Culture (Lima, Peru), the Royal Museums of Art and History (Brussels, Belgium), the Museum of Origins of the Sapienza University of Rome (Rome, Italy), and the Statens Museum for Kunst—National Gallery of Denmark (Copenhagen, Denmark). In addition, many independent companies and individuals publish countless models of figures and objects belonging to other museums, such as the Louvre (Paris, France), the Prado Museum (Madrid, Spain), the Hermitage (St. Petersburg, Russia), and the Vatican Museums (Vatican City, Italy).

In this research, we analyze a large sample of objects belonging to different art museums from different countries, which have been published on Sketchfab.com, in order to check whether the models uploaded to this platform are correctly optimized and whether the configuration of materials and effects is adequate for their exhibition through the 3D model viewer. Sketchfab.com is currently the most widely used website for sharing this type of file and has more than 10 million members and more than 5 million 3D models. The platform offers numerous possibilities: models can be viewed on screen, on personal computers, cell phones, or tablets, as well as in virtual reality headsets or devices compatible with augmented reality. In addition, the options for configuring the 3D model are quite extensive, including numerous filters and effects.

Some papers have been published on the usefulness of online platforms for the dissemination of 3D models of artifacts belonging to cultural heritage. Such studies analyze the characteristics of these platforms and the availability of certain 3D model customization functions in these applications that allow for improving their authenticity [18,42]. Statham analyzed different 3D model viewers present on the Internet and compared their features in order to determine whether they contributed to improving the scientific rigor of the virtualized artifacts, concluding that some of them did improve the scientific quality of the models, although they were used infrequently. However, this author does not explain how she found these features to be underutilized and only discusses whether the platforms studied possess such customization features and how they contribute to improving model fidelity. For this reason, the objective of this study is to check the quality of 3D models of cultural heritage artifacts published on Sketchfab and the degree of use of the customization functions on this platform, to determine whether publishers are taking full advantage of the possibilities it offers to achieve a faithful representation of virtualized artifacts.

This entry is adapted from the peer-reviewed paper 10.3390/heritage6050206
