With the rapid proliferation of machine learning (ML) and data management, ML applications have spread across engineering disciplines. Because the world’s water supply will remain a critical concern throughout the rest of this century, much research has concentrated on applying ML strategies to integrated water resources management (WRM).

- classification

- climate change

- clustering

- machine learning (ML)

1. Introduction

In recent years, machine learning (ML) applications in water resources management (WRM) have garnered significant interest [1]. The advent of big data has substantially enhanced the ability of hydrologists to address existing challenges and encouraged novel applications of ML. The global data sphere is expected to reach 175 zettabytes by 2025 [2], and the availability of this large amount of data is opening a new era in the field of WRM. The next step for hydrological sciences is to determine how to integrate traditional physically based hydrology with new machine-aided techniques that draw information directly from big data. A wide range of decisions, from simple to complicated scientific problems, is now handled by various ML techniques. Because of the veracity, velocity, volume, and variety of big data, only machine-based methods can exploit it fully. Consequently, ML has attracted a great deal of attention from hydrologists and has been widely applied to a variety of fields because of its ability to handle complex environments.

In the coming decades, climate change, increasing constraints on water resources, population growth, and natural hazards will force hydrologists worldwide to adapt and develop strategies to maintain water security. The Intergovernmental Hydrological Programme (IHP) recently began its ninth phase (IHP-IX, 2022-2029), which places hydrologists, scholars, and policymakers on the frontlines of action to ensure a water-secure world despite climate change, with the goal of creating a new and sustainable water culture [3]. Meanwhile, the rapid growth in the availability of hydrologic data repositories, alongside advanced ML models, offers new opportunities for improved assessments in hydrology by simplifying the existing complexity. For instance, it is possible to move from traditional single-step prediction to multi-step-ahead prediction, from short-term to long-term prediction, from deterministic models to their probabilistic counterparts, from univariate to multivariate models, from structured data to volumetric and unstructured data, and from spatial to spatio-temporal and more advanced geo-spatiotemporal settings. ML models have also contributed to optimal decision-making in WRM by efficiently modeling the nonlinear, erratic, and stochastic behaviors of natural hydrological phenomena. Furthermore, ML techniques can dramatically reduce the computational cost of solving complicated models, which allows decision-makers to switch from physically based models to ML models for cumbersome problems. As a result, emerging hydrological crises, such as droughts and floods, can be investigated and mitigated more efficiently with the assistance of advances in ML algorithms.

2. Major Applications of ML in WRM

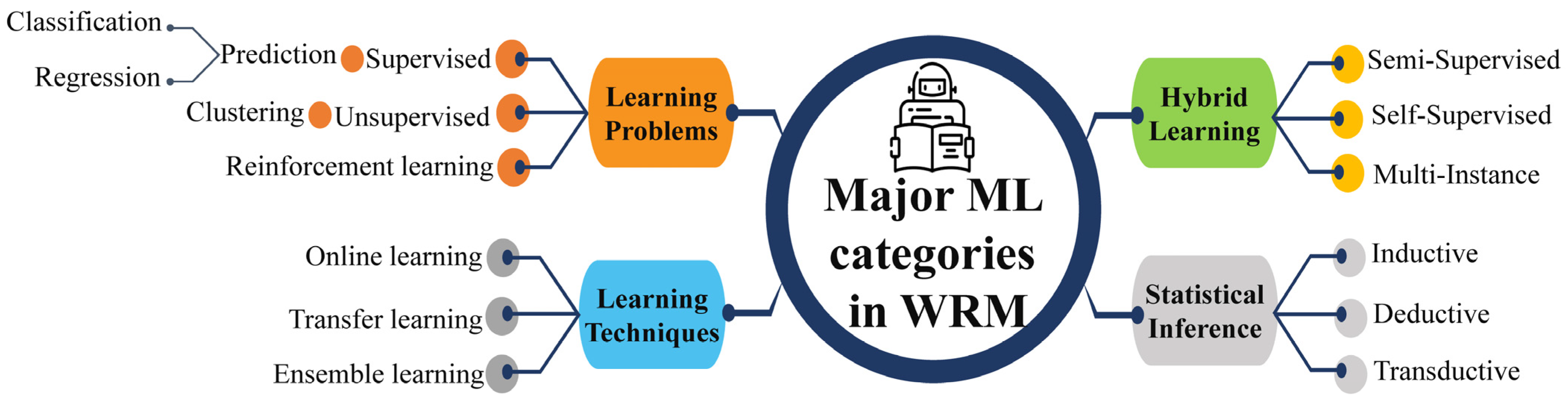

ML algorithms are typically categorized into three main groups: supervised learning, unsupervised learning, and reinforcement learning (RL) [4]. A comparison of these is summarized in Table 1. Supervised learning algorithms are trained on labeled datasets, in which both the input and output values are known beforehand, to classify data or predict an output. Unsupervised learning algorithms are trained on unlabeled datasets, typically for clustering; they discover hidden patterns or data groupings without the need for human intervention. RL is an area of ML concerned with how an intelligent agent should take actions in an environment to obtain the maximum cumulative reward. Both supervised learning and RL learn a mapping from inputs to outputs. In supervised learning the correct output is provided as a label, whereas in RL positive and negative behaviors are signaled through incentives and penalties, respectively, and the agent must discover the best strategy for completing a task. In short, a machine learns the behavior and characteristics of labeled datasets in supervised learning, detects patterns in unsupervised learning, and explores the environment without any prior training in RL. The category of ML should therefore be chosen according to the engineering application. The major ML learning types in WRM are summarized in Figure 1, where the first segment covers the core contents of the research reviewed in the following sections.

Figure 1. Four major types of machine learning.

Table 1. Comparison of supervised, unsupervised, and reinforcement learning algorithms.

| Learning Types | Type of Data | Training | Used for | Algorithms |
|---|---|---|---|---|
| Supervised Learning | Labeled data | Trained using labeled data (extra supervision) | Regression for nowcasting and forecasting; classification in binary and multiple classes | Linear regression, logistic regression, RF, SVM, KNN, RNN, DNN, etc. |
| Unsupervised Learning | Unlabeled data | Trained using unlabeled data without any guidance (no supervision) | Clustering | K-Means, C-Means, Agglomerative Hierarchical Clustering, DBSCAN, Gaussian Mixture Models, OPTICS, etc. |
| Reinforcement Learning | Without predefined data | Works based on the interaction between agent and environment (no supervision) | Decision making | Q-Learning, SARSA, DQN, double DQN, dueling DQN, etc. |

2.1. Prediction

The term “prediction” refers to any technique that uses data processing to estimate an outcome. A prediction is the output of an algorithm that was trained on a prior dataset and is then applied to new data to assess the likelihood of a certain result. Forecasting is the probabilistic version of predicting an event in the future; in this entry, the terms prediction and forecasting are used interchangeably. ML model predictions can provide accurate estimates of the potential outcomes of a situation based on past data. For each record in the new data, the algorithm generates probability values for an unknown variable, allowing the model builder to determine the most likely value. Prediction models can have either a parametric or non-parametric form; however, most WRM models are parametric. Model development consists of four phases: data processing, feature selection, hyperparameter tuning, and training. In the data processing phase, raw historical operation data are transformed to a normalized scale to increase the accuracy of the prediction model. The feature selection phase extracts the essential variables that influence the output, and these features are then used to train the model. The model’s hyperparameters are tuned to obtain the best model structure. Finally, the model’s weights are adjusted automatically during training to produce the final forecast model, which is of paramount importance for optimal control, performance evaluation, and other purposes.
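
The four development phases can be sketched end to end in a few lines of Python. This is only an illustration under stated assumptions, not the workflow of any cited study: the synthetic rainfall, temperature, and streamflow series, the min-max scaling, the correlation threshold of 0.2, the candidate learning rates, and the linear model are all hypothetical choices made to keep the example short.

```python
import random

def min_max_scale(column):
    """Data processing: map a raw column onto the [0, 1] range."""
    lo, hi = min(column), max(column)
    return [(v - lo) / (hi - lo) for v in column]

def pearson(x, y):
    """Pearson correlation between two equally long sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)

def predict(row, w, b):
    return sum(wj * xj for wj, xj in zip(w, row)) + b

def train_linear(X, y, lr, epochs=400):
    """Training: adjust weights and bias by batch gradient descent on the MSE."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        errors = [predict(row, w, b) - t for row, t in zip(X, y)]
        grad_w = [2 / n * sum(e * row[j] for e, row in zip(errors, X)) for j in range(d)]
        grad_b = 2 / n * sum(errors)
        w = [wj - lr * g for wj, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def mse(X, y, w, b):
    return sum((predict(row, w, b) - t) ** 2 for row, t in zip(X, y)) / len(y)

# Hypothetical synthetic record: streamflow driven by rain and temperature,
# plus one irrelevant "noise" variable that feature selection should discard.
rng = random.Random(0)
n = 300
raw = {
    "rain": [rng.uniform(0, 50) for _ in range(n)],
    "temp": [rng.uniform(5, 35) for _ in range(n)],
    "noise": [rng.uniform(0, 1) for _ in range(n)],
}
flow = [2.0 * r - 1.5 * t + rng.gauss(0, 2) for r, t in zip(raw["rain"], raw["temp"])]

# Phase 1, data processing: normalize inputs and target.
scaled = {name: min_max_scale(col) for name, col in raw.items()}
target = min_max_scale(flow)

# Phase 2, feature selection: keep variables correlated with the target.
selected = [name for name, col in scaled.items() if abs(pearson(col, target)) > 0.2]
X = [[scaled[name][i] for name in selected] for i in range(n)]

# Phase 3, hyperparameter tuning: pick the learning rate with the lowest validation error.
split = int(0.8 * n)
results = {}
for lr in (0.01, 0.05, 0.2):
    w, b = train_linear(X[:split], target[:split], lr)
    results[lr] = mse(X[split:], target[split:], w, b)
best_lr = min(results, key=results.get)

# Phase 4, training: fit the final model with the chosen hyperparameter.
weights, bias = train_linear(X, target, best_lr)
print(selected, best_lr, round(results[best_lr], 5))
```

For brevity, the scaling bounds are computed on the full sample; in practice they would normally be fitted on the training split only so that no information leaks from the validation data.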

2.2. Clustering

Clustering plays an important role in hydrology. The clustering of hydrological data provides rich insights into diverse concepts and relations that underlie the inherent heterogeneity and complexity of hydrological systems. Clustering is a form of unsupervised ML that identifies hidden patterns in data and groups items into sets whose members share the most similarities. The clustering procedure reveals similarities and differences among cluster members, and intra-cluster similarity is just as important as inter-cluster dissimilarity in cluster analysis. Clustering algorithms differ in the types of data patterns they detect and the distance measures they use. Clustering is distinct from classification: classification is a supervised procedure in which the machine is fed labeled historical data in order to learn the relationships between inputs and outputs, whereas in unsupervised learning, the machine is fed only input data and asked to discover the hidden patterns. In WRM, data are clustered to make models more manageable, decrease their dimensionality, or improve the efficiency of the learning process. Each of these applications, along with the pertinent literature, is discussed in this section.
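
As a minimal sketch of the idea, the following Python code runs k-means (one of the algorithms listed in Table 1) on six hypothetical catchments and then compares intra-cluster and inter-cluster distances. The data points, the two descriptors, and the hand-picked initial centroids are assumptions for illustration only.

```python
import math

def kmeans(points, initial_centroids, max_iter=100):
    """Lloyd's algorithm: alternate assignment and centroid update until labels settle."""
    centroids = list(initial_centroids)
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [
            min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
            for p in points
        ]
        if new_labels == labels:
            break
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for k in range(len(centroids)):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:  # keep the old centroid if a cluster becomes empty
                centroids[k] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return labels, centroids

# Hypothetical catchments described by two descriptors already scaled to [0, 1]
# (for example, normalized annual precipitation and runoff ratio).
catchments = [(0.10, 0.20), (0.15, 0.25), (0.20, 0.10),
              (0.80, 0.90), (0.85, 0.80), (0.90, 0.95)]

# Initial centroids are chosen by hand so that the run is reproducible.
labels, centroids = kmeans(catchments, [catchments[0], catchments[-1]])

# Intra-cluster similarity: mean distance of each point to its own centroid.
intra = sum(math.dist(p, centroids[lab]) for p, lab in zip(catchments, labels)) / len(catchments)
# Inter-cluster dissimilarity: distance between the two centroids.
inter = math.dist(centroids[0], centroids[1])
print(labels, round(intra, 3), round(inter, 3))
```

A well-separated grouping shows a small intra-cluster distance relative to the inter-cluster distance, which is the property the text describes.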

2.3. Reinforcement Learning

This section introduces RL and its fundamental concepts and algorithms. After years of being ignored, this subfield of ML has recently gained much attention as a result of Google DeepMind’s successful application of RL to playing Atari games in 2013 (and, later, to playing Go at the highest level) [5]. In its modern form, this subfield of ML is regarded as a crowning achievement of deep learning (DL). RL deals with how to learn control strategies for interacting with a complex environment. In other words, RL is a framework for learning how to interact with the environment from experience (by trial and error). Currently, RL is a cutting-edge research topic in modern artificial intelligence (AI), and its popularity is growing in all scientific fields. It is all about taking appropriate actions to maximize reward in a particular environment. In supervised learning, the answer key is included in the training data through labels, and the model is trained with the correct answer itself. RL has no such answer key; instead, the autonomous agent determines what to do in order to accomplish the given task. In other words, unlike supervised learning, where the model is trained on a fixed dataset, RL works in a dynamic environment and explores and interacts with that environment in different ways to learn how best to accomplish its tasks without any form of human supervision [6][7]. The Markov decision process (MDP), a framework for modeling sequential decision-making problems, together with dynamic programming (DP) as its solution methodology, serves as the mathematical basis for RL [8]. RL extends mainly to conditions with known and unknown MDP models. The former refers to model-based RL, and the latter refers to model-free RL.
Value-based RL, including Monte Carlo (MC) and temporal difference (TD) methods, and policy-search-based RL, including stochastic and deterministic policy gradient methods, fall into the category of model-free RL. State–action–reward–state–action (SARSA) and Q-learning are two well-known TD-based RL algorithms that are widely employed in RL-related research, with the former employing an on-policy method and the latter employing an off-policy method [9][10].
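
The on-policy and off-policy distinction shows up in a single line of the TD update. The sketch below uses the standard textbook forms of the two targets; the small value table, reward, discount factor, and learning rate are hypothetical numbers chosen for illustration.

```python
def sarsa_target(q, reward, next_state, next_action, gamma):
    """On-policy target: bootstrap from the action the agent will actually take next."""
    return reward + gamma * q[next_state][next_action]

def q_learning_target(q, reward, next_state, gamma):
    """Off-policy target: bootstrap from the highest-valued next action."""
    return reward + gamma * max(q[next_state])

def td_update(q, state, action, target, alpha):
    """Move Q(state, action) a fraction alpha toward the TD target."""
    q[state][action] += alpha * (target - q[state][action])

# Hypothetical value table: q[state][action] for two states and two actions.
q = [[0.0, 0.0], [1.0, 3.0]]
reward, gamma, alpha = 1.0, 0.9, 0.5

# Suppose the behaviour policy explores and will take action 0 in next state 1.
sarsa = sarsa_target(q, reward, next_state=1, next_action=0, gamma=gamma)
qlearn = q_learning_target(q, reward, next_state=1, gamma=gamma)
print(sarsa, qlearn)

# Apply the Q-learning target to the pair (state 0, action 1).
td_update(q, 0, 1, qlearn, alpha)
print(q[0][1])
```

SARSA evaluates the exploratory action the agent is about to take, so its target is lower here, whereas Q-learning evaluates the greedy action regardless of what the agent does next.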

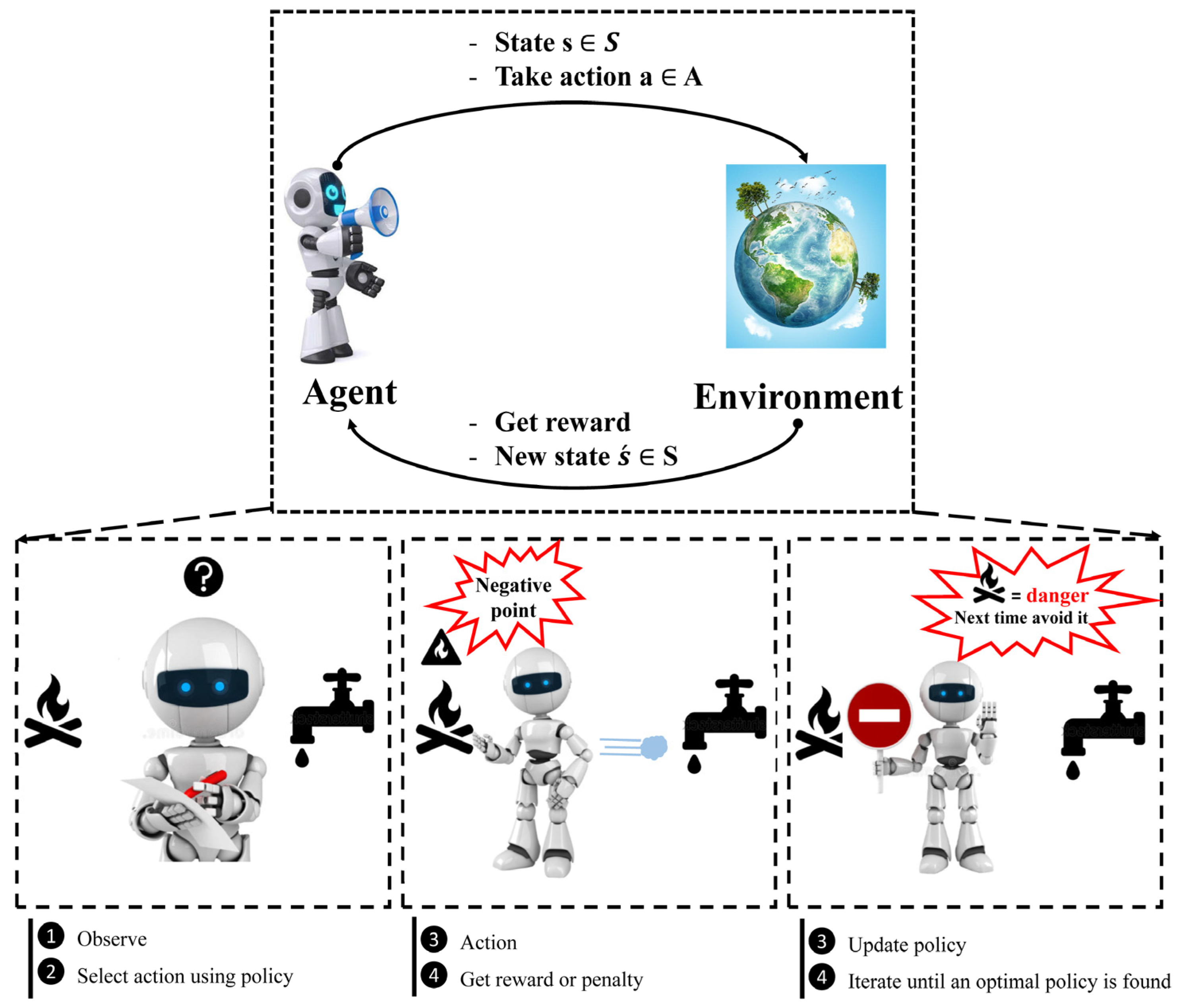

Q-learning is one of the most popular RL techniques for training agents to act optimally in a controlled Markovian domain [11]. It is an off-policy method: the agent learns the value of the optimal policy regardless of the policy it follows while exploring. To comprehend RL, it is necessary to understand the five components of the MDP framework shown in Figure 2: agents, environments, states, actions, and rewards. An autonomous agent takes actions, each chosen from the set of all possible moves the agent can make [12]. The environment is the world through which the agent moves and which responds to the agent. It takes the agent’s present state and action as inputs and returns the agent’s reward and next state as outputs. A state is the agent’s concrete and immediate situation. The success or failure of an action in a given state is measured through feedback in the form of a reward. Another term in RL is policy, which refers to the agent’s strategy for determining the next action based on the current state. The policy could be a neural network that receives observations as inputs and outputs the appropriate action to take, but it can be any algorithm and does not have to be deterministic. Owing to the inherent dynamic interactions and complex behaviors of the natural phenomena dealt with in WRM, RL is a promising approach to a wide range of tasks in the field of hydrology. Many real-world WRM challenges can potentially be handled by RL for efficient decision-making, design, and operation, as elucidated in this section.
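
The interaction between agent, environment, state, action, reward, and policy can be illustrated with tabular Q-learning on a toy reservoir. Everything about this environment is an assumption made for the sketch: the five storage levels, the constant inflow and demand of one unit, the release options, and the penalty of 0.5 for an empty or full reservoir are hypothetical and not taken from any cited study.

```python
import random

CAPACITY, INFLOW, DEMAND = 4, 1, 1   # hypothetical units of water per time step
ACTIONS = (0, 1, 2)                  # release requested by the agent

def step(storage, release):
    """Environment: take the current state and action, return (next_state, reward)."""
    available = storage + INFLOW
    actual = min(release, available)                  # cannot release more than is stored
    next_storage = min(CAPACITY, available - actual)  # excess water spills
    reward = -abs(actual - DEMAND)                    # supply should match demand
    if next_storage in (0, CAPACITY):                 # empty or full reservoir is risky
        reward -= 0.5
    return next_storage, reward

def greedy(q, state):
    """Deterministic policy: pick the action with the highest estimated value."""
    return max(ACTIONS, key=lambda a: q[state][a])

def train(episodes=1000, horizon=20, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(CAPACITY + 1)]
    for _ in range(episodes):
        state = rng.randrange(CAPACITY + 1)
        for _ in range(horizon):
            # Behaviour policy: epsilon-greedy, so it is stochastic, not deterministic.
            action = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(q, state)
            next_state, reward = step(state, action)
            # Q-learning (off-policy) update: bootstrap from the best next action.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q

q_table = train()
policy = [greedy(q_table, s) for s in range(CAPACITY + 1)]
print(policy)  # release chosen at storage levels 0..4
```

For this toy MDP, the long-term optimum is to withhold water when the reservoir is empty, meet demand at intermediate levels, and release extra when it is full, even though releasing extra costs more in the short term than simply meeting demand. This is the sense in which RL maximizes long-term rather than immediate reward.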

Figure 2. Concept of reinforcement learning (RL).

Water resources have always been vital to human society as sources of life and prosperity [13]. Owing to social development and uneven precipitation, water resources security has become a global issue, especially in many water-shortage countries where competing demands over water among its users are inevitable [14]. Complex and adaptive approaches are needed to allocate and use water resources properly. Allocating water resources properly is difficult because many different factors, including population, economic, environmental, ecologic, policy, and social factors, must be considered, all of which interact with and adapt to water resources and related socio-economic and environmental aspects.

RL can be utilized to model the behaviors of agents, simplify the process of modeling human behavior, and locate the optimal solution, particularly in an uncertain decision-making environment, to optimize the long-term reward. Simulating the actions of agents and the feedback corresponding to those actions from the environment is the aim of RL-based approaches. In other words, RL involves analyzing the mutually beneficial relationships that exist between the agents and the system, which is an essential requirement in an optimal water resources allocation and management scenario. Another challenging yet less investigated issue in WRM and allocation is shared resource management. Without simultaneously considering the complicated and challenging social, economic, and political aspects, along with the roles of all the beneficiaries and stakeholders, providing an applicable decision-making plan for water demand management is not possible, especially in countries located in arid regions suffering from water crises. Various frameworks have been proposed to analyze and model such a multi-level, complex, and dynamic environment. In the last two decades, complex adaptive systems (CASs) have received much attention because of their efficacy [15].

Agent-based modeling (ABM) is a popular simulation method for investigating the non-linear adaptive interactions inherent to a CAS [8]. ABM has been widely used for simulating human decisions when modeling complex natural and socioecological systems. Its application in WRM, however, is still relatively new [16], even though it can be used to define and simulate water resources systems in which individual actors are described as unique and autonomous entities that interact regionally with one another and with a shared environment, thus addressing the complexity of integrated WRM [17][18]. In RL-based water resources studies that address water allocation, from water infrastructure systems and ecological water consumers to municipal water supply and demand management, agents have been conceptualized to represent urban water end-users [19][20].

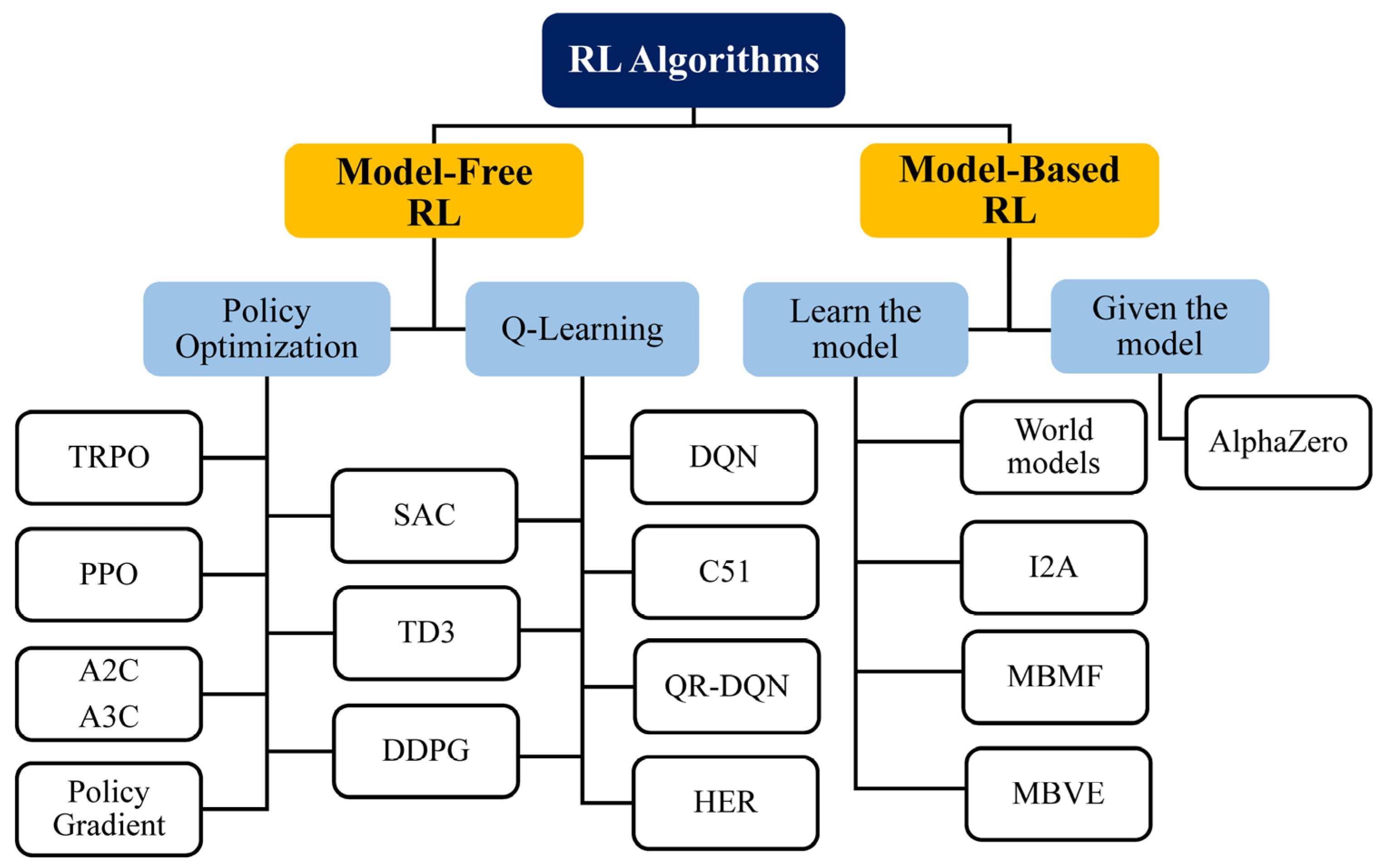

While RL has shown promise in self-driving cars, games, and robot applications, it has not yet received widespread attention in hydrology. However, RL is expected to be adopted in an increasingly wide range of real-world applications, especially to obtain better WRM schemes. In recent years, numerous frameworks have emerged, including Tensorforce, an open-source RL library based on TensorFlow [21], and Keras-RL [22]. Additional frameworks include TF-Agents [23], RL Coach [24], Acme [25], Dopamine [26], RLlib [27], and Stable-Baselines3 [28]. RL can be integrated with a DNN as a function approximator to improve its performance. Deep reinforcement learning (DRL) is capable of automatically and directly abstracting and extracting high-level features while avoiding complex feature engineering for a specific task. Popular DRL algorithms derived from Q-learning include the deep Q-network (DQN) [5], double DQN (DDQN) [29], and dueling DQN [30]. Other Python packages are available to facilitate the implementation of RL, including PFRL, a PyTorch-based RL library [31]. Engineering-focused languages and environments such as MATLAB (MathWorks) and Modelica (Modelica Association Project) have also been used for the development and training of RL agents. The application of RL in WRM and planning can help untangle the complexity of conflicting interests and their interactions. It can also provide a powerful tool for simulating new management scenarios so that the consequences of decisions are easier to understand [8]. The categorization of RL algorithms by OpenAI, together with [27][28][29][30][31][32][33][34][35][36][37][38][39][40][41][42][43][44], was used to draw the overall picture in Figure 3.

Figure 3. Reinforcement learning (RL) categorization (all the abbreviations are provided in the nomenclature).

This entry is adapted from the peer-reviewed paper 10.3390/w15040620

References

- Razavi, S.; Hannah, D.M.; Elshorbagy, A.; Kumar, S.; Marshall, L.; Solomatine, D.P.; Dezfuli, A.; Sadegh, M.; Famiglietti, J. Coevolution of Machine Learning and Process-Based Modelling to Revolutionize Earth and Environmental Sciences: A Perspective. Hydrol. Process. 2022, 36, e14596.

- Reinsel, D.; Gantz, J.; Rydning, J. The Digitization of the World From Edge to Core; International Data Corporation: Framingham, MA, USA, 2018; Available online: https://www.seagate.com/files/www-content/our-story/trends/files/idc-seagate-dataage-whitepaper.pdf (accessed on 7 October 2022).

- UNESCO IHP-IX: Strategic Plan of the Intergovernmental Hydrological Programme: Science for a Water Secure World in a Changing Environment, Ninth Phase 2022-2029. 2022. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000381318 (accessed on 7 October 2022).

- Mosaffa, H.; Sadeghi, M.; Mallakpour, I.; Naghdyzadegan Jahromi, M.; Pourghasemi, H.R. Application of Machine Learning Algorithms in Hydrology. Comput. Earth Environ. Sci. 2022, 585–591.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-Level Control through Deep Reinforcement Learning. Nature 2015, 518, 529–533.

- Géron, A. Hands-on Machine Learning with Scikit-Learn, Keras, and TensorFlow: Concepts, Tools, and Techniques to Build Intelligent Systems, 2nd ed.; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2022.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT press: Cambridge, MA, USA, 2016; Volume 521, ISBN 978-0262035613.

- Agha-Hoseinali-Shirazi, M.; Bozorg-Haddad, O.; Laituri, M.; DeAngelis, D. Application of Agent Base Modeling in Water Resources Management and Planning. Springer Water 2021, 177–216.

- Tang, T.; Jiao, D.; Chen, T.; Gui, G. Medium- and Long-Term Precipitation Forecasting Method Based on Data Augmentation and Machine Learning Algorithms. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 1000–1011.

- Rahman, A.T.M.S.; Kono, Y.; Hosono, T. Self-Organizing Map Improves Understanding on the Hydrochemical Processes in Aquifer Systems. Sci. Total Environ. 2022, 846, 157281.

- Jang, B.; Kim, M.; Harerimana, G.; Kim, J.W. Q-Learning Algorithms: A Comprehensive Classification and Applications. IEEE Access 2019, 7, 133653–133667.

- Zhu, Z.; Hu, Z.; Chan, K.W.; Bu, S.; Zhou, B.; Xia, S. Reinforcement Learning in Deregulated Energy Market: A Comprehensive Review. Appl. Energy 2023, 329, 120212.

- Nurcahyono, A.; Fadhly Jambak, F.; Rohman, A.; Faisal Karim, M.; Neves-Silva, P.; Cruz Foundation, O.; Horizonte, B. Shifting the Water Paradigm from Social Good to Economic Good and the State’s Role in Fulfilling the Right to Water. F1000Research 2022, 11, 490.

- Damascene, N.J.; Dithebe, M.; Laryea, A.E.N.; Medina, J.A.M.; Bian, Z.; Gilbert, M.A.S.E.N.G.O. Prospective Review of Mining Effects on Hydrology in a Water-Scarce Eco-Environment; North American Academic Research: San Francisco, CA, USA, 2022; Volume 5, pp. 352–365.

- Yan, B.; Jiang, H.; Zou, Y.; Liu, Y.; Mu, R.; Wang, H. An Integrated Model for Optimal Water Resources Allocation under “3 Redlines” Water Policy of the Upper Hanjiang River Basin. J. Hydrol. Reg. Stud. 2022, 42, 101167.

- Xiao, Y.; Fang, L.; Hipel, K.W. Agent-Based Modeling Approach to Investigating the Impact of Water Demand Management; American Society of Civil Engineers (ASCE): Reston, VA, USA, 2018.

- Lin, Z.; Lim, S.H.; Lin, T.; Borders, M. Using Agent-Based Modeling for Water Resources Management in the Bakken Region. J. Water Resour. Plan. Manag. 2020, 146, 05019020.

- Berglund, E.Z. Using Agent-Based Modeling for Water Resources Planning and Management. J. Water Resour. Plan. Manag. 2015, 141, 04015025.

- Tourigny, A.; Filion, Y. Sensitivity Analysis of an Agent-Based Model Used to Simulate the Spread of Low-Flow Fixtures for Residential Water Conservation and Evaluate Energy Savings in a Canadian Water Distribution System. J. Water Resour. Plan. 2019, 145, 1.

- Giacomoni, M.H.; Berglund, E.Z. Complex Adaptive Modeling Framework for Evaluating Adaptive Demand Management for Urban Water Resources Sustainability. J. Water Resour. Plan. Manag. 2015, 141, 11.

- Tensorforce: A TensorFlow Library for Applied Reinforcement Learning—Tensorforce 0.6.5 Documentation. Available online: https://tensorforce.readthedocs.io/en/latest/ (accessed on 26 October 2022).

- Plappert, M. keras-rl. “GitHub—Keras-rl/Keras-rl: Deep Reinforcement Learning for Keras.” GitHub Repos. 2019. Available online: https://github.com/keras-rl/keras-rl (accessed on 26 October 2022).

- Guadarrama, S.; Korattikara, A.; Ramirez, O.; Castro, P.; Holly, E.; Fishman, S.; Wang, K.; Gonina, E.; Wu, N.; Kokiopoulou, E.; et al. TF-Agents: A Library for Reinforcement Learning in Tensorflow. GitHub Repos. 2018. Available online: https://github.com/tensorflow/agents (accessed on 26 October 2022).

- Caspi, I.; Leibovich, G.; Novik, G.; Endrawis, S. Reinforcement Learning Coach, December 2017.

- Hoffman, M.W.; Shahriari, B.; Aslanides, J.; Barth-Maron, G.; Nikola Momchev, D.; Sinopalnikov, D.; Stańczyk, P.; Ramos, S.; Raichuk, A.; Vincent, D.; et al. Acme: A Research Framework for Distributed Reinforcement Learning. arXiv 2020, arXiv:2006.00979.

- Castro, P.S.; Moitra, S.; Gelada, C.; Kumar, S.; Bellemare, M.G. Dopamine: A Research Framework for Deep Reinforcement Learning. arXiv 2018, arXiv:1812.06110.

- Liang, E.; Liaw, R.; Nishihara, R.; Moritz, P.; Fox, R.; Gonzalez, J.; Goldberg, K. Ray RLlib: A Composable and Scalable Reinforcement Learning Library. arXiv 2017, arXiv:1712.09381.

- Raffin, A.; Hill, A.; Gleave, A.; Kanervisto, A.; Ernestus, M.; Dormann, N. Stable-Baselines3: Reliable Reinforcement Learning Implementations. J. Mach. Learn. Res. 2021, 22, 12348–12355.

- Van Hasselt, H.; Guez, A.; Silver, D. Deep Reinforcement Learning with Double Q-Learning. Proc. AAAI Conf. Artif. Intell. 2016, 30, 2094–2100.

- Wang, Z.; Schaul, T.; Hessel, M.; Van Hasselt, H.; Lanctot, M.; De Freitas, N. Dueling Network Architectures for Deep Reinforcement Learning. In Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 1995–2003.

- PFRL, a Deep Reinforcement Learning Library — PFRL 0.3.0 Documentation. Available online: https://pfrl.readthedocs.io/en/latest/ (accessed on 26 October 2022).

- Mnih, V.; Badia, A.P.; Mirza, M.; Graves, A.; Harley, T.; Lillicrap, T.P.; Silver, D.; Kavukcuoglu, K. Asynchronous Methods for Deep Reinforcement Learning. arXiv 2016, arXiv:1602.01783v2.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal Policy Optimization Algorithms. arXiv 2017, arXiv:1707.06347.

- Racanière, S.; Weber, T.; Reichert, D.P.; Buesing, L.; Guez, A.; Rezende, D.; Badia, A.P.; Vinyals, O.; Heess, N.; Li, Y.; et al. Imagination-Augmented Agents for Deep Reinforcement Learning. arXiv 2017, arXiv:1707.06203.

- Feinberg, V.; Wan, A.; Stoica, I.; Jordan, M.I.; Gonzalez, J.E.; Levine, S. Model-Based Value Estimation for Efficient Model-Free Reinforcement Learning. arXiv 2018, arXiv:1803.00101.

- Silver, D.; Hubert, T.; Schrittwieser, J.; Antonoglou, I.; Lai, M.; Guez, A.; Lanctot, M.; Sifre, L.; Kumaran, D.; Graepel, T.; et al. Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm. arXiv 2017, arXiv:1712.01815.

- Schulman, J.; Levine, S.; Moritz, P.; Jordan, M.; Abbeel, P. Trust Region Policy Optimization. arXiv 2015, arXiv:1502.05477.

- Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous Control with Deep Reinforcement Learning. arXiv 2015, arXiv:1509.02971v6.

- Fujimoto, S.; Van Hoof, H.; Meger, D. Addressing Function Approximation Error in Actor-Critic Methods. arXiv 2018, arXiv:1802.09477.

- Haarnoja, T.; Zhou, A.; Abbeel, P.; Levine, S. Soft Actor-Critic: Off-Policy Maximum Entropy Deep Reinforcement Learning with a Stochastic Actor. arXiv 2018, arXiv:1801.01290.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing Atari with Deep Reinforcement Learning. arXiv 2013, arXiv:1312.5602.

- Bellemare, M.G.; Dabney, W.; Munos, R. A Distributional Perspective on Reinforcement Learning. arXiv 2017, arXiv:1707.06887.

- Dabney, W.; Rowland, M.; Bellemare, M.G.; Munos, R. Distributional Reinforcement Learning with Quantile Regression. arXiv 2017, arXiv:1710.10044.

- Andrychowicz, M.; Wolski, F.; Ray, A.; Schneider, J.; Fong, R.; Welinder, P.; McGrew, B.; Tobin, J.; Abbeel, P.; Zaremba, W. Hindsight Experience Replay. arXiv 2017, arXiv:1707.01495.
