This paper describes the development of a flower classification application using pre-trained deep-learning models. The application was built with the PyTorch library and trained on a dataset of 102 flower categories. We compared two convolutional neural network (CNN) architectures, Vgg19 and AlexNet. Input images were normalized using the channel means and standard deviations used by the pre-trained models. The trained model achieved a validation accuracy above 97% after tuning hyperparameters such as the learning rate, the number of units in the classifier, and the number of epochs. This is far above the accuracy of random guessing, which is about 1% for 102 classes. This method of flower classification can be used in real-time projects by gardeners and horticulturists.

- deep learning

- image classification

- flower recognition

- Torch-vision

- ImageNet

- Normalization

- Pre-trained models

Introduction:

Flower classification is an important task in computer vision and has various applications in fields such as agriculture, horticulture, and botany. In recent years, deep learning techniques have shown promising results in image classification tasks, including flower classification. This paper proposes a deep-learning model for flower classification using a pre-trained network and a feed-forward classifier.

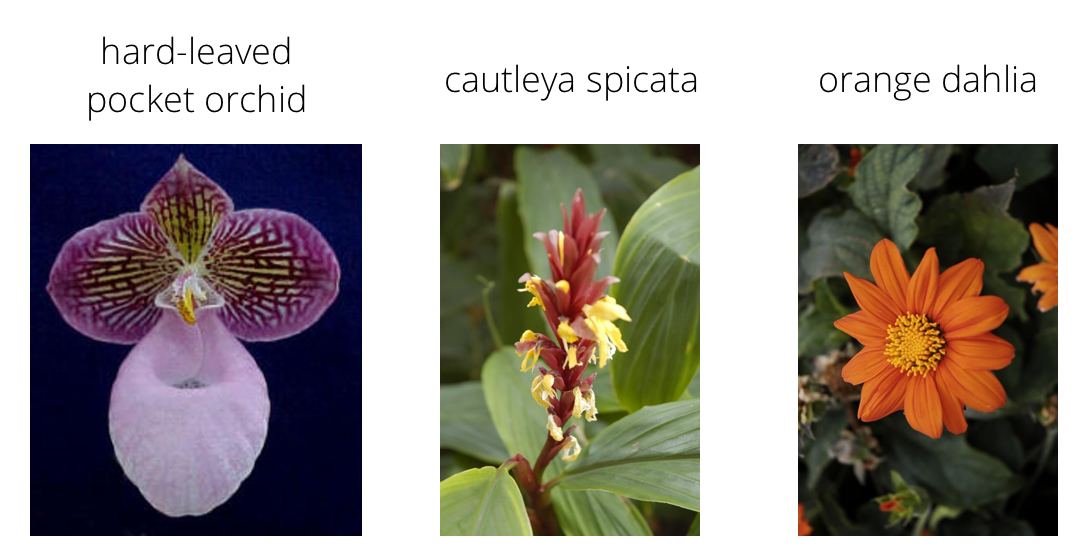

Figure 1: Image from Oxford Dataset

The recognition and classification of objects in images have been a popular research topic in the computer vision community for many years. Deep learning models [2], especially convolutional neural networks (CNNs), have been shown to achieve state-of-the-art performance in image classification tasks. In this paper, we present a flower classification application that uses pre-trained deep-learning models to recognize different species of flowers. The task is made harder by the fact that flowers of the same species can look quite different from one another, as the example of Amaryllis belladonna shows. Amaryllis varies in color, shape, and texture, which makes recognition challenging.

Figure 2: Amaryllis flower

Background:

Image classification, the task of assigning a label or category to an image based on its content, is a fundamental problem in computer vision with applications such as object recognition, face recognition, and image retrieval. It is challenging because images can be complex and can contain different objects, backgrounds, and lighting conditions. Deep learning has revolutionized image classification and has achieved state-of-the-art results on many benchmarks, such as ImageNet, CIFAR-10, and MNIST. Deep learning models learn features automatically from raw data and can represent complex relationships between inputs and outputs.

Convolutional neural networks (CNNs) are a type of deep learning model commonly used for image classification. CNNs use convolutional layers to extract features from images, pooling layers to reduce the spatial dimensions of those features, and fully connected layers to map the features to the output classes.

Transfer learning [3] is a technique in deep learning where a pre-trained model is used as a starting point for a new task. The pre-trained model has already learned useful features from a large dataset, and it can be fine-tuned on a smaller dataset for the new task. Transfer learning can save time and computational resources, and it can also improve the performance of the new model. In this application of transfer learning, we froze all parameters of the pre-trained network because our dataset is relatively small. This lets us reuse, unchanged, the features encoded in the weights and biases that the pre-trained model learned on the ImageNet dataset.

Dataset:

We use the 102 flower categories dataset for training and evaluation. It consists of 102 flower categories, each with 40 to 258 images, for 8189 images in total. The images were originally obtained from Flickr and were manually labeled by the Visual Geometry Group at the University of Oxford [1]. They are in JPEG format and have varying sizes and aspect ratios. We preprocess the images by resizing them to 224x224 pixels and normalizing each color channel with the means [0.485, 0.456, 0.406] and standard deviations [0.229, 0.224, 0.225] used by the pre-trained models. We also apply random scaling, cropping, and flipping as data augmentation to help the network generalize.

Model:

We use a pre-trained network from the torchvision models library to extract image features. Specifically, we use the Vgg19 and densenet121 architectures [4], which were trained on the ImageNet dataset and have shown excellent performance on various image classification tasks. We remove the final fully connected layer of the pre-trained model and replace it with a feed-forward classifier that uses ReLU activations and dropout and ends in a fully connected layer with 102 output neurons, one for each flower category. The weights of all pre-trained layers are frozen, so the network acts as a fixed feature extractor and only the new classifier is trained on the flower dataset. We experiment with different hyperparameters [7], including the learning rate, the number of units in the classifier, and the number of epochs, to find the best model. Training uses mini-batch gradient descent, with batches supplied by PyTorch's DataLoader, the core utility of the framework's data-loading pipeline. Mini-batch updates are computationally more efficient than per-sample updates, and the lower update frequency generally gives more stable training.
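The freeze-and-replace step described above might look like the sketch below. The helper names `freeze`, `make_classifier`, and `attach_head` are hypothetical, and the single hidden layer and dropout rate of 0.5 are assumptions, since the text does not give the exact classifier layout. A tiny stand-in backbone is used so the sketch runs without downloading ImageNet weights.

```python
import torch
from torch import nn


def freeze(module: nn.Module) -> None:
    """Stop gradients for every parameter of `module`."""
    for param in module.parameters():
        param.requires_grad = False


def make_classifier(in_features, hidden_units=4096, num_classes=102, dropout=0.5):
    """Feed-forward head with ReLU and dropout (layout is an assumption)."""
    return nn.Sequential(
        nn.Linear(in_features, hidden_units),
        nn.ReLU(),
        nn.Dropout(dropout),
        nn.Linear(hidden_units, num_classes),  # one output per flower category
    )


def attach_head(backbone, in_features, **head_kwargs):
    """Freeze the backbone, then swap its `.classifier` for a trainable head."""
    freeze(backbone)
    backbone.classifier = make_classifier(in_features, **head_kwargs)
    return backbone


class TinyBackbone(nn.Module):
    """Stand-in with the same `.features` / `.classifier` attributes as VGG."""

    def __init__(self):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 4, kernel_size=3, padding=1),
            nn.AdaptiveAvgPool2d(1),
            nn.Flatten(),
        )
        self.classifier = nn.Linear(4, 1000)

    def forward(self, x):
        return self.classifier(self.features(x))


model = attach_head(TinyBackbone(), in_features=4, hidden_units=8)
logits = model(torch.randn(2, 3, 16, 16))
print(logits.shape)
```

With torchvision 0.13 or later, the same helper could be applied to a real network, for example `attach_head(models.vgg19(weights=models.VGG19_Weights.IMAGENET1K_V1), in_features=25088)`; for densenet121 the classifier input size is 1024.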

Training:

We trained the model using stochastic gradient descent (SGD) with a learning rate of 0.01 and a momentum of 0.9, for 25 epochs with a batch size of 4. We held out 20% of the dataset as a validation set to monitor the performance of the model during training. To limit overfitting, we used dropout as a regularizer and, in the deeper classifier networks, batch normalization. Training is best stopped once the validation loss stops decreasing, since continuing beyond that point risks overfitting.
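A training loop matching this description could be sketched as follows. The cross-entropy loss, the `patience` value, and the function names are assumptions (the text does not state the loss function or a stopping rule beyond watching the validation loss). Synthetic data and a linear model are used so that the sketch runs without the flower images.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset


def train_one_epoch(model, loader, criterion, optimizer):
    """One pass over the training data; returns the mean loss per sample."""
    model.train()
    total_loss, seen = 0.0, 0
    for inputs, targets in loader:
        optimizer.zero_grad()
        loss = criterion(model(inputs), targets)
        loss.backward()
        optimizer.step()
        total_loss += loss.item() * targets.size(0)
        seen += targets.size(0)
    return total_loss / seen


@torch.no_grad()
def evaluate(model, loader, criterion):
    """Returns (mean loss, top-1 accuracy) without updating the model."""
    model.eval()
    total_loss, correct, seen = 0.0, 0, 0
    for inputs, targets in loader:
        outputs = model(inputs)
        total_loss += criterion(outputs, targets).item() * targets.size(0)
        correct += (outputs.argmax(dim=1) == targets).sum().item()
        seen += targets.size(0)
    return total_loss / seen, correct / seen


def fit(model, train_loader, val_loader, epochs=25, lr=0.01, momentum=0.9, patience=3):
    """SGD training that stops early once validation loss stops improving."""
    criterion = nn.CrossEntropyLoss()  # assumed loss function
    trainable = [p for p in model.parameters() if p.requires_grad]
    optimizer = torch.optim.SGD(trainable, lr=lr, momentum=momentum)
    best_val_loss, stale_epochs, history = float("inf"), 0, []
    for epoch in range(1, epochs + 1):
        train_loss = train_one_epoch(model, train_loader, criterion, optimizer)
        val_loss, val_acc = evaluate(model, val_loader, criterion)
        history.append((epoch, train_loss, val_loss, val_acc))
        if val_loss < best_val_loss:
            best_val_loss, stale_epochs = val_loss, 0
        else:
            stale_epochs += 1
            if stale_epochs >= patience:  # `patience` is a hypothetical setting
                break
    return history


# Demo on synthetic two-class data, not the flower dataset.
torch.manual_seed(0)
features = torch.randn(40, 5)
labels = (features[:, 0] > 0).long()
data = TensorDataset(features, labels)
train_loader = DataLoader(data, batch_size=4, shuffle=True)
val_loader = DataLoader(data, batch_size=4)
history = fit(nn.Linear(5, 2), train_loader, val_loader, epochs=5)
for epoch, train_loss, val_loss, val_acc in history:
    print(f"{epoch:2d}  {train_loss:.3f}  {val_loss:.3f}  {val_acc:.3f}")
```

Passing only parameters with `requires_grad=True` to the optimizer means that frozen pre-trained layers are never updated.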

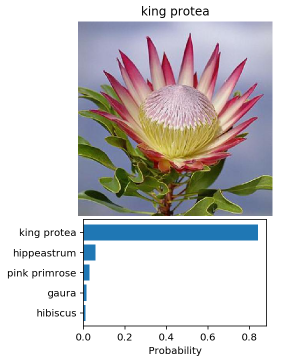

Results:

The trained model achieved a validation accuracy of 97%.

| Epoch | Training Loss | Validation Loss | Accuracy |
|---|---|---|---|
| 1 | 6.817 | 3.361 | 0.536 |
| 2 | 4.090 | 2.083 | 0.698 |
| 3 | 3.080 | 1.394 | 0.728 |
| 4 | 1.702 | 1.120 | 0.792 |
| 5 | 1.243 | 0.891 | 0.809 |
| 6 | 0.878 | 0.734 | 0.866 |
| … | … | … | … |
| 25 | 0.085 | 0.353 | 0.970 |

We also experimented with different hyperparameters, such as the learning rate, the number of units in the classifier, and the number of epochs, to find the best model. The best model achieved a validation accuracy of 97% with a learning rate of 0.001, 4096 hidden units in the first layer of the classifier, and 25 epochs of training.
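One simple way to organize such a search is an exhaustive grid over the three hyperparameters, sketched below. The function `grid_search` and the placeholder training function are hypothetical. The learning rates, 4096 hidden units, and 25 epochs come from the text, while the other grid values are illustrative only. The placeholder returns a constant score, so the sketch implies nothing about which configuration performs best.

```python
from itertools import product


def grid_search(train_and_validate, grid):
    """Evaluate every combination in `grid`; return (best_config, best_score)."""
    keys = list(grid)
    best_config, best_score = None, float("-inf")
    for values in product(*(grid[key] for key in keys)):
        config = dict(zip(keys, values))
        score = train_and_validate(**config)  # expected: validation accuracy
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


grid = {
    "lr": [0.01, 0.001],            # both values appear in the text
    "hidden_units": [1024, 4096],   # 1024 is an illustrative extra value
    "epochs": [10, 25],             # 10 is an illustrative extra value
}

evaluated = []


def placeholder_train_and_validate(lr, hidden_units, epochs):
    # Hypothetical stand-in: a real version would train a model with these
    # settings and return its validation accuracy.
    evaluated.append((lr, hidden_units, epochs))
    return 0.0


best_config, best_score = grid_search(placeholder_train_and_validate, grid)
print(len(evaluated), "configurations evaluated")
```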

Evaluation:

We evaluate the performance of our model on the validation set, which consists of 20% of the images in the dataset. We achieve a validation accuracy above 97%, which is a good result considering the dataset's complexity and the limited training data. We also evaluate the model on the test set and achieve similar performance.
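A 20% validation hold-out like the one mentioned above could be produced with a seeded random split, as in this sketch. The helper name `split_train_val`, the seed, and the use of a purely random (non-stratified) split are assumptions. A placeholder dataset with the same number of items as the flower dataset stands in for the real images.

```python
import torch
from torch.utils.data import TensorDataset, random_split


def split_train_val(dataset, val_fraction=0.2, seed=0):
    """Randomly split `dataset` into training and validation subsets."""
    n_val = int(len(dataset) * val_fraction)
    n_train = len(dataset) - n_val
    generator = torch.Generator().manual_seed(seed)  # fixed seed, reproducible
    return random_split(dataset, [n_train, n_val], generator=generator)


# Placeholder with 8189 items, the size of the dataset described in the text.
placeholder = TensorDataset(torch.zeros(8189, 1))
train_set, val_set = split_train_val(placeholder)
print(len(train_set), len(val_set))  # 6552 1637
```

Fixing the seed keeps the validation images the same across runs, which makes accuracies from different hyperparameter settings comparable.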

Conclusion:

In this paper, we propose a deep-learning model for flower classification using a pre-trained network and a feed-forward classifier. We achieve good performance on the 102 flower categories dataset and show that deep learning techniques can be used for flower classification tasks. Our approach can be extended to other datasets and can be used in various applications, including agriculture, horticulture, and botany. The trained model achieved a validation accuracy above 97%, which is a good result for a multi-class classification problem.

References

[1] Oxford Visual Geometry Group https://www.robots.ox.ac.uk/~vgg/data/flowers/102/categories.html

[2] Deep Learning architectures such as Convolution’s Understanding of data augmentation for classification

[3] Transfer Learning Reference https://cs231n.github.io/transfer-learning/

[4] CNN Features off-the-shelf: an Astounding Baseline for Recognition, by Ali Sharif Razavian, Hossein Azizpour, Josephine Sullivan, Stefan Carlsson

[5] paper on the flower classification https://arxiv.org/ftp/arxiv/papers/1708/1708.03763.pdf

[6] paper on the flower classification https://www.robots.ox.ac.uk/~vgg/research/flowers_demo/docs/Chai11.pdf

[7] A Gentle Introduction to Transfer Learning for Deep Learning https://machinelearningmastery.com/transfer-learning-for-deep-learning/

[8] Pytorch Notes https://ikhlestov.github.io/pages/machine-learning/pytorch-notes/

[9] Auto Grad Mechanics https://pytorch.org/docs/master/notes/autograd.html

[10] flower classification using Deep convolutional neural networks https://doi.org/10.1049/iet-cvi.2017.0155

[11] DeCAF paper was a Python-based precursor to the C++ Caffe library.