The rapid development of sensors and information technology has made it possible for machines to recognize and analyze human emotions. Emotion recognition is an important research direction in various fields. Human emotions have many manifestations. Therefore, emotion recognition can be realized by analyzing facial expressions, speech, behavior, or physiological signals. These signals are collected by different sensors. Correct recognition of human emotions can promote the development of affective computing.

- sensors for emotion recognition

- emotion models

- emotional signal processing

1. Introduction

Emotion is a comprehensive manifestation of people’s physiological and psychological states; emotion recognition was systematically proposed in the 1990s [1]. With the rapid development of science and technology, emotion recognition has been widely used in various fields, such as human-computer interactions (HCI) [2], medical health [3], Internet education [4], security monitoring [5], intelligent cockpit [6], psychological analysis [7], and the entertainment industry [8].

Emotion recognition can be realized through different detection methods and different sensors. Sensors are combined with advanced algorithm models and rich data to form human-computer interaction systems [9][10] or robot systems [11]. In the field of medical and health care [12], emotion recognition can be used to detect the patient’s psychological state or adjuvant treatment, and improve medical efficiency and medical experience. In the field of Internet education [13], emotion recognition can be used to detect students’ learning status and knowledge acceptance, and cooperate with relevant reminders to improve learning efficiency. In the field of criminal interrogation [14], emotion recognition can be used to detect lies (authenticity test). In the field of intelligent cockpits [15], it can be used to detect the drowsiness and mental state of the driver to improve driving safety. In the field of psychoanalysis [16], it can be used to help analyze whether a person has autism. This technique can also be applied to recognize the emotions of the elderly, infants, and those with special diseases who cannot clearly express their emotions [17][18].

2. Emotion Models

The definition of emotion is the basis of emotion recognition. The basic concept of emotion was proposed by Ekman in the 1970s [19]. At present, there are two mainstream emotion models: the discrete emotion model and the dimensional emotion model.

2.1. Discrete Emotion Model

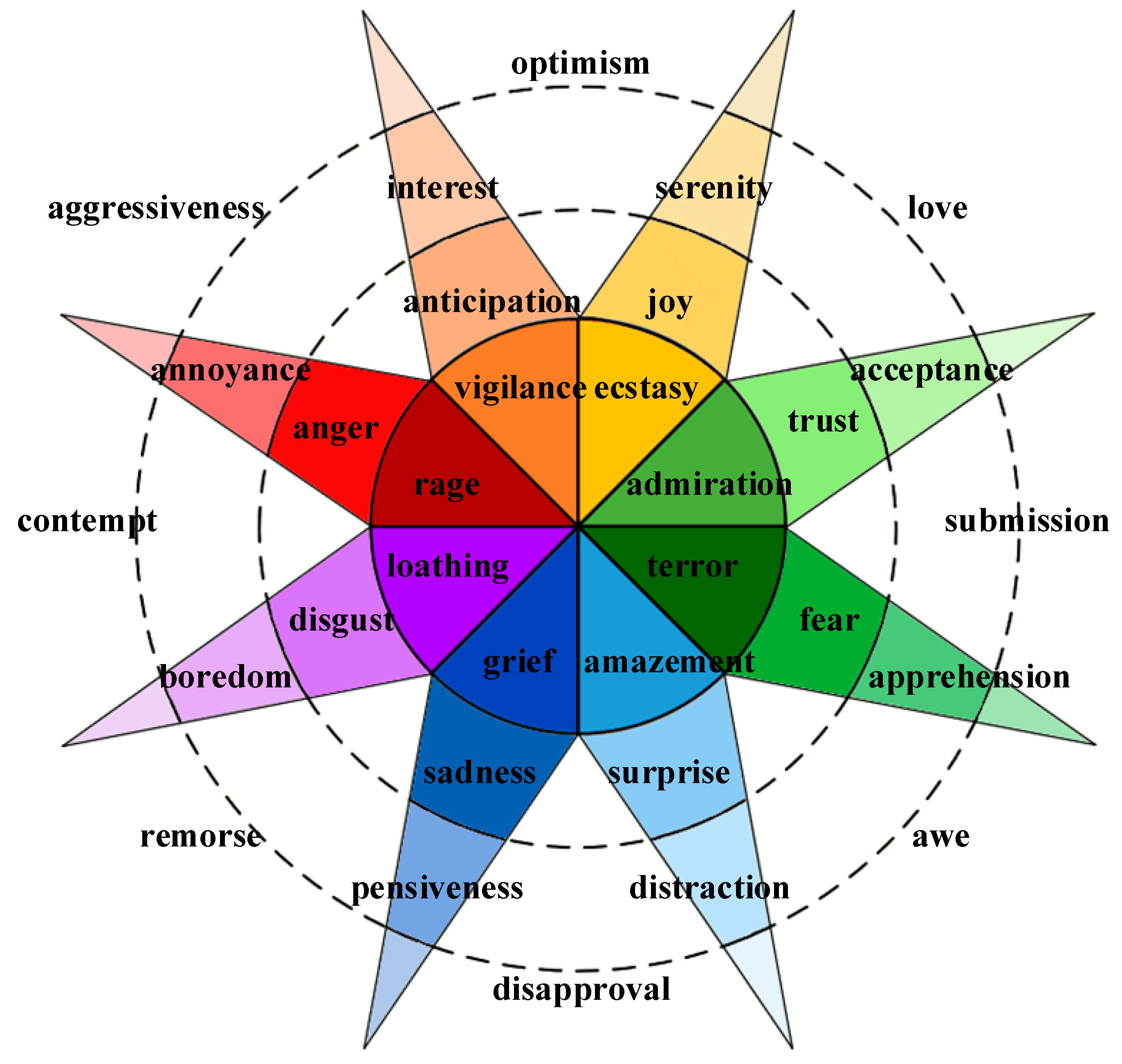

Darwinian evolutionary theory [20] holds that emotions are primitive or fundamental. Each emotion is considered to correspond to a discrete, elementary response or action tendency. The discrete emotion model divides human emotions into a limited number of categories [21], mainly including happiness, sadness, fear, anger, disgust, and surprise. Depending on the theory, there are between two and eight basic emotions. However, these discrete emotion theories share certain features. They hold that emotions are mental and physiological processes; are caused by the awareness of developmental events; are inducing factors for changes in the body’s internal and external signals; and are related to a fixed set of actions or tendencies. Ekman proposed seven characteristics to distinguish basic emotions from other emotional phenomena: automatic appraisal; specific antecedent events; presence in other primates; rapid onset; short duration; unconscious or involuntary appearance; and reflection in unique physiological systems, such as the nervous system and facial expressions. R. Plutchik proposed eight basic emotions and distinguished them according to intensity, forming Plutchik’s wheel model [22], a well-known discrete emotion model, as shown in Figure 1 (adapted from [22]).

Figure 1. Plutchik’s wheel model.

2.2. Dimensional Emotion Model

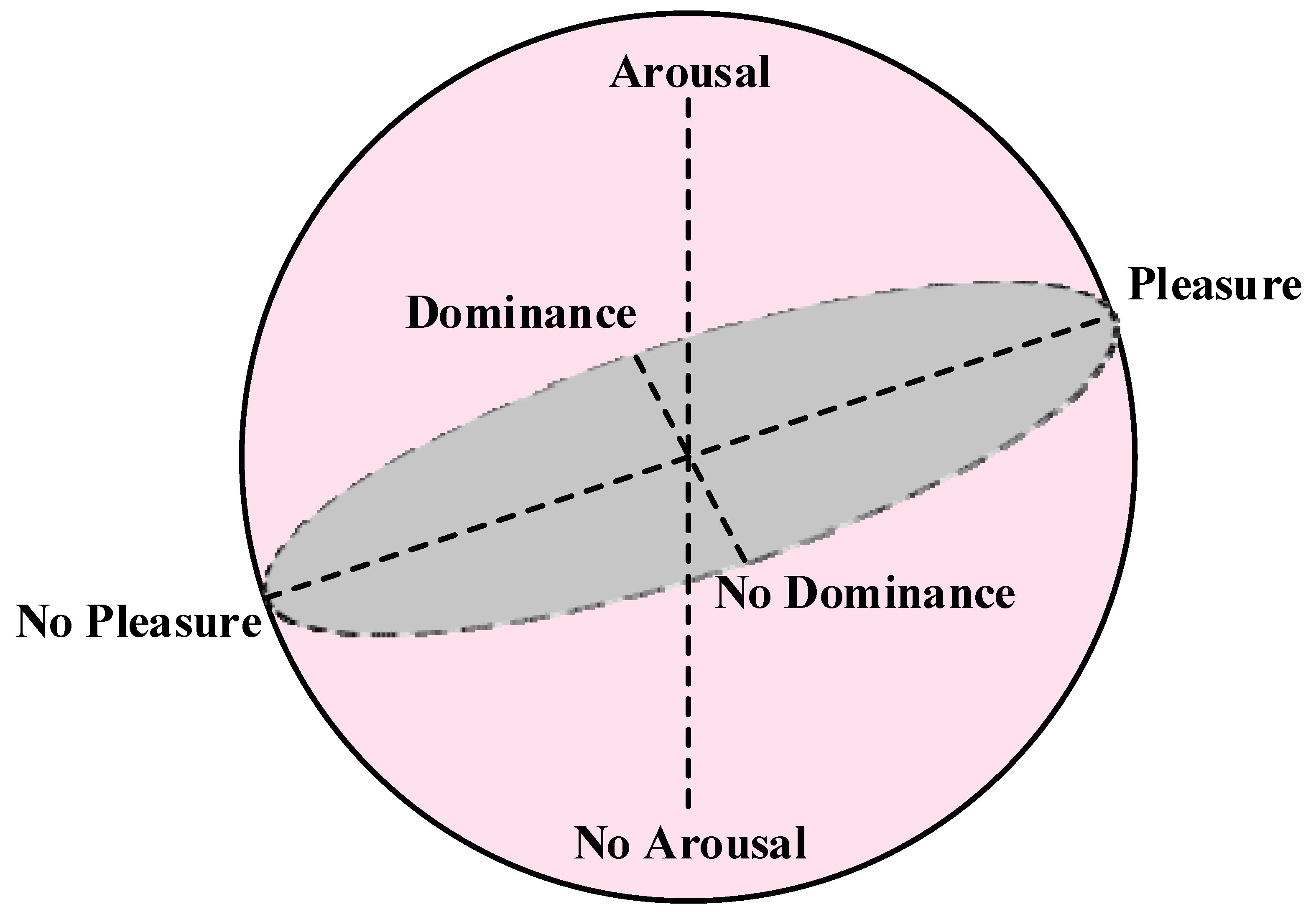

Dimensional emotion models view emotions as combinations of vectors within a more fundamental dimensional space. This enables complex emotions to be researched and measured in fewer dimensions. Core emotions are generally expressed in two-dimensional or three-dimensional space. The two-dimensional model is usually the arousal-valence model. Valence reflects the positive or negative evaluation of an emotion and the degree of pleasure the participant feels. Arousal reflects the intensity or activation of an emotion in the body. The level of arousal reflects the individual’s will, and low arousal means less energy. However, two-dimensional models cannot reliably distinguish core emotions that share similar arousal and valence. For example, both anger and fear have high arousal and low valence. Therefore, a new dimension needs to be introduced to distinguish these emotions.

The most famous three-dimensional emotion model is the pleasure, arousal, and dominance (PAD) model [23] proposed by Mehrabian and Russell through the study of environmental psychology methods [24] and the feeling-thinking-acting [25] model, as shown in Figure 2 (adapted from [23]).

Figure 2. PAD 3D emotion model.

Dominance represents control or position, and indicates whether a certain emotion is submissive. It is worth noting that the dimensional emotion model can accurately identify core emotions. However, for some complex emotions, it loses some detail.
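
The role of the third dimension can be illustrated with a minimal sketch. The PAD coordinates below are hypothetical values chosen only for illustration (they are not taken from [23]); the sketch assumes anger is placed on the dominant side and fear on the submissive side, while both share the same pleasure and arousal.

```python
import math

# Illustrative PAD coordinates in [-1, 1]. These numbers are assumptions
# made for this sketch, not values from the cited literature.
PAD = {
    "anger": {"pleasure": -0.6, "arousal": 0.7, "dominance": 0.5},
    "fear": {"pleasure": -0.6, "arousal": 0.7, "dominance": -0.5},
    "calm": {"pleasure": 0.6, "arousal": -0.6, "dominance": 0.2},
}


def distance(a, b, dims):
    """Euclidean distance between two emotions over the chosen dimensions."""
    return math.sqrt(sum((PAD[a][d] - PAD[b][d]) ** 2 for d in dims))


# In the two-dimensional (pleasure/valence, arousal) plane the two emotions coincide.
d2 = distance("anger", "fear", ("pleasure", "arousal"))
# Adding dominance separates them.
d3 = distance("anger", "fear", ("pleasure", "arousal", "dominance"))
print(d2, d3)
```

With these assumed coordinates, the two-dimensional distance between anger and fear is zero, and only the dominance axis tells them apart.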

3. Sensors for Emotion Recognition

The sensors used for emotion recognition mainly include visual sensors, audio sensors, radar sensors, and other physiological signal sensors. They collect signals of different dimensions, which are then analyzed algorithmically to recognize emotions. Different sensors have different applications in emotion recognition. The advantages and disadvantages of different sensors for emotion recognition are shown in Table 1.

Table 1. Advantages and disadvantages of different sensors for emotion recognition.

| Sensors | Advantages | Disadvantages |
|---|---|---|
| Visual sensor | Simple data collection; high scalability | Restricted by light; easy to cause privacy leakage [26] |
| Audio sensor | Low cost; wide range of applications | Lack of robustness for complex sentiment analysis |
| Radar sensor | Remote monitoring of physiological signals | Radial movement may cause disturbance |
| Other physiological sensors | Ability to monitor physiological signals representing real emotion | Invasive, requires wearing close to the skin surface [27] |
| Multi-sensor fusion | Richer collected information; higher robustness | Multi-channel information needs to be synchronized; the follow-up calculation is relatively large |

3.1. Visual Sensor

Emotion recognition based on visual sensors is one of the most common emotion recognition methods. It has the advantages of low cost and simple data collection. At present, visual sensors are mainly used either for facial expression recognition (FER) [28][29][30] to detect emotion or for remote photoplethysmography (rPPG) to detect heart rate [31][32]. The accuracy of these methods drops severely as light intensity decreases.

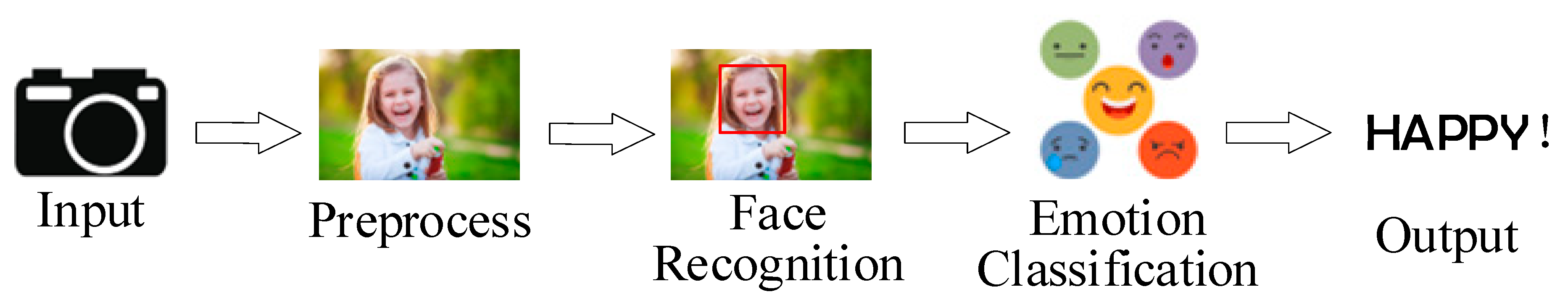

The facial expression recognition process is shown in Figure 3. Facial expressions can intuitively reflect people’s emotions. However, it is difficult for machines to capture the details of expressions as humans do [33]. Facial expressions are also easy to hide, which leads to emotion recognition errors [34]. For example, in some social activities, people often smile politely even though they are not in a happy mood [35].

Figure 3. Facial expression recognition process.

Different individuals have different skin colors, looks, and facial features [36][37], which pose challenges to the accuracy of classification. Facial features of the same emotion can differ between individuals, while the differences between emotions of the same individual can be subtle [38]. Camera-based facial expression recognition therefore faces a classification problem with large intra-class distance and small inter-class distance. It is also difficult to effectively recognize emotions when the face is occluded (for example, by a mask) or captured from different shooting angles [39].

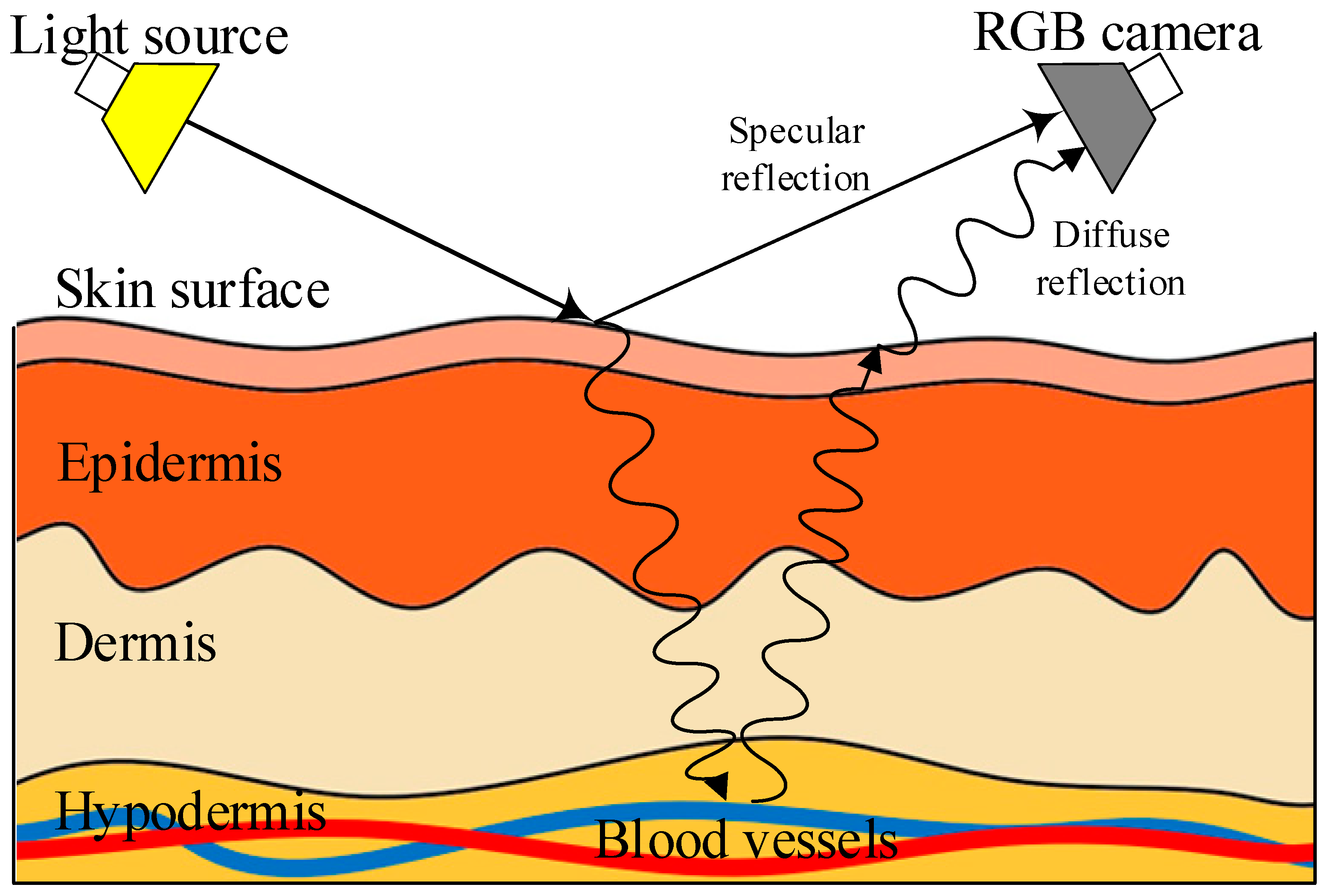

Photoplethysmography (PPG) is an optical technology for the non-invasive detection of various vital signs, which was first proposed in the 1930s [40]. PPG is widely used to detect physiological signals in personal portable devices (smart wristbands, smart watches, etc.) [41][42]. The successful application of PPG has led to the rapid development of remote photoplethysmography (rPPG). rPPG technology uses a multi-wavelength RGB camera to identify minute variations in skin color on the human face caused by changes in blood volume during a heartbeat [43], as shown in Figure 4.

Figure 4. Schematic diagram of rPPG technology.

The rPPG technology can be used to obtain the degree of peripheral vascular constriction and analyze the participant’s emotions. Peripheral vasoconstriction is considered to be a defensive physiological response. When people are in a state of pain, hunger, fear, or anger, the constriction of peripheral blood vessels is enhanced. Conversely, in a calm or relaxed state, this response is reduced.

With improvements in hardware and algorithms, rPPG technology can also realize remote non-contact monitoring and estimation of heart rate [44], respiratory rate [45], blood pressure [46], or other signals. Emotion recognition is performed after analyzing a large amount of monitoring data. These signals allow emotions to be classified into a few types and intensities, but errors arise when many types of emotions must be recognized. It is therefore necessary to combine other physiological information to improve the accuracy of emotion recognition [47].
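
As a rough illustration of how a heart rate can be read from an rPPG trace, the sketch below searches for the strongest spectral component in a plausible pulse band. It is a simplified stand-in, not the method of the cited works: the input is a synthetic signal that plays the role of the per-frame mean green value of a facial region, and the frame rate, band limits (0.7 to 4 Hz), and the function name `dominant_frequency` are assumptions made for this example.

```python
import math


def dominant_frequency(signal, fs, f_lo, f_hi, step=0.01):
    """Return the frequency (Hz) in [f_lo, f_hi] with the largest DFT power."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]  # remove the DC component
    best_f, best_p = f_lo, -1.0
    steps = int(round((f_hi - f_lo) / step))
    for k in range(steps + 1):
        f = f_lo + k * step
        re = sum(x * math.cos(2 * math.pi * f * i / fs) for i, x in enumerate(centered))
        im = sum(x * math.sin(2 * math.pi * f * i / fs) for i, x in enumerate(centered))
        power = re * re + im * im
        if power > best_p:
            best_f, best_p = f, power
    return best_f


# Synthetic trace: a 1.2 Hz pulse component (72 beats/min) plus a slow,
# larger illumination drift at 0.05 Hz. Both are assumptions for the demo.
fs = 30.0  # assumed camera frame rate in frames per second
trace = [
    0.5 * math.sin(2 * math.pi * 1.2 * i / fs)
    + 2.0 * math.sin(2 * math.pi * 0.05 * i / fs)
    for i in range(300)
]

bpm = 60.0 * dominant_frequency(trace, fs, 0.7, 4.0)
print(round(bpm, 1))
```

Restricting the search to the pulse band is what keeps the slow drift from being mistaken for the heartbeat; real recordings would also need face tracking and motion compensation, which are outside this sketch.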

3.2. Audio Sensor

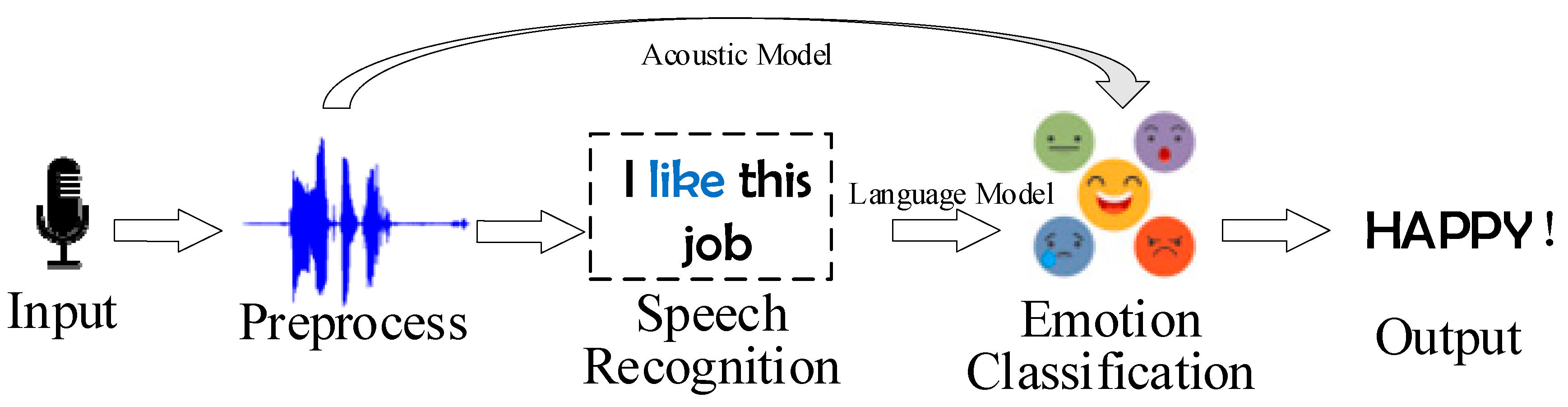

Language is one of the most important components of human culture. People express themselves and communicate with others through language. Speech recognition [48] has promoted the development of speech emotion recognition (SER) [49]. Human speech contains rich information that can be used for emotion recognition [50][51]. Understanding the emotion conveyed in speech is essential for artificial intelligence to engage in effective dialog. SER can be used for call center dialog, automatic response systems, autism diagnosis, etc. [52][53][54]. SER combines acoustic feature extraction [55] with linguistic labeling [56]. The process of SER is shown in Figure 5.

Figure 5. The process of SER.

In the preprocessing stage, the input signal is enhanced by noise reduction and divided into segments [57]; feature extraction and classification are then performed [58]. The language model [59] can identify emotional expressions with specific semantic contributions. The acoustic model can distinguish different emotions contained in the same sentence by analyzing prosodic or spectral features [60]. Combining these two models can improve the accuracy of SER.
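
The segmentation and feature extraction steps can be sketched as follows. This is a minimal example under stated assumptions: the signal is synthetic, the frame length and hop are arbitrary, and short-time energy and zero-crossing rate are used only because they are simple frame-level descriptors; practical SER systems typically rely on richer prosodic and spectral features.

```python
import math


def frames(signal, frame_len, hop):
    """Split a signal into overlapping frames (the segmentation step)."""
    return [signal[s:s + frame_len]
            for s in range(0, len(signal) - frame_len + 1, hop)]


def short_time_energy(frame):
    """Mean squared amplitude of one frame (a loudness-related descriptor)."""
    return sum(x * x for x in frame) / len(frame)


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose sign differs."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


fs = 8000  # assumed sampling rate in Hz
# Synthetic input: 0.1 s of silence followed by 0.1 s of a 200 Hz tone.
quiet = [0.0] * 800
tone = [math.sin(2 * math.pi * 200 * i / fs) for i in range(800)]
signal = quiet + tone

segments = frames(signal, frame_len=200, hop=100)  # 25 ms frames, 12.5 ms hop
features = [(short_time_energy(f), zero_crossing_rate(f)) for f in segments]
print(len(features), features[0], features[-1])
```

Each frame yields one feature vector, and a classifier would then operate on the sequence of these vectors.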

Understanding the emotion in speech is a complex process. Different speaking styles introduce acoustic variability, which directly affects speech feature labeling and extraction [61]. The same sentence may carry different emotions [62], and some emotional differences depend on the speaker’s local culture or living environment, which also poses challenges for SER.

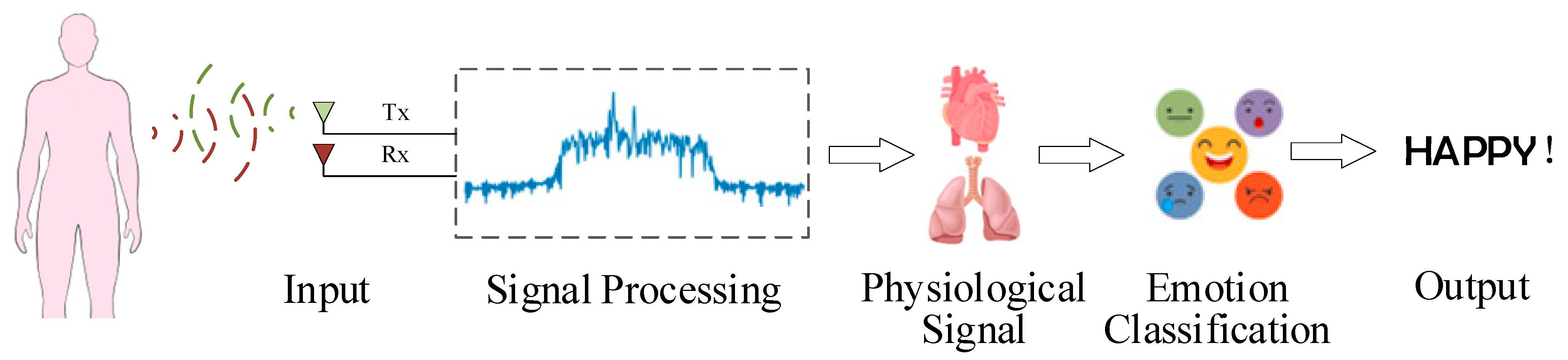

3.3. Radar Sensor

Different emotions cause a series of physiological responses, such as changes in respiratory rate [63], heart rate [64][65], brain waves [66], blood pressure [67], etc. For example, the excitement caused by happiness, anger, or anxiety can lead to an increased heart rate [68]. Positive emotions can increase respiratory rate, and depressive emotions tend to inhibit breathing [69]. Respiration also modulates heart rate, which decreases when exhaling and increases when inhaling [70], and this modulation contributes to heart rate variability (HRV). Currently, radar technology is widely used in remote vital signs detection [71] and wireless sensing [72]. Radar sensors can use the echo signal of the target to analyze the chest micro-motion caused by breathing and heartbeats, which allows these physiological signals to be acquired remotely. The overall process of emotion recognition based on radar sensors is shown in Figure 6.

Figure 6. Process of emotion recognition based on radar sensor.

Compared with visual sensors, emotion recognition based on radar sensors is not restricted by light intensity [73]. However, in real environments, radar echo signals are affected by noise, especially from radial Doppler motion toward or away from the radar [74], which reduces the accuracy of emotion recognition.
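
To make the link between chest micro-motion and the radar echo concrete, the sketch below uses the standard relation for a continuous-wave Doppler radar, in which a radial displacement x shifts the echo phase by 4πx/λ. It simulates ideal I/Q baseband samples and recovers the displacement by arctangent demodulation with phase unwrapping. The carrier wavelength, motion amplitudes, and sampling rate are assumptions chosen for the example, and the signal is noise-free, so this is not a model of any specific system in the cited works.

```python
import math

WAVELENGTH = 0.0125  # metres; assumes a 24 GHz carrier (illustrative choice)


def chest_displacement(t):
    """Synthetic chest motion: 4 mm breathing at 0.25 Hz plus 0.3 mm heartbeat at 1.2 Hz."""
    return (0.004 * math.sin(2 * math.pi * 0.25 * t)
            + 0.0003 * math.sin(2 * math.pi * 1.2 * t))


def unwrap(phases):
    """Remove 2*pi jumps so the phase is continuous from sample to sample."""
    out = [phases[0]]
    for p in phases[1:]:
        delta = p - out[-1]
        delta -= 2 * math.pi * round(delta / (2 * math.pi))
        out.append(out[-1] + delta)
    return out


fs = 50.0  # assumed sampling rate in Hz
times = [i / fs for i in range(500)]

# Ideal I/Q samples: the echo phase is proportional to radial displacement.
iq = [(math.cos(4 * math.pi * chest_displacement(t) / WAVELENGTH),
       math.sin(4 * math.pi * chest_displacement(t) / WAVELENGTH))
      for t in times]

phase = unwrap([math.atan2(q, i) for i, q in iq])
recovered = [p * WAVELENGTH / (4 * math.pi) for p in phase]
max_error = max(abs(r - chest_displacement(t)) for r, t in zip(recovered, times))
print(max_error)
```

Because any radial body movement adds to the same phase term, larger motions toward or away from the radar can swamp the millimetre-scale breathing and heartbeat components, which is the disturbance described above.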

3.4. Other Physiological Sensors

Emotions have been regarded as biological since ancient times. Excessive emotion was believed to affect the functioning of vital organs. Aristotle believed that the influence of emotions on physiology is reflected in changes in physiological states, such as a rapid heartbeat, body heat, or loss of appetite. William James first proposed the theory of the physiology of emotion [75]. He believed that external stimuli would trigger activity in the autonomic nervous system and create a physiological response in the brain. For example, when we feel happy, we laugh; when we feel scared, our hairs stand on end; when we feel sad, we cry.

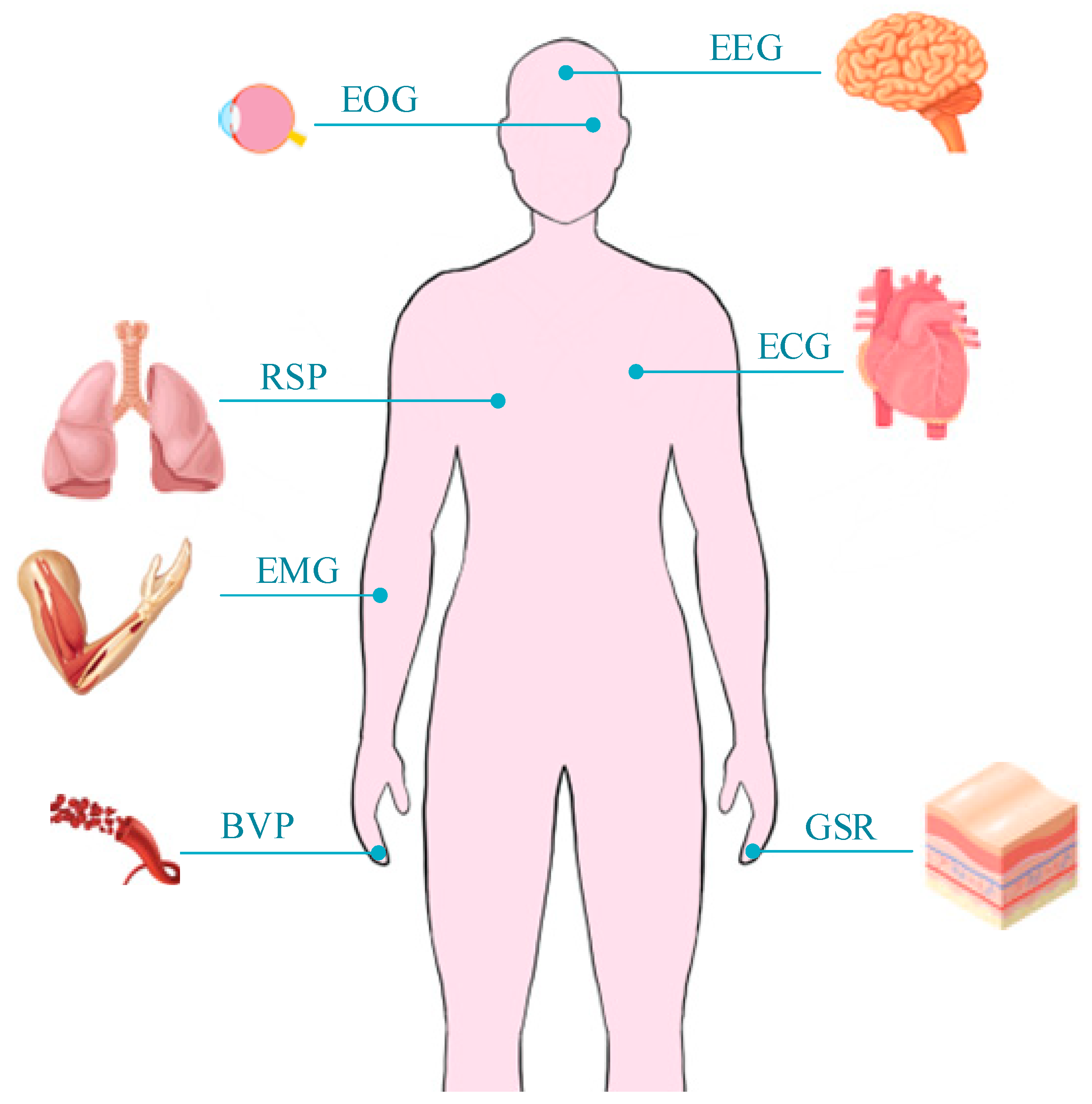

Human emotion is a spontaneous mental state, which is reflected in the physiological changes of the human body and significantly affects our consciousness [76]. Many other physiological signals in the human body can be used for emotion recognition, such as electroencephalogram (EEG) [66], electrocardiogram (ECG) [77], electromyogram (EMG) [78], galvanic skin response (GSR) [79], blood volume pulse (BVP) [80], and electrooculography (EOG) [81], as shown in Figure 7.

Figure 7. Physiological signals detected by other physiological sensors.

EEG measures the electrical activity of the brain through electrodes placed on the scalp. Many studies have shown that the prefrontal cortex, temporal lobe, and anterior cingulate gyrus of the brain are related to the control of emotions. Their levels of activity are associated with emotions such as anxiety, irritability, depression, worry, and resentment.

ECG is a method of electrical monitoring on the surface of the skin that records the electrical signals controlling the heartbeat. Heart rate and heart rate variability obtained through subsequent analysis are widely used in affective computing. Both are controlled by the sympathetic and parasympathetic nervous systems. The sympathetic nervous system can speed up the heart rate, which is reflected in greater psychological stress and activation. The parasympathetic nervous system is responsible for bringing the heart rate down to normal levels, putting the body in a more relaxed state.
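
Heart rate and two commonly used time-domain HRV measures can be derived from the intervals between successive R peaks, as in the minimal sketch below. The R-peak times are hypothetical values, and the choice of SDNN (standard deviation of the intervals) and RMSSD (root mean square of successive differences) is an assumption made for illustration; the source does not specify which HRV measures are used.

```python
import math


def rr_intervals(r_peak_times):
    """Successive differences between R-peak times, converted to milliseconds."""
    return [(b - a) * 1000.0 for a, b in zip(r_peak_times, r_peak_times[1:])]


def mean_heart_rate(rr_ms):
    """Mean heart rate in beats per minute."""
    return 60000.0 / (sum(rr_ms) / len(rr_ms))


def sdnn(rr_ms):
    """Sample standard deviation of the RR intervals (ms)."""
    m = sum(rr_ms) / len(rr_ms)
    return math.sqrt(sum((x - m) ** 2 for x in rr_ms) / (len(rr_ms) - 1))


def rmssd(rr_ms):
    """Root mean square of successive RR differences (ms)."""
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Hypothetical R-peak times in seconds, as a peak detector might report them.
r_peaks = [0.00, 0.80, 1.62, 2.40, 3.22, 4.00]
rr = rr_intervals(r_peaks)

print(mean_heart_rate(rr), sdnn(rr), rmssd(rr))
```

Features such as these would then be passed to a classifier together with other physiological descriptors.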

EMG measures the degree of muscle activation by collecting the voltage difference generated during muscle contraction. The current EMG signal measurement technology can be divided into two types. The first is to study facial expressions by measuring facial muscles. The second is to place electrodes on the body to recognize emotional movements.

GSR is another signal commonly used for emotion recognition. Human skin is normally an insulator. When sweat glands secrete sweat, the electrical conductivity of the skin changes. Therefore, GSR can reflect how much a person is sweating. GSR is usually measured on the palms or soles of the feet, where sweat glands are thought to best reflect changes in emotion. When a person is in an anxious or tense mood, the sweat glands usually secrete more sweat, which causes a greater change in current.

Related physiological signals also include BVP, EOG, etc. These signals all change with emotional changes, and they are not subject to human conscious control [82]. Therefore, these signals can be measured by different physiological sensors to achieve the purpose of emotion recognition. Using these physiological sensors can accurately and quickly obtain real human physiological signals. However, physiological sensors other than visual, audio, and radar sensors usually need to touch the skin or wear related equipment to extract physiological signals, which will affect people’s daily comfort (most people will not accept this monitoring method). Contact sensors are limited by weight and size [27]. These contact devices may also cause people tension and anxiety, which will affect the accuracy of emotion recognition.

3.5. Multi-Sensor Fusion

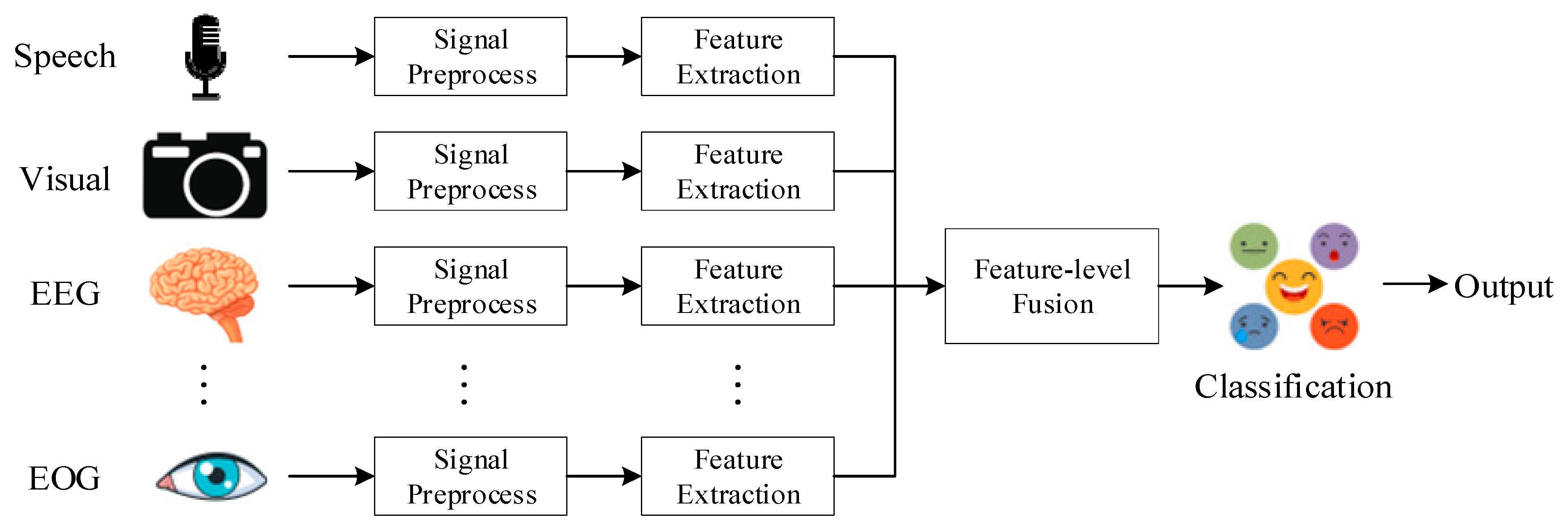

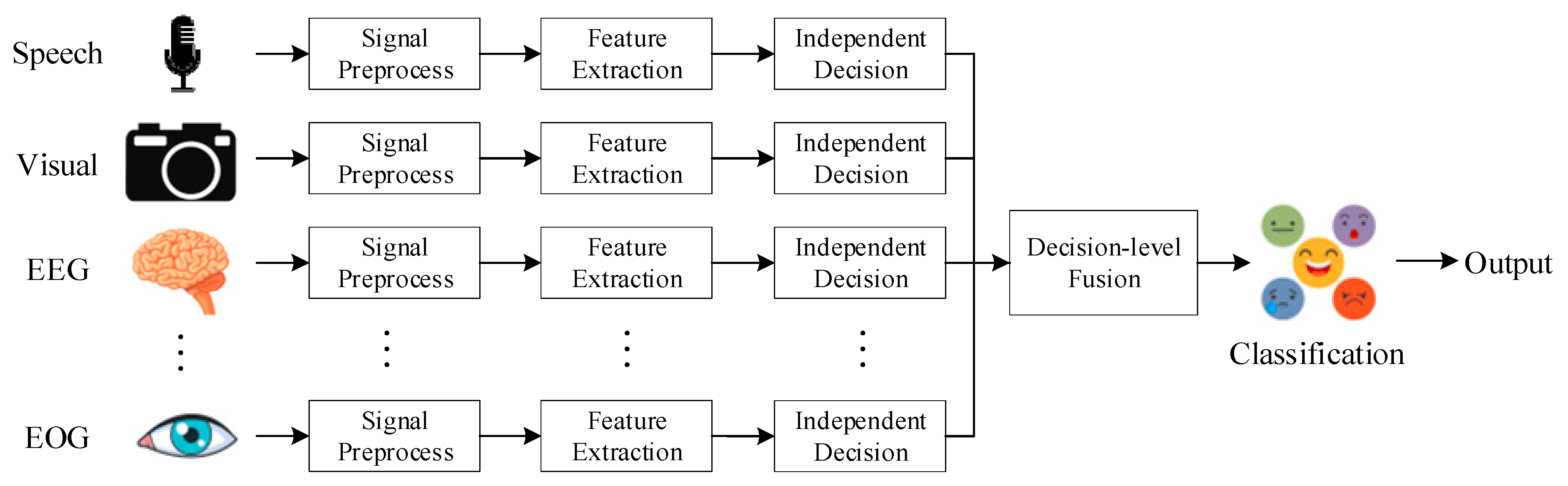

Single-modal emotion recognition has certain deficiencies and usually cannot accurately identify complex emotions. Multi-modal emotion recognition uses signals obtained by multiple sensors to complement each other and obtain more reliable recognition results. It can often achieve the best recognition performance, but the computational complexity increases with the number of channels, and the requirements for collecting multi-modal datasets are higher. Multi-modal emotion recognition has different fusion strategies, which can be mainly divided into pixel-level fusion, feature-level fusion, and decision-level fusion.

Pixel-level fusion [83] refers to the direct fusion of the original data; the semantic information and noise of the signal will be superimposed, which will affect the classification effect after fusion. Processing time is wasted when there is too much redundant information.

The feature-level fusion [84] process is shown in Figure 8. Feature-level fusion occurs in the early stages of the fusion process. Features are extracted from the different input signals and combined into a high-dimensional feature vector, and a classifier then outputs the result. Feature-level fusion retains most of the important information and can greatly reduce computing consumption. However, when the amount of data is small or some details are missing, the final accuracy decreases.

Figure 8. Feature-level fusion.
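
Feature-level fusion can be sketched as a concatenation of per-modality feature vectors followed by a single classifier. In the example below, the feature values, class names, and the nearest-centroid classifier are all hypothetical choices made for illustration; the source does not prescribe a particular classifier.

```python
import math


def fuse_features(*modalities):
    """Concatenate per-modality feature vectors into one fused vector."""
    fused = []
    for vec in modalities:
        fused.extend(vec)
    return fused


def nearest_centroid(x, centroids):
    """Return the label whose centroid is closest to x (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(x, centroids[label]))


# Hypothetical class centroids in the fused space:
# 2 facial features + 2 speech features + 1 heart-rate feature.
centroids = {
    "calm": fuse_features([0.1, 0.8], [0.2, 0.3], [0.3]),
    "stressed": fuse_features([0.7, 0.2], [0.8, 0.6], [0.9]),
}

# One observation, with features already scaled to comparable ranges.
sample = fuse_features([0.6, 0.3], [0.7, 0.5], [0.8])
label = nearest_centroid(sample, centroids)
print(len(sample), label)
```

Note that every modality must be present to build the fused vector, which is one reason this strategy is sensitive to missing data.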

The decision-level fusion [85][86] process is shown in Figure 9. In decision-level fusion, an independent decision is first made from the signals collected by each sensor, and these independent decisions are then fused.

Figure 9. Decision-level fusion.

The advantage of decision-level fusion is that independent feature extraction and classification methods can be set according to different signals. It has lower requirements for the integrity of multimodal data. Decision-level fusion has higher robustness and better classification results.
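
A minimal sketch of decision-level fusion is a weighted average of the class probabilities produced by each modality's own classifier. The modality names, probabilities, and weights below are hypothetical, and weighted averaging is only one possible combination rule. Skipping a modality that produced no decision illustrates the lower requirement on data integrity.

```python
def fuse_decisions(decisions, weights):
    """Weighted average of per-modality class probabilities.

    Modalities whose decision is None (for example, a sensor dropout) are skipped.
    """
    available = {m: p for m, p in decisions.items() if p is not None}
    total = sum(weights[m] for m in available)
    labels = next(iter(available.values())).keys()
    return {
        label: sum(weights[m] * p[label] for m, p in available.items()) / total
        for label in labels
    }


# Hypothetical outputs of three independent classifiers.
decisions = {
    "face": {"happy": 0.6, "sad": 0.4},
    "speech": {"happy": 0.3, "sad": 0.7},
    "radar": None,  # no decision available from this modality
}
weights = {"face": 1.0, "speech": 2.0, "radar": 1.0}  # assumed reliabilities

fused = fuse_decisions(decisions, weights)
best = max(fused, key=fused.get)
print(fused, best)
```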

This entry is adapted from the peer-reviewed paper 10.3390/s23052455

References

- Picard, R.W. Affective Computing; MIT Press: Cambridge, MA, USA, 2000; pp. 4–5.

- Nayak, S.; Nagesh, B.; Routray, A.; Sarma, M. A Human-Computer Interaction Framework for Emotion Recognition through Time-Series Thermal Video Sequences. Comput. Electr. Eng. 2021, 93, 107280.

- Colonnello, V.; Mattarozzi, K.; Russo, P.M. Emotion Recognition in Medical Students: Effects of Facial Appearance and Care Schema Activation. Med. Educ. 2019, 53, 195–205.

- Feng, X.; Wei, Y.J.; Pan, X.L.; Qiu, L.H.; Ma, Y.M. Academic Emotion Classification and Recognition Method for Large-Scale Online Learning Environment-Based on a-Cnn and Lstm-Att Deep Learning Pipeline Method. Int. J. Environ. Res. Public Health 2020, 17, 1941.

- Fu, E.; Li, X.; Yao, Z.; Ren, Y.X.; Wu, Y.H.; Fan, Q.Q. Personnel Emotion Recognition Model for Internet of Vehicles Security Monitoring in Community Public Space. Eurasip J. Adv. Signal Process. 2021, 2021, 81.

- Oh, G.; Ryu, J.; Jeong, E.; Yang, J.H.; Hwang, S.; Lee, S.; Lim, S. DRER: Deep Learning-Based Driver’s Real Emotion Recognizer. Sensors 2021, 21, 2166.

- Sun, X.; Song, Y.Z.; Wang, M. Toward Sensing Emotions With Deep Visual Analysis: A Long-Term Psychological Modeling Approach. IEEE Multimed. 2020, 27, 18–27.

- Mandryk, R.L.; Atkins, M.S.; Inkpen, K.M. A continuous and objective evaluation of emotional experience with interactive play environments. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Montréal, QC, Canada, 22–27 April 2006; pp. 1027–1036.

- Ogata, T.; Sugano, S. Emotional communication between humans and the autonomous robot which has the emotion model. In Proceedings of the 1999 IEEE International Conference on Robotics and Automation (Cat. No. 99CH36288C), Detroit, MI, USA, 10–15 May 1999; pp. 3177–3182.

- Malfaz, M.; Salichs, M.A. A new architecture for autonomous robots based on emotions. IFAC Proc. Vol. 2004, 37, 805–809.

- Rattanyu, K.; Ohkura, M.; Mizukawa, M. Emotion monitoring from physiological signals for service robots in the living space. In Proceedings of the ICCAS 2010, Gyeonggi-do, Republic of Korea, 27–30 October 2010; pp. 580–583.

- Hasnul, M.A.; Aziz, N.A.A.; Alelyani, S.; Mohana, M.; Aziz, A.A. Electrocardiogram-based emotion recognition systems and their applications in healthcare—A review. Sensors 2021, 21, 5015.

- Feidakis, M.; Daradoumis, T.; Caballé, S. Emotion measurement in intelligent tutoring systems: What, when and how to measure. In Proceedings of the 2011 Third International Conference on Intelligent Networking and Collaborative Systems, Fukuoka, Japan, 30 November–2 December 2011; pp. 807–812.

- Saste, S.T.; Jagdale, S. Emotion recognition from speech using MFCC and DWT for security system. In Proceedings of the 2017 International Conference of Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, 20–22 April 2017; pp. 701–704.

- Zepf, S.; Hernandez, J.; Schmitt, A.; Minker, W.; Picard, R. Driver emotion recognition for intelligent vehicles: A survey. ACM Comput. Surv. (CSUR) 2020, 53, 1–30.

- Houben, M.; Van Den Noortgate, W.; Kuppens, P. The relation between short-term emotion dynamics and psychological well-being: A meta-analysis. Psychol. Bull. 2015, 141, 901.

- Bal, E.; Harden, E.; Lamb, D.; Van Hecke, A.V.; Denver, J.W.; Porges, S.W. Emotion recognition in children with autism spectrum disorders: Relations to eye gaze and autonomic state. J. Autism Dev. Disord. 2010, 40, 358–370.

- Martínez, R.; Ipiña, K.; Irigoyen, E.; Asla, N.; Garay, N.; Ezeiza, A.; Fajardo, I. Emotion elicitation oriented to the development of a human emotion management system for people with intellectual disabilities. In Trends in Practical Applications of Agents and Multiagent Systems: 8th International Conference on Practical Applications of Agents and Multiagent Systems; Springer: Berlin/Heidelberg, Germany, 2010; pp. 689–696.

- Ekman, P. Universals and cultural differences in facial expressions of emotion. In Nebraska Symposium on Motivation; University of Nebraska Press: Lincoln, NE, USA, 1971.

- Darwin, C.; Prodger, P. The Expression of the Emotions in Man and Animals; Oxford University Press: Oxford, UK, 1998.

- Ekman, P.; Sorenson, E.R.; Friesen, W.V. Pan-cultural elements in facial displays of emotion. Science 1969, 164, 86–88.

- Plutchik, R. Emotions and Life: Perspectives from Psychology, Biology, and Evolution; American Psychological Association: Washington, DC, USA, 2003.

- Bakker, I.; Van Der Voordt, T.; Vink, P.; De Boon, J. Pleasure, arousal, dominance: Mehrabian and Russell revisited. Curr. Psychol. 2014, 33, 405–421.

- Mehrabian, A.; Russell, J.A. An Approach to Environmental Psychology; MIT Press: Cambridge, MA, USA, 1974.

- Bain, A. The Senses and the Intellect; Longman, Green, Longman, Roberts, and Green: London, UK, 1864.

- Hassan, M.U.; Rehmani, M.H.; Chen, J. Differential privacy in blockchain technology: A futuristic approach. J. Parallel Distrib. Comput. 2020, 145, 50–74.

- Ray, T.R.; Choi, J.; Bandodkar, A.J.; Krishnan, S.; Gutruf, P.; Tian, L.; Ghaffari, R.; Rogers, J. Bio-integrated wearable systems: A comprehensive review. Chem. Rev. 2019, 119, 5461–5533.

- Li, S.; Deng, W. Deep facial expression recognition: A survey. IEEE Trans. Affect. Comput. 2020, 13, 1195–1215.

- Schmid, P.C.; Mast, M.S.; Bombari, D.; Mast, F.W.; Lobmaier, J. How mood states affect information processing during facial emotion recognition: An eye tracking study. Swiss J. Psychol. 2011.

- Sandbach, G.; Zafeiriou, S.; Pantic, M.; Yin, L. Static and dynamic 3D facial expression recognition: A comprehensive survey. Image Vis. Comput. 2012, 30, 683–697.

- Wang, W.; Den Brinker, A.C.; Stuijk, S.; De Haan, G. Algorithmic principles of remote PPG. IEEE Trans. Biomed. Eng. 2016, 64, 1479–1491.

- Xie, K.; Fu, C.-H.; Liang, H.; Hong, H.; Zhu, X. Non-contact heart rate monitoring for intensive exercise based on singular spectrum analysis. In Proceedings of the 2019 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR), San Jose, CA, USA, 28–30 March 2019; pp. 228–233.

- Ghimire, D.; Lee, J. Geometric feature-based facial expression recognition in image sequences using multi-class adaboost and support vector machines. Sensors 2013, 13, 7714–7734.

- Zhao, M.; Adib, F.; Katabi, D. Emotion recognition using wireless signals. In Proceedings of the 22nd Annual International Conference on Mobile Computing and Networking, New York, NY, USA, 3–7 October 2016; pp. 95–108.

- Zhang, J.; Yin, Z.; Chen, P.; Nichele, S. Emotion recognition using multi-modal data and machine learning techniques: A tutorial and review. Inf. Fusion 2020, 59, 103–126.

- Lopes, A.T.; De Aguiar, E.; De Souza, A.F.; Oliveira-Santos, T. Facial expression recognition with convolutional neural networks: Coping with few data and the training sample order. Pattern Recognit. 2017, 61, 610–628.

- Kim, D.H.; Baddar, W.J.; Jang, J.; Ro, Y. Multi-objective based spatio-temporal feature representation learning robust to expression intensity variations for facial expression recognition. IEEE Trans. Affect. Comput. 2017, 10, 223–236.

- Zhong, L.; Liu, Q.; Yang, P.; Huang, J.; Metaxas, D. Learning multiscale active facial patches for expression analysis. IEEE Trans. Cybern. 2014, 45, 1499–1510.

- Schroff, F.; Kalenichenko, D.; Philbin, J. Facenet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 8–10 June 2015; pp. 815–823.

- Hertzman, A.B. Photoelectric plethysmography of the fingers and toes in man. Proc. Soc. Exp. Biol. Med. 1937, 37, 529–534.

- Ram, M.R.; Madhav, K.V.; Krishna, E.H.; Komalla, N.R.; Reddy, K.A. A novel approach for motion artifact reduction in PPG signals based on AS-LMS adaptive filter. IEEE Trans. Instrum. Meas. 2011, 61, 1445–1457.

- Temko, A. Accurate heart rate monitoring during physical exercises using PPG. IEEE Trans. Biomed. Eng. 2017, 64, 2016–2024.

- Poh, M.-Z.; McDuff, D.J.; Picard, R.W. Advancements in noncontact, multiparameter physiological measurements using a webcam. IEEE Trans. Biomed. Eng. 2010, 58, 7–11.

- Li, X.; Chen, J.; Zhao, G.; Pietikainen, M. Remote heart rate measurement from face videos under realistic situations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 4264–4271.

- Tarassenko, L.; Villarroel, M.; Guazzi, A.; Jorge, J.; Clifton, D.; Pugh, C. Non-contact video-based vital sign monitoring using ambient light and auto-regressive models. Physiol. Meas. 2014, 35, 807.

- Jeong, I.C.; Finkelstein, J. Introducing contactless blood pressure assessment using a high speed video camera. J. Med. Syst. 2016, 40, 1–10.

- Zhang, L.; Fu, C.-H.; Hong, H.; Xue, B.; Gu, X.; Zhu, X.; Li, C. Non-contact Dual-modality emotion recognition system by CW radar and RGB camera. IEEE Sens. J. 2021, 21, 23198–23212.

- Hinton, G.; Deng, L.; Yu, D.; Dahl, G.E.; Mohamed, A.R.; Jaitly, N.; Senior, A.; Vanhoucke, V.; Nguyen, P.; Sainath, T.N.; et al. Deep Neural Networks for Acoustic Modeling in Speech Recognition. IEEE Signal Process. Mag. 2012, 29, 82–97.

- Martin, O.; Kotsia, I.; Macq, B.; Pitas, I. The eNTERFACE’05 audio-visual emotion database. In Proceedings of the 22nd International Conference on Data Engineering Workshops (ICDEW’06), Atlanta, GA, USA, 3–7 April 2006; p. 8.

- Li, J.; Deng, L.; Haeb-Umbach, R.; Gong, Y. Robust Automatic Speech Recognition: A Bridge to Practical Applications; Academic Press: Cambridge, MA, USA, 2015.

- Williams, C.E.; Stevens, K. Vocal correlates of emotional states. Speech Eval. Psychiatry 1981, 52, 221–240.

- Schuller, B.W. Speech emotion recognition: Two decades in a nutshell, benchmarks, and ongoing trends. Commun. ACM 2018, 61, 90–99.

- France, D.J.; Shiavi, R.G.; Silverman, S.; Silverman, M.; Wilkes, M. Acoustical properties of speech as indicators of depression and suicidal risk. IEEE Trans. Biomed. Eng. 2000, 47, 829–837.

- Hansen, J.H.; Cairns, D.A. Icarus: Source generator based real-time recognition of speech in noisy stressful and lombard effect environments. Speech Commun. 1995, 16, 391–422.

- Ang, J.; Dhillon, R.; Krupski, A.; Shriberg, E.; Stolcke, A. Prosody-based automatic detection of annoyance and frustration in human-computer dialog. In Proceedings of the INTERSPEECH, Denver, CO, USA, 16–20 September 2002; pp. 2037–2040.

- Cohen, R. A computational theory of the function of clue words in argument understanding. In Proceedings of the 10th International Conference on Computational Linguistics and 22nd Annual Meeting of the Association for Computational Linguistics, Stanford University, Stanford, CA, USA, 2–6 July 1984; pp. 251–258.

- Deng, J.; Frühholz, S.; Zhang, Z.; Schuller, B. Recognizing emotions from whispered speech based on acoustic feature transfer learning. IEEE Access 2017, 5, 5235–5246.

- Guo, S.; Feng, L.; Feng, Z.-B.; Li, Y.-H.; Wang, Y.; Liu, S.-L.; Qiao, H. Multi-view laplacian least squares for human emotion recognition. Neurocomputing 2019, 370, 78–87.

- Grosz, B.J.; Sidner, C.L. Attention, intentions, and the structure of discourse. Comput. Linguist. 1986, 12, 175–204.

- Dellaert, F.; Polzin, T.; Waibel, A. Recognizing emotion in speech. In Proceedings of the Fourth International Conference on Spoken Language Processing. ICSLP’96, Philadelphia, PA, USA, 3–6 October 1996; pp. 1970–1973.

- Burmania, A.; Busso, C. A Stepwise Analysis of Aggregated Crowdsourced Labels Describing Multimodal Emotional Behaviors. In Proceedings of the INTERSPEECH, Stockholm, Sweden, 20–24 August 2017; pp. 152–156.

- Lee, S.-W. The generalization effect for multilingual speech emotion recognition across heterogeneous languages. In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 5881–5885.

- Ashhad, S.; Kam, K.; Del Negro, C.A.; Feldman, J. Breathing rhythm and pattern and their influence on emotion. Annu. Rev. Neurosci. 2022, 45, 223–247.

- Du, G.; Long, S.; Yuan, H. Non-contact emotion recognition combining heart rate and facial expression for interactive gaming environments. IEEE Access 2020, 8, 11896–11906.

- Verkruysse, W.; Svaasand, L.O.; Nelson, J. Remote plethysmographic imaging using ambient light. Opt. Express 2008, 16, 21434–21445.

- Qing, C.; Qiao, R.; Xu, X.; Cheng, Y. Interpretable emotion recognition using EEG signals. IEEE Access 2019, 7, 94160–94170.

- Theorell, T.; Ahlberg-Hulten, G.; Jodko, M.; Sigala, F.; De La Torre, B. Influence of job strain and emotion on blood pressure in female hospital personnel during workhours. Scand. J. Work Environ. Health 1993, 19, 313–318.

- Nouman, M.; Khoo, S.Y.; Mahmud, M.P.; Kouzani, A. Recent Advances in Contactless Sensing Technologies for Mental Health Monitoring. IEEE Internet Things J. 2021, 9, 274–297.

- Boiten, F. The effects of emotional behaviour on components of the respiratory cycle. Biol. Psychol. 1998, 49, 29–51.

- Yasuma, F.; Hayano, J. Respiratory sinus arrhythmia: Why does the heartbeat synchronize with respiratory rhythm? Chest 2004, 125, 683–690.

- Li, C.; Cummings, J.; Lam, J.; Graves, E.; Wu, W. Radar remote monitoring of vital signs. IEEE Microw. Mag. 2009, 10, 47–56.

- Li, H.; Shrestha, A.; Heidari, H.; Le Kernec, J.; Fioranelli, F. Bi-LSTM network for multimodal continuous human activity recognition and fall detection. IEEE Sens. J. 2019, 20, 1191–1201.

- Ren, L.; Kong, L.; Foroughian, F.; Wang, H.; Theilmann, P.; Fathy, A. Comparison study of noncontact vital signs detection using a Doppler stepped-frequency continuous-wave radar and camera-based imaging photoplethysmography. IEEE Trans. Microw. Theory Technol. 2017, 65, 3519–3529.

- Gu, C.; Wang, G.; Li, Y.; Inoue, T.; Li, C. A hybrid radar-camera sensing system with phase compensation for random body movement cancellation in Doppler vital sign detection. IEEE Trans. Microw. Theory Technol. 2013, 61, 4678–4688.

- James, W. The Principles of Psychology; Cosimo, Inc.: New York, NY, USA, 2007; Volume 1.

- Petrantonakis, P.C.; Hadjileontiadis, L. Emotion recognition from brain signals using hybrid adaptive filtering and higher order crossings analysis. IEEE Trans. Affect. Comput. 2010, 1, 81–97.

- Katsigiannis, S.; Ramzan, N. DREAMER: A database for emotion recognition through EEG and ECG signals from wireless low-cost off-the-shelf devices. IEEE J. Biomed. Health Inform. 2017, 22, 98–107.

- Wen, W.; Liu, G.; Cheng, N.; Wei, J.; Shangguan, P.; Huang, W. Emotion recognition based on multi-variant correlation of physiological signals. IEEE Trans. Affect. Comput. 2014, 5, 126–140.

- Jerritta, S.; Murugappan, M.; Nagarajan, R.; Wan, K. Physiological signals based human emotion recognition: A review. In Proceedings of the 2011 IEEE 7th International Colloquium on Signal Processing and Its Applications, Penang, Malaysia, 4–6 March 2011; pp. 410–415.

- Kushki, A.; Fairley, J.; Merja, S.; King, G.; Chau, T. Comparison of blood volume pulse and skin conductance responses to mental and affective stimuli at different anatomical sites. Physiol. Meas. 2011, 32, 1529.

- Lim, J.Z.; Mountstephens, J.; Teo, J. Emotion recognition using eye-tracking: Taxonomy, review and current challenges. Sensors 2020, 20, 2384.

- Ekman, P. The argument and evidence about universals in facial expressions. In Handbook of Social Psychophysiology; John Wiley & Sons: Hoboken, NJ, USA, 1989; Volume 143, p. 164.

- Li, S.; Kang, X.; Fang, L.; Hu, J.; Yin, H. Pixel-level image fusion: A survey of the state of the art. Inf. Fusion 2017, 33, 100–112.

- Cai, H.; Qu, Z.; Li, Z.; Zhang, Y.; Hu, X.; Hu, B. Feature-level fusion approaches based on multimodal EEG data for depression recognition. Inf. Fusion 2020, 59, 127–138.

- Ho, T.K.; Hull, J.J.; Srihari, S. Decision combination in multiple classifier systems. IEEE Trans. Pattern Anal. Mach. Intell. 1994, 16, 66–75.

- Aziz, A.M. A new adaptive decentralized soft decision combining rule for distributed sensor systems with data fusion. Inf. Sci. 2014, 256, 197–210.
