

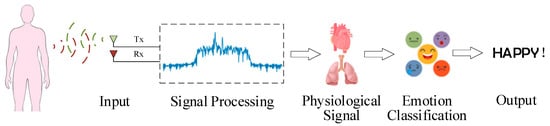

The rapid development of sensors and information technology has made it possible for machines to recognize and analyze human emotions. Emotion recognition is an important research direction in various fields. Human emotions have many manifestations. Therefore, emotion recognition can be realized by analyzing facial expressions, speech, behavior, or physiological signals. These signals are collected by different sensors. Correct recognition of human emotions can promote the development of affective computing.

- sensors for emotion recognition

- emotion models

- emotional signal processing

1. Introduction

Emotion is a comprehensive manifestation of people’s physiological and psychological states; emotion recognition was systematically proposed in the 1990s [1]. With the rapid development of science and technology, emotion recognition has been widely used in various fields, such as human-computer interaction (HCI) [2], medical health [3], Internet education [4], security monitoring [5], intelligent cockpits [6], psychological analysis [7], and the entertainment industry [8].

Emotion recognition can be realized through different detection methods and different sensors. Sensors are combined with advanced algorithm models and rich data to form human-computer interaction systems [9,10] or robot systems [11]. In medicine and health care [12], emotion recognition can be used to assess a patient’s psychological state or to assist treatment, improving medical efficiency and the patient experience. In Internet education [13], it can be used to monitor students’ learning status and knowledge acceptance and, combined with relevant reminders, to improve learning efficiency. In criminal interrogation [14], it can be used to detect lies (authenticity testing). In intelligent cockpits [15], it can be used to detect the drowsiness and mental state of the driver to improve driving safety. In psychoanalysis [16], it can help analyze whether a person has autism. The technique can also be applied to recognize the emotions of the elderly, infants, and people with particular diseases who cannot clearly express their emotions [17,18].

2. Emotion Models

The definition of emotion is the basis of emotion recognition. The basic concept of emotion was proposed by Ekman in the 1970s [19]. At present, there are two mainstream emotion models: the discrete emotion model and the dimensional emotion model.

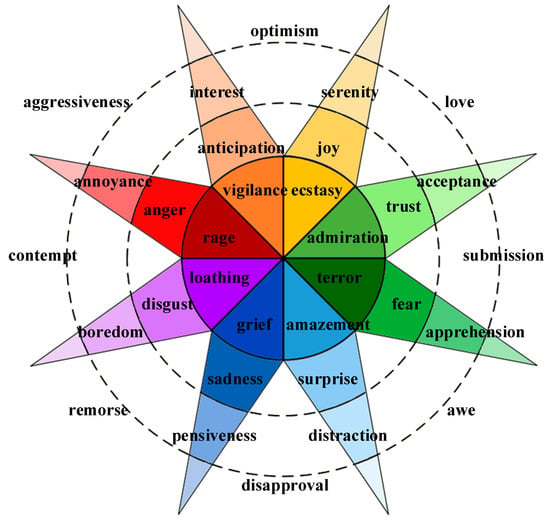

2.1. Discrete Emotion Model

Darwinian evolution [20] holds that emotions are primitive or fundamental. Each emotion is considered to correspond to a discrete, elementary response or action tendency. The discrete emotion model divides human emotions into a limited number of categories [21], mainly happiness, sadness, fear, anger, disgust, and surprise. Depending on the theory, there are two to eight basic emotions. However, these discrete emotion theories share certain features. They hold that emotions are mental and physiological processes; are caused by the awareness of developmental events; induce changes in the body’s internal and external signals; and are related to a fixed set of actions or tendencies. Ekman proposed seven characteristics to distinguish basic emotions from other emotional phenomena: automatic appraisal; specific antecedent events; presence in other primates; rapid onset; short duration; unconscious or involuntary appearance; and reflection in distinctive physiological systems such as the nervous system and facial expressions. R. Plutchik proposed eight basic emotions and distinguished them by intensity, forming Plutchik’s wheel model [22]. It is a well-known discrete emotion model, shown in Figure 1 (adapted from [22]).

Figure 1. Plutchik’s wheel model.

2.2. Dimensional Emotion Model

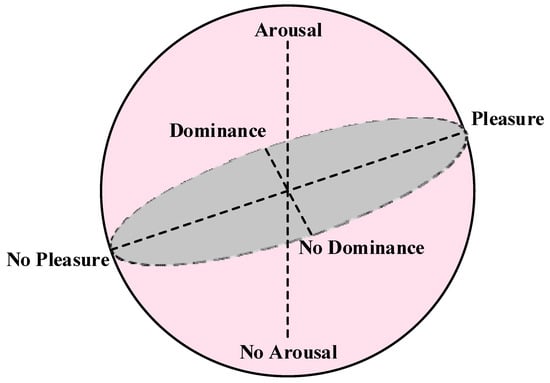

Dimensional emotion models view emotions as vectors within a more fundamental dimensional space. This enables complex emotions to be studied and measured in a small number of dimensions. Core emotions are generally expressed in two-dimensional or three-dimensional space. The two-dimensional model is usually the arousal-valence model. Valence reflects the positive or negative evaluation of an emotion and the degree of pleasure the participant feels. Arousal reflects the intensity or activation of an emotion in the body. The level of arousal reflects the individual’s will, and low arousal means less energy. However, two-dimensional models cannot distinguish core emotions that have the same degree of arousal and valence. For example, both anger and fear have high arousal and low valence. Therefore, a new dimension needs to be introduced to distinguish these emotions.

The best-known three-dimensional emotion model is the pleasure, arousal, and dominance (PAD) model [23], which Mehrabian and Russell proposed on the basis of environmental psychology methods [24] and the feeling-thinking-acting model [25], as shown in Figure 2 (adapted from [23]).

Figure 2. PAD 3D emotion model.

Dominance represents control or position, and indicates whether a certain emotion is submissive. It is worth noting that the dimensional emotion model can accurately identify core emotions. However, for some complex emotions, the dimensional emotion model loses some details.
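The role of the dominance axis can be illustrated with a small sketch. The prototype coordinates below are hypothetical values chosen only for illustration (they are not taken from [23]), and `nearest_emotions` is an assumed helper name. The sketch assigns a measured point to the nearest prototype, first using only valence and arousal and then using all three PAD axes.

```python
import math

# Hypothetical prototype coordinates in [-1, 1], ordered as
# (pleasure/valence, arousal, dominance). Illustrative only, not from [23].
PAD_PROTOTYPES = {
    "anger": (-0.6, 0.7, 0.6),
    "fear": (-0.6, 0.7, -0.6),
    "joy": (0.8, 0.5, 0.4),
    "sadness": (-0.6, -0.4, -0.3),
}


def nearest_emotions(point, dims):
    """Return the label(s) whose prototype is closest to `point`,
    comparing only the first `dims` axes."""
    distances = {
        label: math.dist(point[:dims], proto[:dims])
        for label, proto in PAD_PROTOTYPES.items()
    }
    best = min(distances.values())
    return sorted(
        label for label, d in distances.items() if math.isclose(d, best)
    )


sample = (-0.5, 0.8, -0.7)  # unpleasant, highly aroused, low dominance
print(nearest_emotions(sample, dims=2))  # ['anger', 'fear']: tie in 2D
print(nearest_emotions(sample, dims=3))  # ['fear']: dominance breaks the tie
```

With these assumed prototypes, anger and fear coincide in the valence-arousal plane, so the two-dimensional lookup cannot separate them, while the third axis does.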

3. Sensors for Emotion Recognition

The sensors used for emotion recognition mainly include visual sensors, audio sensors, radar sensors, and other physiological signal sensors, which can collect signals of different dimensions and achieve emotional analysis through some algorithms. Different sensors have different applications in emotion recognition. The advantages and disadvantages of different sensors for emotion recognition are shown in Table 1.

Table 1. Advantages and disadvantages of different sensors for emotion recognition.

| Sensors | Advantages | Disadvantages |
|---|---|---|
| Visual sensor | Simple data collection; high scalability | Restricted by light; easy to cause privacy leakage [26] |
| Audio sensor | Low cost; wide range of applications | Lack of robustness for complex sentiment analysis |
| Radar sensor | Remote monitoring of physiological signals | Radial movement may cause disturbance |
| Other physiological sensors | Ability to monitor physiological signals representing real emotion | Invasive, requires wearing close to the skin surface [27] |
| Multi-sensor fusion | Richer collected information; higher robustness | Multi-channel information needs to be synchronized; the follow-up calculation is relatively large |

3.1. Visual Sensor

Emotion recognition based on visual sensors is one of the most common emotion recognition methods. It has the advantages of low cost and simple data collection. At present, visual sensors are mainly used either for facial expression recognition (FER) [28,29,30] to detect emotion or for remote photoplethysmography (rPPG) to detect heart rate [31,32]. The accuracy of both methods drops sharply as the light intensity decreases.

The facial expression recognition process is shown in Figure 3. Facial expressions can intuitively reflect people’s emotions. It is difficult for machines to capture the details of expressions like humans [33]. Facial expressions are easy to hide, which leads to emotion recognition errors [34]. For example, in some social activities, we usually politely smile even though we are not in a happy mood [35].

Figure 3. Facial expression recognition process.

Different individuals have different skin colors, looks, and facial features [36,37], which poses challenges to the accuracy of classification. Facial features of the same emotion can differ between individuals, while the changes between different emotions of the same individual can be small and not very obvious [38]. Therefore, camera-based facial expression recognition faces the classification challenge of large intra-class distance and small inter-class distance. It is also difficult to recognize emotions effectively when the face is occluded (for example, by a mask) or captured from different shooting angles [39].

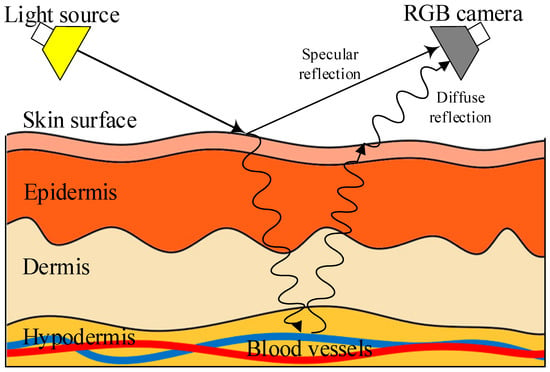

Photoplethysmography (PPG) is an optical technology for the non-invasive detection of various vital signs, which was first proposed in the 1930s [40]. PPG is widely used in the detection of physiological signals in personal portable devices (smart wristbands, smart watches, etc.) [41,42]. The successful application of PPG has led to the rapid development of remote photoplethysmography (rPPG). A multi-wavelength RGB camera is used by rPPG technology to identify minute variations in skin color on the human face caused by changes in blood volume during a heartbeat [43], as shown in Figure 4.

Figure 4. Schematic diagram of rPPG technology.

The rPPG technology can be used to obtain the degree of peripheral vascular constriction and thereby analyze the participant’s emotions. Peripheral vasoconstriction is considered to be a defensive physiological response. When people are in a state of pain, hunger, fear, or anger, the constriction of peripheral blood vessels is enhanced. Conversely, in a calm or relaxed state, this response weakens.

With improvements in hardware and algorithms, rPPG technology can also realize remote non-contact monitoring and estimation of heart rate [44], respiratory rate [45], blood pressure [46], and other signals. Emotion recognition is performed after analyzing a large amount of monitoring data. These signals can separate emotions into only a few types and intensities, and errors arise when many types of emotion must be recognized. It is necessary to combine other physiological information to improve the accuracy of emotion recognition [47].
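As a rough illustration of how a heart rate might be read out of an rPPG trace, the sketch below takes a sequence of per-frame mean green-channel values from a face region and picks the strongest frequency in a plausible pulse band. It is a minimal sketch under stated assumptions, not the method of the cited works: the function names, the choice of the green channel, the 0.7 to 3.0 Hz band (42 to 180 beats per minute), and the synthetic input are all assumptions, and face detection, motion compensation, and filtering are omitted.

```python
import math


def dominant_frequency_hz(signal, fs, f_lo, f_hi, step=0.01):
    """Scan [f_lo, f_hi] and return the frequency (Hz) whose
    discrete Fourier component has the largest power."""
    mean = sum(signal) / len(signal)
    centered = [s - mean for s in signal]  # remove the DC offset
    best_f, best_power = f_lo, -1.0
    n_steps = int(round((f_hi - f_lo) / step))
    for k in range(n_steps + 1):
        f = f_lo + k * step
        w = 2 * math.pi * f / fs
        re = sum(x * math.cos(w * i) for i, x in enumerate(centered))
        im = sum(x * math.sin(w * i) for i, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_f, best_power = f, power
    return best_f


def estimate_heart_rate_bpm(green_means, fps):
    """Estimate heart rate from per-frame mean green intensity.
    The 0.7-3.0 Hz search band is an assumption."""
    return 60.0 * dominant_frequency_hz(green_means, fps, 0.7, 3.0)


# Synthetic 10 s trace at 30 frames/s: slow illumination drift plus
# a weak 1.2 Hz (72 beats/min) pulse component.
fps = 30
green = [
    120
    + 0.5 * math.sin(2 * math.pi * 0.1 * i / fps)
    + 0.2 * math.sin(2 * math.pi * 1.2 * i / fps)
    for i in range(10 * fps)
]
bpm = estimate_heart_rate_bpm(green, fps)
print(round(bpm))
```

Restricting the search to a physiological band is what keeps the larger, slower illumination drift from being mistaken for the pulse.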

3.2. Audio Sensor

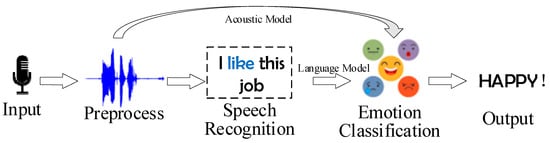

Language is one of the most important components of human culture. People can express themselves or communicate with others through language. Speech recognition [48] has promoted the development of speech emotion recognition (SER) [49]. Human speech contains rich information that can be used for emotion recognition [50,51]. Understanding the emotion in this information is essential for artificial intelligence to engage in effective dialog. SER can be used in call center dialog, automatic response systems, autism diagnosis, etc. [52,53,54]. SER combines acoustic feature extraction [55] with linguistic labeling [56]. The process of SER is shown in Figure 5.

Figure 5. The process of SER.

In the preprocessing stage, the input signal is denoised, enhanced, and segmented [57]; feature extraction and classification are then performed [58]. The language model [59] can identify emotional expressions with specific semantic contributions. The acoustic model can distinguish different emotions contained in the same sentence by analyzing prosodic or spectral features [60]. Combining these two models can improve the accuracy of SER.
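The segmentation and acoustic feature steps can be sketched as follows. This is a minimal illustration, not the pipeline of the cited works: the function names and the 25 ms frame and 10 ms hop are assumptions, and the two features shown (short-time energy as a loudness-related prosodic cue, zero-crossing rate as a crude indicator of spectral content) stand in for the richer pitch and spectral features a real SER system would use.

```python
import math


def frame_signal(samples, frame_len, hop):
    """Split a signal into overlapping fixed-length frames."""
    last_start = len(samples) - frame_len
    return [samples[i:i + frame_len] for i in range(0, last_start + 1, hop)]


def short_time_energy(frame):
    """Mean squared amplitude of one frame."""
    return sum(x * x for x in frame) / len(frame)


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum(
        1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0)
    )
    return crossings / (len(frame) - 1)


def frame_features(samples, sample_rate, frame_ms=25, hop_ms=10):
    """Return one (energy, zero-crossing rate) pair per frame.
    Frame and hop durations are assumed defaults."""
    frame_len = int(sample_rate * frame_ms / 1000)
    hop = int(sample_rate * hop_ms / 1000)
    return [
        (short_time_energy(f), zero_crossing_rate(f))
        for f in frame_signal(samples, frame_len, hop)
    ]


# Synthetic input: 0.1 s of a 200 Hz tone sampled at 8 kHz.
sample_rate = 8000
tone = [math.sin(2 * math.pi * 200 * i / sample_rate) for i in range(800)]
features = frame_features(tone, sample_rate)
print(len(features), features[0])
```

The per-frame feature pairs would then be passed to a classifier, which is outside the scope of this sketch.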

Understanding the emotion in speech is a complex process. The different speaking styles of different people introduce acoustic variability, which directly affects speech feature labeling and extraction [61]. The same sentence may contain different emotions [62], and some specific emotional differences depend on the speaker’s local culture or living environment, which also poses challenges for SER.

3.3. Radar Sensor

Different emotions cause a series of physiological responses, such as changes in respiratory rate [63], heart rate [64,65], brain waves [66], blood pressure [67], etc. For example, the excitement caused by happiness, anger, or anxiety can lead to an increased heart rate [68]. Positive emotions can increase respiratory rate, and depressive emotions tend to inhibit breathing [69]. Respiratory rate also affects heart rate variability (HRV), which decreases when exhaling and increases when inhaling [70]. Currently, radar technology is widely used in remote vital signs detection [71] and wireless sensing [72]. Radar sensors can use the echo signal of the target to analyze the chest micro-motion caused by breathing and heartbeats, which allows these physiological signals to be acquired remotely. The overall process of emotion recognition based on a radar sensor is shown in Figure 6.

Figure 6. Process of emotion recognition based on radar sensor.

Compared with visual sensors, emotion recognition based on radar sensors is not restricted by light intensity [73]. However, in real environments, radar echo signals are affected by noise, especially by radial Doppler motion toward or away from the radar [74], which reduces the accuracy of emotion analysis.
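The extraction of breathing and heartbeat rates from chest micro-motion can be sketched as below. The sketch assumes an already unwrapped echo phase signal and uses the standard round-trip relation between phase and displacement, d = λφ / (4π). The function names, the 5 mm wavelength, the frequency bands (0.1 to 0.5 Hz for respiration, 0.8 to 2.0 Hz for heartbeat), and the synthetic input are assumptions made for illustration; body-motion cancellation, which the text identifies as the main practical difficulty, is not addressed.

```python
import math


def phase_to_displacement_m(phases_rad, wavelength_m):
    """Convert unwrapped echo phase to radial displacement.
    The round trip changes phase by 4*pi per wavelength of motion."""
    return [wavelength_m * p / (4 * math.pi) for p in phases_rad]


def peak_frequency_hz(signal, fs, f_lo, f_hi, step=0.01):
    """Return the frequency in [f_lo, f_hi] with the largest
    discrete Fourier power."""
    mean = sum(signal) / len(signal)
    centered = [s - mean for s in signal]
    best_f, best_power = f_lo, -1.0
    for k in range(int(round((f_hi - f_lo) / step)) + 1):
        f = f_lo + k * step
        w = 2 * math.pi * f / fs
        re = sum(x * math.cos(w * i) for i, x in enumerate(centered))
        im = sum(x * math.sin(w * i) for i, x in enumerate(centered))
        if re * re + im * im > best_power:
            best_f, best_power = f, re * re + im * im
    return best_f


def vital_rates_per_minute(phases_rad, fs, wavelength_m):
    """Return (breaths/min, beats/min). Band limits are assumptions."""
    chest = phase_to_displacement_m(phases_rad, wavelength_m)
    breaths = 60.0 * peak_frequency_hz(chest, fs, 0.1, 0.5)
    beats = 60.0 * peak_frequency_hz(chest, fs, 0.8, 2.0)
    return breaths, beats


# Synthetic 20 s recording at 20 Hz: 2 mm breathing motion at 0.25 Hz
# plus 0.4 mm heartbeat motion at 1.2 Hz, seen by a 5 mm wavelength radar.
fs, wavelength = 20, 0.005
motion = [
    0.002 * math.sin(2 * math.pi * 0.25 * i / fs)
    + 0.0004 * math.sin(2 * math.pi * 1.2 * i / fs)
    for i in range(20 * fs)
]
phases = [4 * math.pi * d / wavelength for d in motion]
breaths, beats = vital_rates_per_minute(phases, fs, wavelength)
print(round(breaths), round(beats))
```

Because the heartbeat displacement is several times smaller than the breathing displacement, the two rates are searched in separate bands rather than taken from a single global spectral peak.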

3.4. Other Physiological Sensors

Emotions have been regarded as biological since ancient times. Excessive emotion is believed to affect the functioning of vital organs. Aristotle believed that the influence of emotions on physiology is reflected in changes in physiological states, such as a rapid heartbeat, body heat, or loss of appetite. William James first proposed the theory of the physiology of emotion [75]. He believed that external stimuli would trigger activity in the autonomic nervous system and create a physiological response in the brain. For example, when we feel happy, we laugh; when we feel scared, our hairs stand on end; when we feel sad, we cry.

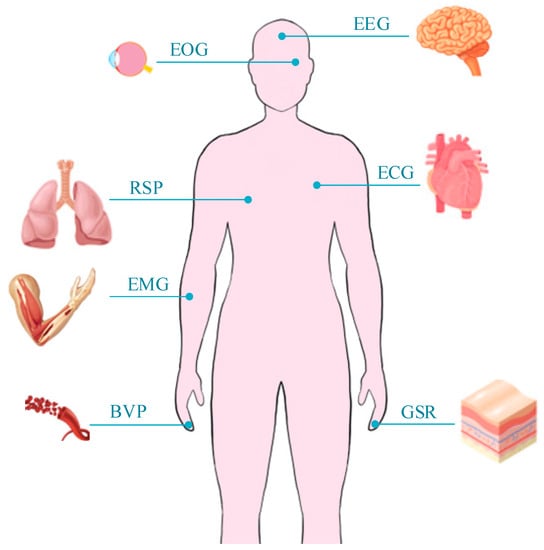

Human emotion is a spontaneous mental state, which is reflected in the physiological changes of the human body and significantly affects our consciousness [76]. Many other physiological signals in the human body can be used for emotion recognition, such as electroencephalogram (EEG) [66], electrocardiogram (ECG) [77], electromyogram (EMG) [78], galvanic skin response (GSR) [79], blood volume pulse (BVP) [80], and electrooculography (EOG) [81], as shown in Figure 7.

Figure 7. Physiological signals detected by other physiological sensors.

EEG measures the electrical activity of the brain through electrodes placed on the scalp. Many studies have shown that the prefrontal cortex, temporal lobe, and anterior cingulate gyrus of the brain are related to the control of emotions. Their levels of activity are associated with emotions such as anxiety, irritability, depression, worry, and resentment.

ECG is a method of electrical monitoring on the surface of the skin that detects the heartbeat, which is controlled by the body’s electrical signals. Heart rate and heart rate variability obtained through subsequent analysis are widely used in affective computing. Both are controlled by the sympathetic and parasympathetic nervous systems. The sympathetic nervous system can speed up the heart rate, which is reflected in greater psychological stress and activation. The parasympathetic nervous system is responsible for bringing the heart rate down to normal levels, putting the body in a more relaxed state.
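Heart rate and heart rate variability are typically derived from the intervals between successive R peaks of the ECG. The sketch below computes three common time-domain quantities from a list of RR intervals: mean heart rate, SDNN (standard deviation of the intervals), and RMSSD (root mean square of successive differences). The function name and the sample intervals are illustrative assumptions, and R-peak detection itself is not shown.

```python
import math
import statistics


def hrv_metrics(rr_ms):
    """Time-domain HRV summary from RR intervals in milliseconds."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    successive_diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return {
        # Mean heart rate from the mean interval (60000 ms per minute).
        "mean_hr_bpm": 60000.0 / statistics.fmean(rr_ms),
        # SDNN: sample standard deviation of all intervals.
        "sdnn_ms": statistics.stdev(rr_ms),
        # RMSSD: root mean square of successive interval differences.
        "rmssd_ms": math.sqrt(
            statistics.fmean([d * d for d in successive_diffs])
        ),
    }


# Hypothetical RR intervals (ms) for illustration only.
rr = [800, 810, 790, 800]
metrics = hrv_metrics(rr)
print({name: round(value, 2) for name, value in metrics.items()})
```

Features such as these, computed over sliding windows, are what an affective computing system would feed to a classifier rather than the raw ECG waveform.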

EMG measures the degree of muscle activation by collecting the voltage difference generated during muscle contraction. The current EMG signal measurement technology can be divided into two types. The first is to study facial expressions by measuring facial muscles. The second is to place electrodes on the body to recognize emotional movements.

GSR is another signal commonly used for emotion recognition. Human skin is normally an insulator. When sweat glands secrete sweat, the electrical conductivity of the skin changes. Therefore, GSR can reflect how much a person is sweating. GSR is usually measured on the palms or the soles of the feet, where sweat glands are thought to best reflect changes in emotion. When a person is in an anxious or tense mood, the sweat glands usually secrete more sweat, which causes a greater change in current.

Related physiological signals also include BVP, EOG, etc. These signals all change with emotional changes, and they are not subject to conscious control [82]. Therefore, they can be measured by different physiological sensors for emotion recognition. These physiological sensors can accurately and quickly obtain real human physiological signals. However, physiological sensors other than visual, audio, and radar sensors usually need to touch the skin or be worn to extract physiological signals, which affects people’s daily comfort (most people will not accept this monitoring method). Contact sensors are limited by weight and size [27]. These contact devices may also cause tension and anxiety, which affects the accuracy of emotion recognition.

3.5. Multi-Sensor Fusion

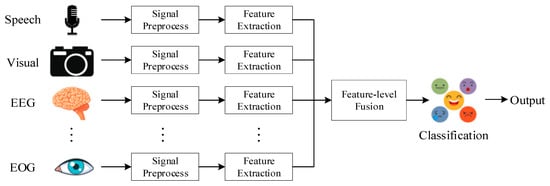

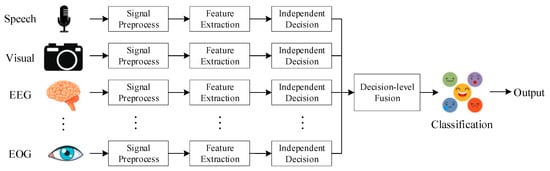

There are certain deficiencies in single-modal emotion recognition, and it is usually unable to accurately identify complex emotions. The multi-modal emotion recognition method refers to the use of signals obtained by multiple sensors to complement each other and obtain more reliable recognition results. Multi-modal approaches can promote the development of emotion recognition. Multi-modal emotion recognition can often achieve the best recognition performance, but the computational complexity will increase due to the excessive number of channels. There are higher requirements for the collection of multi-modal datasets. Multi-modal emotion recognition has different fusion strategies, which can be mainly divided into pixel-level fusion, feature-level fusion, and decision-level fusion.

Pixel-level fusion [83] refers to the direct fusion of the original data; the semantic information and noise of the signal will be superimposed, which will affect the classification effect after fusion. Processing time is wasted when there is too much redundant information.

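The feature-level fusion [84] process is shown in Figure 8. Feature-level fusion occurs in the early stage of the fusion process. Features are extracted from the different input signals and combined into a high-dimensional feature vector. Finally, a classifier outputs the result. Feature-level fusion retains most of the important information and can greatly reduce computational cost. However, when the amount of data is small or some details are missing, the final accuracy decreases.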

Figure 8. Feature-level fusion.
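Feature-level fusion can be sketched as standardizing each modality's features and concatenating them into one vector per sample. This is a minimal sketch under stated assumptions: the function names, the per-column z-score scaling, and the example features (two facial features and one heart rate variability feature) are hypothetical, and the classifier that would consume the fused vectors is not shown.

```python
import statistics


def standardize_columns(rows):
    """Z-score each feature column so that modalities measured on
    different scales contribute comparably after concatenation."""
    columns = list(zip(*rows))
    means = [statistics.fmean(c) for c in columns]
    # A constant column has zero spread; divide by 1.0 instead.
    stds = [statistics.pstdev(c) or 1.0 for c in columns]
    return [
        [(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows
    ]


def fuse_features(*modalities):
    """Concatenate per-sample feature vectors from several modalities.
    Each modality is a list of feature lists in the same sample order."""
    if len({len(m) for m in modalities}) != 1:
        raise ValueError("all modalities must cover the same samples")
    scaled = [standardize_columns(m) for m in modalities]
    return [sum(parts, []) for parts in zip(*scaled)]


# Hypothetical features for two samples.
face = [[0.1, 0.9], [0.3, 0.5]]  # e.g. two facial-expression features
hrv = [[40.0], [60.0]]           # e.g. one heart rate variability feature
fused = fuse_features(face, hrv)
print(fused)
```

The length check reflects the limitation noted above: every sample needs features from every modality, so a missing channel prevents the fused vector from being built.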

Figure 9. Decision-level fusion.

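The advantage of decision-level fusion is that independent feature extraction and classification methods can be set for different signals. It places lower requirements on the completeness of the multi-modal data. Decision-level fusion has higher robustness and better classification results.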
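Decision-level fusion can be sketched as combining the class probabilities that each modality's own classifier produces. The weighted averaging rule, the function name, and the example probabilities below are assumptions chosen for illustration; the source does not specify a particular combination rule. A modality that produced no output is passed as `None` and simply skipped, which illustrates the lower requirement on data completeness.

```python
def fuse_decisions(per_modality_probs, weights=None):
    """Weighted average of per-modality class probabilities.

    per_modality_probs maps a modality name to a dict of
    label -> probability, or to None when that modality is missing.
    Returns (winning label, fused probabilities).
    """
    available = {
        name: probs
        for name, probs in per_modality_probs.items()
        if probs is not None
    }
    if not available:
        raise ValueError("no modality produced a decision")
    weights = weights or {}
    total_weight = sum(weights.get(name, 1.0) for name in available)
    labels = sorted({label for p in available.values() for label in p})
    fused = {
        label: sum(
            weights.get(name, 1.0) * probs.get(label, 0.0)
            for name, probs in available.items()
        ) / total_weight
        for label in labels
    }
    return max(fused, key=fused.get), fused


# Hypothetical classifier outputs; the radar channel is unavailable.
decisions = {
    "face": {"happy": 0.6, "sad": 0.4},
    "speech": {"happy": 0.3, "sad": 0.7},
    "radar": None,
}
label_equal, _ = fuse_decisions(decisions)
label_weighted, _ = fuse_decisions(decisions, weights={"face": 3.0})
print(label_equal, label_weighted)
```

In this example the result changes from "sad" with equal weights to "happy" when the face classifier is trusted three times as much, which shows how per-modality reliability can be encoded without retraining any single classifier.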

This entry is adapted from the peer-reviewed paper 10.3390/s23052455
