Please note this is an old version of this entry, which may differ significantly from the current revision.

Subjects:

Dermatology

Thanks to the rapid development of computer-based systems and deep-learning-based algorithms, artificial intelligence (AI) has long been integrated into the healthcare field. AI is also particularly helpful in image recognition, surgical assistance and basic research. Due to the unique nature of dermatology, AI-aided dermatological diagnosis based on image recognition has become a modern focus and future trend.

- deep learning

- pattern recognition

- dermatology

- skin cancer

- intelligent diagnosis

- 3D imaging

1. Relevant Concepts of AI in Dermatology

Knowledge representation and knowledge engineering are central to classical AI research [25,40]. Machine learning and its sub-field deep learning are the foundations of the AI framework. “Machine learning” refers to the automatic improvement of AI algorithms through experience and large volumes of historical data (training datasets); the resulting models allow the algorithm to generate predictions and make decisions without being explicitly programmed [41]. “Deep learning” is a branch of machine learning founded on artificial neural networks (ANNs) and representation learning. An ANN is a mathematical model that simulates the structure and function of biological neural networks; it is an adaptive system with learning capabilities. The performance of an ANN depends on the number and structure of its neural layers and on its training dataset [42,43]. Deep learning is already widely used to detect and classify skin cancers and other skin lesions [44,45,46]. The most prominent deep learning networks can be divided into recursive neural networks (RvNNs), recurrent neural networks (RNNs), Kohonen self-organizing neural networks (KNNs), generative adversarial neural networks (GANs) and convolutional neural networks (CNNs) [47]. CNNs, a subtype of ANNs, are most frequently used for image processing and detection in medicine, particularly in dermatology, pathology and radiology [48]. Currently, the most widely implemented CNN architectures in dermatology are GoogleNet, Inception-V3, Inception-V4, ResNet, Inception-ResNet V2 and DenseNet [47]. Because image sets are the raw data source for training CNN architectures, a large number of high-quality images is decisive for the diagnostic accuracy, sensitivity and specificity of the final trained AI algorithm [49]. An image set is an object used to manage image data; it contains a description of the images, the locations of the images and the number of images in the set [50].
The most common image sets used to train AI CAD systems in dermatology today are the ISIC archives (2016–2021), HAM10000, BCN20000 and PH2 [51,52,53,54,55,56]. The concepts and components related to AI in dermatology are summarized in Table 1.

Table 1. Essential terminologies involved in AI in dermatology.

| Terminology | Paraphrase |
|---|---|
| Artificial Intelligence (AI) | The intelligence manifested by machines made by humans, i.e., the ability of a machine to simulate natural intelligence. |
| Knowledge Representation | The field of AI dedicated to representing information about the world in a form that a computer system can use to solve complex tasks, such as diagnosing a medical condition or holding a dialog in a natural language. |
| Representation Learning (Feature Learning) | A set of techniques that allows a system to automatically discover the representations needed for feature detection or classification from raw data. |
| Machine Learning | The study of computer algorithms that improve automatically through experience. The algorithms use computational methods to learn from data without being explicitly programmed. |
| Deep Learning | A branch of machine learning methods based on artificial neural networks with representation learning. |
| Supervised Learning | The machine learning task of learning a function that maps an input to an output based on example input–output pairs. It infers a function from labeled training data consisting of a set of training examples. |
| Transfer Learning | A machine learning technique that allows a model developed for one task to be transferred to another task after fine-tuning and augmentation. |
| Artificial Neural Networks (ANNs) | ANNs, usually simply called neural networks (NNs), are computing systems vaguely inspired by the biological neural networks that constitute animal brains. An ANN is based on a collection of connected units or nodes called artificial neurons, which loosely model the neurons in a biological brain. |
| Convolutional Neural Networks (CNNs) | CNNs are a class of feedforward neural networks whose artificial neurons respond to the surrounding units within a limited coverage area. They are most commonly applied to analyzing visual imagery. |
| Generative Adversarial Networks (GANs) | GANs are a method of unsupervised learning that learns by pitting two neural networks against each other. |
| Pattern Recognition | The automated recognition of patterns and regularities in data. The environment and objects are collectively referred to as patterns. |
| Image Set | An object that stores information about an image data set or a collection of image data sets. It contains image descriptions, locations of images and the number of images in the collection. |
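
As a rough illustration of the "Image Set" entry in Table 1, the sketch below models an object that holds a description, the image locations and the image count. The class name `ImageSet`, its fields and the file names are hypothetical and chosen only for this example; they do not correspond to any particular library or archive.

```python
from dataclasses import dataclass, field
from pathlib import Path
from typing import List


@dataclass
class ImageSet:
    """Hypothetical container mirroring the 'Image Set' row of Table 1."""

    description: str
    locations: List[Path] = field(default_factory=list)

    @property
    def count(self) -> int:
        # The number of images is derived from the stored locations.
        return len(self.locations)

    def add(self, location: str) -> None:
        # Register one more image by its file location.
        self.locations.append(Path(location))


# Illustrative file names only; they do not refer to real archive entries.
dermoscopy = ImageSet(description="Dermoscopic images of pigmented lesions")
dermoscopy.add("images/lesion_0001.jpg")
dermoscopy.add("images/lesion_0002.jpg")
print(dermoscopy.description, dermoscopy.count)
```

Deriving the count from the list of locations, rather than storing it separately, keeps the two pieces of information from drifting apart.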

2. The Implementation of AI in Dermatology

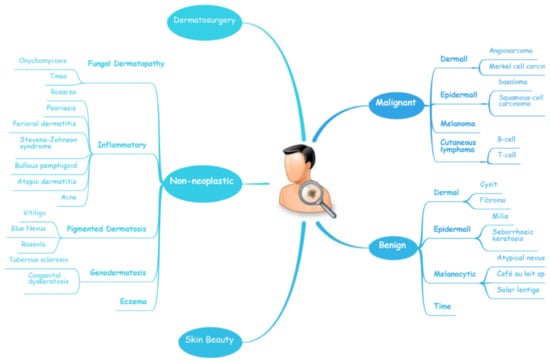

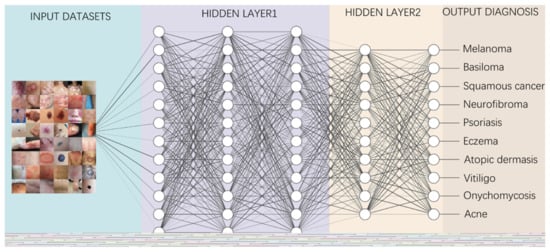

The diagnosis of skin diseases is mainly based on the characteristics of the lesions [57]. However, there are more than 2000 different types of dermatological diseases, and lesions of different diseases can look alike, which makes differential diagnosis difficult [58]. At present, the global shortage of dermatologists is worsening as the incidence of skin diseases remains high. Dermatologists are both scarce and unevenly distributed, especially in developing countries and remote areas, which urgently require more medical facilities, professional consultation and clinical assistance [59,60]. Rapid iteration in big data and image recognition technology, together with the widespread use of smartphones worldwide, may be creating the largest transformational opportunity for the diagnosis and treatment of skin diseases in this era [61,62]. In addition to addressing the needs of underserved areas and the poor, AI can now provide rapid diagnoses, leading to more diverse and accessible treatment approaches [63]. AI-aided systems and algorithms are expected to quickly become routine diagnostic and evaluation techniques. The morphological analysis of a lesion is the classic basis of dermatological diagnostics, and AI-based face recognition and aesthetic analysis have also matured and become more reliable [64,65]. Currently, some applications of AI in dermatology have already found their way into clinical practice. The specific implementations of AI in dermatology are visualized in a mind map (Figure 1) [53,66,67]. AI systems based on deep learning algorithms use abundant public skin lesion image datasets to distinguish between benign and malignant skin lesions. These datasets contain large numbers of original images in diverse modalities, such as dermoscopy, clinical photographs and histopathological images [68]. In addition, deep learning has been used to handle disagreements among human annotations of skin lesion images.
An ensemble of Bayesian fully convolutional networks (FCNs) trained with the ISIC archive was applied to lesion image segmentation by considering two major factors in the aggregation of multiple truth annotations. The FCNs implemented a learning scheme that is robust to annotation noise and leverages multiple experts’ opinions to improve generalization performance while using all available annotations efficiently [69]. Currently, the most representative and commonly used AI model is the CNN. It transmits input data through a series of interconnected nodes that resemble biological neurons. Each node is a unit of mathematical operation, a group of interconnected nodes in the network is called a layer and multiple layers build the overall framework of the network (Figure 2) [70,71]. Deep CNNs have also been applied to the automatic understanding of skin lesion images in recent years. Mirikharaji et al. proposed a new framework for training fully convolutional segmentation networks from a large number of cheap, unreliable annotations together with a small fraction of clean expert annotations, handling clean and noisy pixel-level annotations differently in the loss function. The results show that their spatially adaptive re-weighting method can significantly reduce the need for careful labelling of images without sacrificing segmentation accuracy [72].

Figure 1. A schematic illustrates the hierarchy of the implementation of AI in dermatology.

Figure 2. A diagram depicting how to perform classification tasks in an AI neural network.

Information from the image data set is transmitted through a structure composed of multiple layers of connected nodes. Each line is a weight connecting one layer to the next, and each circle represents an input, a neuron or an output. In convolutional neural networks, these layers include convolutional layers that act as filters. A network made up of many layered filters learns increasingly high-level representations of the image.
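
The idea of a convolutional layer acting as a filter, followed by weighted connections to an output layer, can be sketched in a few lines of plain Python. This is a toy illustration only: the 4×4 image, the edge filter and the dense-layer weights are hand-picked assumptions, whereas a real CNN learns its filters and weights from training images.

```python
import math


def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Keep positive filter responses and zero out the rest."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Toy 4x4 "image": dark left half, bright right half.
image = [[0, 0, 1, 1] for _ in range(4)]
# Hand-picked filter that responds to dark-to-bright vertical edges.
kernel = [[-1, 1], [-1, 1]]

features = relu(conv2d(image, kernel))
flat = [v for row in features for v in row]

# Hypothetical dense layer: one weight per (feature, class) connection.
weights = [[0.0] * len(flat), [0.5] * len(flat)]
scores = [sum(w * f for w, f in zip(row, flat)) for row in weights]
probabilities = softmax(scores)
print(features, probabilities)
```

The filter responds only in the column where the image changes from dark to bright, which is the sense in which a convolutional layer "filters" its input before later layers combine those responses.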

3. AI in Aid-Diagnosis and Multi-Classification for Skin Lesions

3.1. Multi-Classification for Skin Lesions in ISIC Challenges

In recent years, the classification of multiple skin lesions has become a hotspot with the increasing popularity of deep learning algorithms in medical image analysis. Previously, metadata such as anatomical site, age and gender were not included, even though doctors collect this information in daily clinical practice and it influences their diagnostic decisions. An algorithm or AI system that includes this information is therefore better able to reproduce the actual diagnostic scenario, and its diagnostic performance is more credible. The ISIC challenges consider AI systems that can identify many different pathologies and provide metadata for labelled cases, thus allowing a more realistic comparison between AI systems and clinical scenarios. Since the International Skin Imaging Collaboration (ISIC) challenge was first held in 2016, it has served as the benchmark for the diverse research groups working in this area. To date, its database has accumulated over 80,000 labelled training and testing images, which are openly accessible to all researchers and have been used to train algorithms to diagnose and classify various skin lesions [109]. In ISIC 2016–2018, subsets of the image datasets were divided into seven classes: (1) actinic keratosis and intraepithelial carcinoma, (2) basal cell carcinoma, (3) benign keratosis, (4) dermatofibroma, (5) melanocytic nevi, (6) melanoma and (7) vascular skin lesion. From 2019, atypical nevi were added as the eighth subset. Garcia-Arroyo and Garcia-Zapirain designed a CAD system that participated in the ISIC 2016 and 2017 challenges and was ranked 9th and 15th, respectively [110]. In 2018, Rezvantalab et al. investigated the effectiveness and capability of four pre-trained state-of-the-art architectures (DenseNet 201, ResNet 152, Inception v3 and Inception ResNet v2), trained with HAM10000 (which comprises a large part of the ISIC datasets) and PH2, in the classification of eight skin diseases.
Their overall results show that all deep learning models outperformed dermatologists (by at least 11%) [52]. Iqbal et al. proposed a deep convolutional neural network (DNN) model trained using the ISIC 2017–2019 datasets that classified skin lesions automatically and efficiently, with an area under the ROC curve (AUROC) of 0.964 [71]. Similarly, Lucius’ team developed a DNN trained with HAM10000 to classify seven types of skin lesions. Their statistics showed that the diagnostic accuracy of dermatologists improved significantly with the help of DNNs [111]. Minagawa et al. trained a DNN using the ISIC 2017, HAM10000 and Shinshu datasets to narrow the diagnostic accuracy gap for dermatologists facing patients from different regions [112]. Qin et al. established a skin lesion style-based generative adversarial network (GAN) and tested it on the ISIC 2018 dataset, showing that the GAN can efficiently generate high-quality images of skin lesions, which in turn improved the performance of the classification model [73]. Cano et al. applied cross-validated CNNs based on the NASNet architecture, trained with skin lesion images from the ISIC archive, to multiple skin lesion classification. Their excellent performance suggests that they can be used as a novel classification system for multiple classes of skin diseases [74]. Al-masni et al. integrated a deep learning full-resolution convolutional network and a convolutional neural network classifier for segmenting and classifying various skin lesions. The proposed integrated deep learning model was evaluated on the ISIC 2016–2018 datasets and achieved over 80% accuracy on all three for segmentation and discrimination among seven classes of skin lesions, with the highest accuracy of 89.28% on ISIC 2018 [113]. In 2018, Gessert et al. employed an ensemble of CNNs in the ISIC 2018 challenge and achieved second place [53].
The following year, they used a set of deep learning models, including EfficientNets, SENet and ResNeXt WSL, trained with the BCN20000 and HAM10000 datasets to classify skin lesions and to predict unknown classes by analyzing patients’ metadata. Their approach achieved first place in the ISIC 2019 challenge [54].
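
One of the results above is reported as an area under the ROC curve. As a minimal sketch of what that number measures, the function below computes it as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, counting ties as one half. The labels and scores are made-up illustrative values, not data from any of the cited studies.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of scores."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0      # positive ranked above negative
            elif p == n:
                wins += 0.5      # ties count as half
    return wins / (len(positives) * len(negatives))


# Made-up example: 1 = malignant, 0 = benign; scores are model outputs.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.1]
auc = roc_auc(labels, scores)
print(round(auc, 3))
```

A value of 1.0 means every malignant case is ranked above every benign one, while 0.5 corresponds to random ranking.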

In recent years, transfer learning has also been applied to the classification of multiple skin lesions. Transfer learning allows a model developed for one task to be transferred to another task after fine-tuning and augmentation. It is particularly helpful when training data are scarce. When lesion images are difficult to acquire, the model can first be trained on natural images and subsequently fine-tuned with an augmented lesion dataset to increase the accuracy and specificity of the algorithm, thereby improving its performance on image processing tasks. Singhal et al. used transfer learning to train four different state-of-the-art architectures with the ISIC 2018 dataset and demonstrated their practicability for the detection of skin lesions [114]. Barhoumi et al. trained content-based dermatological lesion retrieval (CBDLR) systems using transfer learning, and their results showed that these outperformed similar CBDLR systems using standard distances [75]. Several further studies have devised AI systems or architectures trained or tested on ISIC datasets and have achieved outstanding performance [23,68,76,77,78,79,80,115,116].
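
The core mechanism of transfer learning can be sketched with a toy example: a feature extractor whose weights are kept frozen stands in for a network pretrained on a large source dataset, and only a small new classification head is trained on a handful of labeled target samples. This shows the simplest variant (head-only training, not full fine-tuning of all layers), and every number below, including the frozen weights and the four samples, is a hypothetical placeholder.

```python
import math

# Stand-in for a pretrained feature extractor; these weights stay frozen.
FROZEN_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]


def extract_features(x):
    """Apply the frozen layer (linear map followed by ReLU)."""
    return [max(0.0, sum(w * v for w, v in zip(row, x)))
            for row in FROZEN_WEIGHTS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(samples, labels, epochs=500, lr=0.5):
    """Train only the new logistic-regression head on the target data."""
    feats = [extract_features(x) for x in samples]
    w, b = [0.0] * len(feats[0]), 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            grad = p - y  # gradient of the log loss w.r.t. the logit
            w = [wi - lr * grad * fi for wi, fi in zip(w, f)]
            b -= lr * grad
    return w, b


def predict(x, w, b):
    f = extract_features(x)
    return sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)


# Tiny made-up target dataset: 1 = lesion class A, 0 = lesion class B.
samples = [[2.0, 0.5], [1.5, 0.2], [0.3, 1.8], [0.1, 1.2]]
labels = [1, 1, 0, 0]
w, b = train_head(samples, labels)
print([round(predict(x, w, b), 2) for x in samples])
```

Because the frozen layer is never updated, only three parameters are learned here, which is why this approach can work with very little labeled data.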

The ISIC 2021 datasets have recently been released. In addition to the ISIC 2018, ISIC 2019 and ISIC 2020 melanoma datasets, the archive contains seven additional datasets with a total of approximately 30,000 images, such as Fitzpatrick 17k, PAD-UFES-20, Derm7pt and Dermofit Image. This greatly increases the richness and diversity of the ISIC 2021 archive and correlates the patient’s skin lesion condition with other disorders of the body, providing a basis for training future AI algorithms with more comprehensive coverage and higher diagnostic accuracy. Researchers are also looking forward to the publication of high-quality papers based on this archive [117].

3.2. Multi-Classification for Skin Lesions in Specific Dermatosis

In addition to the eight major categories of skin diseases defined in the ISIC challenge, the differential diagnosis of multiple subtypes within specific skin diseases is also an urgent issue. For example, in melanoma, while the common subtypes superficial spreading melanoma (SSM) and lentigo maligna melanoma (LMM) are relatively easy to diagnose, the morphological features of melanomas at other specific anatomical sites (e.g., mucosa, limb skin and nail units) are often overlooked [81]. On top of that, some benign nevi of melanocytic origin can easily be confused with malignant melanoma on morphology [118]. Many common pigmentation disorders are caused by abnormalities in melanin in the skin. Although they are similar in appearance, they are diseases with different pathological structures and treatment strategies. Diagnostic models based on AI algorithms can improve the diagnostic accuracy and specificity for these diseases and thus benefit dermatologists by reducing the time and financial cost of diagnosis [119].

Melanocytic Skin Lesions

Since Binder’s team applied an ANN to discriminate between benign naevi and malignant melanoma in 1994, increasing numbers of AI algorithms have been employed for the multi-classification of melanocytic skin lesions [82]. Moleanalyzer pro is a proven commercial CNN system for the classification of melanocytic lesions. Winkler and his team used the system, which was trained with more than 150,000 images, to investigate its diagnostic performance across different melanoma localizations and subtypes in six benign/malignant dermoscopic image sets. The CNN showed a high level of performance in most sets, except for melanoma in mucosal and subungual sites, suggesting that the CNN may partly offset the impact of reduced human accuracy [81]. In two studies by Haenssle et al. in 2018 and 2020, CNNs were also compared with specialist dermatologists in detecting melanocytic/non-melanocytic skin cancers and benign lesions. In 2018, a CNN based on Google’s Inception v4 architecture was compared with 58 physicians. The results showed that most dermatologists were outperformed by the CNN, whose ROC curve revealed a higher specificity, and that doctors may benefit from assistance by a CNN’s image classification [55]. In 2020, Moleanalyzer pro was compared with 96 dermatologists. Even though dermatologists achieve better results when they have richer clinical and textual case information, the overall results show that the CNN and most dermatologists perform at the same level under less artificial conditions and across a wider range of diagnoses [56]. Sies et al. used the Moleanalyzer pro and Moleanalyzer dynamole systems for the classification of melanoma, melanocytic nevus and other skin lesions. The results showed that the two market-approved CAD systems offer a significantly superior diagnostic performance compared to conventional image analyzers without AI algorithms (CIA) [83].

Benign Pigmented Skin Lesions

Based on a wealth of experience and successful clinical practice, scholars have gradually tried to apply AI to differentiate a variety of pigmented skin diseases, with promising results. Lin’s team pioneered the use of deep learning to diagnose common benign pigmented disorders. They developed two CNN models (DenseNet-96 and ResNet-152) to identify six facial pigmented dermatoses (the nevus of Ota, acquired nevus of Ota, chloasma, freckles, seborrheic keratosis and cafe-au-lait spots). Then, they introduced ResNet-99 to build a fusion network and evaluated the performance of the two CNNs and the fusion network separately. The results showed that the fusion network performed best and could reach a level comparable to that of dermatologists [84]. In 2019, Tschandl et al. conducted the world’s largest comparison study between machine-learning algorithms and 511 dermatologists on the diagnostic accuracy of pigmented skin lesion classification. The algorithm was, on average, 2.01% more correct in its diagnosis than all human readers. The result indicated that machine-learning classifiers outperform dermatologists in the diagnosis of pigmented skin lesions and should be more widely used in clinical practice [120]. In the latest study, Lyakhov et al. established a multimodal neural network for preliminary hair removal and for differentiation of the 10 most common pigmented lesions (7 benign and 3 malignant). They found that fusing metadata from various sources could provide additional information, thereby improving the efficiency of the neural network analysis and classification system, as well as the accuracy of the diagnosis. Experimental results showed that the fusion of metadata increased recognition accuracy by 4.93–6.28%, with a maximum diagnosis rate of 83.56%.
The study demonstrated that fusing patient statistics with visual data makes it possible to find additional connections between dermatoscopic images and medical diagnoses, significantly improving the accuracy of neural network classification [85].
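
A common way to fuse patient metadata with visual data is to encode the metadata numerically and concatenate it with the feature vector produced by the image network before the final classification layers. The sketch below illustrates only this encoding and concatenation step, under stated assumptions: the site vocabulary, the age scaling and the three-element image feature vector are hypothetical placeholders and are not taken from the cited study.

```python
# Hypothetical vocabularies for categorical metadata fields.
ANATOMIC_SITES = ["head/neck", "trunk", "upper limb", "lower limb"]
SEXES = ["female", "male"]


def one_hot(value, vocabulary):
    """Encode a categorical value as a 0/1 vector over the vocabulary."""
    return [1.0 if value == v else 0.0 for v in vocabulary]


def encode_metadata(age, sex, site, max_age=100.0):
    """Scale age to [0, 1] and one-hot encode sex and anatomic site."""
    scaled_age = min(age, max_age) / max_age
    return [scaled_age] + one_hot(sex, SEXES) + one_hot(site, ANATOMIC_SITES)


def fuse(image_features, metadata_features):
    """Concatenate image-derived and metadata-derived features."""
    return list(image_features) + list(metadata_features)


# Placeholder for features extracted by an image network.
image_features = [0.12, 0.80, 0.05]
fused = fuse(image_features, encode_metadata(54, "female", "trunk"))
print(len(fused), fused)
```

The fused vector would then be passed to the classification layers, so that the decision can depend on both the lesion's appearance and the patient's context.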

Inflammatory Dermatoses

Inflammatory dermatoses are a group of diseases caused by the destruction of skin tissue as a result of immune system disorders, including eczema, atopic dermatitis, psoriasis, chronic urticaria and pemphigus. Newly recorded histological findings and novel applications of immunohistochemistry have also refined the diagnosis of inflammatory skin diseases [121]. AI CAD systems are able to optimize the workflow for routinely diagnosed inflammatory dermatoses. A multi-model, multi-level system using an ANN architecture was designed for eczema detection. The system comprises different models matched to the input features, and the outputs of these models are integrated through a multi-level decision layer to calculate the probability of eczema, resulting in a higher confidence level than that of a single-level system [86]. From 2017 onwards, neural networks have been shown to be useful for diagnosing acne vulgaris [90]. The latest publications on computer-aided systems in acne vulgaris are based on a wealth of data from cell phone photographs of affected patients, which enable the development of AI-based algorithms to determine the severity of facial acne and to identify different types of acne lesions or post-inflammatory hyperpigmentation [91]. Scientists in South Korea trained various image analysis algorithms to recognize images of fungal nails. For this purpose, they used datasets of almost 50,000 nail images and 4 validation datasets with a total of 1358 images. A comparison of the diagnostic accuracy (measured in this study by the Youden index) of differently trained assessors and the AI algorithm showed that the computer-based image analysis achieved the highest diagnostic accuracy and was significantly superior to dermatologists (p = 0.01) [87].
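
The Youden index mentioned above combines sensitivity and specificity into a single value, J = sensitivity + specificity − 1, so that 0 corresponds to a test with no diagnostic value and 1 to a perfect test. The short sketch below computes it from confusion-matrix counts; the counts are made up for illustration and are not taken from the cited study.

```python
def sensitivity(tp, fn):
    """Share of diseased cases that the test flags as positive."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """Share of healthy cases that the test flags as negative."""
    return tn / (tn + fp)


def youden_index(tp, fn, tn, fp):
    """Youden's J = sensitivity + specificity - 1."""
    return sensitivity(tp, fn) + specificity(tn, fp) - 1.0


# Made-up counts: 100 diseased and 100 healthy nails.
j = youden_index(tp=90, fn=10, tn=80, fp=20)
print(round(j, 2))
```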

4. AI in Aid-Diagnosis and Binary-Classification for Specific Dermatosis

4.1. Skin Cancer

The incidence of skin cancer has been increasing yearly [58,122]. Although its mortality rate is relatively low [123], it remains a heavy economic burden on health services and can cause severe psychological problems, especially as most skin cancers occur in highly visible areas of the body [124]. Owing to low screening awareness, a lack of specific lesion features in early skin cancer and insufficient clinical expertise and services, most patients are diagnosed only at an advanced stage, which leads to a poor prognosis [124,125]. There is therefore an urgent need for AI systems to help clinicians in this field.

Melanoma

Melanoma is the deadliest type of skin cancer. Early screening and early diagnosis are essential to improve patient survival [126]. Currently, dermatologists diagnose melanoma mainly by applying the ABCD principle, which is based on the morphological characteristics of melanoma lesions [127]. However, even for experienced dermatologists, this manual examination is non-trivial and time consuming, and melanoma can easily be confused with benign skin lesions [128]. Thus, most AI-driven skin cancer research has focused on the classification of melanocytic lesions to aid melanoma screening. In 2004, Blum et al. pioneered the use of computer algorithms for the diagnosis of cutaneous melanoma and showed that a diagnostic algorithm for the digital image analysis of melanocytic lesions could achieve an accuracy similar to that of expert dermatoscopy [88]. In 2017, Esteva et al. trained a GoogleNet Inception-v3-based CNN with a training dataset of 129,450 clinical images of 2032 different diseases from 18 sites. The performance of the CNN was compared with that of 21 dermatologists in two critical binary classifications (the most common cancer and the deadliest skin cancer) of biopsy-confirmed clinical images. The CNN’s performance on both tasks was comparable to that of dermatologists, demonstrating its ability to classify skin cancer [66]. The ISIC Melanoma Project has also created a publicly accessible archive of skin lesion images for education and research. Marchetti et al. summarized the results of the melanoma classification task in the ISIC 2016 challenge, which involved 25 competing teams. They compared the algorithms’ diagnoses with those of eight experienced dermatologists. The outcomes showed that automated algorithms significantly outperformed the dermatologists in diagnosing melanoma [89]. Subsequently, they compared the performance of the computer algorithms of 32 teams in the ISIC 2017 challenge with that of 17 human readers.
The results also demonstrated that deep neural networks could classify skin images of melanoma and its benign mimics with high precision and have the potential to boost the performance of human readers [22]. The teams of Filho and Tang used the ISIC 2016 and 2017 challenge datasets and the PH2 dataset to develop algorithms for the automatic classification and segmentation of the melanoma area. Their test outcomes indicated that these algorithms could dramatically improve doctors’ efficiency in diagnosing melanoma [51,129]. In MacLellan’s study, three AI-aided diagnosis systems were compared with dermatologists using 209 lesions in 184 patients. The statistics showed that Moleanalyzer pro had a relatively high sensitivity and the highest specificity (88.1% and 78.8%, respectively), whereas local dermatologists had the highest sensitivity but a low specificity (96.6% and 32.2%, respectively) [130]. Consistently, Moleanalyzer pro also proved reliable in differentiating combined naevi from melanomas [131]. Dermatologists can also build a whole-body map using a 3D imaging AI system, an application of particular relevance to skin cancer diagnostics. The 360° scanner uses whole-body images to create a “map” of pigmented skin lesions. Using a dermatoscope, atypical and altered nevi can also be examined microscopically and stored digitally. With the help of intelligent software, emerging lesions or lesions that change over time are automatically marked during follow-up checks, an important feature for recognizing a malignancy and initiating therapeutic measures [132]. In addition, in the long term, high-risk melanoma populations will benefit from a clinical management approach that combines AI-based 3D total-body photography monitoring with sequential digital dermoscopy imaging and teledermatologist evaluation [133,134].

Non-Melanoma Skin Cancer

AI is also widely used to differentiate between malignant and benign skin lesions and to detect non-melanoma skin cancer (NMSC). Rofman et al. proposed a multi-parameter ANN system based on personal health management data that can be used to forecast and analyze the risk of NMSC. The system was trained and validated on 2056 NMSC and 460,574 non-cancer cases from the 1997–2015 NHIS adult survey data and was then further tested on 28,058 individuals from the 2016 NHIS survey data. The ANN system can be used for the risk assessment of non-melanoma skin cancer with a high sensitivity (88.5%). It can classify patients into high, medium and low cancer risk categories to provide clinical decision support and personalized cancer risk management. The study’s model is therefore a predictive tool from which clinicians can obtain a patient’s risk status in order to detect and prevent non-melanoma skin cancer at an early stage [94]. Alzubaidi et al. proposed a novel approach to overcome the shortage of labeled raw skin lesion images: a deep learning model is first trained on a large number of unlabeled medical images and then retrained on a small number of labeled medical images through transfer learning. The model achieved an F1-score of 98.53% in distinguishing skin cancer from normal skin [95].
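
The F1-score quoted above is the harmonic mean of precision and recall (recall being the same quantity as sensitivity). A minimal sketch of the calculation from confusion-matrix counts follows; the counts are made-up illustrative values, not results from the cited studies.

```python
def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall from raw counts."""
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both 0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Made-up counts: 8 cancers found, 2 false alarms, 2 cancers missed.
f1 = f1_score(tp=8, fp=2, fn=2)
print(round(f1, 2))
```

Unlike plain accuracy, the F1-score ignores true negatives, which makes it informative when cancer cases are far rarer than non-cancer cases.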

Neurofibroma

Neurofibromatosis (NF) is a group of three conditions in which tumors grow in the nervous system: NF1, NF2 and schwannomatosis [135]. NF1 is the most common form of neurofibromatosis and a cancer susceptibility disease. Most patients with NF1 have a normal life expectancy, but 10% of them develop malignant peripheral nerve sheath tumors (MPNST), which are a major cause of morbidity [136]. Therefore, the timely differentiation of benign and malignant lesions is directly relevant to improving patient survival. Wei et al. successfully established a Keras-based machine-learning model that can discriminate between NF1-related benign and malignant craniofacial lesions with a very high accuracy (96.99% and 100% in validation cohorts 1 and 2, respectively) and an accuracy of 51.27% in various other body regions [137]. Plexiform neurofibroma (PN) is the prototypical and most common NF1 tumor. Ho et al. created a DNN algorithm to conduct a semi-automated volume segmentation of PNs based on multiple b-value diffusion-weighted MRI. They compared the accuracy of semi-automated tumor volume maps constructed by the DNN with manual segmentation and found that the volumes generated by the DNN from multiple diffusion data on PNs correlate well with manual volumes, and that there is a significant difference between PN and normal tissue [97]. Interestingly, Bashat and colleagues also demonstrated that a quantitative image representation method based on machine learning may assist in the classification of benign PNs and MPNST in NF1 [102]. In a similar initiative, Duarte et al. used grey matter density maps obtained from magnetic resonance (MR) brain structure scans to create a multivariate pattern analysis algorithm to differentiate between NF1 patients and healthy controls. A total of 83% of participants were correctly classified, with 82% sensitivity and 84% specificity, demonstrating that multivariate techniques are a useful and powerful tool [103].

4.2. Application of AI for Inflammatory Dermatosis

Psoriasis

The prevalence of psoriasis is 0% to 2.1% in children and 0.91% to 8.5% in adults [138]. The psoriasis area and severity index (PASI), body surface area (BSA) and physician global assessment (PGA) are the three most commonly used indicators to evaluate psoriasis severity [139,140]. However, both PASI and BSA have been repeatedly questioned for their objectivity and reliability [141]. It would therefore be of great help to use AI algorithms to make a standardized and objective assessment. Machine-learning-based algorithms are now available to determine BSA scores. Although one such algorithm had slight limitations in detecting flaking as diseased skin, it reached an expert level in BSA assessment [104]. Computer-assisted programs for PASI evaluation already exist; however, they still require human assistance and function by recognizing predefined threshold values for certain characteristics [98]. Another study by Fink’s team is also based on image analysis with the FotoFinder™ system. The accuracy and reproducibility of PASI were markedly improved with the help of semi-automatic computer-aided algorithms [99]. These technological advances in BSA and PASI measurements are expected to greatly reduce the workload of doctors while ensuring a high degree of repeatability and standardization. In addition to the three indicators above, Anabik Pal et al. used erythema, scaling and induration to build a DNN to determine the severity of psoriatic plaques. The algorithm is given a psoriasis image and predicts the severity of each of the three parameters. The task is treated both as three separate single-task learning (STL) problems and as a new multi-task learning (MTL) problem formed by three interdependent subtasks, and the DNN is trained accordingly. The training dataset consists of 707 photographs, and the results show that the deep CNN-based MTL approach performs well when each disease parameter is graded on its own, but slightly less well when all three parameters must be graded correctly at the same time [142].
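
The gap between grading each parameter alone and grading all three correctly at once follows directly from how the two scores are counted. The sketch below illustrates this with invented grades; the function name and the data are assumptions made for illustration and do not come from the cited study.

```python
def per_task_and_joint_accuracy(y_true, y_pred):
    """Compare per-parameter accuracy with joint (all-correct) accuracy.

    y_true, y_pred: lists of (erythema, scaling, induration) grade tuples,
    one tuple per image.
    """
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)
    n_tasks = len(y_true[0])
    # Accuracy of each parameter, judged on its own.
    per_task = [
        sum(t[k] == p[k] for t, p in zip(y_true, y_pred)) / n
        for k in range(n_tasks)
    ]
    # An image counts as jointly correct only if every parameter matches.
    joint = sum(tuple(t) == tuple(p) for t, p in zip(y_true, y_pred)) / n
    return per_task, joint


# Invented grades for four images, for illustration only.
true_grades = [(2, 1, 3), (0, 0, 1), (4, 3, 2), (1, 2, 2)]
pred_grades = [(2, 1, 3), (0, 1, 1), (4, 3, 1), (1, 2, 2)]
per_task, joint = per_task_and_joint_accuracy(true_grades, pred_grades)
print(per_task, joint)
```

Here each parameter is right on at least three of four images, yet only two images are right on all three parameters, so the joint score can never exceed the weakest single-parameter score.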

AI can also assist in evaluating and diagnosing psoriasis. Munro’s microabscesses (MM) are a sign of psoriasis. Anabik Pal et al. presented a computational framework (MICaps) to detect neutrophils in the epidermal stratum corneum of the skin from biopsy images (a component of MM detection in skin biopsies). Using MICaps, the diagnostic performance was increased by 3.27% and the number of model parameters was reduced by 50% [143]. A CNN algorithm that differentiated among nine diagnoses based on photos made fewer misdiagnoses and had a lower rate of missed psoriasis diagnoses than 25 dermatologists [92]. In addition, Emma et al. used machine learning to find out which psoriasis patient characteristics are associated with long-term responses to biologics [144]. Thanks to AI, improvements in the diagnosis and treatment of psoriasis patients can be expected.

Eczema

The challenge in the computer-aided image diagnosis of eczematous diseases is to correctly differentiate not only between disease and health, but also between different forms of eczema. The eczema stage and affected area are the most essential factors in effectively assessing the dynamics of the disease. It is not trivial to accurately identify the area affected by eczema and other inflammatory dermatoses on the basis of photographic documentation. The macroscopic forms of eczema are diverse, with different stages and varying degrees of distribution and severity [145]. The prerequisite for training algorithms for the AI-supported image analysis of all of these assessment parameters is therefore a correspondingly large initial set of images that have been optimized and standardized in terms of the recording technology. Forms of eczema with disseminated eruption, such as the corresponding manifestation patterns of atopic dermatitis, would additionally require automated digital, serial whole-body photography for an efficient and time-saving AI-supported calculation of an area score. Han et al. trained a deep neural-network-based algorithm that is able to differentiate between eczema and infectious skin diseases and to classify very rare skin lesions, which has direct clinical significance and can serve as augmented intelligence to empower medical professionals in diagnostic dermatology. They even showed that treatment recommendations (e.g., topical steroids versus antiseptics) could also be learned by differentiating between inflammatory and infectious causes. It remains to be seen, however, whether an AI-aided severity assessment and a clinically practicable area score can be derived from this as a prerequisite for a valid follow-up in the case of eczema [93]. Schnuerle et al. designed a support-vector-machine-based image processing method for hand eczema segmentation with the industry partner swiss4ward for operational use at the University Hospital Zurich. The system uses the F1-score as its primary metric and outperformed several advanced methods that were tested on the same gold standard dataset [100]. Presumably, a combination of such AI-aided image analysis and molecular diagnostics can optimize the future differential diagnostic classification of eczema diseases, as recently predicted for various clinical manifestations of hand dermatitis [146].
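
For a segmentation task, the F1-score is typically computed pixel by pixel as the harmonic mean of precision and recall. The sketch below shows one way to do this for binary masks. It is an illustration under that assumption, with invented masks, and is not the evaluation code of the cited system.

```python
def pixelwise_f1(pred_mask, true_mask):
    """F1-score of a predicted binary mask against a reference mask.

    Both masks are lists of rows containing 0 (healthy) or 1 (lesion).
    """
    tp = fp = fn = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            if p and t:
                tp += 1          # lesion pixel correctly marked
            elif p and not t:
                fp += 1          # healthy pixel wrongly marked
            elif t and not p:
                fn += 1          # lesion pixel missed
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Invented 3x4 masks for illustration only.
reference = [[0, 1, 1, 0],
             [0, 1, 1, 0],
             [0, 0, 0, 0]]
predicted = [[0, 1, 1, 1],
             [0, 1, 0, 0],
             [0, 0, 0, 0]]
f1 = pixelwise_f1(predicted, reference)
print(f"F1 = {f1:.2f}")
```

Unlike plain pixel accuracy, the F1-score ignores the large number of correctly labeled background pixels, so it is not inflated when the lesion covers only a small part of the image.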

Atopic Dermatitis

Atopic dermatitis (AD) is the most common chronic inflammatory skin disease, with a prevalence of 10% to 20% in developed countries [147]. It usually starts in childhood and recurs multiple times in adulthood, greatly affecting patients’ quality of life [148]. In 2017, Gustafson’s team identified patients with AD via a machine-learning-based phenotype algorithm. The algorithm combined coded information with the free text of electronic health records (EHRs) to achieve a high positive predictive value and sensitivity. These results demonstrate the utility of natural language processing and machine learning in EHR-based phenotyping [105]. An ANN algorithm was developed to assess the influence of air contaminants and weather variation on AD patients; the results indicated that the severity of AD symptoms was positively correlated with outdoor temperature, relative humidity (RH), precipitation, NO2, O3 and PM10 [149]. In a recent study, a fully automatic CNN-based approach was proposed to analyze multiphoton tomography (MPT) data. The proposed algorithm correctly diagnosed AD in 97.0 ± 0.2% of all images presenting living cells, with a sensitivity of 0.966 ± 0.003 and a specificity of 0.977 ± 0.003, indicating that MPT imaging can be combined with AI to successfully diagnose AD [96].

Acne

AI-based assessment of acne has been very effective. Melina et al. showed an excellent correlation between the automatic and manual evaluation of the investigator’s global assessment, with r = 0.958 [150]. In the case of acne vulgaris in particular, such a procedure could help prevent far-reaching consequences with permanent skin damage in the form of scars.
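
An agreement value such as r = 0.958 is a Pearson correlation coefficient between two sets of scores for the same patients. The sketch below shows how it is calculated. The function name and the two score lists are invented for illustration and are not data from the cited study.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return covariance / math.sqrt(var_x * var_y)


# Invented severity grades (0-4) for eight patients, for illustration only.
manual_scores = [0, 1, 2, 3, 4, 2, 1, 3]
automatic_scores = [0, 1, 2, 3, 4, 3, 1, 2]
r = pearson_r(manual_scores, automatic_scores)
print(f"r = {r:.3f}")
```

A high r shows that the two ratings rise and fall together. It does not by itself show that they assign the same grade, since a constant offset between raters leaves r unchanged.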

Vitiligo

The depigmented macules of vitiligo are usually in high contrast to unaffected skin. Vitiligo is therefore more easily recognized by AI systems than eczema or psoriasis lesions with poorly defined borders. Computer-based algorithms detected vitiligo with an F1 score of 0.8462, a markedly better result than for pustular psoriasis [151]. Luo designed a vitiligo AI diagnosis system that employed cycle-consistent adversarial networks (cycle GANs) to generate Wood’s lamp images and improved the image resolution via an attention-aware dense net with residual deconvolution (ADRD). The system achieved a 9.32% improvement in classification accuracy compared to direct classification of the original images using ResNet50 [106]. Makena’s team built a CNN that performs vitiligo skin lesion segmentation quickly and robustly. The network was trained on 308 images with various lesion sizes, intricacies and anatomical locations. The modified network outperformed the state-of-the-art U-Net with a much higher Jaccard index score (73.6% versus 36.7%) and a shorter segmentation time than the previously proposed semi-autonomous watershed approach [107]. These novel systems are promising for clinical applications because they greatly shorten the testing time and improve the diagnostic accuracy.
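
The Jaccard index used to compare segmentation networks is the area of overlap between the predicted and the reference lesion divided by the area of their union. The following sketch computes it for binary masks; the function name and the masks are invented for illustration.

```python
def jaccard_index(pred_mask, true_mask):
    """Intersection over union of two binary masks (lists of 0/1 rows)."""
    intersection = 0
    union = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Two empty masks agree perfectly by convention.
    return intersection / union if union else 1.0


# Invented 3x4 masks for illustration only.
reference_lesion = [[0, 1, 1, 0],
                    [0, 1, 1, 0],
                    [0, 0, 0, 0]]
predicted_lesion = [[0, 1, 1, 1],
                    [0, 1, 0, 0],
                    [0, 0, 0, 0]]
jaccard = jaccard_index(predicted_lesion, reference_lesion)
print(f"Jaccard index = {jaccard:.2f}")
```

Every missed and every wrongly added pixel enlarges the union without enlarging the intersection, so the index penalizes both kinds of error and reaches 100% only for a perfect outline.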

Fungal Dermatosis

Gao et al. developed an automated microscope for fungal detection in dermatology based on deep learning. The system is as proficient as a dermatologist in detecting fungi in skin and nail specimens, with sensitivities of 99.5% and 95.2% and specificities of 91.4% and 100%, respectively [101].

5. Application of AI for Aesthetic Dermatology

AI combined with new optical technologies is also increasingly being applied in aesthetic dermatology. Examples include face recognition, automatic beautification in smartphones and related software. So-called smart mirror analyzers are now available on the Internet. These are AI-assisted technologies with image recognition systems that analyze the skin based on its appearance and the current external environment and recommend skin care products accordingly [152]. The program ArcSoft Portrait can automatically identify wrinkles, moles, acne and scars and intelligently soften, moisturize and smooth the skin while retaining as much skin texture and detail as possible, greatly simplifying the cumbersome and time-consuming portrait retouching process [61,64,153]. AI also plays an essential role in assessing facial aesthetics. For this purpose, ANNs are trained using face images that people have rated independently according to various aesthetic criteria. The ANN learns from the photos and their respective attractiveness ratings to make human-like judgments about the aesthetics of the face [65]. New applications objectively evaluate each photo on the basis of over 80 facial coordinates and nearly 7000 associated distances and angles [108].
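
Geometric features of this kind can be derived from a set of facial landmark coordinates. The sketch below computes only the pairwise distances between landmarks and uses randomly generated points in place of real landmarks. The cited application's actual feature set is not described here, so the function name, the landmark count of exactly 80 and the data are assumptions for illustration.

```python
import math
import random
from itertools import combinations


def pairwise_distances(landmarks):
    """Euclidean distance between every pair of (x, y) landmark points."""
    return [math.dist(a, b) for a, b in combinations(landmarks, 2)]


# 80 hypothetical landmarks at random positions in a 100 x 100 image.
rng = random.Random(0)
hypothetical_landmarks = [(rng.uniform(0, 100), rng.uniform(0, 100))
                          for _ in range(80)]
distances = pairwise_distances(hypothetical_landmarks)
print(len(distances))  # number of landmark pairs, 80 * 79 / 2
```

With 80 points there are 3160 distinct pairs, so a feature set of several thousand distances and angles is plausible once angles between selected landmark triples are added.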

6. Applications of AI for Skin Surgery

Radical resection and amputation are the best means of preventing recurrence and fatal metastasis of malignant skin tumors [154]. A skin or flap graft via microsurgery and the application of prostheses play a crucial role in improving the quality of life of patients after resection [155,156]. Adequate microvascular anastomosis is the key to a successful microvascular free tissue transfer. As a basic requirement in this regard, the surgeon must have excellent microsurgical skills. Thanks to the support of auxiliary equipment such as microscopes, magnifications of up to 10 to 15 times are possible and allow for the anastomosis of small vessels. Nevertheless, due to physiological tremor, only vessels down to approximately 0.5–1 mm in size can be safely anastomosed. Surgeons reach their limits especially in lymphatic surgery or perforator-based flaps, where the vascular caliber may be even smaller [157]. Against this background, the expansion of surgical microscopes to include robotics and AI capabilities represents a promising and innovative approach for surpassing the capabilities of the human hand. The aim is to use robots equipped with AI to eliminate human tremor and to enable motion scaling for increased precision and dexterity in the smallest of spaces [158]. By downscaling human movements, finer vessels can be joined. In the future, advances could be achieved in the field of ultra-microsurgery, with anastomoses of the smallest vessels or nerve fascicles in the range of 0.1–0.8 mm. In the long term, intelligent robotics could also automate technically demanding tasks, such as microsurgical anastomosis performed by robots, or implement a real-time feedback system for the surgeon.
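
The two ideas named above, tremor elimination and motion scaling, can be illustrated in one dimension. In the sketch below, a simple moving average stands in for whatever filter a real surgical robot uses, and the scale factor, window length and trajectory are invented. It is a conceptual illustration under those assumptions, not the control scheme of any actual system.

```python
def scale_and_smooth(hand_positions_mm, scale=0.1, window=5):
    """Map hand positions to instrument displacements.

    1. Smooth the hand signal with a trailing moving average, which damps
       small, fast oscillations such as tremor.
    2. Scale the smoothed displacement (relative to the first sample) so
       that a large hand movement becomes a small instrument movement.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    smoothed = []
    for i in range(len(hand_positions_mm)):
        recent = hand_positions_mm[max(0, i - window + 1): i + 1]
        smoothed.append(sum(recent) / len(recent))
    if not smoothed:
        return []
    start = smoothed[0]
    return [scale * (s - start) for s in smoothed]


# Invented trajectory: the hand rests, then moves 10 mm, with a small
# alternating wobble added to imitate tremor.
intended = [0.0] * 6 + [10.0] * 6
tremor = [0.05 if i % 2 == 0 else -0.05 for i in range(len(intended))]
hand = [p + t for p, t in zip(intended, tremor)]
tool = scale_and_smooth(hand, scale=0.1, window=4)
print([round(v, 3) for v in tool])
```

With a scale of 0.1 the 10 mm hand movement becomes an instrument movement of about 1 mm, and averaging over an even number of samples cancels the alternating wobble. The price of the averaging is a short delay before the instrument reaches its final position.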

Prosthetics have also evolved with the implementation of AI. After amputation injuries, prostheses can now restore not only the shape but also essential functions of the amputated extremity; in this way, they make a significant contribution to the reintegration of the patient into society. The mental control of the extremity remains in the brain even after amputation. When movement patterns are imagined, despite the lack of end organs to perform them, neurons still transmit the corresponding nerve signals [159]. Prostheses can now record these electrical potentials via up to eight electrodes and assign them to the respective functions via pattern recognition and other AI-based methods, which helps patients make better use of the prosthesis in their daily lives [160,161]. This enables the patient to directly control different grip shapes and movements, so that gripping movements can be realized much faster and more naturally.
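
The pattern-recognition step can be pictured as a small classification problem: each short window of eight-channel electrode signals is reduced to a feature vector and matched to the most similar known grip. The sketch below uses the mean absolute value per channel as the feature and a nearest-centroid rule as the classifier. Both choices, all names and all numbers are assumptions for illustration, and commercial prostheses may work quite differently.

```python
import math


def mean_absolute_value(window):
    """Reduce a window of multi-channel samples to one value per channel."""
    n_channels = len(window[0])
    return [sum(abs(sample[c]) for sample in window) / len(window)
            for c in range(n_channels)]


def fit_centroids(training_features):
    """Average the feature vectors recorded for each grip label."""
    centroids = {}
    for label, vectors in training_features.items():
        n_channels = len(vectors[0])
        centroids[label] = [sum(v[c] for v in vectors) / len(vectors)
                            for c in range(n_channels)]
    return centroids


def classify(features, centroids):
    """Return the grip label whose centroid is closest to the features."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


# Invented eight-channel training features for two hypothetical grips.
training = {
    "power_grip": [[0.9, 0.8, 0.9, 0.8, 0.1, 0.1, 0.2, 0.1],
                   [0.8, 0.9, 0.7, 0.9, 0.2, 0.1, 0.1, 0.1]],
    "pinch":      [[0.1, 0.2, 0.1, 0.1, 0.8, 0.9, 0.8, 0.9],
                   [0.2, 0.1, 0.1, 0.2, 0.9, 0.8, 0.9, 0.8]],
}
centroids = fit_centroids(training)

# Invented signal window: two samples from eight electrodes.
signal_window = [[0.8, -0.9, 0.7, -0.8, 0.1, -0.1, 0.1, -0.2],
                 [-0.9, 0.8, -0.8, 0.9, -0.1, 0.2, -0.1, 0.1]]
grip = classify(mean_absolute_value(signal_window), centroids)
print(grip)
```

The appeal of this kind of approach is that the patient trains the system by imagining each movement a few times, after which new signal windows are assigned to a grip without any explicit threshold tuning.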

The application of AI-based surgical robots in skin surgery is now also becoming widespread. Compared to traditional open surgery, robotic-assisted surgery offers 3D vision systems and flexible operating instruments, with potentially fewer postoperative complications as a result. In 2010, Sohn et al. first applied this technique to treat two patients with pelvic metastatic melanoma [162]. In 2017, Kim successfully treated one case of vaginal malignant melanoma using robotic-assisted anterior pelvic organ resection with ileocystostomy [15]. One year later, Hyde successfully treated four cases of malignant melanoma using robotic-assisted inguinal lymph node dissection [163]. Miura et al. found that robotic assistance provided a safe, effective and minimally invasive method of removing pelvic lymph nodes from patients with peritoneal metastatic melanoma, with shorter hospital stays compared to conventional open surgery [164]. Medical robots are also involved in the field of hair transplantation. In 2011, the ARTAS system was officially approved by the US FDA for male hair transplantation. It captures microscopically magnified images and computer-aided parameters to provide clear and detailed characteristics of the donor area, which facilitates the harvesting of complete follicular units [16]. The system reduces the labor required, eliminates human fatigue and potential errors, and significantly shortens the procedure time [165].

This entry is adapted from the peer-reviewed paper 10.3390/jcm11226826
