Chest X-ray radiography (CXR) is among the most frequently used medical imaging modalities and is of preeminent value in detecting multiple life-threatening diseases. Radiologists visually inspect CXR images for signs of disease. However, many thoracic diseases share very similar radiographic patterns, which makes interpretation prone to human error and can lead to misdiagnosis. Machine learning (ML) and deep learning (DL) provide techniques that make this task faster and more efficient, and numerous experiments on the diagnosis of various diseases have demonstrated their potential.

- radiography

- chest X-ray

- computer-aided detection

- machine learning

- deep learning

- deep convolutional neural networks

1. Introduction

2. Datasets

3. Image Preprocessing Techniques

3.1. Augmentation

3.2. Enhancement

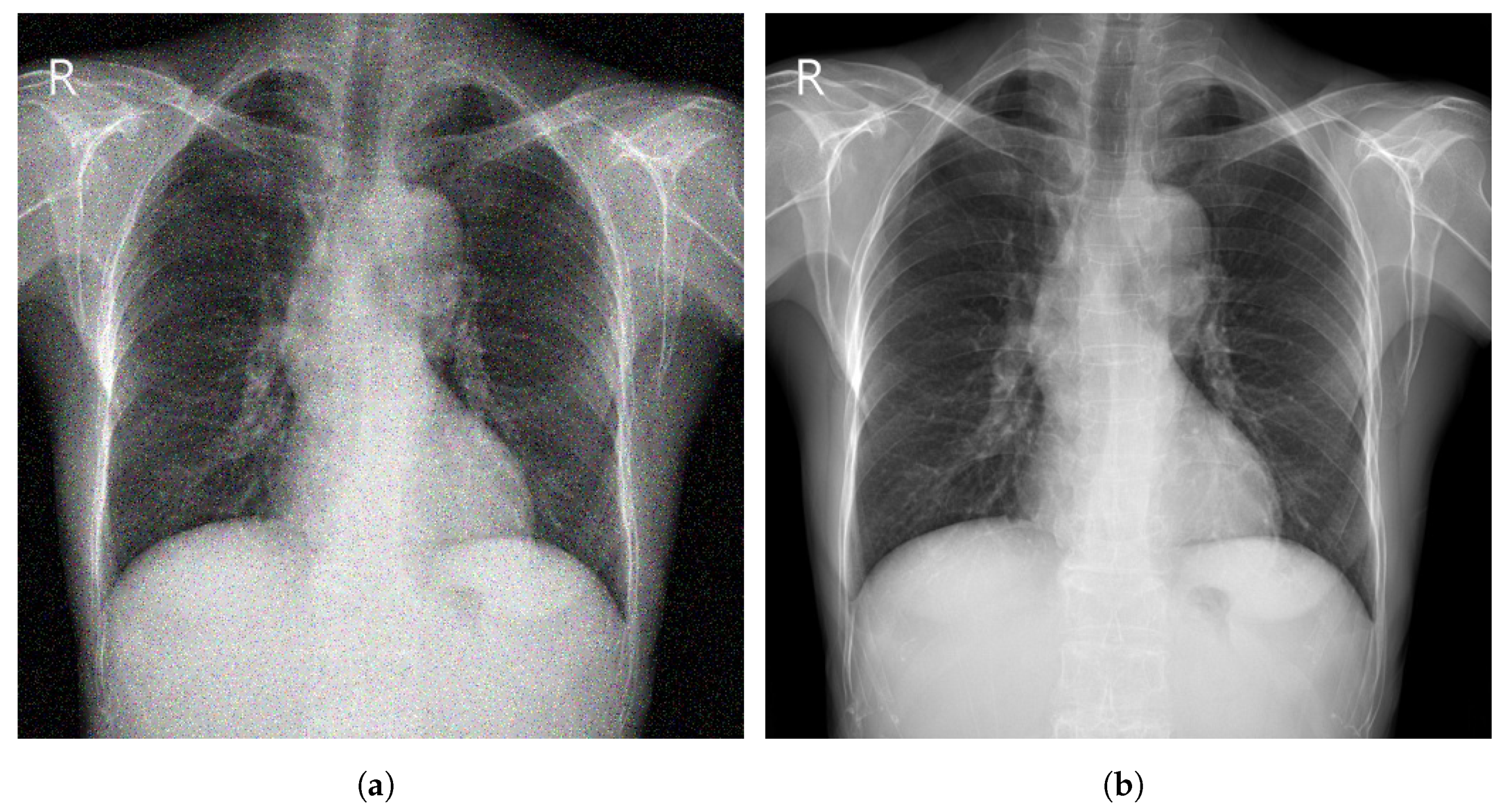

3.3. Segmentation

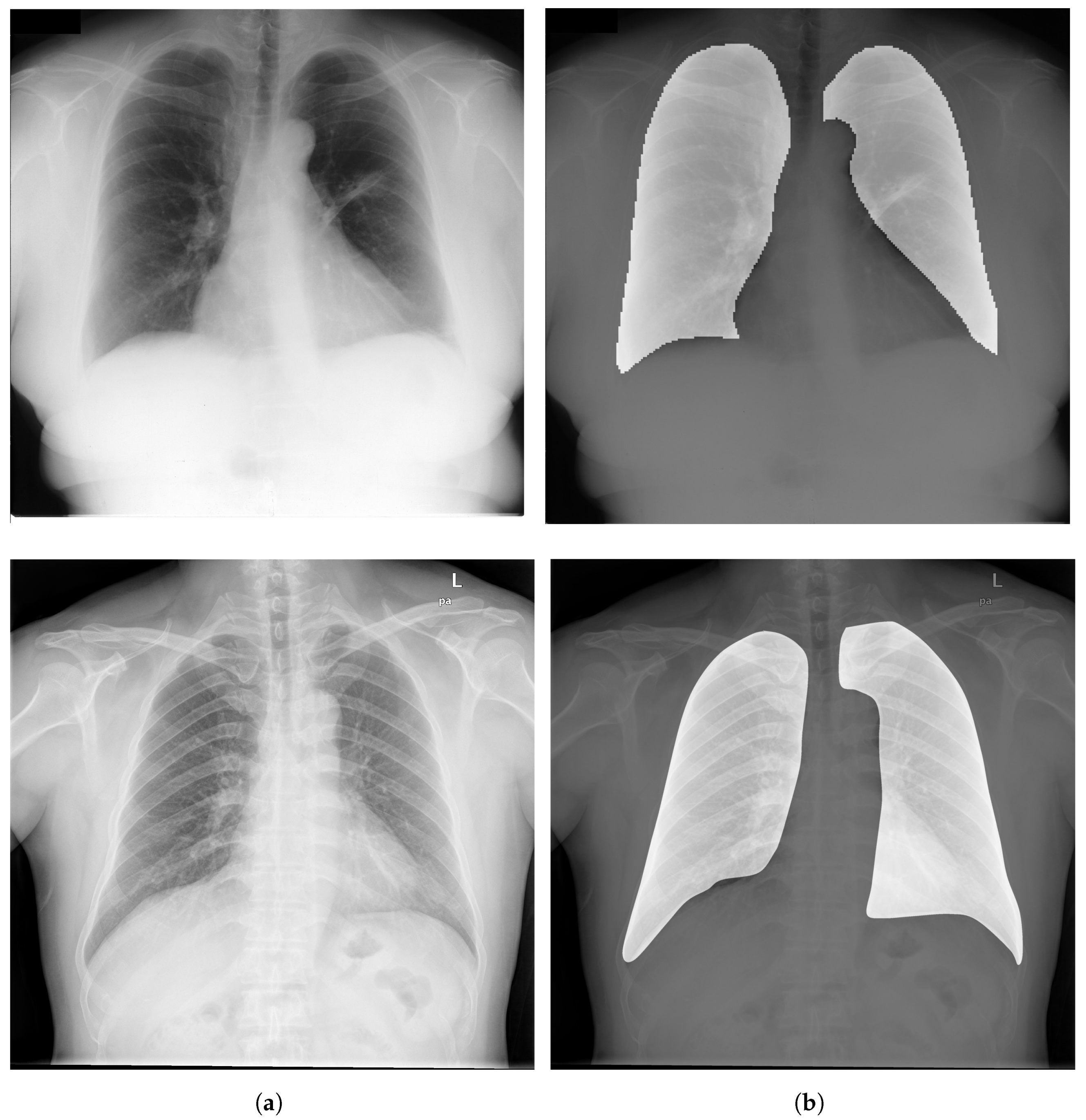

3.4. Bone Suppression

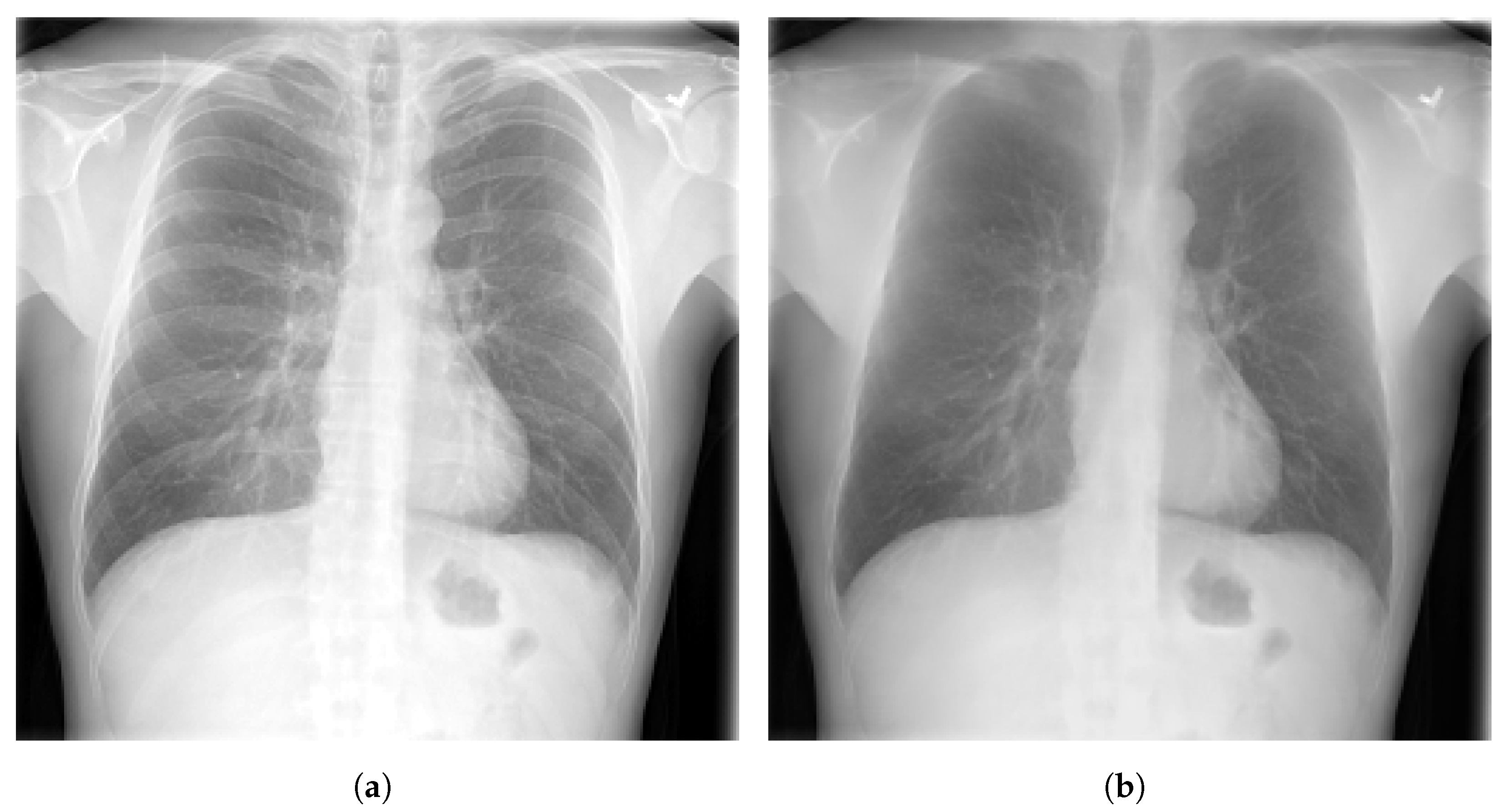

3.5. Evaluation Metrics

4. Deep Learning for Chest Disease Detection Using CXR Images

Several computer-aided detection (CAD) systems have been developed to detect chest diseases using different techniques. Early diagnosis of thoracic conditions improves the chance of overcoming the disease, because diseases such as tuberculosis (TB), pneumonia, and COVID-19 become more severe at an advanced stage. Three main types of abnormalities can be observed in CXR images: (1) texture abnormalities, characterized by diffuse changes in the appearance and structure of the affected area; (2) focal abnormalities, which appear as isolated changes in density; and (3) shape abnormalities, in which the anomaly alters the outline of the normal morphology.

4.1. Pneumonia Detection

Ma and Lv [22] proposed a Swin transformer model for feature extraction, followed by a fully connected layer that classifies pneumonia in CXR images. The model was evaluated against DCNN models on two datasets (Pediatric-CXR and ChestX-ray8). Image enhancement and data augmentation improved its performance, and it achieved an ACC of 97.20% on Pediatric-CXR and 87.30% on ChestX-ray8. Singh et al. [23] proposed an attention-based DCNN model that classifies CXR images into two classes (normal or pneumonia). ResNet50 with attention achieved the best result, an ACC of 95.73% on images from the Pediatric-CXR dataset. Darapaneni et al. [24] applied transfer learning to two DCNN models (ResNet-50 and Inception-V4) for binary classification of pneumonia using CXR images from the RSNA-Pneumonia-CXR dataset. Inception-V4 performed best, with a validation ACC of 94.00%, outperforming ResNet-50 (validation ACC of 90.00%). Rajpurkar et al. [25] developed CheXNet, a 121-layer convolutional network (DenseNet-121) that detects pneumonia and localizes the lung areas that show signs of it. The model was trained on ChestX-ray14, with its weights initialized from a network pretrained on ImageNet. It was fine-tuned by replacing the final fully connected layer with one that has a single output, followed by a nonlinear sigmoid activation. CheXNet achieved an AUC of 76.80% for pneumonia detection.
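A minimal, dependency-free Python sketch can illustrate two things from this section: the single-output sigmoid head described for CheXNet, and the two metrics quoted (ACC and AUC). It is not the code of any cited study. It assumes the network has already produced one raw score (logit) per image. The helper names (`sigmoid`, `accuracy`, `roc_auc`), the 0.5 decision threshold, and the toy labels and logits are all hypothetical. AUC is computed with the Mann-Whitney rank formulation: the probability that a randomly chosen positive image scores higher than a randomly chosen negative one.

```python
import math
from typing import Sequence


def sigmoid(z: float) -> float:
    """Map one logit (the single network output) to a probability in [0, 1].

    Branching on the sign of z avoids overflow in math.exp for large |z|.
    """
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def accuracy(labels: Sequence[int], probs: Sequence[float], threshold: float = 0.5) -> float:
    """ACC: fraction of images whose thresholded prediction equals the label.

    The 0.5 threshold is an assumption; studies may choose a different cutoff.
    """
    correct = sum(int(p >= threshold) == y for y, p in zip(labels, probs))
    return correct / len(labels)


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC via the Mann-Whitney U statistic; tied scores receive average ranks."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied scores
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("AUC needs at least one positive and one negative label")
    rank_sum_pos = sum(r for r, y in zip(ranks, labels) if y == 1)
    return (rank_sum_pos - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


# Hypothetical toy data (not from any cited study): 1 = pneumonia, 0 = normal
labels = [1, 0, 1, 0, 1, 0]
logits = [2.0, -1.0, 0.3, 0.5, -0.2, -3.0]
probs = [sigmoid(z) for z in logits]

print(f"ACC = {accuracy(labels, probs):.3f}")  # ACC = 0.667 (4 of 6 correct)
print(f"AUC = {roc_auc(labels, probs):.3f}")   # AUC = 0.778 (7 of 9 pairs ranked correctly)
```

ACC depends on the chosen threshold and on class balance, whereas AUC summarizes ranking quality across all thresholds. For this reason, ACC and AUC values reported by different studies on different datasets are not directly comparable.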

4.2. Pulmonary Nodule Detection

4.3. Tuberculosis Detection

4.4. COVID-19 Detection

4.5. Multiple Disease Detection

This entry is adapted from the peer-reviewed paper 10.3390/diagnostics13010159

References

- Abiyev, R.; Ma’aitah, M.K.S. Deep Convolutional Neural Networks for Chest Diseases Detection. J. Healthc. Eng. 2018, 2018, 4168538.

- Radiological Society of North America. X-ray Radiography-Chest. Available online: https://www.radiologyinfo.org/en/info.cfm?pg=chestrad (accessed on 1 November 2022).

- US Food and Drug Administration. Medical X-ray Imaging. Available online: https://www.fda.gov/radiation-emitting-products/medical-imaging/medical-x-ray-imaging (accessed on 1 November 2022).

- Avni, U.; Greenspan, H.; Konen, E.; Sharon, M.; Goldberger, J. X-ray Categorization and Retrieval on the Organ and Pathology Level, Using Patch-Based Visual Words. IEEE Trans. Med. Imaging 2011, 30, 733–746.

- Jaeger, S.; Karargyris, A.; Candemir, S.; Folio, L.; Siegelman, J.; Callaghan, F.; Xue, Z.; Palaniappan, K.; Singh, R.; Antani, S.; et al. Automatic Tuberculosis Screening Using Chest Radiographs. IEEE Trans. Med. Imaging 2014, 33, 233–245.

- Pattrapisetwong, P.; Chiracharit, W. Automatic lung segmentation in chest radiographs using shadow filter and multilevel thresholding. In Proceedings of the International Computer Science and Engineering Conference (ICSEC), Chiang Mai, Thailand, 14–17 December 2016; pp. 1–6.

- Piccialli, F.; Di Somma, V.; Giampaolo, F.; Cuomo, S.; Fortino, G. A survey on deep learning in medicine: Why, how and when? Inf. Fusion 2021, 66, 111–137.

- Elangovan, A.; Jeyaseelan, T. Medical imaging modalities: A survey. In Proceedings of the International Conference on Emerging Trends in Engineering, Technology and Science (ICETETS), Pudukkottai, India, 24–26 February 2016; pp. 1–4.

- Saczynski, J.; McManus, D.; Goldberg, R. Commonly Used Data-collection Approaches in Clinical Research. Am. J. Med. 2013, 126, 946–950.

- Horng, S.; Liao, R.; Wang, X.; Dalal, S.; Golland, P.; Berkowitz, S. Deep learning to quantify pulmonary edema in chest radiographs. Radiol. Artif. Intell. 2021, 3, e190228.

- Tolkachev, A.; Sirazitdinov, I.; Kholiavchenko, M.; Mustafaev, T.; Ibragimov, B. Deep learning for diagnosis and segmentation of pneumothorax: The results on the kaggle competition and validation against radiologists. IEEE J. Biomed. Health Inform. 2021, 25, 1660–1672.

- Cha, M.J.; Chung, M.J.; Lee, J.H.; Lee, K.S. Performance of deep learning model in detecting operable lung cancer with chest radiographs. J. Thorac. Imaging 2019, 34, 86–91.

- Schultheiss, M.; Schober, S.; Lodde, M.; Bodden, J.; Aichele, J.; Müller-Leisse, C.; Renger, B.; Pfeiffer, F.; Pfeiffer, D. A robust convolutional neural network for lung nodule detection in the presence of foreign bodies. Sci. Rep. 2020, 10, 12987.

- Johnson, A.; Pollard, T.; Berkowitz, S.; Greenbaum, N.; Lungren, M.; Deng, C.Y.; Mark, R.; Horng, S. MIMIC-CXR, a de-identified publicly available database of chest radiographs with free-text reports. Sci. Data 2019, 6, 317.

- Demner-Fushman, D.; Kohli, M.; Rosenman, M.; Shooshan, S.; Rodriguez, L.; Antani, S.; Thoma, G.; McDonald, C. Preparing a collection of radiology examinations for distribution and retrieval. J. Am. Med. Inform. Assoc. 2016, 23, 304–310.

- Wang, X.; Peng, Y.; Lu, L.; Lu, Z.; Bagheri, M.; Summers, R.M. ChestX-ray8: Hospital-scale Chest X-ray Database and Benchmarks on Weakly-Supervised Classification and Localization of Common Thorax Diseases. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2097–2106.

- SK, S.; Naveen, N. Algorithm for pre-processing chest-x-ray using multi-level enhancement operation. In Proceedings of the International Conference on Wireless Communications, Signal Processing and Networking (WiSPNET), Chennai, India, 23–25 March 2016; pp. 2182–2186.

- Reza, A. Realization of the contrast limited adaptive histogram equalization (CLAHE) for real-time image enhancement. J. VLSI Signal Process. Syst. Signal Image Video Technol. 2004, 38, 35–44.

- Agaian, S.; Panetta, K.; Grigoryan, A. Transform-based image enhancement algorithms with performance measure. IEEE Trans. Image Process. 2001, 10, 367–382.

- Chen, S.; Cai, Y. Enhancement of chest radiograph in emergency intensive care unit by means of reverse anisotropic diffusion-based unsharp masking model. Diagnostics 2019, 9, 45.

- Stanford ML Group. CheXpert: A Large Chest X-ray Dataset and Competition. Available online: https://stanfordmlgroup.github.io/competitions/chexpert/ (accessed on 1 November 2022).

- Ma, Y.; Lv, W. Identification of Pneumonia in Chest X-ray Image Based on Transformer. Int. J. Antennas Propag. 2022, 2022, 5072666.

- Singh, S.; Rawat, S.; Gupta, M.; Tripathi, B.; Alanzi, F.; Majumdar, A.; Khuwuthyakorn, P.; Thinnukool, O. Deep Attention Network for Pneumonia Detection Using Chest X-ray Images. Comput. Mater. Contin. 2022, 74, 1673–1691.

- Darapaneni, N.; Ranjan, A.; Bright, D.; Trivedi, D.; Kumar, K.; Kumar, V.; Paduri, A.R. Pneumonia Detection in Chest X-rays using Neural Networks. arXiv 2022, arXiv:2204.03618.

- Rajpurkar, P.; Irvin, J.; Zhu, K.; Yang, B.; Mehta, H.; Duan, T.; Ding, D.; Bagul, A.; Langlotz, C.; Shpanskaya, K.; et al. CheXNet: Radiologist-level pneumonia detection on chest X-rays with deep learning. arXiv 2017, arXiv:1711.05225.

- World Health Organization. World Cancer Report. Available online: https://www.who.int/cancer/publications/WRC_2014/en/ (accessed on 1 November 2022).

- Sim, Y.; Chung, M.J.; Kotter, E.; Yune, S.; Kim, M.; Do, S.; Han, K.; Kim, H.; Yang, S.; Lee, D.J.; et al. Deep Convolutional Neural Network–based Software Improves Radiologist Detection of Malignant Lung Nodules on Chest Radiographs. Radiology 2020, 294, 199–209.

- World Health Organization. Tuberculosis. Available online: https://www.who.int/news-room/fact-sheets/detail/tuberculosis (accessed on 1 November 2022).

- Iqbal, A.; Usman, M.; Ahmed, Z. An efficient deep learning-based framework for tuberculosis detection using chest X-ray images. Tuberculosis 2022, 136, 102234.

- Jawahar, M.; Anbarasi, J.; Ravi, V.; Jayachandran, P.; Jasmine, G.; Manikandan, R.; Sekaran, R.; Kannan, S. CovMnet-Deep Learning Model for classifying Coronavirus (COVID-19). Health Technol. 2022, 12, 1009–1024.

- Majdi, M.; Salman, K.; Morris, M.; Merchant, N.; Rodriguez, J. Deep learning classification of chest X-ray images. In Proceedings of the Southwest Symposium on Image Analysis and Interpretation (SSIAI), Albuquerque, NM, USA, 29–31 March 2020; pp. 116–119.

- Bar, Y.; Diamant, I.; Wolf, L.; Greenspan, H. Deep learning with non-medical training used for chest pathology identification. In Medical Imaging 2015: Computer-Aided Diagnosis; SPIE: Bellingham, WA, USA, 2015; pp. 215–221.

- Cicero, M.; Bilbily, A.; Colak, E.; Dowdell, T.; Gray, B.; Perampaladas, K.; Barfett, J. Training and validating a deep convolutional neural network for computer-aided detection and classification of abnormalities on frontal chest radiographs. Investig. Radiol. 2017, 52, 281–287.