Augmented reality (AR) is one of the fastest-growing immersive technologies of the 21st century. AR has transformed fields including health and medicine, teaching and learning, tourism, design, and manufacturing, and its acceptance in these industries has in turn accelerated the growth of AR at an unprecedented rate. Tracking technologies are the building blocks of AR: they establish a point of reference for movement and make it possible to create an environment in which virtual and real objects are presented together. To achieve a realistic experience with augmented objects, several tracking technologies are presented here.

- augmented reality

- virtual reality

- tracking technology

1. Augmented Reality Overview

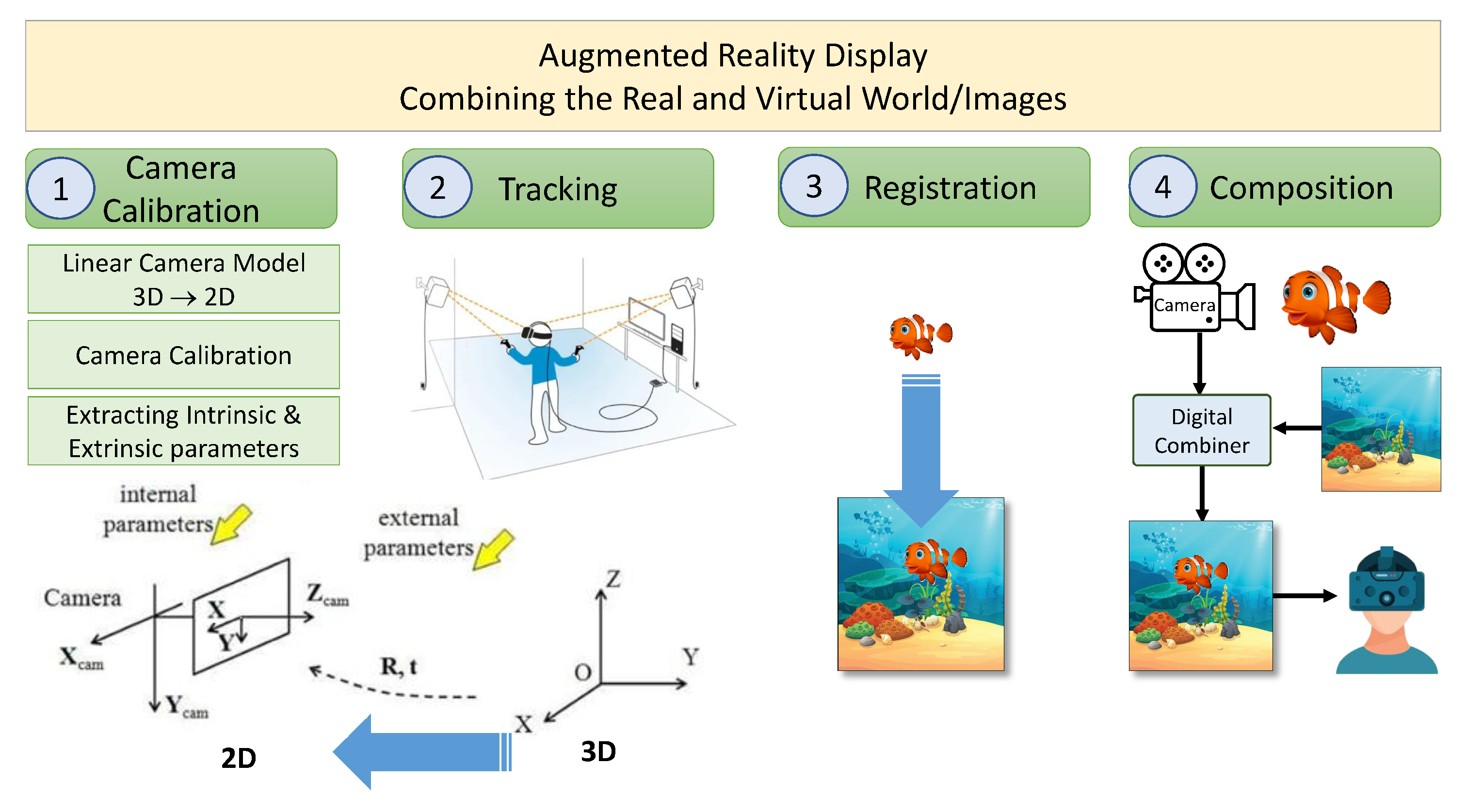

Augmented reality provides users with a composite view by superimposing computer-generated virtual content (i.e., audio, graphics, text, or video) on real-world objects. The major components of the AR process are tracking, which determines the position at which virtual objects are placed in the real environment, and display of the virtual content to the user. Tracking in AR follows a defined pattern in the real world, using a computer or mobile device, so that the virtual object can be placed correctly in the real world. Display technologies, in turn, present the virtual content in front of the viewer's eyes.

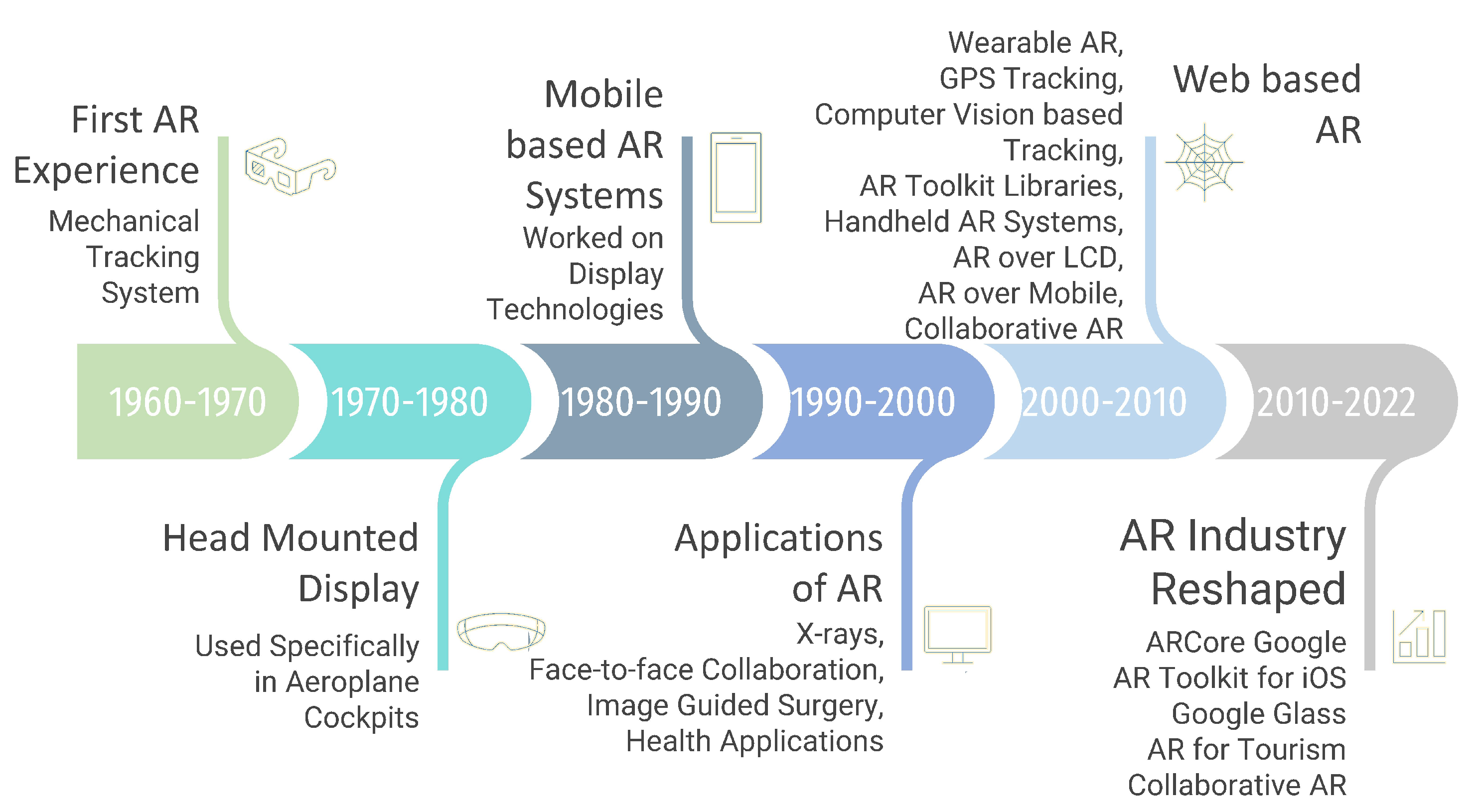

For many years, people have been using lenses, light sources, and mirrors to create illusions and virtual images in the real world [1][2][3]. Ivan Sutherland was the first person to truly generate the AR experience. Sketchpad, which he developed at MIT in 1963, is the world’s first interactive graphic application [4]. Figure 1 gives an overview of the development of AR technology from its beginnings to 2022. Bottani et al. [5] reviewed the AR literature published between 2006 and 2017. Moreover, Sereno et al. [6] used a systematic survey approach to detail the existing literature at the intersection of computer-supported collaborative work and AR.

1.1. Head-Mounted Display

1.2. AR Towards Applications

1.3. Augmented Reality for the Web

1.4. AR Application Development

1.5. AR Security and Privacy

2. Tracking Technology of AR

- it combines virtual and real content;

- it is interactive in real time;

- it is registered in three dimensions.

- Determination of the position and orientation of the viewer relative to the real-world anchor: registration phase;

- Updating of the viewer’s pose with respect to the previously known pose: tracking phase.
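The two phases listed above can be sketched as pose composition with homogeneous transforms. This is a minimal illustration under stated assumptions, not a method taken from the source: the class `PoseTracker`, the helper names, and the restriction to rotation about a single axis (yaw) are all hypothetical choices made to keep the example short.

```python
import math


def matmul(a, b):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def make_pose(yaw_rad, tx, ty, tz):
    """Build a 4x4 rigid transform: rotation about z, then translation.

    Restricting rotation to yaw is an assumption made for brevity.
    """
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [[c, -s, 0.0, tx],
            [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]


def position(pose):
    """Extract the translation part of a pose."""
    return (pose[0][3], pose[1][3], pose[2][3])


class PoseTracker:
    """Hypothetical two-phase tracker: register once, then update."""

    def __init__(self):
        self.pose = None  # unknown until the registration phase has run

    def register(self, anchor_from_viewer):
        # Registration phase: absolute pose of the viewer relative
        # to the real-world anchor.
        self.pose = anchor_from_viewer

    def track(self, delta):
        # Tracking phase: update the previously known pose with the
        # motion observed since then (expressed in the viewer's frame).
        if self.pose is None:
            raise RuntimeError("register() must be called before track()")
        self.pose = matmul(self.pose, delta)
        return self.pose


tracker = PoseTracker()
tracker.register(make_pose(math.pi / 2, 0.0, 0.0, 0.0))  # viewer turned 90 degrees
tracker.track(make_pose(0.0, 1.0, 0.0, 0.0))             # one step "forward"
print(position(tracker.pose))
```

Because the increment is expressed in the viewer's own frame, a forward step taken after a 90-degree turn moves the viewer along the anchor's y-axis rather than its x-axis. Each call to `track` builds on the previous result, which is why errors in the tracking phase accumulate until a new registration corrects them.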

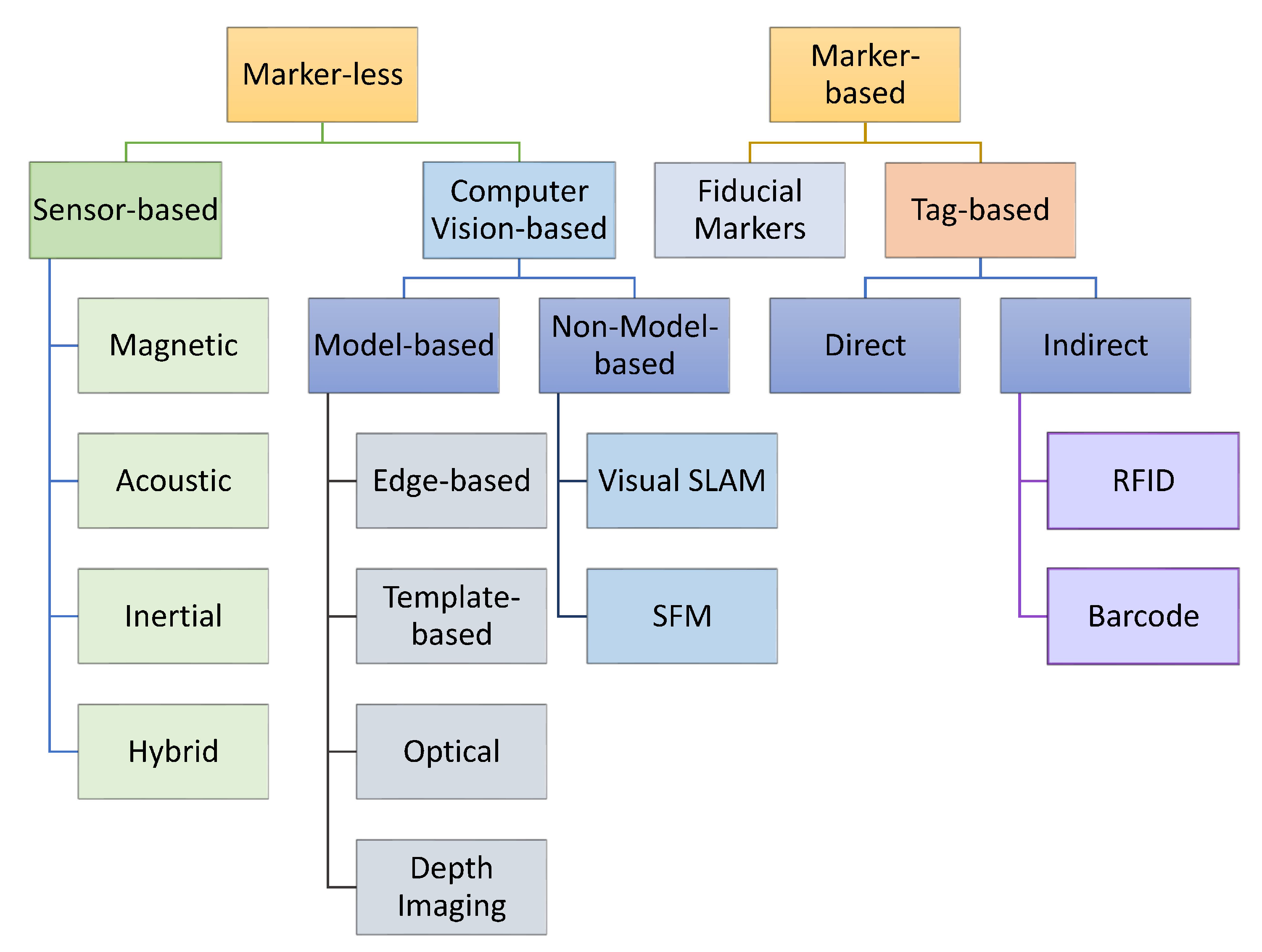

2.1. Markerless Tracking Techniques

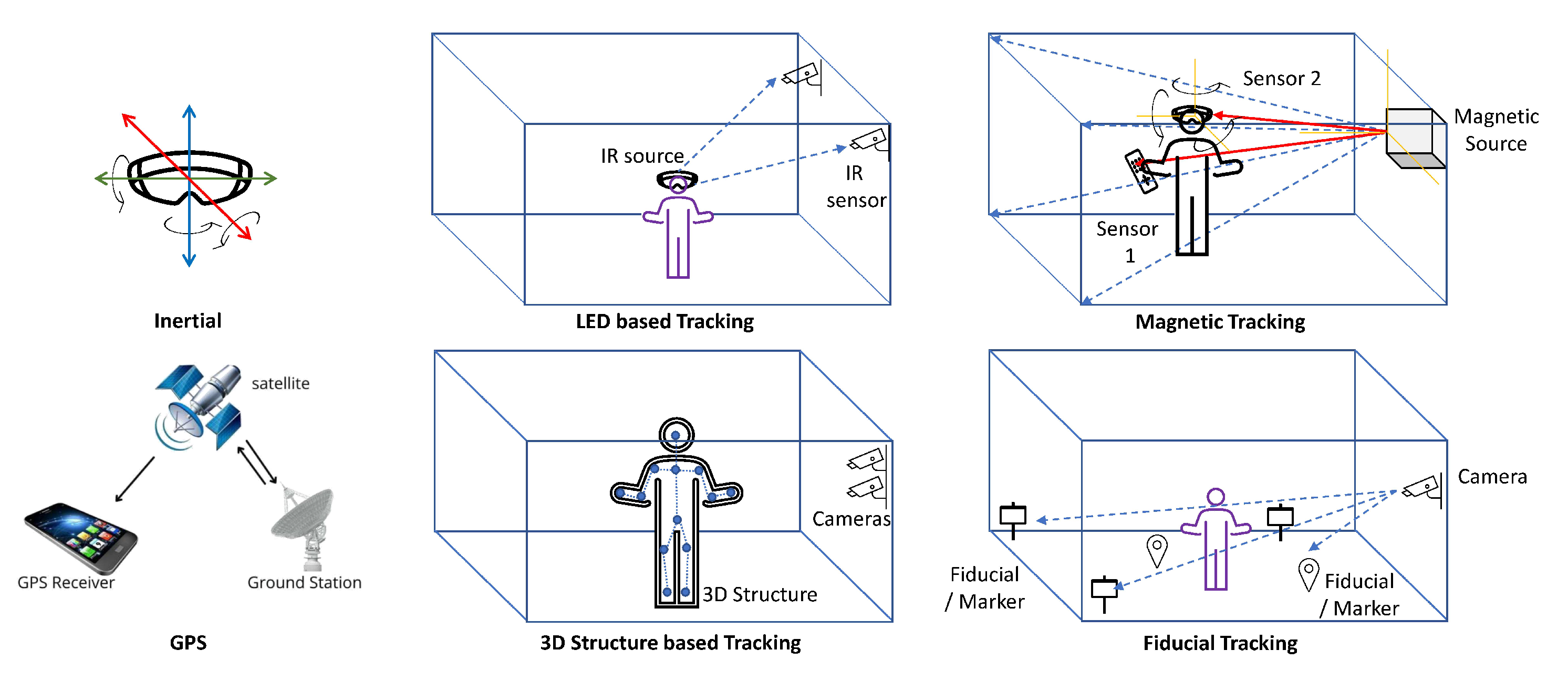

2.1.1. Sensor-Based Tracking

2.1.2. Vision-Based Tracking

- visible light tracking;

- 3D structure tracking;

- infrared tracking.

2.1.3. Three-Dimensional Structure Tracking

2.1.4. Infrared Tracking

2.1.5. Model-Based Tracking

2.1.6. Global Positioning System—GPS Tracking

| No. | Tracking Technology | Category of Tracking Technique | Used in Current Devices | Tools/Companies Currently Using the Technology | Key Concepts | Advantages | Challenges | Example Application Areas | Example Studies |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Magnetic | Marker-less/Sensor based | Yes | i. Edge Tracking/Premo etc. ii. Most HMD/Most Recent Android Devices | Sensors are placed within an electromagnetic field | +360-degree motion; +navigation around the environment; +manipulation of objects | -limited positioning range; -constrained working volume; -highly sensitive to surrounding environments | Maintenance; Medicine; Manufacturing | [26][27][28][29][30][31][32][33][34] |
| 2 | Inertial | Marker-less/Sensor based | Yes | ARCore/Unity | Motion sensors (e.g., accelerometers and gyroscopes) are used to determine the velocity and orientation of objects | +high-bandwidth motion measurement; +negligible latency | -drift over time impacting position measurement | Transport; Sports | [35] |
| 3 | Optical | Marker-less/Vision based | Yes | i. Unity ii. OptiTrack (used in conjunction with inertial sensors + optical (vision-based) sensors) | Virtual content is added to real environments through cameras and optical sensors. Example approaches include visible light, 3D structure, and infrared tracking. | +popular due to affordable consumer devices; +strong tracking algorithms; +application to real-world scenarios | -occlusion when objects are in close range | Education and Learning; E-commerce; Tourism | [80][81] |
| 4 | Model Based: i. Edge-Based ii. Template-Based iii. Depth Imaging | Marker-less/Computer Vision-based | Yes | i. VisionLib ii. Unity iii. ViSP | A 3D model of real objects is visualized | +implicit knowledge of the 3D structure; +empowers spatial tracking; +robustness is achieved even in complex environments | -algorithms are required to track and predict movements; -models need to be created using dedicated tools and libraries | Manufacturing; Construction; Entertainment | [60][61][62][63][64][65][66][67][68] |
| 5 | GPS | Marker-less/Sensor based | Yes | i. ARCore/ARKit ii. Unity/ARFoundation iii. Vuforia | GPS sensors are employed to track the precise location of objects in the environment | +high tracking accuracy (up to cm level) | -hardware requirements; -objects should be modelled ahead | Gaming | [82][83][84][85][86][87] |
| 6 | Hybrid | Marker-less/Sensor based/Computer Vision | Yes | i. ARCore ii. ARKit | A mix of markerless technologies is used to overcome the challenges of a single tracking technology | +improved tracking range and accuracy; +higher degree of freedom; +lower drift and jitter | -need for multiple technologies (e.g., accelerators, sensors), which raises cost | Simulation; Transport | [88][89][90][91] |
| 7 | SLAM | Marker-less/Computer Vision/Non-Model-based | Yes | i. Wikitude ii. Unity iii. ARCore | A map of the real environment is built from vision data, and the virtual object is tracked on it | +can track unknown environments; +parallel mapping engine | -cannot close large loops in a constrained environment | Mobile-based AR tracking; Robot navigation | [92][93][94] |
| 8 | Structure from Motion (SfM) | Marker-less/Computer Vision/Non-Model-based | Yes | i. SLAM ii. Research Based | 3D model reconstruction approach based on multi-view stereo | +can estimate the 3D structure of a scene from a series of 2D images | -limited reconstruction ability in vegetated environments | 3D scanning; Augmented reality; Visual simultaneous localization and mapping (vSLAM) | [72] |
| 9 | Fiducial/Landmark | Marker-based/Fiducial | Yes | i. Solar/Unity ii. Uniducial/Unity | Tracking is made with reference to artificial landmarks (i.e., markers) added to the AR environment | +better accuracy is achieved; +stable tracking with less cost | -the need for landmarks; -requires image recognition (i.e., camera); -less flexible compared to markerless tracking | Marketing | [95][96][97] |
| 10 | QR Code based Tracking | Marker-based/Tag-based | Yes | Microsoft HoloLens/Immersive Headsets/Unity | Tracking is made | +better accuracy is achieved; +stable tracking with less cost | -QR codes pose significant security risks | Supply Chain Management | [95] |
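The table lists drift over time as the main challenge of inertial tracking. The sketch below illustrates why: position is obtained by integrating acceleration twice, so even a small constant accelerometer bias produces a position error that grows roughly with the square of elapsed time (about 0.5 · bias · t²). The function name, the bias value of 0.05 m/s², and the 100 Hz sample rate are illustrative assumptions, not figures from the source.

```python
def position_drift(bias_mps2, duration_s, sample_hz=100):
    """Position error (metres) of a stationary sensor with a constant bias.

    The device is assumed to be at rest, so every measured acceleration
    is pure bias. Simple Euler integration is used for both steps.
    """
    dt = 1.0 / sample_hz
    velocity = 0.0
    position = 0.0
    for _ in range(int(round(duration_s * sample_hz))):
        velocity += bias_mps2 * dt   # first integration: acceleration -> velocity
        position += velocity * dt    # second integration: velocity -> position
    return position


# Hypothetical bias of 0.05 m/s^2, evaluated at increasing durations.
for seconds in (1, 10, 20):
    print(seconds, "s ->", round(position_drift(0.05, seconds), 3), "m")
```

Doubling the duration roughly quadruples the error, which is why inertial sensors are usually paired with an absolute reference such as vision or GPS rather than used alone for position.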

2.1.7. Miscellaneous Tracking

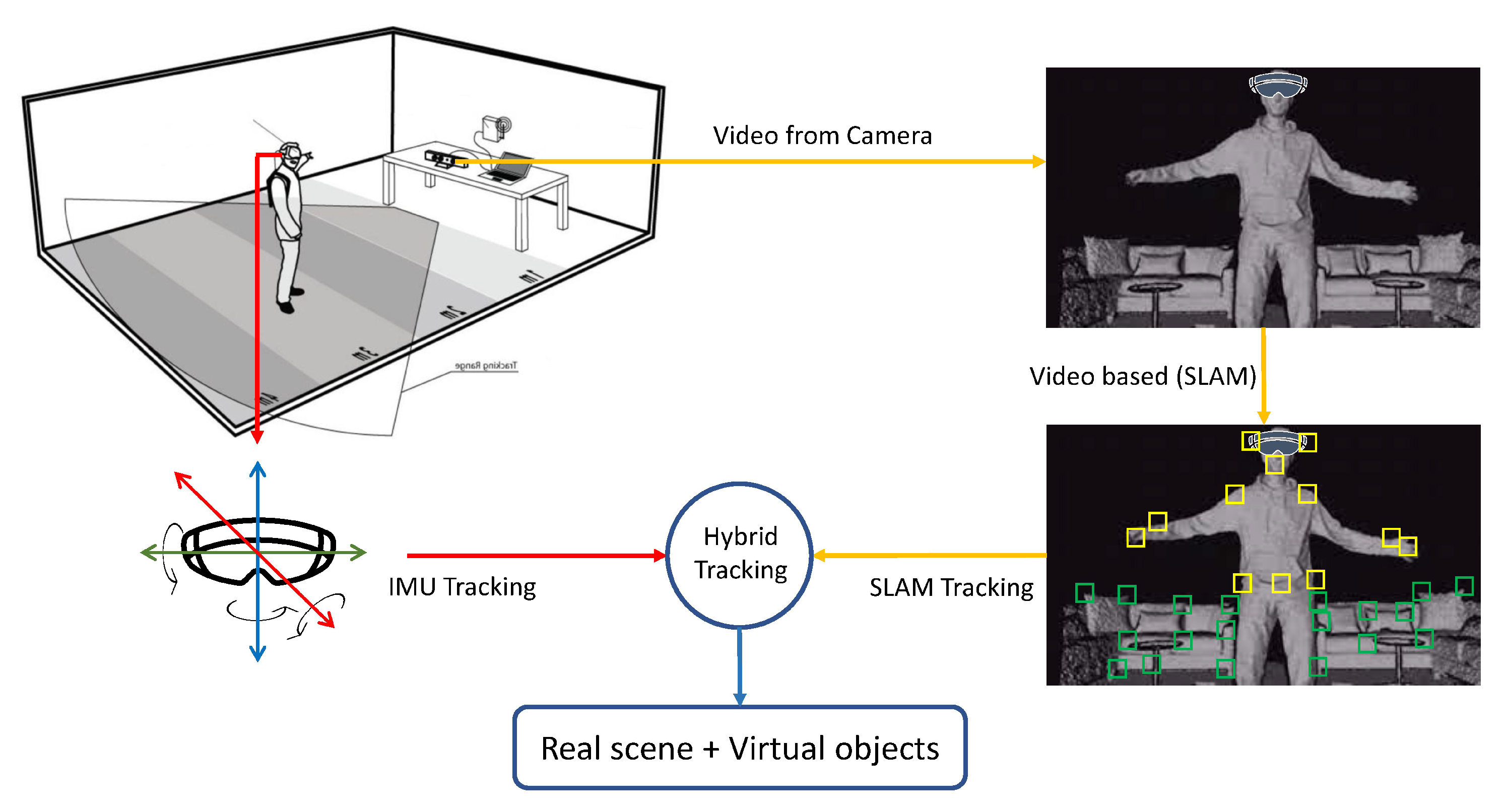

2.1.8. Hybrid Tracking

- Improving the accuracy of the tracking system.

- Coping with the weaknesses of the respective tracking methods.

- Adding more degrees of freedom.

- Low drift of vision-based tracking.

- Low jitter of vision-based tracking.

- They had a robust sensor with high update rates. These characteristics decreased the invalid pose computation and ensured the responsiveness of the graphical updates [103].

- They had more developed inertial and magnetic trackers which were capable of extending the range of tracking and did not require the line of sight. The above-mentioned benefits suggest that the utilization of the hybrid system is more beneficial than just using the inertial trackers.
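The benefits listed above can be illustrated with a simple complementary filter, one common way (assumed here, not stated in the source) to combine a fast but drifting inertial estimate with a slower, drift-free vision estimate. All names and numbers in the sketch are hypothetical: a stationary viewer, a gyroscope sampled at 100 Hz with a constant bias of 0.01 rad/s, and a noise-free vision measurement every tenth sample.

```python
def simulate_yaw(duration_s=60.0, gyro_hz=100, vision_every=10,
                 gyro_bias=0.01, gain=0.2):
    """Return (gyro_only_yaw, fused_yaw) in radians for a stationary viewer.

    gyro_bias: constant rate error in rad/s (assumed value).
    gain: fraction of the vision residual applied at each vision update.
    """
    dt = 1.0 / gyro_hz
    true_yaw = 0.0      # the viewer does not move in this sketch
    gyro_only = 0.0
    fused = 0.0
    for step in range(1, int(duration_s * gyro_hz) + 1):
        measured_rate = 0.0 + gyro_bias      # true rate is zero; only bias remains
        gyro_only += measured_rate * dt      # pure inertial integration drifts
        fused += measured_rate * dt          # high-rate prediction step
        if step % vision_every == 0:
            vision_yaw = true_yaw            # idealised, noise-free vision fix
            fused += gain * (vision_yaw - fused)  # low-rate correction step
    return gyro_only, fused


gyro_only, fused = simulate_yaw()
print("gyro only:", round(gyro_only, 4), "rad")
print("fused:    ", round(fused, 4), "rad")
```

The inertial path keeps the estimate responsive between camera frames, while the periodic vision correction keeps the accumulated error bounded instead of growing without limit. Real systems typically use a Kalman-type filter and must also handle vision noise and dropouts, which this sketch ignores.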

2.2. Marker-Based Tracking

2.3. Summary

This entry is adapted from the peer-reviewed paper 10.3390/s23010146

References

- Kerber, R. Advanced tactic targeted grocer. The Boston Globe. 2008. Available online: https://seclists.org/isn/2008/Mar/126 (accessed on 20 October 2022).

- Mansfield-Devine, S. Interview: BYOD and the enterprise network. Comput. Fraud Secur. 2012, 2012, 14–17.

- Nofer, M.; Gomber, P.; Hinz, O.; Schiereck, D. Blockchain. Bus. Inf. Syst. Eng. 2017, 59, 183–187.

- Behzadan, A.H.; Aziz, Z.; Anumba, C.J.; Kamat, V.R. Ubiquitous location tracking for context-specific information delivery on construction sites. Autom. Constr. 2008, 17, 737–748.

- Bottani, E.; Vignali, G. Augmented reality technology in the manufacturing industry: A review of the last decade. IISE Trans. 2019, 51, 284–310.

- Sereno, M.; Wang, X.; Besançon, L.; McGuffin, M.J.; Isenberg, T. Collaborative work in augmented reality: A survey. IEEE Trans. Vis. Comput. Graph. 2020, 28, 2530–2549.

- Ens, B.; Quigley, A.; Yeo, H.S.; Irani, P.; Piumsomboon, T.; Billinghurst, M. Counterpoint: Exploring mixed-scale gesture interaction for AR applications. In Proceedings of the Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–6.

- Khan, D.; Ullah, S.; Yan, D.M.; Rabbi, I.; Richard, P.; Hoang, T.; Billinghurst, M.; Zhang, X. Robust tracking through the design of high quality fiducial markers: An optimization tool for ARToolKit. IEEE Access 2018, 6, 22421–22433.

- Hettig, J.; Engelhardt, S.; Hansen, C.; Mistelbauer, G. AR in VR: Assessing surgical augmented reality visualizations in a steerable virtual reality environment. Int. J. Comput. Assist. Radiol. Surg. 2018, 13, 1717–1725.

- Goh, E.S.; Sunar, M.S.; Ismail, A.W. 3D object manipulation techniques in handheld mobile augmented reality interface: A review. IEEE Access 2019, 7, 40581–40601.

- Kollatsch, C.; Klimant, P. Efficient integration process of production data into Augmented Reality based maintenance of machine tools. Prod. Eng. 2021, 15, 311–319.

- Bhattacharyya, P.; Nath, R.; Jo, Y.; Jadhav, K.; Hammer, J. Brick: Toward a model for designing synchronous colocated augmented reality games. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–9.

- Kim, K.; Billinghurst, M.; Bruder, G.; Duh, H.B.L.; Welch, G.F. Revisiting trends in augmented reality research: A review of the 2nd decade of ISMAR (2008–2017). IEEE Trans. Vis. Comput. Graph. 2018, 24, 2947–2962.

- Liu, H.; Wang, L. An AR-based worker support system for human-robot collaboration. Procedia Manuf. 2017, 11, 22–30.

- Cortés-Dávalos, A.; Mendoza, S. Collaborative Web Authoring of 3D Surfaces Using Augmented Reality on Mobile Devices. In Proceedings of the 2016 IEEE/WIC/ACM International Conference on Web Intelligence (WI), Omaha, NE, USA, 13–16 October 2016; pp. 640–643.

- Qiao, X.; Ren, P.; Dustdar, S.; Liu, L.; Ma, H.; Chen, J. Web AR: A promising future for mobile augmented reality—State of the art, challenges, and insights. Proc. IEEE 2019, 107, 651–666.

- Agati, S.S.; Bauer, R.D.; Hounsell, M.d.S.; Paterno, A.S. Augmented reality for manual assembly in industry 4.0: Gathering guidelines. In Proceedings of the 2020 22nd Symposium on Virtual and Augmented Reality (SVR), Porto de Galinhas, Brazil, 7–10 November 2020; pp. 179–188.

- Shukri, S.A.A.; Arshad, H.; Abidin, R.Z. The design guidelines of mobile augmented reality for tourism in Malaysia. In AIP Conference Proceedings; AIP Publishing LLC: Melville, NY, USA, 2017; Volume 1891, p. 020026.

- Fallahkhair, S.; Brito, C.A. Design Guidelines for Development of Augmented Reality Application with Mobile and Wearable Technologies for Contextual Learning. Braz. J. Technol. Commun. Cogn. Sci. 2019, 7, 1–16.

- Akçayır, M.; Akçayır, G. Advantages and challenges associated with augmented reality for education: A systematic review of the literature. Educ. Res. Rev. 2017, 20, 1–11.

- da Silva, M.M.; Teixeira, J.M.X.; Cavalcante, P.S.; Teichrieb, V. Perspectives on how to evaluate augmented reality technology tools for education: A systematic review. J. Braz. Comput. Soc. 2019, 25, 1–18.

- Sarkar, P.; Pillai, J.S.; Gupta, A. ScholAR: A collaborative learning experience for rural schools using Augmented Reality application. In Proceedings of the 2018 IEEE Tenth International Conference on Technology for Education (T4E), Chennai, India, 10–13 December 2018; pp. 8–15.

- Soleimani, H.; Jalilifar, A.; Rouhi, A.; Rahmanian, M. Augmented Reality and Virtual Reality Scaffoldings in Improving the Abstract Genre Structure in a Collaborative Learning Environment: A CALL Study. J. Engl. Lang. Teach. Learn. 2019, 11, 327–356.

- Hadar, E. Toward Development Tools for Augmented Reality Applications—A Practitioner Perspective. In Workshop on Enterprise and Organizational Modeling and Simulation; Springer: Berlin/Heidelberg, Germany, 2018; pp. 91–104.

- Mukhametshin, S.; Makhmutova, A.; Anikin, I. Sensor tag detection, tracking and recognition for AR application. In Proceedings of the 2019 International Conference on Industrial Engineering, Applications and Manufacturing (ICIEAM), Tokyo, Japan, 12–15 April 2019; pp. 1–5.

- Santoni, F.; De Angelis, A.; Moschitta, A.; Carbone, P. MagIK: A Hand-Tracking Magnetic Positioning System Based on a Kinematic Model of the Hand. IEEE Trans. Instrum. Meas. 2021, 70, 1–13.

- Frikha, R.; Ejbali, R.; Zaied, M. Handling occlusion in augmented reality surgical training based instrument tracking. In Proceedings of the 2016 IEEE/ACS 13th International Conference of Computer Systems and Applications (AICCSA), Agadir, Morocco, 29 November–2 December 2016; pp. 1–5.

- Wang, M.; Shi, Q.; Song, S.; Meng, M.Q.H. A novel magnetic tracking approach for intrabody objects. IEEE Sensors J. 2020, 20, 4976–4984.

- Davis, F.D.; Bagozzi, R.P.; Warshaw, P.R. User acceptance of computer technology: A comparison of two theoretical models. Manag. Sci. 1989, 35, 982–1003.

- Davison, A.J.; Reid, I.D.; Molton, N.D.; Stasse, O. MonoSLAM: Real-time single camera SLAM. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1052–1067.

- De Smet, J. The Smart Contact Lens: From an Artificial Iris to a Contact Lens Display. Ph.D. Thesis, Ghent University, Ghent, Belgium, 2014.

- Dissanayake, M.G.; Newman, P.; Clark, S.; Durrant-Whyte, H.F.; Csorba, M. A solution to the simultaneous localization and map building (SLAM) problem. IEEE Trans. Robot. Autom. 2001, 17, 229–241.

- Dodgson, N.A. Autostereoscopic 3D displays. Computer 2005, 38, 31–36.

- De Smet, J.; Avci, A.; Joshi, P.; Schaubroeck, D.; Cuypers, D.; De Smet, H. Progress toward a liquid crystal contact lens display. J. Soc. Inf. Disp. 2013, 21, 399–406.

- Heidemann, G.; Bax, I.; Bekel, H. Multimodal interaction in an augmented reality scenario. In Proceedings of the 6th International Conference on Multimodal Interfaces, State College, PA, USA, 13–15 October 2004; pp. 53–60.

- Chakrabarty, A.; Morris, R.; Bouyssounouse, X.; Hunt, R. Autonomous indoor object tracking with the Parrot AR. Drone. In Proceedings of the 2016 International Conference on Unmanned Aircraft Systems (ICUAS), Arlington, VA, USA, 7–10 June 2016; pp. 25–30.

- Buttner, S.; Sand, O.; Rocker, C. Exploring design opportunities for intelligent worker assistance: A new approach using projetion-based AR and a novel hand-tracking algorithm. In Proceedings of the European Conference on Ambient Intelligence, Malaga, Spain, 26–28 April 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 33–45.

- Gupta, S.; Chaudhary, R.; Gupta, S.; Kaur, A.; Mantri, A. A survey on tracking techniques in augmented reality based application. In Proceedings of the 2019 Fifth International Conference on Image Information Processing (ICIIP), Shimla, India, 15–17 November 2019; pp. 215–220.

- Krishna, V.; Ding, Y.; Xu, A.; Höllerer, T. Multimodal biometric authentication for VR/AR using EEG and eye tracking. In Proceedings of the Adjunct of the 2019 International Conference on Multimodal Interaction, Suzhou, China, 14–18 October 2019; pp. 1–5.

- Dzsotjan, D.; Ludwig-Petsch, K.; Mukhametov, S.; Ishimaru, S.; Kuechemann, S.; Kuhn, J. The Predictive Power of Eye-Tracking Data in an Interactive AR Learning Environment. In Proceedings of the Adjunct Proceedings of the 2021 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2021 ACM International Symposium on Wearable Computers, Virtual, 21–26 September 2021; pp. 467–471.

- Chen, L.; Cao, C.; De la Torre, F.; Saragih, J.; Xu, C.; Sheikh, Y. High-fidelity Face Tracking for AR/VR via Deep Lighting Adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13059–13069.

- Rambach, J.; Pagani, A.; Schneider, M.; Artemenko, O.; Stricker, D. 6DoF object tracking based on 3D scans for augmented reality remote live support. Computers 2018, 7, 6.

- Ha, T.; Billinghurst, M.; Woo, W. An interactive 3D movement path manipulation method in an augmented reality environment. Interact. Comput. 2012, 24, 10–24.

- Syahidi, A.A.; Tolle, H.; Supianto, A.A.; Arai, K. AR-Child: Analysis, Evaluation, and Effect of Using Augmented Reality as a Learning Media for Preschool Children. In Proceedings of the 2019 5th International Conference on Computing Engineering and Design (ICCED), Purwokerto, Indonesia, 5–6 August 2019; pp. 1–6.

- Wang, X.; Dunston, P.S. User perspectives on mixed reality tabletop visualization for face-to-face collaborative design review. Autom. Constr. 2008, 17, 399–412.

- Wang, X.; Dunston, P.S. Comparative effectiveness of mixed reality-based virtual environments in collaborative design. IEEE Trans. Syst. Man Cybern. Part C 2011, 41, 284–296.

- Hauptmann, A.G. Speech and gestures for graphic image manipulation. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Austin, TX, USA, 30 April–4 June 1989; pp. 241–245.

- Heath, C.; Luff, P. Disembodied conduct: Communication through video in a multi-media office environment. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, New Orleans, LA, USA, 27 April–2 May 1991; pp. 99–103.

- Rambach, J.; Pagani, A.; Stricker, D. Augmented things: Enhancing AR applications leveraging the internet of things and universal 3D object tracking. In Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct), Nantes, France, 9–13 October 2017; pp. 103–108.

- Viyanon, W.; Songsuittipong, T.; Piyapaisarn, P.; Sudchid, S. AR furniture: Integrating augmented reality technology to enhance interior design using marker and markerless tracking. In Proceedings of the 2nd International Conference on Intelligent Information Processing, Bangkok Thailand, 17–18 July 2017; pp. 1–7.

- Turkan, Y.; Radkowski, R.; Karabulut-Ilgu, A.; Behzadan, A.H.; Chen, A. Mobile augmented reality for teaching structural analysis. Adv. Eng. Inform. 2017, 34, 90–100.

- Dorfmüller, K. Robust tracking for augmented reality using retroreflective markers. Comput. Graph. 1999, 23, 795–800.

- Danielsson, O.; Holm, M.; Syberfeldt, A. Augmented reality smart glasses for operators in production: Survey of relevant categories for supporting operators. Procedia CIRP 2020, 93, 1298–1303.

- Dörner, R.; Geiger, C.; Haller, M.; Paelke, V. Authoring mixed reality—A component and framework-based approach. In Entertainment Computing; Springer: Berlin/Heidelberg, Germany, 2003; pp. 405–413.

- Drascic, D.; Milgram, P. Positioning accuracy of a virtual stereographic pointer in a real stereoscopic video world. In Proceedings of the Stereoscopic Displays and Applications II, San Jose, CA, USA, 25–27 February 1991; Volume 1457, pp. 302–313.

- Drascic, D.; Grodski, J.J.; Milgram, P.; Ruffo, K.; Wong, P.; Zhai, S. ARGOS: A display system for augmenting reality. In Proceedings of the INTERACT’93 and CHI’93 Conference on Human Factors in Computing Systems, Amsterdam, The Netherlands, 24–29 April 1993; p. 521.

- Drascic, D.; Milgram, P. Perceptual issues in augmented reality. In Proceedings of the Stereoscopic Displays and Virtual Reality Systems III. International Society for Optics and Photonics, San Jose, CA, USA, 30 January–2 February 1996; Volume 2653, pp. 123–134.

- Dünser, A. Supporting low ability readers with interactive augmented reality. Annu. Rev. Cybertherapy Telemed. 2008, 6, 39–46.

- Dünser, A.; Hornecker, E. Lessons from an AR book study. In Proceedings of the 1st International Conference on Tangible and Embedded Interaction, Baton Rouge, LA, USA, 15–17 February 2007; pp. 179–182.

- Gibson, L.; Hanson, V.L. Digital motherhood: How does technology help new mothers? In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Paris, France, 27 April–2 May 2013; pp. 313–322.

- Wuest, H.; Engelke, T.; Wientapper, F.; Schmitt, F.; Keil, J. From CAD to 3D Tracking—Enhancing & Scaling Model-Based Tracking for Industrial Appliances. In Proceedings of the 2016 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct), Merida, Mexico, 19–23 September 2016; pp. 346–347.

- LaViola, J.J. A discussion of cybersickness in virtual environments. ACM SIGCHI Bull. 2000, 32, 47–56.

- Gao, Y.F.; Wang, H.Y.; Bian, X.N. Marker tracking for video-based augmented reality. In Proceedings of the 2016 International Conference on Machine Learning and Cybernetics (ICMLC), Jeju Island, Republic of Korea, 10–13 July 2016; Volume 2, pp. 928–932.

- Szalavári, Z.; Eckstein, E.; Gervautz, M. Collaborative gaming in augmented reality. In Proceedings of the ACM Symposium on Virtual Reality Software and Technology, Taipei, Taiwan, 2–5 November 1998; pp. 195–204.

- Dolata, M.; Agotai, D.; Schubiger, S.; Schwabe, G. Pen-and-paper rituals in service interaction: Combining high-touch and high-tech in financial advisory encounters. Proc. ACM Hum.-Comput. Interact. 2019, 3, 1–24.

- Butz, A.; Hollerer, T.; Feiner, S.; MacIntyre, B.; Beshers, C. Enveloping users and computers in a collaborative 3D augmented reality. In Proceedings of the 2nd IEEE and ACM International Workshop on Augmented Reality (IWAR’99), San Francisco, CA, USA, 20–21 October 1999; pp. 35–44.

- Müller, J.; Rädle, R.; Reiterer, H. Remote collaboration with mixed reality displays: How shared virtual landmarks facilitate spatial referencing. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, Denver, CO, USA, 6–11 May 2017; pp. 6481–6486.

- Gül, L.F. Studying gesture-based interaction on a mobile augmented reality application for co-design activity. J. Multimodal User Interfaces 2018, 12, 109–124.

- Benko, H.; Ishak, E.W.; Feiner, S. Collaborative mixed reality visualization of an archaeological excavation. In Proceedings of the Third IEEE and ACM International Symposium on Mixed and Augmented Reality, Arlington, VA, USA, 5 November 2004; pp. 132–140.

- Franz, J.; Alnusayri, M.; Malloch, J.; Reilly, D. A comparative evaluation of techniques for sharing AR experiences in museums. Proc. ACM Hum.-Comput. Interact. 2019, 3, 1–20.

- An, Z.; Xu, X.; Yang, J.; Liu, Y.; Yan, Y. Research of the three-dimensional tracking and registration method based on multiobjective constraints in an AR system. Appl. Opt. 2018, 57, 9625–9634.

- Oskiper, T.; Samarasekera, S.; Kumar, R. CamSLAM: Vision Aided Inertial Tracking and Mapping Framework for Large Scale AR Applications. In Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct), Nantes, France, 9–13 October 2017; pp. 216–217.

- Zhang, W.; Han, B.; Hui, P. Jaguar: Low latency mobile augmented reality with flexible tracking. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Republic of Korea, 22–26 October 2018; pp. 355–363.

- Tokusho, Y.; Feiner, S. Prototyping an outdoor mobile augmented reality street view application. In ISMAR Workshop on Outdoor Mixed and Augmented Reality; Citeseer: Princeton, NJ, USA, 2009; Volume 2.

- Henrysson, A.; Ollila, M. UMAR: Ubiquitous mobile augmented reality. In Proceedings of the 3rd International Conference on Mobile and Ubiquitous Multimedia, College Park, MD, USA, 27–29 October 2004; pp. 41–45.

- Henrysson, A.; Ollila, M.; Billinghurst, M. Mobile phone based AR scene assembly. In Proceedings of the 4th International Conference on Mobile and Ubiquitous Multimedia, Christchurch, New Zealand, 8–10 December 2005; pp. 95–102.

- Hilliges, O.; Kim, D.; Izadi, S.; Weiss, M.; Wilson, A. HoloDesk: Direct 3d interactions with a situated see-through display. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Austin, TX, USA, 5–10 May 2012; pp. 2421–2430.

- Ar, Y.; Ünal, M.; Sert, S.Y.; Bostanci, E.; Kanwal, N.; Güzel, M.S. Evolutionary Fuzzy Adaptive Motion Models for User Tracking in Augmented Reality Applications. In Proceedings of the 2018 2nd International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT), Ankara, Turkey, 19–21 October 2018; pp. 1–6.

- Ashutosh, K. Hardware Performance Analysis of Mobile-Based Augmented Reality Systems. In Proceedings of the 2020 International Conference on Computational Performance Evaluation (ComPE), Shillong, India, 2–4 July 2020; pp. 671–675.

- Hu, X.; Hua, H. Design of an optical see-through multi-focal-plane stereoscopic 3d display using freeform prisms. In Frontiers in Optics; Optical Society of America: Washington, DC, USA, 2012; p. FTh1F-2.

- Hourcade, J.P. Interaction Design and Children; Now Publishers Inc.: Delft, The Netherlands, 2008.

- Kang, D.; Ma, L. Real-Time Eye Tracking for Bare and Sunglasses-Wearing Faces for Augmented Reality 3D Head-Up Displays. IEEE Access 2021, 9, 125508–125522.

- Jeong, J.; Lee, C.K.; Lee, B.; Lee, S.; Moon, S.; Sung, G.; Lee, H.S.; Lee, B. Holographically printed freeform mirror array for augmented reality near-eye display. IEEE Photonics Technol. Lett. 2020, 32, 991–994.

- Park, S.g. Augmented and mixed reality optical see-through combiners based on plastic optics. Inf. Disp. 2021, 37, 6–11.

- Lee, Y.H.; Zhan, T.; Wu, S.T. Prospects and challenges in augmented reality displays. Virtual Real. Intell. Hardw. 2019, 1, 10–20.

- Jang, C.; Mercier, O.; Bang, K.; Li, G.; Zhao, Y.; Lanman, D. Design and fabrication of freeform holographic optical elements. ACM Trans. Graph. (TOG) 2020, 39, 1–15.

- Yu, C.; Peng, Y.; Zhao, Q.; Li, H.; Liu, X. Highly efficient waveguide display with space-variant volume holographic gratings. Appl. Opt. 2017, 56, 9390–9397.

- Gorovyi, I.M.; Sharapov, D.S. Advanced image tracking approach for augmented reality applications. In Proceedings of the 2017 Signal Processing Symposium (SPSympo), Auckland, New Zealand, 27–30 November 2017; pp. 1–5.

- Hix, D.; Gabbard, J.L.; Swan, J.E.; Livingston, M.A.; Hollerer, T.H.; Julier, S.J.; Baillot, Y.; Brown, D. A cost-effective usability evaluation progression for novel interactive systems. In Proceedings of the 37th Annual Hawaii International Conference on System Sciences, Big Island, HI, USA, 5–8 January 2004.

- Hodges, S.; Williams, L.; Berry, E.; Izadi, S.; Srinivasan, J.; Butler, A.; Smyth, G.; Kapur, N.; Wood, K. SenseCam: A retrospective memory aid. In Proceedings of the International Conference on Ubiquitous Computing, Seoul, Republic of Korea, 21–24 September 2008; Springer: Berlin/Heidelberg, Germany, 2006; pp. 177–193.

- Isham, M.I.M.; Mohamed, F.; Siang, C.V.; Yusoff, Y.A.; Abd Aziz, A.A.; Dewi, D.E.O. A framework of ultrasounds image slice positioning and orientation in 3D augmented reality environment using hybrid tracking method. In Proceedings of the 2018 IEEE Conference on Big Data and Analytics (ICBDA), Seattle, WA, USA, 10–13 December 2018; pp. 105–110.

- Park, J.H.; Kim, S.B. Optical see-through holographic near-eye-display with eyebox steering and depth of field control. Opt. Express 2018, 26, 27076–27088.

- Chakravarthula, P.; Peng, Y.; Kollin, J.; Fuchs, H.; Heide, F. Wirtinger holography for near-eye displays. ACM Trans. Graph. (TOG) 2019, 38, 1–13.

- Peng, Y.; Choi, S.; Padmanaban, N.; Wetzstein, G. Neural holography with camera-in-the-loop training. ACM Trans. Graph. (TOG) 2020, 39, 1–14.

- Ruan, W.; Yao, L.; Sheng, Q.Z.; Falkner, N.J.; Li, X. Tagtrack: Device-free localization and tracking using passive rfid tags. In Proceedings of the 11th International Conference on Mobile and Ubiquitous Systems: Computing, Networking and Services, London, UK, 2–5 December 2014; pp. 80–89.

- Ellis, S.R.; Menges, B.M. Studies of the Localization of Virtual Objects in the Near Visual Field. In Fundamentals of Wearable Computers and Augmented Reality, 1st ed.; CRC Press: Boca Raton, FL, USA, 2001.

- Evennou, F.; Marx, F. Advanced integration of WiFi and inertial navigation systems for indoor mobile positioning. EURASIP J. Adv. Signal Process. 2006, 2006, 1–11.

- Yang, X.; Fan, X.; Wang, J.; Yin, X.; Qiu, S. Edge-based cover recognition and tracking method for an AR-aided aircraft inspection system. Int. J. Adv. Manuf. Technol. 2020, 111, 3505–3518.

- Kang, D.; Heo, J.; Kang, B.; Nam, D. Pupil detection and tracking for AR 3D under various circumstances. Electron. Imaging 2019, 2019, 55-1–55-5.

- Bach, B.; Sicat, R.; Pfister, H.; Quigley, A. Drawing into the AR-CANVAS: Designing embedded visualizations for augmented reality. In Workshop on Immersive Analytics; IEEE Vis: Piscataway, NJ, USA, 2017.

- Zeng, H.; He, X.; Pan, H. FunPianoAR: A novel AR application for piano learning considering paired play based on multi-marker tracking. J. Phys. Conf. Ser. 2019, 1229, 012072.

- Rewkowski, N.; State, A.; Fuchs, H. Small Marker Tracking with Low-Cost, Unsynchronized, Movable Consumer Cameras For Augmented Reality Surgical Training. In Proceedings of the 2020 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Recife, Brazil, 9–13 November 2020; pp. 90–95.

- Hoffman, H.G. Physically touching virtual objects using tactile augmentation enhances the realism of virtual environments. In Proceedings of the IEEE 1998 Virtual Reality Annual International Symposium (Cat. No. 98CB36180), Atlanta, GA, USA, 14–18 March 1998; pp. 59–63.

- Mao, W.; He, J.; Qiu, L. Cat: High-precision acoustic motion tracking. In Proceedings of the 22nd Annual International Conference on Mobile Computing and Networking, New York, NY, USA, 3–7 October 2016; pp. 69–81.

- Höllerer, T.; Wither, J.; DiVerdi, S. “Anywhere augmentation”: Towards mobile augmented reality in unprepared environments. In Location Based Services and TeleCartography; Springer: Berlin/Heidelberg, Germany, 2007; pp. 393–416.

- Hong, J. Considering privacy issues in the context of Google glass. Commun. ACM 2013, 56, 10–11.

- Hua, H.; Brown, L.D.; Gao, C.; Ahuja, N. A new collaborative infrastructure: SCAPE. In Proceedings of the IEEE Virtual Reality, 2003. Proceedings, Los Angeles, CA, USA, 22–26 March 2003; IEEE: Piscataway, NJ, USA, 2003; pp. 171–179.

- Huang, Y.; Weng, D.; Liu, Y.; Wang, Y. Key issues of wide-area tracking system for multi-user augmented reality adventure game. In Proceedings of the 2009 Fifth International Conference on Image and Graphics, Xi’an, China, 20–23 September 2009; pp. 646–651.

- Huber, M.; Pustka, D.; Keitler, P.; Echtler, F.; Klinker, G. A system architecture for ubiquitous tracking environments. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; pp. 211–214.

- Hugues, O.; Fuchs, P.; Nannipieri, O. New augmented reality taxonomy: Technologies and features of augmented environment. In Handbook of Augmented Reality; Springer: Berlin/Heidelberg, Germany, 2011; pp. 47–63.

- Inami, M.; Kawakami, N.; Sekiguchi, D.; Yanagida, Y.; Maeda, T.; Tachi, S. Visuo-haptic display using head-mounted projector. In Proceedings of the Proceedings IEEE Virtual Reality 2000 (Cat. No. 00CB37048), New Brunswick, NJ, USA, 18–22 March 2000; IEEE: Piscataway, NJ, USA, 2000; pp. 233–240.

- Ekman, P.; Friesen, W.V. The Repertoire of Nonverbal Behavior: Categories, Origins, Usage, and Coding; De Gruyter Mouton: Berlin, Germany, 2010.

- Ellis, S.R.; Menges, B.M. Judgments of the distance to nearby virtual objects: Interaction of viewing conditions and accommodative demand. Presence Teleoperators Virtual Environ. 1997, 6, 452–460.