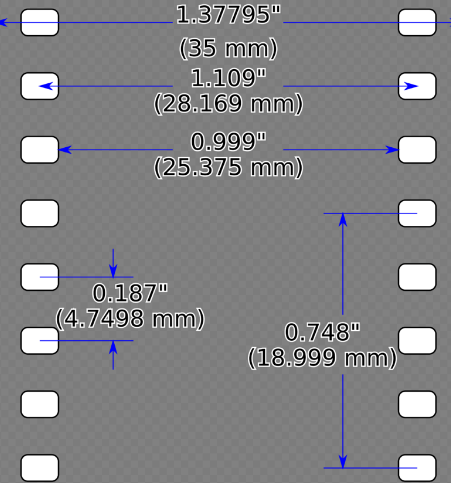

35 mm film is the film gauge most commonly used for motion pictures and chemical still photography (see 135 film). The name of the gauge refers to the width of the photographic film, which consists of strips 34.98 ±0.03 mm (1.377 ±0.001 inches) wide.[fn 1] The standard negative pulldown for movies ("single-frame" format) is four perforations per frame along both edges, which results in 16 frames per foot of film. For still photography, the standard frame has eight perforations on each side.

A variety of largely proprietary gauges were devised for the numerous camera and projection systems being developed independently in the late 19th century and early 20th century, ranging from 13 to 75 mm (0.51 to 2.95 in), as well as a variety of film feeding systems. As a result, cameras, projectors, and other equipment had to be calibrated to each gauge. The 35 mm width, originally specified as 1 3⁄8 inches, was introduced in 1892 by William Dickson and Thomas Edison, using 120 film stock supplied by George Eastman.[fn 1] Film 35 mm wide with four perforations per frame became accepted as the international standard gauge in 1909. It remained by far the dominant film gauge for image origination and projection until the advent of digital photography and cinematography, despite challenges from smaller and larger gauges, because its size allowed for a relatively good trade-off between the cost of the film stock and the quality of the images captured.

The gauge has been versatile in application. It has been modified to include sound, redesigned to create a safer film base, formulated to capture color, adapted to a bevy of widescreen formats, and made to carry digital sound data in nearly all of its non-frame areas. Eastman Kodak, Fujifilm and Agfa-Gevaert are some of the companies that have offered 35 mm films. Today Kodak is the last remaining manufacturer of motion picture film.

The ubiquity of 35 mm movie projectors in commercial movie theaters made 35 mm the only motion picture format that could be played in almost any cinema in the world, until digital projection largely superseded it in the 21st century. It is difficult to compare the quality of film to digital media, but a good estimate is that one high-quality 35 mm color frame is equivalent to about 33.6 megapixels (67.2 megapixels DSLR Bayer equivalent).

1. Early History

In 1880, George Eastman began to manufacture gelatin dry photographic plates in Rochester, New York. Along with W. H. Walker, Eastman invented a holder for a roll of picture-carrying gelatin layer-coated paper. Hannibal Goodwin's invention of nitrocellulose film base in 1887 was the first transparent, flexible film.[1][2] Eastman's was the first major company, however, to mass-produce these components, when in 1889 Eastman realized that the dry-gelatino-bromide emulsion could be coated onto this clear base, eliminating the paper.[3]

With the advent of flexible film, Thomas Edison quickly set out on his invention, the Kinetoscope, which was first shown at the Brooklyn Institute of Arts and Sciences on 9 May 1893.[5] The Kinetoscope was a film loop system intended for one-person viewing.[6] Edison, along with assistant W. K. L. Dickson, followed that up with the Kinetophone, which combined the Kinetoscope with Edison's cylinder phonograph. Beginning in March 1892, Eastman and then, from April 1893 into 1896, New York's Blair Camera Co. supplied Edison with film stock. At first Blair would supply only 40 mm (1 9⁄16 in) film stock that would be trimmed and perforated at the Edison lab to create 1 3⁄8 in (34.925 mm) gauge filmstrips, then at some point in 1894 or 1895, Blair began sending stock to Edison that was cut exactly to specification.[7][8] Edison's aperture defined a single frame of film at 4 perforations high.[9] Edison claimed exclusive patent rights to his design of 35 mm motion picture film, with four sprocket holes per frame, forcing his only major filmmaking competitor, American Mutoscope & Biograph, to use a 68 mm film that used friction feed, not sprocket holes, to move the film through the camera. A court judgment in March 1902 invalidated Edison's claim, allowing any producer or distributor to use the Edison 35 mm film design without license. Filmmakers were already doing so in Britain and Europe, where Edison had failed to file patents.[10]

At the time, film stock was usually supplied unperforated and punched by the filmmaker to their standards with perforation equipment. A variation developed by the Lumière Brothers used a single circular perforation on each side of the frame towards the middle of the horizontal axis.[11] It was Edison's format, however, that became first the dominant standard and then the "official" standard of the newly formed Motion Picture Patents Company, a trust established by Edison, which agreed in 1909 to what would become the standard: 35 mm gauge, with Edison perforations and a 1.33:1 (4:3) aspect ratio.[12] Scholar Paul C. Spehr describes the importance of these developments:

> The early acceptance of 35 mm as a standard had momentous impact on the development and spread of cinema. The standard gauge made it possible for films to be shown in every country of the world… It provided a uniform, reliable and predictable format for production, distribution and exhibition of movies, facilitating the rapid spread and acceptance of the movies as a world-wide device for entertainment and communication.[8]

The film format was introduced into still photography as early as 1913 (the Tourist Multiple) but first became popular with the launch of the Leica camera, created by Oskar Barnack in 1925.[13]

1.1. Amateur Interest

The costly image-forming silver compounds in a film stock's emulsion meant from the start that 35 mm filmmaking was to be an expensive hobby with a high barrier to entry for the public at large. Furthermore, the nitrocellulose film base of all early film stock was highly flammable, creating considerable risk for those not accustomed to the precautions necessary in its handling. The cost of film stock was directly proportional to its surface area, so a smaller film gauge for amateur use was the obvious path to affordability. The downside was that smaller images were less sharp and detailed, and because less light could be put through them in the finished film, the size of an acceptably bright projected image was also limited.

Birt Acres was the first to attempt an amateur format, creating Birtac in 1898 by slitting the film into 17.5 mm widths. By the early 1920s, several formats had successfully split the amateur home movies market away from 35 mm: 28 mm (1.1 in) (1912), 9.5 mm (0.37 in) (1922), 16 mm (0.63 in) (1923), and Pathé Rural, a 17.5 mm format designed for safety film (1926). Eastman Kodak's 16 mm format won the amateur market and is still widely in use today, mainly in the Super 16 variation, which remains popular with professional filmmakers. The 16 mm size was specifically chosen to prevent third-party slitting, as it was easy to create 17.5 mm stock by slitting 35 mm stock in two. It also was the first major format to be released with only fireproof cellulose diacetate (and later cellulose triacetate) "safety film" base. This amateur market would be further diversified by the introduction of 8 mm film (0.31 in) in 1932, intended for amateur filmmaking and "home movies".[14] By law, 16 mm and 8 mm gauge stock (and 35 mm films intended for non-theatrical use) had to be manufactured on safety stock. The effect of these gauges was to make the 35 mm gauge almost the exclusive province of professional filmmakers, a divide which mostly remains to this day.

1.2. Still Cameras

Just as the format was recognized as a standard in 1909, still film cameras were developed that took advantage of the 35 mm format and allowed a large number of exposures for each length of film loaded into the camera. The frame size was increased to 24×36 mm. (This increase is on the same gauge since the stills are shot horizontally instead of vertically. 24 mm from perforation to perforation, 36 mm along an 8-perforation segment of the 35 mm film stock.) Although the first design was patented as early as 1908, the first commercial 35 mm camera was the 1913 Tourist Multiple, for movie and still photography, soon followed by the Simplex providing selection between full and half frame format. Oskar Barnack built his prototype Ur-Leica in 1913 and had it patented, but Ernst Leitz did not decide to produce it before 1924. The first Leica camera to be fully standardised was the Leica Standard of 1932.[15]

2. How Film Works

Inside the photographic emulsion are millions of light-sensitive silver halide crystals. Each crystal is a compound of silver plus a halogen (such as bromine, iodine or chlorine) held together in a cubical arrangement by electrical attraction. When the crystal is struck with light, free-moving silver ions build up a small collection of uncharged atoms. These small bits of silver, too small to even be visible under a microscope, are the beginning of a latent image. Developing chemicals use the latent image specks to build up density, an accumulation of enough metallic silver to create a visible image.[16]

The emulsion is attached to the film base with a transparent adhesive called the subbing layer. On the back of the base is a layer called the anti-halation backing, which usually contains absorber dyes or a thin layer of silver or carbon (called rem-jet on color negative stocks). Without this coating, light not absorbed by the emulsion and passing into the base would be partly reflected back at the outer surface of the base, re-exposing the emulsion in less focused form and thereby creating halos around bright points and edges in the image. The anti-halation backing can also serve to reduce static buildup, which could be a significant problem with early black-and-white films. The film, running through a motion picture camera at 12 inches (300 mm) (early silent speed) to 18 inches (460 mm) (sound speed) per second, could build up enough static electricity to cause sparks bright enough to record their own forms on the film; anti-halation backing solved this problem.
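The running speeds quoted above follow from the 16 frames per foot of 4-perf film given in the introduction. A minimal Python sketch of that arithmetic is shown below. The frame rates of 16 and 24 frames per second are assumed here as the usual figures for silent and sound speed (they are not stated in this article), and the function name is illustrative.

```python
FRAMES_PER_FOOT = 16   # 4-perf 35 mm, as stated in the introduction
INCHES_PER_FOOT = 12


def film_speed_inches_per_second(frames_per_second: float) -> float:
    """Linear film speed through the camera gate for 4-perf 35 mm film."""
    feet_per_second = frames_per_second / FRAMES_PER_FOOT
    return feet_per_second * INCHES_PER_FOOT


# Assumed frame rates: 16 fps (early silent) and 24 fps (sound).
for label, fps in (("early silent", 16), ("sound", 24)):
    speed = film_speed_inches_per_second(fps)
    print(f"{label}: {fps} fps -> {speed:.0f} in/s")
```

Under those assumed frame rates the sketch reproduces the 12 and 18 inches per second given in the text.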

Color films have multiple layers of silver halide emulsion to separately record the red, green and blue thirds of the spectrum. For every silver halide grain there is a matching color coupler grain (except Kodachrome film, to which color couplers were added during processing). The top layer of emulsion is sensitive to blue; below it is a yellow filter layer to block blue light; and under that is a green-sensitive layer followed by a red-sensitive layer. Just as in black-and-white, the first step in color development converts exposed silver halide grains into metallic silver – except that an equal amount of color dye will be formed as well. The color couplers in the blue-sensitive layer will form yellow dye during processing, the green layer will form magenta dye and the red layer will form cyan dye. A bleach step will convert the metallic silver back into silver halide, which is then removed along with the unexposed silver halide in the fixer and wash steps, leaving only color dyes.[17]

In the 1980s Eastman Kodak invented the T-Grain, a synthetically manufactured silver halide grain that had a larger, flat surface area and allowed for greater light sensitivity in a smaller, thinner grain. Thus Kodak could solve the problem of higher speed (greater light sensitivity—see film speed) which required larger grain and therefore more "grainy" images. With T-Grain technology, Kodak refined the grain structure of all their "EXR" line of motion picture film stocks[18] (which was eventually incorporated into their "MAX" still stocks). Fuji films followed suit with their own grain innovation, the tabular grain in their SUFG (Super Unified Fine Grain) SuperF negative stocks, which are made up of thin hexagonal tabular grains.[19]

3. Attributes

3.1. Color

Originally, film was a strip of cellulose nitrate coated with black-and-white photographic emulsion.[6] Early film pioneers, like D. W. Griffith, color tinted or toned portions of their movies for dramatic impact, and by 1920, 80 to 90 percent of all films were tinted.[20] The first successful natural color process was Britain's Kinemacolor (1908–1914), a two-color additive process that used a rotating disk with red and green filters in front of the camera lens and the projector lens.[21][22] But any process that photographed and projected the colors sequentially was subject to color "fringing" around moving objects, and a general color flickering.[23]

In 1916, William Van Doren Kelley began developing Prizma, the first commercially viable American color process using 35 mm film. Initially, like Kinemacolor, it photographed the color elements one after the other and projected the results by additive synthesis. Ultimately, Prizma was refined to bipack photography, with two strips of film, one treated to be sensitive to red and the other not, running through the camera face to face. Each negative was printed on one surface of the same duplitized print stock and each resulting series of black-and-white images was chemically toned to transform the silver into a monochrome color, either orange-red or blue-green, resulting in a two-sided, two-colored print that could be shown with any ordinary projector. This system of two-color bipack photography and two-sided prints was the basis for many later color processes, such as Multicolor, Brewster Color and Cinecolor.

Although it had been available previously, color in Hollywood feature films first became truly practical from the studios' commercial perspective with the advent of Technicolor, whose main advantage was quality prints in less time than its competitors. In its earliest incarnations, Technicolor was another two-color system that could reproduce a range of reds, muted bluish greens, pinks, browns, tans and grays, but not real blues or yellows. The Toll of the Sea, released in 1922, was the first film printed in their subtractive color system. Technicolor's camera photographed each pair of color-filtered frames simultaneously on one strip of black-and-white film by means of a beam splitter prism behind the camera lens. Two prints on half-thickness stock were made from the negative, one from only the red-filtered frames, the other from the green-filtered frames. After development, the silver images on the prints were chemically toned to convert them into images of the approximately complementary colors. The two strips were then cemented together back to back, forming a single strip similar to duplitized film.

In 1928, Technicolor started making their prints by the imbibition process, which was mechanical rather than photographic and allowed the color components to be combined on the same side of the film. Using two matrix films bearing hardened gelatin relief images, thicker where the image was darker, aniline color dyes were transferred into the gelatin coating on a third, blank strip of film.

Technicolor re-emerged as a three-color process for cartoons in 1932 and live action in 1934. Using a different arrangement of a beam-splitter cube and color filters behind the lens, the camera simultaneously exposed three individual strips of black-and-white film, each one recording one-third of the spectrum, which allowed virtually the entire spectrum of colors to be reproduced.[24] A printing matrix with a hardened gelatin relief image was made from each negative, and the three matrices transferred color dyes into a blank film to create the print.[25]

Two-color processes, however, were far from extinct. In 1934, William T. Crispinel and Alan M. Gundelfinger revived the Multicolor process under the company name Cinecolor. Cinecolor saw considerable use in animation and low-budget pictures, mainly because it cost much less than three-color Technicolor. If color design was carefully managed, the lack of colors such as true green could pass unnoticed. Although Cinecolor used the same duplitized stock as Prizma and Multicolor, it had the advantage that its printing and processing methods yielded larger quantities of finished film in less time.

In 1950 Kodak announced the first Eastman color 35 mm negative film (along with a complementary positive film) that could record all three primary colors on the same strip of film.[26] An improved version in 1952 was quickly adopted by Hollywood, making the use of three-strip Technicolor cameras and bipack cameras (used in two-color systems such as Cinecolor) obsolete in color cinematography. This "monopack" structure is made up of three separate emulsion layers, one sensitive to red light, one to green and one to blue.

3.2. Safety Film

Although Eastman Kodak had first introduced acetate-based film, it was far too brittle and prone to shrinkage, so the dangerously flammable nitrate-based cellulose films were generally used for motion picture camera and print films. In 1949 Kodak began replacing all nitrocellulose (nitrate-based) films with the safer, more robust cellulose triacetate-based "Safety" films. In 1950 the Academy of Motion Picture Arts and Sciences awarded Kodak a Scientific and Technical Academy Award (Oscar) for the safer triacetate stock.[27] By 1952, all camera and projector films were triacetate-based.[14] Most if not all film prints today are made from synthetic polyester safety base (which started replacing triacetate film for prints in the early 1990s). The downside of polyester film is that it is extremely strong and, in case of a fault, will stretch rather than break, potentially causing damage to the projector and ruining a fairly large stretch of film: 2–3 ft or approximately 2 seconds. Also, polyester film will melt if exposed to the projector lamp for too long. Original camera negative is still made on a triacetate base, and some intermediate films (certainly including internegatives or "dupe" negatives, but not necessarily including interpositives or "master" positives) are also made on a triacetate base, as such films must be spliced during the "negative assembly" process, and the extant negative assembly process is solvent-based. Polyester films are not compatible with solvent-based assembly processes.

3.3. Other Types

Besides black & white and color negative films, there are black & white and color reversal films, which when developed create a positive ("natural") image that is projectable. There are also films sensitive to non-visible wavelengths of light, such as infrared.

4. Common Formats

4.1. Academy Format

In the conventional motion picture format, frames are four perforations tall, with an aspect ratio of 1.375:1, 22 by 16 mm (0.866 by 0.630 in). This is a derivation of the aspect ratio and frame size designated by Thomas Edison (24.89 mm by 18.67 mm or 0.980 in by 0.735 in) at the dawn of motion pictures, which was an aspect ratio of 1.33:1.[28] The first sound features were released in 1926–27, and while Warner Bros. was using synchronized phonograph discs (sound-on-disc), Fox placed the soundtrack in an optical record directly on the film (sound-on-film) on a strip between the sprocket holes and the image frame.[29] "Sound-on-film" was soon adopted by the other Hollywood studios, resulting in an almost square image ratio of 0.860 in by 0.820 in.[30]

By 1929, most movie studios had revamped this format using their own house aperture plate size to try to recreate the older screen ratio of 1.33:1. Furthermore, every theater chain had their own house aperture plate size in which the picture was projected. These sizes often did not match up even between theaters and studios owned by the same company, and therefore, uneven projection practices occurred.[30]

In November 1929, the Society of Motion Picture Engineers set a standard aperture ratio of 0.800 in by 0.600 in, which became known as the "1930 standard." The studios that followed the suggested practice of marking their camera viewfinders for this ratio were Paramount-Famous-Lasky, Metro-Goldwyn-Mayer, United Artists, Pathé, Universal, RKO, Tiffany-Stahl, Mack Sennett, Darmour, and Educational. The Fox Studio markings were the same width but allowed 0.04 in more height.[31]

In 1932, the Academy of Motion Picture Arts and Sciences refined and expanded upon this 1930 standard. The camera aperture became 22 by 16 mm (0.87 by 0.63 in), and the projected image would use an aperture plate size of 0.825 by 0.600 in (21.0 by 15.2 mm), yielding an aspect ratio of 1.375:1. This became known as the "Academy" ratio, named after the Academy.[32] Since the 1950s the aspect ratio of some theatrically released motion picture films has been 1.85:1 (1.66:1 in Europe) or 2.35:1 (2.40:1 after 1970). The image area for "TV transmission" is slightly smaller than the full "Academy" ratio at 21 by 16 mm (0.83 by 0.63 in), an aspect ratio of 1.33:1. Hence, referring to the "Academy" ratio as 1.33:1 is a mistake.[32]
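The aspect ratios in this section can be checked directly from the aperture dimensions quoted in the text. The following Python sketch does so; the dictionary labels and the function name are illustrative choices, and the dimensions are simply those given above, in inches.

```python
def aspect_ratio(width: float, height: float) -> float:
    """Return the width-to-height ratio of a film aperture."""
    return width / height


# Aperture dimensions in inches, as quoted in this section.
apertures = {
    "Edison silent frame": (0.980, 0.735),
    "Early sound-on-film frame": (0.860, 0.820),
    "1930 standard": (0.800, 0.600),
    "Academy projector aperture (1932)": (0.825, 0.600),
}

for name, (width, height) in apertures.items():
    print(f"{name}: {aspect_ratio(width, height):.3f}:1")
```

The early sound-on-film frame comes out close to square, while the 1932 projector aperture gives the 1.375:1 figure rather than 1.33:1.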

4.2. Widescreen

The commonly used anamorphic format uses a similar four-perf frame, but an anamorphic lens is used on the camera and projector to produce a wider image, today with an aspect ratio of about 2.39:1 (more commonly referred to as 2.40:1). The ratio was 2.35:1 (and is still often mistakenly referred to as such) until an SMPTE revision of projection standards in 1970.[33] The image, as recorded on the negative and print, is horizontally compressed (squeezed) by a factor of 2.[34]

The unexpected success of the Cinerama widescreen process in 1952 led to a boom in film format innovations to compete with the growing audiences of television and the dwindling audiences in movie theaters. These processes could give theatergoers an experience that television could not at that time: color, stereophonic sound and panoramic vision. Before the end of the year, 20th Century Fox had narrowly "won" a race to obtain an anamorphic optical system invented by Henri Chrétien, and began promoting the CinemaScope technology as early as the production phase.[35]

Looking for a similar alternative, other major studios hit upon a simpler, less expensive solution by April 1953: the camera and projector used conventional spherical lenses (rather than much more expensive anamorphic lenses), but by using a removable aperture plate in the film projector gate, the top and bottom of the frame could be cropped to create a wider aspect ratio. Paramount Studios began this trend with their aspect ratio of 1.66:1, first used in Shane, which was originally shot for Academy ratio.[36] It was Universal Studios, however, with their May release of Thunder Bay, that introduced the now standard 1.85:1 format to American audiences and brought to the industry's attention the capability and low cost of equipping theaters for this transition.

Other studios followed suit with aspect ratios of 1.75:1 up to 2:1. For a time, these various ratios were used by different studios in different productions, but by 1956, the aspect ratio of 1.85:1 became the "standard" US format. These flat films are photographed with the full Academy frame, but are matted (most often with a mask in the theater projector, not in the camera) to obtain the "wide" aspect ratio. The standard, in some European countries, became 1.66:1 instead of 1.85:1, although some productions with pre-determined American distributors composed for the latter to appeal to US markets.

In September 1953, 20th Century Fox debuted CinemaScope with their production of The Robe to great success.[37] CinemaScope became the first marketable usage of an anamorphic widescreen process and became the basis for a host of "formats," usually suffixed with -scope, that were otherwise identical in specification, although sometimes inferior in optical quality. (Some developments, such as SuperScope and Techniscope, however, were truly entirely different formats.) By the early 1960s, however, Panavision would eventually solve many of the CinemaScope lenses' technical limitations with their own lenses,[34] and by 1967, CinemaScope was replaced by Panavision and other third-party manufacturers.[38]

The 1950s and 1960s saw many other novel processes using 35 mm, such as VistaVision, SuperScope, and Technirama, most of which ultimately became obsolete. VistaVision, however, would be revived decades later by Lucasfilm and other studios for special effects work, while a SuperScope variant became the predecessor to the modern Super 35 format that is popular today.

4.3. Super 35

The concept behind Super 35 originated with the Tushinsky Brothers' SuperScope format, particularly the SuperScope 235 specification from 1956. In 1982, Joe Dunton revived the format for Dance Craze, and Technicolor soon marketed it under the name "Super Techniscope" before the industry settled on the name Super 35.[39] The central driving idea behind the process is to return to shooting in the original silent "Edison" 1.33:1 full 4-perf negative area (24.89 mm by 18.67 mm or 0.980 in by 0.735 in), and then crop the frame either from the bottom or the center (like 1.85:1) to create a 2.40:1 aspect ratio (matching that of anamorphic lenses) with an area of 24 by 10 mm (0.94 by 0.39 in). Although this cropping may seem extreme, by expanding the negative area out perf-to-perf, Super 35 creates a 2.40:1 aspect ratio with an overall negative area of 240 mm² (0.372 sq in), only 9 mm² (0.014 sq in) less than the 1.85:1 crop of the Academy frame (248.81 mm² or 0.386 sq in).[40] The cropped frame is then converted at the intermediate stage to a 4-perf anamorphically squeezed print compatible with the anamorphic projection standard. This allows an "anamorphic" frame to be captured with non-anamorphic lenses, which are much more common. Up to 2000, once the film was photographed in Super 35, an optical printer was used to anamorphose (squeeze) the image.
This optical step reduced the overall quality of the image and made Super 35 a controversial subject among cinematographers, many of whom preferred the higher image quality and frame negative area of anamorphic photography (especially with regard to granularity).[40] With the advent of digital intermediates (DI) at the beginning of the 21st century, however, Super 35 photography has become even more popular, since everything can be done digitally: the original 4-perf 1.33:1 (or 3-perf 1.78:1) picture is scanned and cropped to the 2.39:1 frame in the computer, without anamorphosing stages and without creating an additional optical generation with increased grain. Creating the aspect ratio in the computer allows the studios to perform all post-production and editing of the movie in its original aspect ratio (1.33:1 or 1.78:1) and then release the cropped version, while still having the original when necessary (for Pan & Scan, HDTV transmission, etc.).
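The area comparison made for Super 35 can be reproduced with a few lines of Python. This is only a sketch of the arithmetic: the 24 by 10 mm crop and the 248.81 mm² figure for the 1.85:1 Academy crop are taken as quoted in the text rather than derived, and the variable names are illustrative.

```python
# Super 35 crop for 2.40:1, in millimetres, as quoted in the text.
SUPER35_CROP_MM = (24.0, 10.0)

# Area of the 1.85:1 crop of the Academy frame, as quoted in the text.
ACADEMY_185_AREA_MM2 = 248.81

width, height = SUPER35_CROP_MM
super35_area = width * height        # negative area used by the crop
super35_ratio = width / height       # resulting aspect ratio
shortfall = ACADEMY_185_AREA_MM2 - super35_area

print(f"Super 35 crop: {super35_area:.0f} mm^2 at {super35_ratio:.2f}:1")
print(f"Shortfall against the 1.85:1 Academy crop: {shortfall:.2f} mm^2")
```

The shortfall of roughly 9 mm² is under 4 percent of the Academy crop, which is why the cropping is less drastic than it first appears.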

4.4. 3-Perf

The non-anamorphic widescreen ratios (most commonly 1.85:1) used in modern feature films make inefficient use of the available image area on 35 mm film using the standard 4-perf pulldown: the height of a 1.85:1 frame occupies only 65% of the distance between the frames. A change to a 3-perf pulldown would therefore allow for a 25% reduction in film consumption whilst still accommodating the full 1.85:1 frame. Ever since the introduction of these widescreen formats in the 1950s, various film directors and cinematographers have argued in favour of the industry making such a change. The Canadian cinematographer Miklos Lente invented and patented a three-perforation pulldown system, which he called "Trilent 35", in 1975, though he was unable to persuade the industry to adopt it.[41]

The idea was later taken up by the Swedish film-maker Rune Ericson, who was a strong advocate for the 3-perf system.[42] Ericson shot his 51st feature, Pirates of the Lake, in 1986 using two Panaflex cameras modified to 3-perf pulldown and suggested that the industry could change over completely over the course of ten years. However, the movie industry did not make the change, mainly because it would have required the modification of the thousands of existing 35 mm projectors in movie theaters all over the world. Whilst it would have been possible to shoot in 3-perf and then convert to standard 4-perf for release prints, the extra complications this would cause and the additional optical printing stage required made this an unattractive option at the time for most film makers.

However, in television production, where compatibility with an installed base of 35 mm film projectors is unnecessary, the 3-perf format is sometimes used, giving—if used with Super 35—the 16:9 ratio used by HDTV and reducing film usage by 25 percent. Because of 3-perf's incompatibility with standard 4-perf equipment, it can utilize the whole negative area between the perforations (Super 35 mm film) without worrying about compatibility with existing equipment; the Super 35 image area includes what would be the soundtrack area in a standard print.[43] All 3-perf negatives require optical or digital conversion to standard 4-perf if a film print is desired, though 3-perf can easily be transferred to video with little to no difficulty by modern telecine or film scanners. With digital intermediate now a standard process for feature film post-production, 3-perf is becoming increasingly popular for feature film productions which would otherwise be averse to an optical conversion stage.[44]
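The 25 percent saving attributed to 3-perf pulldown follows from the perforation count alone. The Python sketch below works through an example; it assumes 64 perforations per foot (16 four-perf frames per foot, as given in the introduction), and the 24 frames per second rate and 90-minute running time are hypothetical values chosen only for illustration.

```python
PERFS_PER_FOOT = 64  # 16 frames per foot x 4 perforations per frame


def footage_feet(frame_count: int, perfs_per_frame: int) -> float:
    """Length of 35 mm film, in feet, needed for a given number of frames."""
    return frame_count * perfs_per_frame / PERFS_PER_FOOT


# Hypothetical example: a 90-minute film at an assumed 24 frames per second.
frames = 90 * 60 * 24

four_perf = footage_feet(frames, 4)
three_perf = footage_feet(frames, 3)
saving = 1 - three_perf / four_perf

print(f"4-perf: {four_perf:.0f} ft, 3-perf: {three_perf:.0f} ft")
print(f"Saving: {saving:.0%}")
```

The saving is independent of the running time and frame rate, since it is simply the ratio of three perforations to four.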

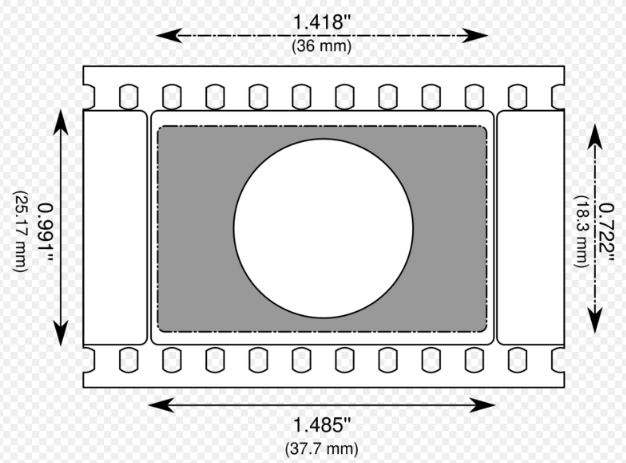

4.5. VistaVision

The VistaVision motion picture format was created in 1954 by Paramount Pictures to create a finer-grained negative and print for flat widescreen films.[45] Similar to still photography, the format uses a camera running 35 mm film horizontally instead of vertically through the camera, with frames that are eight perforations long, resulting in a wider aspect ratio of 1.5:1 and greater detail, as more of the negative area is used per frame.[40] This format is unprojectable in standard theaters and requires an optical step to reduce the image into the standard 4-perf vertical 35 mm frame.[46]

While the format was dormant by the early 1960s, the camera system was revived for visual effects by John Dykstra at Industrial Light and Magic, starting with Star Wars, as a way of reducing granularity in the optical printer by having increased original camera negative area at the point of image origination.[47] Its usage has again declined since the dominance of computer-based visual effects, although it still sees limited utilization.[48]

5. Perforations

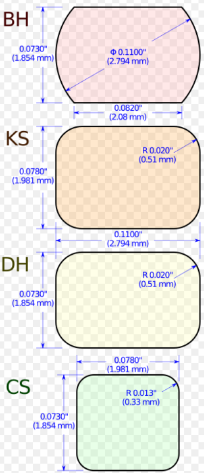

- BH perfs

- Film perforations were originally round holes cut into the side of the film, but as these perforations were subject to wear and deformation, the shape was changed to what is now called the Bell & Howell (BH) perforation, which has straight top and bottom edges and outward curving sides. The BH perforation's dimensions are 0.110 inches (2.8 mm) from the middle of the side curve to opposite top corner by 0.073 inches (1.9 mm) in height.[49] The BH1866 perforation, or BH perforation with a pitch of 0.1866 inches (4.74 mm), is the modern standard for negative and internegative films.[50]

- KS perfs

- Because BH perfs have sharp corners, the repeated use of the film through intermittent movement projectors creates strain that can easily tear the perforations. Furthermore, they tended to shrink as the print slowly decayed. Therefore, larger perforations with a rectangular base and rounded corners were introduced by Kodak in 1924 to improve steadiness, registration, durability, and longevity. Known as "Kodak Standard" (KS), they are 0.0780 inches (1.98 mm) high by 0.1100 inches (2.79 mm) wide.[51] Their durability makes KS perfs the ideal choice for all release prints, for some (but not all) intermediate films, and for original camera negatives that require special use, such as high-speed filming. They are not suited to bluescreen, front projection, rear projection, or matte work, as these applications demand the more accurate registration that is only possible with BH or DH perforations. The increased height also means that image registration is considerably less accurate than with BH perfs, which remain the standard for negatives.[52][53] The KS1870 perforation, or KS perforation with a pitch of 0.1870 inches (4.75 mm), is the modern standard for release prints.[50]

These two perforations have remained by far the most commonly used. BH perforations are also known as N (negative) and KS as P (positive). The Bell & Howell perf remains the standard for camera negative films because its dimensions suit most printers, allowing it to hold a steadier image than other perforations.[50][54]

- DH perfs

- The Dubray–Howell (DH) perforation was first proposed in 1932[55][56] to replace the two perfs with a single hybrid. The proposed standard was, like KS, rectangular with rounded corners and a width of 0.1100 inches (2.79 mm), and, like BH, was 0.073 inches (1.9 mm) tall.[46][57] This gave it both a longer projection life and improved registration. One of its primary applications was in Technicolor's dye imbibition printing (dye transfer).[54] The DH perf never had broad uptake, and Kodak's introduction of monopack Eastmancolor film in the 1950s reduced the demand for dye transfer,[53] although the DH perf persists in special-application intermediate films to this day.[58]

- CS perfs

- In 1953, the introduction of CinemaScope by Fox Studios required the creation of a different shape of perforation, which was nearly square and smaller, to provide space for four magnetic sound stripes for stereophonic and surround sound.[6] These perfs are commonly referred to as CinemaScope (CS) or "Fox hole" perfs. Their dimensions are 0.0780 inches (1.98 mm) in width by 0.0730 inches (1.85 mm) in height.[59] Due to the size difference, CS perfed film cannot be run through a projector with standard KS sprocket teeth, but KS prints can be run on sprockets with CS teeth. Shrunken KS prints that would normally be damaged in a projector with KS sprockets may sometimes be run far more gently through a projector with CS sprockets because of the smaller size of the teeth. Magnetic striped 35 mm film became obsolete in the 1980s after the advent of Dolby Stereo; as a result, film with CS perfs is no longer manufactured.

During continuous contact printing, the raw stock and the negative are placed next to one another around the sprocket wheel of the printer. The negative, which is the closer of the two to the sprocket wheel (and therefore follows a slightly shorter path), must have a marginally shorter pitch between perforations (0.1866 in); the raw stock has a long pitch (0.1870 in). While cellulose nitrate and cellulose diacetate stocks used to shrink just enough during processing for this difference to occur naturally, modern safety stocks do not shrink at the same rate, and therefore negative (and some intermediate) stocks are perforated at a pitch 0.2% shorter than that of print stock.[49]
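The 0.2% figure can be checked directly from the two pitches quoted above. The following is a minimal sketch; the function names are illustrative, and the value of 64 perforations per foot is an assumption derived from 16 frames per foot at 4 perforations per frame.

```python
# Perforation pitches quoted in the text, in inches.
SHORT_PITCH_IN = 0.1866  # negative and some intermediate stocks
LONG_PITCH_IN = 0.1870   # print (raw) stock

# Assumption: 16 frames per foot x 4 perforations per frame.
PERFS_PER_FOOT = 64


def pitch_shortening_percent(short_pitch: float, long_pitch: float) -> float:
    """How much shorter the short pitch is, as a percentage of the long pitch."""
    return (long_pitch - short_pitch) / long_pitch * 100.0


def length_difference_in(short_pitch: float, long_pitch: float,
                         perforations: int = PERFS_PER_FOOT) -> float:
    """Accumulated length difference (inches) over a given number of perforations."""
    return (long_pitch - short_pitch) * perforations


if __name__ == "__main__":
    pct = pitch_shortening_percent(SHORT_PITCH_IN, LONG_PITCH_IN)
    print(f"short pitch is {pct:.2f}% shorter")  # about 0.21%, i.e. roughly 0.2%
    diff = length_difference_in(SHORT_PITCH_IN, LONG_PITCH_IN)
    print(f"difference over {PERFS_PER_FOOT} perforations: {diff:.4f} in")
```

The difference is small per perforation but accumulates over a nominal foot of film, which is why the two stocks cannot share one pitch when wrapped around the same sprocket.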

6. Innovations in Sound

Three different digital soundtrack systems for 35 mm cinema release prints were introduced during the 1990s. They are: Dolby Digital, which is stored between the perforations on the sound side; SDDS, stored in two redundant strips along the outside edges (beyond the perforations); and DTS, in which sound data is stored on separate compact discs synchronized by a timecode track on the film just to the right of the analog soundtrack and left of the frame.[60] Because these soundtrack systems appear on different parts of the film, one movie can contain all of them, allowing broad distribution without regard for the sound system installed at individual theatres.

The analogue optical track technology has also changed: in the early years of the 21st century distributors changed to using cyan dye optical soundtracks instead of applicated tracks, which use environmentally unfriendly chemicals to retain a silver (black-and-white) soundtrack. Because traditional incandescent exciter lamps produce copious amounts of infra-red light, and cyan tracks do not absorb infra-red light, this change has required theaters to replace the incandescent exciter lamp with a complementary colored red LED or laser. These LED or laser exciters are backwards-compatible with older tracks.[61] The film Anything Else (2003) was the first to be released with only cyan tracks.[61]

To facilitate this changeover, intermediate prints known as "high magenta" prints were distributed. These prints used a silver-plus-dye soundtrack that was printed into the magenta dye layer. The advantage gained was an optical soundtrack with low levels of sibilant (cross-modulation) distortion on both types of sound heads.[62]

7. 3D Systems for Theatrical 35 mm Presentation

The success of digitally projected 3D movies in the first two decades of the 21st century led to a demand from some theater owners to be able to show these movies in 3D without incurring the high capital cost of installing digital projection equipment. To satisfy that demand, a number of 3D systems based on 35 mm film were proposed by Technicolor,[63] Panavision[64] and others. These systems are improved versions of the "over-under" stereo 3D prints first introduced in the 1960s.

To be attractive to exhibitors, these schemes offered 3D films that can be projected by a standard 35 mm cinema projector with minimal modification, and so they are based on the use of "over-under" film prints. In these prints a left-right pair of 2.39:1 non-anamorphic images are substituted for the one 2.39:1 anamorphic image of a 2D "scope" print. The frame dimensions are based on those of the Techniscope 2-perf camera format used in the 1960s and '70s. However, when used for 3D the left and right frames are pulled down together, thus the standard 4-perf pulldown is retained, minimising the need for modifications to the projector or to long-play systems. The linear speed of film through the projector and sound playback both remain exactly the same as in normal 2D operation.

The Technicolor system uses the polarisation of light to separate the left and right eye images and for this they rent to exhibitors a combination splitter-polarizer-lens assembly which can be fitted to a lens turret in the same manner as an anamorphic lens. In contrast, the Panavision system uses a spectral comb filter system, but their combination splitter-filter-lens is physically similar to the Technicolor assembly and can be used in the same way. No other modifications are required to the projector for either system, though for the Technicolor system a silver screen is necessary, as it would be with polarised-light digital 3D. Thus a programme can readily include both 2D and 3D segments with only the lens needing to be changed between them.

In June 2012, the Panavision 3D systems for both 35 mm film and digital projection were withdrawn from the market by DPVO Theatrical (which marketed these systems on behalf of Panavision), citing "challenging global economic and 3D market conditions".[65]

8. Decline

In the transition period centered around 2005–2015, the rapid conversion of the cinema exhibition industry to digital projection saw 35 mm film projectors removed from most projection rooms and replaced by digital projectors. By the mid-2010s, most theaters across the world had been converted to digital projection, while others were still running 35 mm projectors.[66] In spite of the uptake of digital projectors in cinemas worldwide, 35 mm film retains a niche market of enthusiasts and format lovers.

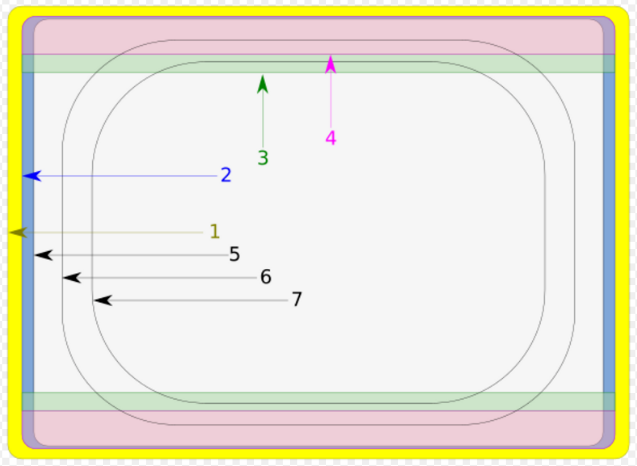

9. Technical Specifications

Areas marked in the frame diagram: camera aperture; Academy ratio (1.375:1); 1.85:1 ratio; 1.66:1 ratio; television scanned area; television "action safe" area; television "title safe" area.

Technical specifications for 35 mm film are standardized by SMPTE.

- 16 frames per foot (0.748 inches (19.0 mm) per frame (long pitch))

- 24 frames per second (fps); 90 feet (27 m) per minute. 1,000 feet (300 m) is about 11 minutes at 24 fps.

- vertical pulldown

- 4 perforations per frame (all projection and most origination except 3-perf). 1 perforation = 3⁄16 in or 0.1875 in. 1 frame = 3⁄4 in or 0.75 in.
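The footage figures above follow from the nominal perforation pitch. As a rough sketch (the function names are illustrative, and the nominal 3⁄16 in pitch is used rather than the exact short or long pitch), running speed and running time can be computed as follows:

```python
# Nominal values from the list above.
PERF_PITCH_IN = 0.1875                  # 3/16 in per perforation
PERFS_PER_FOOT = 12.0 / PERF_PITCH_IN   # 64 perforations per foot


def frames_per_foot(perfs_per_frame: int = 4) -> float:
    """Frames in one foot of film for a given pulldown (4-perf gives 16)."""
    return PERFS_PER_FOOT / perfs_per_frame


def feet_per_minute(fps: float = 24.0, perfs_per_frame: int = 4) -> float:
    """Linear film speed in feet per minute."""
    return fps * 60.0 / frames_per_foot(perfs_per_frame)


def running_time_minutes(feet: float, fps: float = 24.0,
                         perfs_per_frame: int = 4) -> float:
    """Running time in minutes of a given length of film."""
    return feet / feet_per_minute(fps, perfs_per_frame)


if __name__ == "__main__":
    print(frames_per_foot())                        # 16.0
    print(feet_per_minute())                        # 90.0
    print(round(running_time_minutes(1000.0), 1))   # 11.1
```

Passing `perfs_per_frame=3` gives the corresponding figures for 3-perf origination, which uses less film for the same running time.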

35 mm spherical[40]

- aspect ratio: 1.375:1 on camera aperture; 1.85:1 and 1.66:1 are hard- or soft-matted over this

- camera aperture: 0.866 by 0.630 in (22.0 by 16.0 mm)

- projector aperture (full 1.375:1): 0.825 by 0.602 in (21.0 by 15.3 mm)

- projector aperture (1.66:1): 0.825 by 0.497 in (21.0 by 12.6 mm)

- projector aperture (1.85:1): 0.825 by 0.446 in (21.0 by 11.3 mm)

- TV station aperture: 0.816 by 0.612 in (20.7 by 15.5 mm)

- TV transmission: 0.792 by 0.594 in (20.1 by 15.1 mm)

- TV safe action: 0.713 by 0.535 in (18.1 by 13.6 mm); corner radii: 0.143 inches (3.6 mm)

- TV safe titles: 0.630 by 0.475 in (16.0 by 12.1 mm); corner radii: 0.125 inches (3.2 mm)
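The named ratios can be recovered from the aperture dimensions listed above by dividing width by height. This is a minimal sketch; the dictionary keys and the `aspect_ratio` helper are illustrative names, and the dimensions are the inch values from the list.

```python
# (width, height) in inches, taken from the spherical 35 mm list above.
APERTURES_IN = {
    "camera aperture": (0.866, 0.630),
    "projector aperture (full 1.375:1)": (0.825, 0.602),
    "projector aperture (1.66:1)": (0.825, 0.497),
    "projector aperture (1.85:1)": (0.825, 0.446),
    "TV station aperture": (0.816, 0.612),
    "TV transmission": (0.792, 0.594),
}


def aspect_ratio(width: float, height: float) -> float:
    """Width divided by height, i.e. the x in an x:1 aspect ratio."""
    return width / height


if __name__ == "__main__":
    for name, (w, h) in APERTURES_IN.items():
        print(f"{name}: {aspect_ratio(w, h):.3f}:1")
```

The projector apertures share one width (0.825 in), so the 1.66:1 and 1.85:1 formats differ from the full aperture only in how much of the frame height is matted out.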

Super 35 mm film[40]

- aspect ratio: 1.33:1 on 4-perf camera aperture

- camera aperture (4-perf): 0.980 by 0.735 in (24.9 by 18.7 mm)

- picture used (35 mm anamorphic): 0.945 by 0.394 inches (24.0 by 10.0 mm)

- picture used (70 mm blowup): 0.945 by 0.430 inches (24.0 by 10.9 mm)

- picture used (35 mm flat 1.85): 0.945 by 0.511 inches (24.0 by 13.0 mm)

35 mm anamorphic[40]

- aspect ratio: 2.39:1, in a 1.19:1 frame with a 2× horizontal anamorphosis

- camera aperture: 0.866 by 0.732 inches (22.0 by 18.6 mm)

- projector aperture: 0.825 by 0.690 inches (21.0 by 17.5 mm)
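The relationship between the frame on film and the projected picture can be sketched numerically: the frame's own ratio is multiplied by the horizontal squeeze factor. The function name below is illustrative, and the dimensions are the projector aperture values listed above.

```python
ANAMORPHIC_SQUEEZE = 2.0                  # 2x horizontal anamorphosis
PROJECTOR_APERTURE_IN = (0.825, 0.690)    # (width, height) in inches


def projected_aspect_ratio(width: float, height: float,
                           squeeze: float = ANAMORPHIC_SQUEEZE) -> float:
    """Aspect ratio on screen after the lens expands the image horizontally."""
    return (width / height) * squeeze


if __name__ == "__main__":
    w, h = PROJECTOR_APERTURE_IN
    print(f"frame on film: {w / h:.3f}:1")
    print(f"projected: {projected_aspect_ratio(w, h):.2f}:1")  # 2.39:1
```

The frame on film works out to just under 1.2:1, consistent with the approximately 1.19:1 frame quoted above.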

The content is sourced from: https://handwiki.org/wiki/Engineering:35_mm_film

References

- Alfred, Randy (2 May 2011). "May 2, 1887: Celluloid-Film Patent Ignites Long Legal Battle". Wired. https://www.wired.com/2011/05/0502celuloid-photographic-film/. Retrieved 29 August 2017.

- "The Wizard of Photography: The Story of George Eastman and How He Transformed Photography". Timeline PBS American Experience Online. https://www.pbs.org/wgbh/amex/eastman/timeline/index_2.html. Retrieved July 5, 2006.

- Mees, C. E. Kenneth (1961). From Dry Plates to Ektachrome Film: A Story of Photographic Research. Ziff-Davis Publishing. pp. 15–16.

- Miller, A.J.; Robertson, A.C. (January 1965). "Motion-Picture Film — Its Size and Dimensional Characteristics". Journal of the SMPTE (Society of Motion Picture and Television Engineers) 74: 3–11. http://www.wsmr.army.mil/RCCsite/Documents/451-00_Photography,%20Motion%20Picture%20Film%20Cores%20and%20Spools,%20Perforations%20and%20Other%20Technical%20Information/451-00.pdf. Retrieved 30 August 2018.

- Robinson, David (1996). From peep show to palace: the birth of American film. Columbia University Press. p. 39. ISBN 978-0-231-10338-1. https://books.google.com/?id=3kjkXYdqNQMC.

- Eastman Professional Motion Picture Films. Eastman Kodak Co. June 1, 1983. ISBN 978-0-87985-477-5. https://books.google.com/?id=Z_4VAAAACAAJ.

- Dickson, W. K. L. (December 1933). "A Brief History of the Kinetograph, The Kinetoscope and The Kineto-Phonograph". Journal of the Society of Motion Picture Engineers (Society of Motion Picture Engineers) XXI (6): 435–455. https://archive.org/stream/journalofsociety21socirich#page/434/mode/2up. Retrieved March 13, 2012.

- Fullerton, John; Söderbergh-Widding, Astrid (June 2000). Moving images: from Edison to the Webcam. John Libbey & Co Ltd. p. 3. ISBN 978-1-86462-054-2. https://books.google.com/?id=9lMAfjLi1LEC.

- Katz, Ephraim (1994). The Film Encyclopedia. HarperCollins. ISBN 978-0-06-273089-3. https://books.google.com/?id=WhB2snVhKLcC.

- Musser, Charles (1994). The Emergence of Cinema: The American Screen to 1907. Berkeley, California: University of California Press. pp. 303–313. ISBN 978-0-520-08533-6. https://books.google.com/?id=IEUMWToGOtUC.

- Lobban, Grant. "Film Gauges and Soundtracks", BKSTS wall chart (sample frame provided). [Year unknown]

- The gauge and perforations are almost identical to modern film stock; the full silent ratio is also used as the film gate in movie cameras, although portions of the image are later cropped out in post-production and projection.

- Scheerer, Theo M. (1960). The Leica and the Leica System (3rd ed.). Frankfurt am Main: Umschau Verlag. pp. 7–8.

- Slide, Anthony (1990). The American film industry: a historical dictionary. Amadeus Press. ISBN 978-0-87910-139-8. https://books.google.com/?id=DO8JAQAAMAAJ.

- Laney, Dennis (August 31, 2004). Leica Collectors Guide. Hove Books. ISBN 978-1-874707-00-4. https://books.google.com/?id=YV8xPQAACAAJ.

- Upton, Barbara London; Upton, John (1989). Photography (4th ed.). BL Books, Inc./Scott, Foresman and Company. ISBN 978-0-673-39842-0.

- Malkiewicz, J. Kris; Mullen, M. David (June 21, 2005). Cinematography: a guide for filmmakers and film teachers. Fireside. p. 49. ISBN 978-0-7432-6438-9. https://books.google.com/?id=fR--7yVROI4C.

- Probst, Christopher (May 2000). "Taking Stock: Part 2 of 2". American Cinematographer (ASC Press): 110–120.

- Holben, Jay (April 2000). "Taking Stock: Part 1 of 2". American Cinematographer (ASC Press): 118–130.

- Koszarski, Richard (May 4, 1994). An Evening's Entertainment: The Age of the Silent Feature Picture, 1915–1928. University of California Press. p. 127. ISBN 978-0-520-08535-0. https://books.google.com/?id=PLUbxH1_PREC.

- Robertson, Patrick (September 1, 2001). Film Facts. New York: Billboard Books. p. 166. ISBN 978-0-8230-7943-8. https://books.google.com/?id=4PnEvNC_F9oC.

- Hart, Martin (1998). "Kinemacolor: The First Successful Color System". http://www.widescreenmuseum.com/oldcolor/kinemaco.htm. Retrieved July 8, 2006.

- Hart, Martin (May 20, 2004). "Kinemacolor to Eastmancolor: Faithfully Capturing an Old Technology with a Modern One". http://www.widescreenmuseum.com/oldcolor/kinemacolortoeastmancolor.htm. Retrieved July 8, 2006.

- Hart, Martin (2003). "The History of Technicolor". http://www.widescreenmuseum.com/oldcolor/technicolor1.htm. Retrieved July 7, 2006.

- Sipley, Louis Walton (1951). A Half Century of Color. New York: The Macmillan Company.

- "Chronology of Motion Picture Films 1940 to 1959". Kodak. Archived from the original on June 25, 2009. https://web.archive.org/web/20090625062139/http://motion.kodak.com/US/en/motion/Products/Chronology_Of_Film/chrono2.htm. Retrieved August 12, 2009.

- "Broadening the Impact of Pictures". Kodak.com. Archived from the original on February 1, 2012. https://web.archive.org/web/20120201104103/http://www.kodak.com/global/en/corp/historyOfKodak/impacting.jhtml?pq-path=2217%2F2687%2F2691. Retrieved August 29, 2016.

- Belton, John (1992). Widescreen Cinema. Cambridge, Mass.: Harvard University Press. pp. 17–18. ISBN 978-0-674-95261-4. https://books.google.com/?id=PKUfAQAAIAAJ.

- Dibbets, Karel (1996). "The Introduction of Sound". The Oxford History of World Cinema. Oxford: Oxford University Press.

- Cowan, Lester (January 1930). "Camera and Projector Apertures in Relation to Sound on Film Pictures". Society of Motion Picture Engineers Journal 14: 108–121.

- "Studios Seek to Aid Towards Better Projection Goal". Movie Age: 18. November 9, 1929.

- Hummel, Rob, ed (2001). American Cinematographer Manual (8th ed.). Hollywood: ASC Press. pp. 18–22.

- Hart, Martin (2000). "Of Apertures and Aspect Ratios". http://www.widescreenmuseum.com/widescreen/apertures.htm. Retrieved August 10, 2006.

- Hora, John (2001). "Anamorphic Cinematography". American Cinematographer Manual (8th ed.). Hollywood: ASC Press.

- Hart, Martin (2000). "Cinemascope Wing 1". http://www.widescreenmuseum.com/widescreen/wingcs1.htm. Retrieved August 10, 2006.

- Hart, Martin (2000). "Early Evolution from Academy to Wide Screen Ratios". http://www.widescreenmuseum.com/Widescreen/evolution.htm. Retrieved August 10, 2006.

- Samuelson, David W. (September 2003). "Golden Years". American Cinematographer Magazine (ASC Press): 70–77.

- Nowell-Smith, Geoffrey, ed (1996). The Oxford History of World Cinema. Oxford: Oxford University Press. p. 266.

- Mitchell, Rick. "The Widescreen Revolution: Expanding Horizons — The Spherical Campaign". Society of Camera Operators Magazine (Summer 1994). Archived from the original on January 3, 2004. https://web.archive.org/web/20040103160036/http://www.soc.org/opcam/04_s94/mg04_widescreen.html. Retrieved August 25, 2016.

- Burum, Stephen H. (2004). American Cinematographer Manual. A.S.C. Holding Corp. ISBN 978-0-935578-24-9. https://books.google.com/?id=kTmGAQAACAAJ.

- "Trilent 35 System". Image Technology 70 (7). July 1988.

- Ericson, Rune (March 1987). "Three Perfs for Four". Image Technology 69 (3).

- "3 perf: The future of 35 mm filmmaking". Archived from the original on July 13, 2006. https://web.archive.org/web/20060713061542/http://www.aaton.com/products/film/35/3perf.php. Retrieved August 10, 2006.

- "Film Types and Formats". Archived from the original on 2013-06-01. https://web.archive.org/web/20130601172936/http://motion.kodak.com/motion/uploadedFiles/US_plugins_acrobat_en_motion_newsletters_filmEss_05_Film_Types_and_Formats.pdf.

- Nowell-Smith, Geoffrey, ed (1996). The Oxford History of World Cinema. Oxford: Oxford University Press. pp. 446–449.

- Hart, Douglas C. (1996). The Camera Assistant: A Complete Professional Handbook. Boston: Focal Press.

- Blalack, Robert; Roth, Paul (July 1977). "Composite Optical and Photographic Effects". American Cinematographer Magazine.

- "Double Negative Breaks Down Batman Begins". July 18, 2005. Archived from the original on October 16, 2006. https://web.archive.org/web/20061016104400/http://www.fxguide.com/article262.html. Retrieved August 11, 2006.

- Case, Dominic (1985). Motion Picture Film Processing. Boston: Focal Press.

- "Perforation Sizes and Shapes". Motion Newsletters. Kodak. October 30, 2007. p. 95. http://motion.kodak.com/motion/uploadedFiles/US_plugins_acrobat_en_motion_newsletters_filmEss_11_Film_Specs.pdf#page=3. Retrieved March 14, 2012.

- ANSI/SMPTE 139–1996. SMPTE STANDARD for Motion-Picture Film (35 mm) - Perforated KS. Society of Motion Picture and Television Engineers. White Plains, NY.

- Society of Motion Picture Engineers (May 1930). "Standards Adopted by the Society of Motion Picture Engineers". Journal of the Society of Motion Picture Engineers XIV (5): 545–566. https://archive.org/stream/journalofsociety14socirich#page/552/mode/2up.

- "Technical Glossary of Common Audiovisual Terms: Perforations". ScreenSound Australia. Archived from the original on October 31, 2007. https://web.archive.org/web/20071031093815/http://www.nfsa.afc.gov.au/preservation/audiovisual_terms/audiovisual_item.php?term=Perforations. Retrieved August 11, 2006.

- Gray, Peter (1997). "Perforations/Sprocket Holes: Peter Gray - Director of Photography". Archived from the original on April 12, 2008. https://web.archive.org/web/20080412041026/http://www.jkor.com/peter/perfs.html. Retrieved March 14, 2012.

- Howell, A.S. (April 1932). "Change in 35 Mm. Film Perforations". Journal of the Society of Motion Picture Engineers XVIII (4). OCLC 1951231. https://archive.org/stream/journalofsociety18socirich#page/n513/mode/2up.

- "Committee Activities, Report of the Standards and Nomenclature Committee, Wide Film". Journal of the Society of Motion Picture Engineers (New York, NY: The Society) XVII (3): 431–436. September 1931. OCLC 1951231. https://archive.org/stream/journalofsociety17socirich#page/432/mode/2up.

- "Why Do Sound Negative Films Use Kodak Standard Perforations?". Technical Information. Kodak. Archived from the original on March 14, 2012. https://www.webcitation.org/query?url=http%3A%2F%2Fmotion.kodak.com%2Fmotion%2FSupport%2FTechnical_Information%2FProcessing_Information%2Fperforations.htm&date=2012-03-14. Retrieved March 14, 2012.

- "Kodak Vision Color Intermediate Film - Technical Data". Archived from the original on September 5, 2006. https://web.archive.org/web/20060905085939/http://www.kodak.com/US/en/motion/products/intermediate/tech5242.jhtml?id=0.1.4.6.4.4.4&lc=en. Retrieved August 11, 2006.

- ANSI/SMPTE 102-1997. SMPTE STANDARD for Motion-Picture Film (35 mm) - Perforated CS-1870. Society of Motion Picture and Television Engineers. White Plains, New York.

- "Corporate Milestones". Archived from the original on 2010-06-09. https://web.archive.org/web/20100609183206/http://www.dts.com/Corporate/About_Us/Milestones.aspx.

- Hull, Joe. "Committed to Cyan". Archived from the original on September 21, 2006. https://web.archive.org/web/20060921125129/http://www.dyetracks.org/FJI_Sept04.pdf. Retrieved August 11, 2006.

- "Cyan Dye Tracks Laboratory Guide". Archived from the original on 2009-11-26. https://web.archive.org/web/20091126055003/http://motion.kodak.com/US/en/motion/Support/Technical_Information/Lab_Tools_And_Techniques/Cyan_Dye_Tracks/guide.htm.

- "Entertainment Services". Technicolor. http://www.technicolor.com/en/hi/cinema. Retrieved 29 August 2016.

- "Seeing is Believing". Cinema Technology 24 (1). March 2011.

- "Home". Archived from the original on 2012-04-07. https://web.archive.org/web/20120407125922/http://www.dpvotheatrical.com/Home_Page.html.

- Barraclough, Leo (23 June 2013). "Digital Cinema Conversion Nears End Game". Variety. https://variety.com/2013/film/news/digital-cinema-conversion-nears-end-game-1200500975/. Retrieved 29 August 2016.