1. Transformation from ML to DL Approaches for the Effective Prediction of AD

During the last decade, ML has been employed to discover neuroimaging indicators of AD. Several ML technologies are now being used to enhance the diagnosis and prognosis of AD [5]. The authors of [6] used a “support vector machine (SVM)” to distinguish stable MCI from progressive MCI in a cohort of 35 control subjects and 67 MCI cases. In most ML procedures for bio-image identification, segmentation is prioritized, whereas the extraction of robust features has mostly been ignored. In several circumstances, however, extracting strong descriptors from the feature space could eliminate the need for image segmentation [7]. Most early studies relied on traditional texture descriptors such as “Gabor filters” and “Haralick texture” attributes [8,9]. DL is a newer domain of ML research that was launched with the purpose of bringing ML nearer to its initial objective: “artificial intelligence (AI)”. To interpret text, speech, and multimedia data, DL architectures typically rely on multiple levels of abstraction and representation [10].

The authors of [11] provide a comparative analysis of classical ML and DL techniques for the early diagnosis of AD and for predicting the progression of mild cognitive impairment to Alzheimer’s disease. They examined sixteen approaches, four of which combined DL and ML and twelve of which employed only DL. Using a combination of DL and ML, an accuracy of 96% was attained for feature selection and 84.2% for MCI-to-AD conversion. Utilizing a CNN in the DL-only approach, a feature selection accuracy of 96.0% and an MCI-to-AD conversion prediction accuracy of 84.2% were obtained. In particular, the authors found that classification performance could be enhanced by combining multimodal neuroimaging with serum biomarkers.

According to the study in [12], DL approaches for feature extraction combined with classification by an SVM are highly effective for AD diagnosis and prediction. It has also been noted that prognosis and treatment based on multiple modalities fare better than those based on a single modality. Recent developments show a rise in the application of DL algorithms to the study of medical images, allowing for quicker interpretation and higher precision than a human clinician.

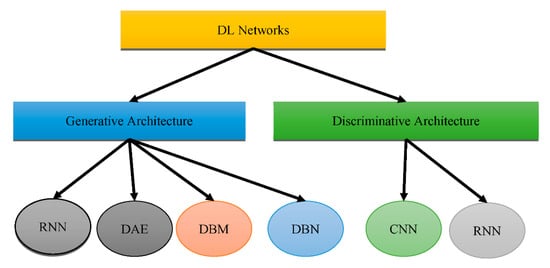

Figure 1 shows that DL could be placed into two groups: “generative architecture” and “discriminative architecture”.

Figure 1. Types of DL architectures.

The “Recurrent Neural Network (RNN)”, “Deep Auto-Encoder (DAE)”, “Deep Boltzmann Machine (DBM)”, and “Deep Belief Networks (DBN)” are the four kinds of “generative architecture”, whereas the “Convolutional Neural Network (CNN)” and RNN are the two kinds of “discriminative architecture”. The structurally complex transformations and local derivative structures were recently discovered as current segmentation techniques for Phyto analytics by many scientists [

11,

12,

13]. These descriptions are referred to as hand-crafted traits since they were created by people to extract characteristics from photos. A major aspect of employing these characteristics was to utilize vectors to locate a part of a picture, whereupon the created pattern is extracted. The SVM then receives the characteristics obtained by the customized approach [

14] as a form of predictor. The best characteristics extract characteristics from a database. Several of the most widely used and concise descriptors rely on DL to achieve this [

15,

16]. As shown in

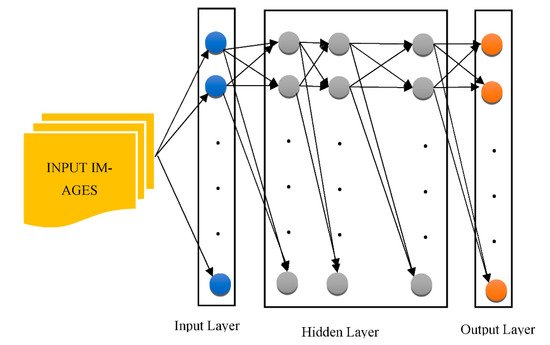

Figure 2, the CNN is used to pull descriptions out of the images for this reason.

Figure 2. The architecture of a generalized CNN.
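The layer sequence of a generalized CNN can be illustrated with a minimal forward pass. The sketch below is an assumption-laden illustration and not the architecture of any cited study: the filter count, the 28 × 28 input, the three output classes, and all function names are hypothetical, and the weights are random rather than trained.

```python
import numpy as np

def conv2d(image, kernel):
    """Valid 2D cross-correlation of a single-channel image with one kernel."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    return np.maximum(x, 0.0)

def max_pool(x, size=2):
    """Non-overlapping max pooling; rows/columns that do not fit are dropped."""
    h = (x.shape[0] // size) * size
    w = (x.shape[1] // size) * size
    blocks = x[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))

def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()

def cnn_forward(image, kernels, fc_weights, fc_bias):
    """Convolution -> ReLU -> max pooling -> flatten -> fully connected -> softmax."""
    maps = [max_pool(relu(conv2d(image, k))) for k in kernels]
    features = np.concatenate([m.ravel() for m in maps])
    return softmax(fc_weights @ features + fc_bias)

rng = np.random.default_rng(0)
image = rng.random((28, 28))             # stand-in for one 2D image slice
kernels = rng.normal(size=(4, 3, 3))     # four untrained 3x3 filters
n_features = 4 * 13 * 13                 # 28 - 3 + 1 = 26, pooled to 13
fc_weights = rng.normal(scale=0.01, size=(3, n_features))
fc_bias = np.zeros(3)
probs = cnn_forward(image, kernels, fc_weights, fc_bias)  # one probability per class
```

In a trained network the kernels and fully connected weights would be learned by back-propagation; here they only show how data flows through the layers.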

CNNs are particularly good at learning general features [17]. Multiple levels of representation are formed when a deep network has been trained on a large volume of images. The first-layer features, for example, resemble “Gabor filters” or color blobs, which can be used for a wide range of image problems and datasets [18]. “Deep neural networks (DNN)” can be employed on bio-image records; however, this method requires a large volume of data that is difficult to come by in many circumstances [19]. Data augmentation is an answer to this situation, as it transforms the original data to generate additional training samples. Reflection, translation, and rotation of the original images to generate new views are popular data augmentation operations [20]. Adjusting an image’s luminosity, intensity, and brightness can also produce diverse images [21,22]. “Principal component analysis (PCA)” is another commonly utilized technique for data augmentation. In this approach, selected principal components are added to the image after being scaled down by a small factor [23,24]. The major goal of this procedure is to emphasize only the image’s most relevant features. “Generative adversarial networks” have been used in recent studies [25,26] to synthesize images that differ from the original ones. This strategy necessitates the creation of a separate domain [27,28].
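The geometric and intensity-based augmentation operations described above can be sketched with NumPy alone. This is a minimal illustration under stated assumptions: images are 2D arrays with values in [0, 1], rotation is restricted to multiples of 90 degrees, and the function names and parameter values are hypothetical rather than taken from the cited studies.

```python
import numpy as np

def reflect(image):
    """Horizontal reflection (left-right flip)."""
    return image[:, ::-1]

def translate(image, dy, dx):
    """Shift by (dy, dx) pixels, zero-filling vacated pixels.
    Assumes |dy| and |dx| are smaller than the image height and width."""
    h, w = image.shape
    out = np.zeros_like(image)
    src = image[max(0, -dy):h - max(0, dy), max(0, -dx):w - max(0, dx)]
    out[max(0, dy):max(0, dy) + src.shape[0],
        max(0, dx):max(0, dx) + src.shape[1]] = src
    return out

def rotate90(image, k=1):
    """Rotate counter-clockwise by k * 90 degrees."""
    return np.rot90(image, k)

def adjust_brightness(image, delta):
    """Add a constant offset and clip back to [0, 1]."""
    return np.clip(image + delta, 0.0, 1.0)

def adjust_intensity(image, gain):
    """Scale all pixel values by a gain and clip back to [0, 1]."""
    return np.clip(image * gain, 0.0, 1.0)

image = np.arange(16, dtype=float).reshape(4, 4) / 15.0  # toy 4x4 image
augmented = [
    reflect(image),
    translate(image, 1, 0),
    rotate90(image),
    adjust_brightness(image, 0.1),
    adjust_intensity(image, 1.2),
]
```

Each transformed copy keeps the label of its source image, which is why such operations enlarge a training set without new acquisitions.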

The images generated, however, are not reliant on modifications in the image database. As a result, different techniques may be applied depending upon the issue. For instance, element-wise computation was used to mimic random noise in radar altimeter imagery in [29]. Ductility was used in [30] to mimic the process of stretching in prostate chemotherapeutics. An alternative technique that takes advantage of DL is to fine-tune a pre-trained DL model, such as a CNN, on new data from a different task. This method reuses the shallow layers of a pre-trained CNN. Fine-tuning (also known as “tuning”) continues the training process on a new image dataset. This strategy significantly decreases the computational cost of training and is suited to small datasets. Another advantage of fine-tuning is that its lower computational cost lets researchers readily study ensembles of CNNs. Such ensembles can be built from multiple pre-trained CNNs and a variety of hyperparameters.

CNNs are also used as feature extractors in certain investigations [31]. SVM with quadratic or regular kernels plus “logistic regression” and “extreme ML random forest” or “XGBoost” and “decision trees” are used for classification [32]. Shmulev et al. [33] compared the results obtained with the CNN technique against those obtained with alternative classifiers that analyzed only CNN-derived features and determined that the latter works better than the former. Rather than being applied directly to image data, CNNs can also be applied to pre-extracted features. This is particularly pertinent when a CNN is applied to the outputs of different regression methods and when diagnostic scores are combined with other model parameters and magnetic resonance features.
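The idea of using a network as a fixed feature extractor and training a separate classifier on its outputs can be sketched as follows. This is a minimal illustration under strong assumptions: a fixed random projection followed by a ReLU stands in for the frozen layers of a pre-trained CNN, the data are synthetic, and a logistic regression head plays the role of the downstream classifier. None of the names or values come from the cited studies.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for frozen pre-trained layers: these weights are never updated.
W_frozen = rng.normal(size=(64, 16))

def extract_features(x):
    """Frozen feature extractor: fixed linear map followed by a ReLU."""
    return np.maximum(x @ W_frozen, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_head(features, labels, lr=0.1, steps=500):
    """Train only a logistic-regression head with batch gradient descent."""
    n, d = features.shape
    w = np.zeros(d)
    b = 0.0
    losses = []
    eps = 1e-12  # avoids log(0)
    for _ in range(steps):
        p = sigmoid(features @ w + b)
        loss = -np.mean(labels * np.log(p + eps) + (1 - labels) * np.log(1 - p + eps))
        losses.append(float(loss))
        grad = p - labels                    # gradient of the loss w.r.t. the logits
        w -= lr * (features.T @ grad) / n
        b -= lr * grad.mean()
    return w, b, losses

# Synthetic two-class data: class 1 has a shifted mean in every input dimension.
n = 200
y = rng.integers(0, 2, size=n).astype(float)
x = rng.normal(size=(n, 64)) + 0.5 * y[:, None]

feats = extract_features(x)
feats = (feats - feats.mean(axis=0)) / (feats.std(axis=0) + 1e-8)  # standardize
w, b, losses = train_head(feats, y)
train_accuracy = float(np.mean((sigmoid(feats @ w + b) >= 0.5) == (y == 1)))
```

Replacing `train_head` with an SVM or a tree-based model gives the feature-extractor-plus-classifier setups discussed above; full fine-tuning would additionally update some of the frozen weights, typically with a small learning rate.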

CNNs could also be used to analyze non-Euclidean environments such as clinical charts or cerebral interface pictures. Morphological MRIs could be used with different designs. Various perceptron variants, such as a “probabilistic neural network” or a “stack of FC layers,” were used in various studies. Several studies used both “supervised” (deep polynomial networks) and “unsupervised” (deep Boltzmann machine and AE) designs to obtain enhanced representations of features, whereas SVMs are primarily used for classification [34]. Image features such as texture, shape, trabecular bone, and context features are subjected to considerable pre-processing, which is common in non-CNN designs. Furthermore, to reduce the dimensionality, the integration or selection of features is commonly utilized. On the other hand, DL-based classification techniques are not limited to cross-sectional structural MRIs. Longitudinal studies can combine data from several time points for the same subjects.

In [35], the authors developed an SVM with kernels to predict the conversion of antipsychotic MCI to AD, with the other prodromal categories of AD excluded. They achieved a cross-validation accuracy of 90.5 percent when separating AD from NC. They were also 72.3 percent accurate in predicting the progression of MCI to AD. Regarding the extraction of attributes, two methods were utilized:

Researchers further found that using between 24 and 26 features gives the most accurate prediction of MCI advancing to AD. They also discovered that the width of the bilateral neocortex may be the most important indicator, followed by right hippocampus thickness and APOE ε4 status. Costafreda et al. [36] employed hippocampus size to identify MCI patients who were inclined to progress to AD. A total of 103 MCI patients from “AddNeuroMed” were used in their research. They employed “FreeSurfer” for data pre-processing and an SVM with a semi-Stochastic radial basis kernel for classification. After training the model on the entire AD and NC datasets, the researchers applied it to the MCI patients. In less than a year, they were able to achieve an accuracy of 85 percent for AD and 80 percent for NC. They concluded that hippocampus alterations could enhance predictive efficacy by consolidating forebrain degeneration.

According to a comprehensive analysis of various SVM-centered studies [37], SVM is a commonly used technique to differentiate between AD patients and apparently healthy individuals, as well as between stable and progressive subtypes of MCI. For diagnosis, progression prediction, and therapy outcomes, functional and structural neuroimaging approaches were applied. Eskildsen et al. [38] found five important ways to tell the difference between stable MCI and MCI that is becoming worse.
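To make the SVM-based classification concrete, the following sketch trains a soft-margin linear SVM by sub-gradient descent on the regularized hinge loss. It is only an illustration under stated assumptions: the two-dimensional synthetic features stand in for neuroimaging-derived measurements, the hyperparameters are arbitrary, and the cited studies used kernel SVMs from established toolkits rather than this hand-written routine.

```python
import numpy as np

def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=200):
    """Soft-margin linear SVM with labels in {-1, +1}.
    Minimizes lam/2 * ||w||^2 + mean(max(0, 1 - y * (X @ w + b)))."""
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        active = margins < 1                      # samples violating the margin
        grad_w = lam * w - (y[active][:, None] * X[active]).sum(axis=0) / n
        grad_b = -y[active].sum() / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def predict(X, w, b):
    return np.where(X @ w + b >= 0, 1, -1)

rng = np.random.default_rng(0)
# Two well-separated synthetic groups, e.g. a "stable" (-1) and a "progressive" (+1) class.
X = np.vstack([
    rng.normal(loc=-2.0, scale=0.5, size=(50, 2)),
    rng.normal(loc=2.0, scale=0.5, size=(50, 2)),
])
y = np.concatenate([-np.ones(50), np.ones(50)])

w, b = train_linear_svm(X, y)
accuracy = float(np.mean(predict(X, w, b) == y))  # training accuracy on the toy data
```

Real studies report accuracy on held-out data, usually through cross-validation, since training accuracy on separable toy data says nothing about generalization.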

To differentiate and diagnose AD, the researchers in [39] studied 135+ AD subjects, 220+ CN subjects, and 350+ MCI patients. They trained on neuroimaging data from ADNI. To differentiate AD patients from CN subjects, they employed “neural networks” and “logistic regression”. The measures used were whole-brain properties. Rather than relying on specific parts of the brain, important properties such as volume and thickness were determined.

Because of their capacity to gradually learn multiple levels of features from MRI and PET brain images, the authors of [40] advised using cascaded CNNs in 2018. Since no image segmentation was used in the pre-processing of the data, no expert knowledge was necessary. This trait is widely seen as a benefit of this technique over others. In the other techniques, the features were extracted first and afterwards fed to the model. Based on the ADNI dataset, their research included 90 plus NC and AD subjects, with 200 plus MCI cases. The accuracy was greater than 90%.

The work in [41] suggested a knowledge-picture recovery system that is based on “3D Capsules Networks (CapsNets)”, a “3D CNN”, and pre-trained 3D auto-encoder technologies to identify AD in its early phases. According to the authors, 3D CapsNets are capable of quick scanning. Unlike deep CNN, however, this strategy could only increase identification. The authors were able to distinguish AD with a 98.42% accuracy.

The authors of [42] looked at 407 normal participants, 418 AD patients, 280 progressive MCI patients, and 533 stable MCI cases. They trained CNNs on 3D T1-weighted images from the ADNI repository and examined how well the CNNs identified AD, progressive MCI, and stable MCI. When CNNs were used to separate progressive MCI patients from stable MCI patients, the accuracy was 75%. The researchers in [43] developed an algorithm that used MRI scans to determine medical symptoms. They worked with the ADNI repository and used 2000 or more cases.

“DSA-3DCNN” was reported by Hosseini-Asl et al. [44] to be more accurate than other contemporary classifiers in diagnosing AD from MRI scans. The authors demonstrated that distinguishing between AD, MCI, and NC cases can improve the learning of features in a 3D-CNN. For pre-processing, the brain extraction tool (BET) was used with seven parameters. The FMRIB Software Library (FSL) was utilized. This collection offers tools for analyzing MRI, fMRI, and DTI neuroimaging data, in addition to documenting how the data are processed. By eliminating non-brain tissue from head MRIs, BET was used to separate the images into brain and non-brain regions (a vital step in any assessment). With BET, no prior processing was required, and the procedure was quick.

2. Diagnosis and Prognosis of AD Using DL Methods

DL is a subfield of ML [45] that discovers features through a layered training process [46]. DL approaches for prediction and classification are being used in a variety of disciplines, such as object recognition [47,48,49] and computational linguistics [50,51], which together show significant improvements over past methods [52,53,54]. Since DL approaches have been widely examined in the past few years [55,56,57], this section concentrates on the fundamental ideas of “Artificial Neural Networks (ANNs)”, which underpin DL [58]. The DL architectural schemes used for AD classification and prognosis assessment are also discussed. An NN is a network of connected processing elements that have been modeled and established using the “Perceptron”, the “Group Method of Data Handling” (GMDH), and the “Neocognitron” concepts. Because the single-layer perceptron could only handle linearly separable patterns, these significant works investigated effective error functions and gradient computation algorithms. Furthermore, the back-propagation approach, which utilizes gradient descent to minimize the error function, was implemented [59].
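Back-propagation with gradient descent can be sketched on the XOR problem, the classic example of a pattern that is not linearly separable and therefore beyond a single-layer perceptron. The network size, activation functions, learning rate, and step count below are illustrative assumptions, not values from the cited works.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_xor(hidden=4, lr=0.5, steps=5000, seed=0):
    """Train a 2-hidden-1 network on XOR with back-propagation and gradient descent."""
    rng = np.random.default_rng(seed)
    X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
    y = np.array([[0.0], [1.0], [1.0], [0.0]])
    W1 = rng.normal(size=(2, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(size=(hidden, 1))
    b2 = np.zeros(1)
    losses = []
    for _ in range(steps):
        # Forward pass
        h = np.tanh(X @ W1 + b1)
        out = sigmoid(h @ W2 + b2)
        losses.append(float(np.mean((out - y) ** 2)))  # mean squared error
        # Backward pass: apply the chain rule layer by layer
        d_z2 = 2.0 * (out - y) / len(X) * out * (1.0 - out)
        grad_W2 = h.T @ d_z2
        grad_b2 = d_z2.sum(axis=0)
        d_z1 = (d_z2 @ W2.T) * (1.0 - h ** 2)          # derivative of tanh
        grad_W1 = X.T @ d_z1
        grad_b1 = d_z1.sum(axis=0)
        # Gradient descent update
        W2 -= lr * grad_W2
        b2 -= lr * grad_b2
        W1 -= lr * grad_W1
        b1 -= lr * grad_b1
    return losses, out  # `out` holds the predictions from the final forward pass

losses, predictions = train_xor()
```

The hidden layer is what lets the network represent XOR; removing it reduces the model to a single-layer perceptron, which cannot fit this pattern.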

After detection, a person with AD can expect to live for an average of 3 to 11 years. Certain individuals, nevertheless, may survive for 20 years or more after receiving a diagnosis. The prognosis typically relies on the patient’s age and how far the illness has advanced prior to detection. The sixth most frequent cause of mortality in the US is AD. Other ailments brought on by the problems of AD can be fatal. For instance, if a person with AD has trouble swallowing, they may suffer from dehydration, malnourishment, or respiratory infections if foods or fluids enter their lungs. The individuals responsible for the patient’s care are also directly and significantly impacted by AD in addition to the patients themselves. Caregiver stress condition refers to a deterioration in the psychological and/or physical well-being of the individual caring for the Alzheimer’s sufferer and is another persistent complication of AD in this regard.

Rapid progress in neuroimaging techniques has rendered the integration of massively high-dimensional, heterogeneous neuroimaging data essential. Consequently, there has been great interest in computer-aided ML techniques for the integrative analysis of neuroimaging data. The use of popular ML methods such as the Support Vector Machine (SVM), Linear Discriminant Analysis (LDA), and Decision Trees (DT), among others, promises early recognition and progression forecasting of AD. Nevertheless, proper pre-processing steps are required prior to employing these methods. For classification and prediction, these steps involve feature extraction, feature selection, dimensionality reduction, and feature-based classification. These methods require specialized knowledge as well as multiple time-consuming optimization phases [5]. Deep learning (DL), an emerging branch of machine learning research that uses raw neuroimaging data to build features through “on-the-fly” learning, is gaining significant interest in the field of large-scale, high-dimensional neuroimaging analysis as a means of overcoming these obstacles [59].

This entry is adapted from the peer-reviewed paper 10.3390/healthcare10101842