TPT (time partition testing) is a systematic test methodology for the automated software testing and verification of embedded control systems, cyber-physical systems, and dataflow programs. TPT specialises in testing and validating embedded systems whose inputs and outputs can be represented as signals, and it is a dedicated method for testing the continuous behaviour of systems. Most control systems belong to this system class. The outstanding characteristic of control systems is that they interact closely with a real-world environment. Controllers need to observe their environment and react to its behaviour. The system works in an interaction cycle with its environment and is subject to temporal constraints. Testing these systems means stimulating them and checking their timing behaviour.

Traditional functional testing methods use scripts, whereas TPT uses model-based testing. TPT combines a systematic, graphical modelling technique for test cases with fully automated test execution in different environments and automatic test evaluation. TPT covers the following four test activities:

- test case modelling

- test execution in different environments

- test assessment

- test documentation

1. Graphic Test Cases

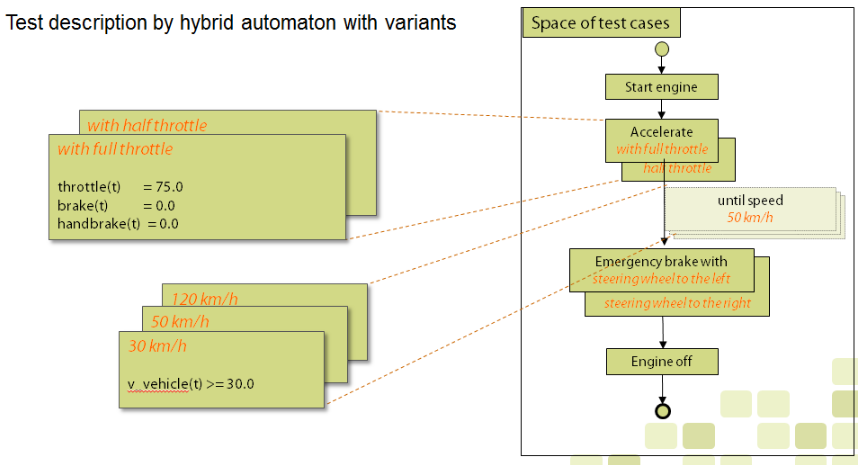

In TPT, tests are modelled graphically with the aid of special state machines and time partitioning.[1][2] All test cases for one system under test can be modelled using one hybrid automaton. Tests often consist of a sequence of logical phases. The states of the finite-state machine represent the logical phases of a test, which are similar for all tests. Trigger conditions model the transitions between the test phases. Each state and transition of the automaton may have different variants. The combination of the variants models the individual test cases.
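The idea that test cases arise from combining variants can be sketched in a few lines of plain Python. This is only an illustration, not TPT's own notation or API: the phase names, variant names, and the `enumerate_test_cases` helper are all hypothetical, and a real test model would normally select specific combinations rather than the full product shown here.

```python
from itertools import product

# Hypothetical test model: each phase (state) of the automaton has named variants.
phases = {
    "initialise": ["engine_off", "engine_idle"],
    "stimulate": ["slow_ramp", "step"],
    "finalise": ["hold"],
}

def enumerate_test_cases(phases):
    """Return every combination of one variant per phase, in phase order."""
    names = list(phases)
    variant_lists = [phases[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*variant_lists)]

test_cases = enumerate_test_cases(phases)
print(len(test_cases))  # 2 * 2 * 1 = 4 combinations
```

The full product is only an upper bound on the number of test cases; the shared phase structure stays the same for all of them, and only the chosen variants differ.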

Natural-language texts become part of the graphics, which keeps the test cases readable even for non-programmers. Techniques such as parallel and hierarchically branching state machines, conditional branching, reactivity, signal description, measured signals, and lists of simple test steps allow intuitive, graphical modelling even of complex test cases.

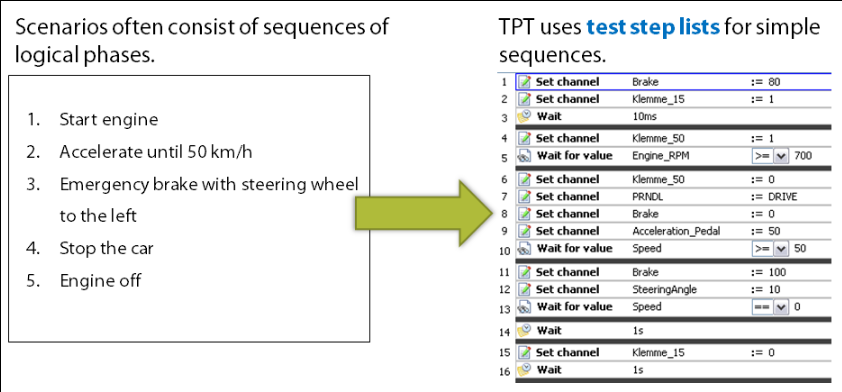

The complexity of a test is hidden behind the graphics. At the lowest level, signals are described either by test-step lists or by so-called direct definitions.

1.1. Modelling Simple Sequences: Test-Step List

The Test-Step List models simple sequences of test steps that do not need to execute in parallel, such as setting signals (Set channel), ramping signals (Ramp channel), setting parameters (Set parameter), and waiting (Wait). Checks of the expected test results can be placed within the test sequence to evaluate the system under test as it runs. It is also possible to place sub-automata in the Test-Step List, which in turn contain automata and sequences, resulting in hierarchical Test-Step Lists. Test sequences can also be combined and parallelised with other modelling methods, so a test can be as simple or as complex as needed.

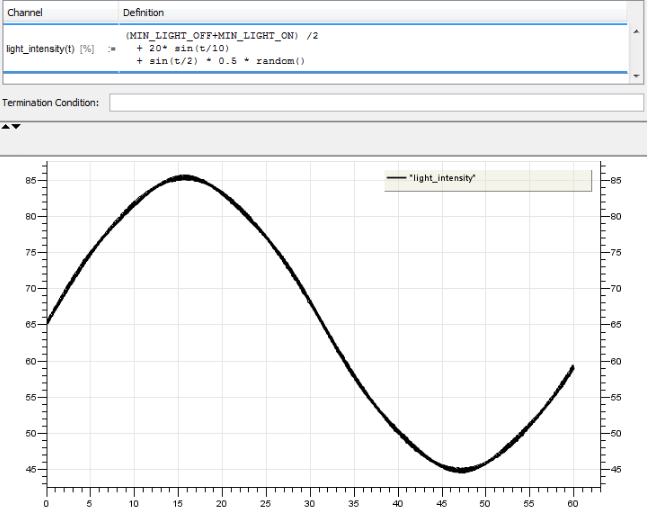
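As a rough illustration of how a sequential step list turns into a signal, the following plain-Python sketch interprets set, ramp, and wait steps for a single channel. The tuple format, the `run_steps` function, and the fixed sample time `dt` are assumptions made for this example; they are not TPT syntax.

```python
def run_steps(steps, dt=0.1):
    """Execute steps one after another and return the sampled channel values."""
    value = 0.0
    samples = []
    for step in steps:
        kind = step[0]
        if kind == "set":        # ("set", value): takes effect immediately
            value = step[1]
        elif kind == "wait":     # ("wait", duration): hold the current value
            samples.extend([value] * round(step[1] / dt))
        elif kind == "ramp":     # ("ramp", target, rate): move linearly to target
            target, rate = step[1], step[2]
            if rate <= 0:
                raise ValueError("ramp rate must be positive")
            delta = rate * dt
            while abs(target - value) > delta:
                value += delta if target > value else -delta
                samples.append(value)
            value = target       # land exactly on the target
            samples.append(value)
        else:
            raise ValueError(f"unknown step: {kind}")
    return samples

steps = [("set", 0.0), ("wait", 0.2), ("ramp", 1.0, 5.0), ("wait", 0.1)]
samples = run_steps(steps)
print(samples)  # [0.0, 0.0, 0.5, 1.0, 1.0]
```

Each step finishes before the next one starts, which is the property that distinguishes a step list from the parallel state machines described earlier.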

1.2. Direct Signal Definition: Direct Definition

Within the Test-Step List it is possible to use so-called "Direct Definitions". With this type of modelling, signals are defined as functions of time, of past variables or test events, and of other signals. Signals can also be defined by writing "C-style" code, by importing measurement data, or with a manual signal editor.
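A direct definition can be thought of as a formula evaluated at every time step. The sketch below shows the idea in plain Python, with one signal defined purely over time and a second one derived from the first. The signal names and the `sample` helper are hypothetical and stand in for whatever notation the tool itself uses.

```python
def speed_demand(t):
    """Signal defined purely as a function of time t (in seconds)."""
    return 10.0 * t if t < 2.0 else 20.0

def brake_request(speed):
    """Signal defined from another signal: request braking at 20 or above."""
    return 1 if speed >= 20.0 else 0

def sample(duration, dt):
    """Evaluate both signals on a fixed time grid."""
    rows = []
    for k in range(round(duration / dt) + 1):
        t = k * dt
        v = speed_demand(t)
        rows.append((t, v, brake_request(v)))
    return rows

rows = sample(3.0, 1.0)
```

With these definitions, `rows` holds one `(time, speed, brake)` tuple per second, and the brake request switches to 1 as soon as the speed signal reaches 20.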

1.3. Functions

It is possible to define functions that act as clients or servers. Client functions are called from TPT in the system under test, whereas server functions are implemented in TPT and can be called as "stub functions" from the system under test. TPT itself may also call the server functions.

1.4. Systematic Test Cases

TPT was developed specifically for testing the continuous and reactive behaviour of embedded systems.[3] TPT can be seen as an extension of the Classification Tree Method in terms of timing behaviour. Because of its systematic approach to test case generation, TPT keeps track of even very complex systems whose thorough testing requires a large number of test cases, making it possible to find failures in the system under test with an ideal number of test cases.

The underlying idea of TPT's systematic approach is the separation of similarities and differences among the test cases: most test cases are very similar in their structural process and differ "only" in a few, but crucial, details.[4] TPT makes use of this fact by modelling the shared structures jointly and reusing them. On the one hand, redundancies are avoided. On the other hand, it becomes very clear what the test cases actually differ in, that is, which specific aspect each of them tests. This improves the comparability of test cases and the overview, and it focuses the tester's attention on the essential: the differentiating features of the test cases.

The hierarchical structure of the test cases makes it possible to break complex test problems down into sub-problems, which also improves the clarity and, as a result, the quality of the test.

These modelling techniques support the tester in finding the actually relevant cases, avoiding redundancies and keeping track of even large numbers of test cases.[5]

1.5. Automatic Test Case Generation

TPT offers several ways to generate test cases automatically:

- test cases from equivalence classes

- test cases for the coverage of Simulink models by using static analysis and a search-based method[6]

- test cases by building sequences from variants of states and transitions of a test model

- test cases by transforming recordings of user interactions with the system under test via a graphical user interface (Dashboard)
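The first option in the list, generation from equivalence classes, can be illustrated with a minimal sketch. The source does not say how representatives are chosen or combined, so everything here is an assumption: classes are given as integer ranges, the midpoint serves as the representative, and test cases are built so that every class is used at least once.

```python
# Hypothetical equivalence classes for two inputs, given as (low, high) ranges.
classes = {
    "engine_speed_rpm": [(0, 799), (800, 3000), (3001, 7000)],
    "pedal_percent": [(0, 0), (1, 100)],
}

def representative(low, high):
    """Pick the midpoint of a class as its representative value."""
    return (low + high) // 2

def generate(classes):
    """Build test cases so that every class of every input is used at least once."""
    reps = {
        name: [representative(low, high) for low, high in ranges]
        for name, ranges in classes.items()
    }
    count = max(len(values) for values in reps.values())
    # Inputs with fewer classes cycle through their representatives again.
    return [
        {name: values[i % len(values)] for name, values in reps.items()}
        for i in range(count)
    ]

cases = generate(classes)
```

This "each class once" strategy yields three test cases here, compared with six for the full cross product; which trade-off is appropriate depends on the system under test.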

1.6. Reactive Tests

With TPT, each test case can react specifically to the system's behaviour[7] during the testing process in real time, for instance exactly when a certain system state occurs or a sensor signal exceeds a certain threshold. If, for example, a sensor failure for an engine controller is to be simulated when the engine idling speed is exceeded, it has to be possible to react to the event "engine idling speed exceeded" in the description of the test case.
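The engine example can be sketched as a loop that watches an observed signal and changes the stimulation once the trigger condition holds. This is a simplified illustration in plain Python: the threshold value, the function name, and the use of a recorded list in place of a live system under test are all assumptions.

```python
IDLE_SPEED_RPM = 800  # hypothetical idling-speed threshold

def run_reactive_test(speed_trace):
    """Inject a sensor fault from the first sample that exceeds the idle speed."""
    fault_active = False
    log = []
    for step, speed in enumerate(speed_trace):
        if not fault_active and speed > IDLE_SPEED_RPM:
            fault_active = True  # the transition fires on the observed event
        log.append((step, speed, fault_active))
    return log

log = run_reactive_test([700, 780, 820, 900, 750])
fault_start = next(step for step, _, active in log if active)
print(fault_start)  # 2
```

The point at which the fault is injected is not fixed in advance; it depends on when the observed signal crosses the threshold, which is what makes the test reactive rather than purely time-driven.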

2. Test Execution

TPT test cases are independent of their execution. Thanks to the so-called virtual machine (VM) concept, the test cases can be executed in almost any environment, including real-time environments. Examples are MATLAB/Simulink, TargetLink, ASCET, C code, CAN, AUTOSAR, SystemDesk, DaVinci CT, LABCAR, INCA, Software-in-the-Loop (SiL) and Hardware-in-the-Loop (HiL). TPT is thus an integrated tool that can be used in all testing phases of development, such as unit testing, integration testing, system testing and regression testing.

For analysis and measurement of code coverage, TPT can interact with coverage tools like Testwell CTC++ for C-code.

A configurable graphical user interface (Dashboard), based on GUI widgets, can be used to interact with tests.

2.1. TPT Virtual Machine

The modelled test cases in TPT are compiled and, during test execution, interpreted by the so-called virtual machine (VM). The VM is the same for all platforms and all tests. Only a platform adapter realises the signal mapping for the individual application. The TPT VM is implemented in ANSI C, requires just a few kilobytes of memory, and works entirely without dynamic memory allocation, so it can also be used in minimal environments with few resources. There are also APIs for C and .NET.

TPT's virtual machine is able to process tests in real time with defined response behaviour. The response times of TPT test cases are normally within microseconds, depending on the complexity and the test hardware.
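The split between a platform-independent executor and a thin platform adapter can be sketched as follows. This is a conceptual illustration in Python, not the ANSI C implementation or its API: the class names, the `read`/`write` interface, and the toy environment that doubles its input are all hypothetical.

```python
class PlatformAdapter:
    """Maps abstract test channels onto one concrete environment."""
    def write(self, channel, value):
        raise NotImplementedError
    def read(self, channel):
        raise NotImplementedError

class DictAdapter(PlatformAdapter):
    """Stand-in environment: a dict-backed system that doubles its input."""
    def __init__(self):
        self.signals = {"in": 0.0, "out": 0.0}
    def write(self, channel, value):
        self.signals[channel] = value
        self.signals["out"] = 2 * self.signals["in"]
    def read(self, channel):
        return self.signals[channel]

def execute(test_inputs, adapter):
    """Platform-independent executor: it only ever talks to the adapter."""
    outputs = []
    for value in test_inputs:
        adapter.write("in", value)
        outputs.append(adapter.read("out"))
    return outputs

outputs = execute([1.0, 2.5], DictAdapter())
print(outputs)  # [2.0, 5.0]
```

Running the same test in another environment would, in this sketch, only require a different adapter class; `execute` and the test inputs stay unchanged.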

3. Programmed Test Assessment

The expected system behaviour for individual test cases should also be checked automatically to ensure efficient test processes. TPT can compute the properties of the expected behaviour online (during test execution) and offline (after test execution). While online evaluation uses the same modelling techniques as test modelling, offline evaluation offers considerably more far-reaching possibilities for complex evaluations, including operations such as comparisons with external reference data, limit-value monitoring, signal filters, and analyses of state sequences and time conditions.

The offline evaluation is, technically speaking, based on the Python scripting language, which has been extended with specific syntactic language elements and a specialised evaluation library to support test evaluation. The use of a scripting language ensures a high degree of flexibility in the test evaluation: access to reference data, communication with other tools, and development of one's own domain-specific libraries for test evaluation are supported. Besides script-based evaluation of test results, user interfaces provide simple access to the test assessments and help non-programmers avoid scripting.
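Two of the operations mentioned above, limit-value monitoring and comparison with reference data, can be illustrated in standard Python. The sketch deliberately uses none of TPT's language extensions or its evaluation library, whose details are not given here; the function names, sample data, and tolerance are assumptions for the example.

```python
def within_limits(signal, lower, upper):
    """Limit-value monitoring: every sample must stay inside [lower, upper]."""
    return all(lower <= value <= upper for value in signal)

def matches_reference(signal, reference, tolerance):
    """Reference comparison: sample-wise difference must not exceed tolerance."""
    return len(signal) == len(reference) and all(
        abs(a - b) <= tolerance for a, b in zip(signal, reference)
    )

def assess(signal, reference, lower, upper, tolerance):
    """Combine the individual checks into one verdict."""
    checks = {
        "limits": within_limits(signal, lower, upper),
        "reference": matches_reference(signal, reference, tolerance),
    }
    verdict = "success" if all(checks.values()) else "failed"
    return verdict, checks

measured = [0.0, 0.48, 1.02, 1.0]    # recorded during a test run
reference = [0.0, 0.5, 1.0, 1.0]     # external reference data
verdict, checks = assess(measured, reference, lower=-0.1, upper=1.1, tolerance=0.05)
print(verdict)  # success
```

Because the assessment runs on recorded data after execution, the same checks can be repeated with other tolerances or reference files without rerunning the test.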

Measurement data from other sources, such as TargetLink and Simulink signal logging or MCD-3 measurement data, can be assessed automatically. This data can be independent of the test execution.

4. Test Documentation

TPT test documentation according to IEEE 829 presents the result of the test evaluation to the tester in an HTML report. For each test case, the report can show not only the bare result "success", "failed" or "unknown", but also details such as characteristic parameters or signals that were observed during test execution or computed in the test evaluation. Since the test assessment returns precise information about the timing and the checked behaviour, this information can be made available in the report. The content of the test documentation as well as the structure of the document can be freely configured with the help of a template.

5. Test Management

TPT supports test management of TPT test projects with the following activities:

- Test case development in a test project

- Test planning through test set configuration and test execution configuration

- Automatic test execution and evaluation (assessment) in a Test campaign

- Test reporting (detailed for an individual test run)

- Test summary reporting over different release cycles

- Traceability of requirements, tests, test runs, test results

6. Requirements Tracing

Industry standards such as IEC 61508, DO-178B, EN 50128 and ISO 26262 require traceability of requirements and tests. TPT offers an interface to requirements tools such as Telelogic DOORS to support these activities.
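What traceability between requirements, tests, and results amounts to can be shown with a small sketch. The requirement and test-case identifiers, the data layout, and the `trace` function are hypothetical; they do not reflect the DOORS interface or TPT's internal data model.

```python
# Hypothetical links from requirements to the test cases that cover them.
links = {
    "REQ-1": ["TC-1"],
    "REQ-2": [],
    "REQ-3": ["TC-2"],
    "REQ-4": ["TC-3"],
}
# Results of the latest test run; TC-3 has not been executed.
results = {"TC-1": "success", "TC-2": "failed"}

def trace(links, results):
    """Derive a status per requirement from the results of its linked tests."""
    report = {}
    for requirement, tests in links.items():
        if not tests:
            report[requirement] = "uncovered"
        elif all(results.get(test) == "success" for test in tests):
            report[requirement] = "success"
        elif any(results.get(test) == "failed" for test in tests):
            report[requirement] = "failed"
        else:
            report[requirement] = "unknown"
    return report

report = trace(links, results)
```

Here `report` marks REQ-1 as "success", REQ-2 as "uncovered", REQ-3 as "failed" and REQ-4 as "unknown", which is the kind of overview a traceability check is meant to provide.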

7. Application

TPT is a model-based testing tool that is applied mainly in automotive controller development.[8] It was originally developed within Daimler AG for the company's own development, and Daimler coordinated the development of the testing tool for years.[9] Since 2007, PikeTec has continued the development of the tool. TPT is used by many other car manufacturers, such as BMW, Volkswagen, Audi, Porsche and General Motors, as well as by suppliers such as Robert Bosch GmbH, Continental and Hella.[10]

The content is sourced from: https://handwiki.org/wiki/Software:TPT

References

- "Justyna Zander-Nowicka, Abel Marrero Pérez, Ina Schieferdecker, Zhen Ru Dai: Test Design Patterns for Embedded Systems, In: 10th International Conference on Quality Engineering in Software Technology, CONQUEST 2007, Potsdam, Germany, September 2007". http://www.fokus.fraunhofer.de/de/motion/ueber_motion/unser_team/zander_justyna/Conquest_07_vfinal_DV2.pdf.

- "Schieferdecker, Bringmann, Grossmann: Continuous TTCN-3: Testing of Embedded Control Systems, In: Proceedings of 28th International Conference on Software Engineering, Shanghai, China, 2006". http://www.irisa.fr/lande/lande/icse-proceedings/seas/p29.pdf.

- "Bringmann, Krämer: Systematic testing of the continuous behavior of automotive systems In: International Conference on Software Engineering: Proceedings of the 2006 international workshop on Software, Shanghai, China, 2006". http://piketec.com/downloads/papers/bringmann-kraemer-systematic-testing-of-the-continuous-behavior-of-automotive-systems.pdf.

- "Lehmann, TPT – Dissertation, 2003". http://www.piketec.com/downloads/papers/ELehmannDissertation.pdf.

- "Lehmann: Time Partition Testing: A Method for Testing Dynamical Functional Behavior, In: Proceedings of Test2000, London, Great Britain, 2000". Evotest.iti.upv.es. http://evotest.iti.upv.es/index.php?option=com_docman&task=doc_view&gid=29&Itemid=70.

- Benjamin Wilmes: Hybrides Testverfahren für Simulink/TargetLink-Modelle, Dissertation, TU-Berlin, Germany, 2015.

- "Grossmann, Müller: A Formal Behavioral Semantics for TestML, In: Proc. of ISOLA 06, Paphos, Cyprus, November 2006". Immos-project.de. http://www.immos-project.de/site_immos/download/TestML_Semantics.pdf.

- Bringmann, E.; Krämer, A. (2008). "Model-Based Testing of Automotive Systems". International Conference on Software Testing, Verification, and Validation (ICST). pp. 485–493. doi:10.1109/ICST.2008.45. ISBN 978-0-7695-3127-4. http://www.piketec.com/downloads/papers/Kraemer2008-Model_based_testing_of_automotive_systems.pdf.

- Conrad, Mirko; Fey, Ines; Grochtmann, Matthias; Klein, Torsten (2001-07-09). "Modellbasierte Entwicklung eingebetteter Fahrzeugsoftware bei DaimlerChrysler". Informatik - Forschung und Entwicklung 20 (1–2): 3–10. doi:10.1007/s00450-005-0197-5. https://dx.doi.org/10.1007%2Fs00450-005-0197-5

- Hanser Automotive website. Retrieved 16 March 2015. http://www.hanser-automotive.de/aktuelle-entwicklungswerkzeuge/entwicklungswerkzeuge/article/piketec-tpt-als-test-und-verifikationssoftware.html