Augmented reality (AR) is an innovative technology that enhances the real world by superimposing computer-generated virtual objects onto it. Its application in image-guided surgery (IGS) is an increasingly important opportunity for the treatment of patients. In particular, AR allows surgeons to see 3D images projected directly onto patients through special displays.

- augmented reality

- image guided surgery

- surgery

1. Introduction

Imaging is known to play an increasingly important role in many surgical domains [1]. Its origin dates back to 1895, when W. C. Roentgen discovered X-rays [2]. X-rays found increasing application throughout the twentieth century, and in more recent years other imaging techniques have been developed, making the acquisition of data on the internal structures of the human body more and more useful [1][3][4][5]. This fostered an increasing use of images to guide surgeons during interventions, leading to the establishment of image-guided surgery (IGS) [6]. The need to reduce surgical invasiveness, by supporting physicians in the diagnostic and preoperative phases as well as during surgery itself, led to solutions such as the 3D visualization of anatomical parts and the application of augmented reality (AR) in surgery [1][3][4]. Augmented reality consists of merging the real world with virtual objects (VOs) generated by computer graphics systems, creating an environment for the user that is augmented with VOs. The origins of AR date back to 1968, when Sutherland created the first head-mounted display [7]. The term AR is often used in conjunction with virtual reality (VR). The difference between them is that VR creates an artificial digital environment, stimulating the user's senses and simulating the external world through computer graphics systems [8], while AR overlays computer-generated images onto the real world, enhancing the user's perception and showing what would otherwise not be perceptible, as reported by Park et al. [1] and Desselle et al. [9].

The application of AR in IGS can be an increasingly important opportunity for the treatment of patients. In particular, AR allows doctors to see 3D images projected directly onto patients through special displays, described in the next section. This can facilitate the perception of the scene being examined and ease the operators' task compared with the traditional approach, in which 2D preoperative images are displayed on 2D monitors, requiring the doctor to mentally transform them into 3D objects and to look away from the patient [1][5].

2. Augmented Reality in Surgery

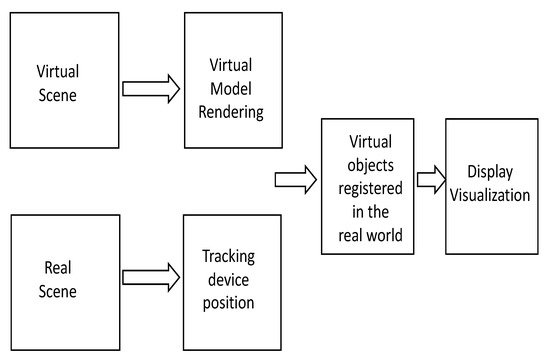

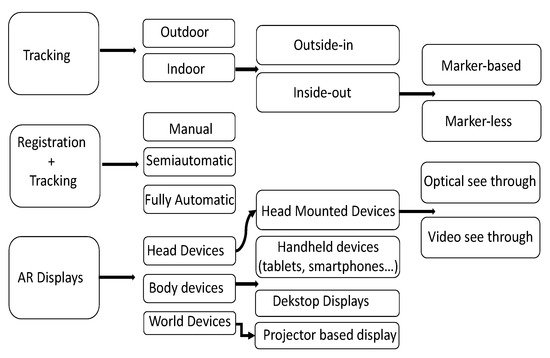

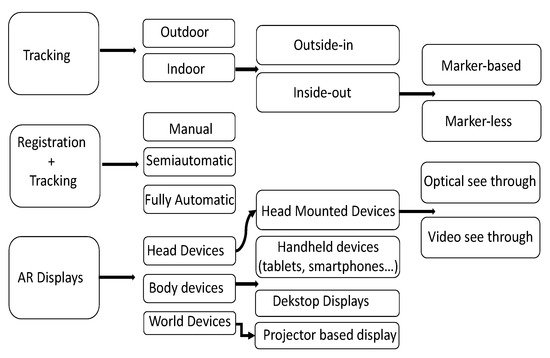

This section describes the main aspects leading to the visualization of VOs superimposed on the real world. The workflow of AR-enabled systems is shown in Figure 1: once the virtual model has been rendered, tracking and registration are the two basic steps, which together provide the correct spatial positioning of the VOs with respect to the real world [10]. This is possible because tracking detects and measures the spatial characteristics of an object. Specifically, in AR, tracking denotes the operations needed to determine the device's six degrees of freedom (its 3D position and orientation) within the environment, which are required to compute the user's point of view in real time. Tracking can be performed outdoors or indoors; this entry focuses on the latter. Two methods of indoor tracking can be distinguished: outside-in and inside-out. In the outside-in method, the sensors are placed at fixed positions in the environment and sense the device location, often resorting to marker-based systems [11]. In the inside-out method, the camera or the sensors are placed on the device itself, whose spatial features are to be tracked in the environment. In this case, the device determines how its position changes in relation to the environment, as in head-mounted displays (HMDs). Inside-out tracking can be marker-based or marker-less. The marker-based vision technique uses optical sensors to measure the device pose from the recognition of fiducial markers placed in the environment. This method can also hyperlink physical objects to web-based content using graphic tags or automatic identification technologies such as radio-frequency identification (RFID) systems [12]. The marker-less method, conversely, does not require fiducial markers.
Instead, it relies on the recognition of distinctive features present in the environment, which are in turn used, together with computer vision and image-processing techniques, to localize the device.

Registration involves matching and aligning the tracked spatial features obtained from the real world (RW) with the corresponding points of the VOs to reach an optimal overlap between them [1]. The accuracy of this process determines how accurately the virtual content is represented over the real world and how natural the augmented image appears [13]. The registration phase is connected to the tracking phase. Depending on how these two are accomplished, the process is defined as manual, fully automatic or semiautomatic. The manual process combines manual registration and manual tracking: landmarks are identified both on the model and on the patient, and the preoperative 3D model displayed on the operative monitor is then manually oriented and resized to match the real images. The fully automatic process is the most complex one, especially with soft tissues. Since real-world objects change their shape over time, the same deformation must be applied to the VOs; otherwise, any deformation during surgery, due to events such as respiration, can result in an inaccurate real-time registration and, consequently, an imprecise overlap between the 3D VOs and the real objects (ROs). Finally, the semiautomatic process combines automatic tracking with manual registration. Landmark structures are identified automatically, both on the 3D model and on the real structures, while the overlay of the model onto them, including its orientation and resizing, is performed manually. This aspect is what differentiates the automatic process from the semiautomatic one.
The semiautomatic process overlays the AR images on reality statically and manually, while the automatic process makes the 3D virtual models dynamically match the actual structures [1][14][15][16].

For the visualization of VOs onto the real world, several AR display technologies exist, usually classified as head, body and world devices, depending on where they are located [7][17]. World devices are located in a fixed place. This category includes desktop displays used as AR displays and projector-based displays. The former are equipped with a webcam, a virtual mirror showing the scene framed by the camera, and a virtual showcase that lets the user see the scene alongside additional information. Projector-based displays cast VOs directly onto the surfaces of the corresponding real-world objects. Body devices usually refer to handheld Android-based platforms, such as tablets or mobile phones. These devices use the camera to capture the actual scene in real time, while sensors such as gyroscopes, accelerometers and magnetometers determine their rotation. They usually resort to fiducial image targets for the tracking-registration phase [18]. Finally, HMDs are near-eye displays: wearable, glasses-like devices that have the advantage of leaving the hands free for other tasks. HMDs are mainly of two types: video see-through and optical see-through. Video see-through devices let the user see the external world through a camera whose frames are combined with the VOs: the external environment is recorded in real time, and the final images, with the VOs overlaid, are shown directly on the displays in front of the user's eyes.
Optical see-through devices, by contrast, use an optical combiner or holographic waveguides as lenses: images transmitted by a projector are overlaid on the same lenses through which the real world remains normally visible. In this way, the user directly sees reality augmented with the VOs overlaid onto it [7][19]. Figure 2 shows an example of an HMD.
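
Handheld (body) devices, as noted above, determine their rotation from inertial sensors. One common way to fuse a gyroscope with an accelerometer is a complementary filter; the sketch below is a minimal single-axis version, not the method of any specific device or of the cited works. The function name, the sample layout `(gyro_rate, acc_y, acc_z)`, the axis convention and the weight `k = 0.98` are illustrative assumptions, and yaw, which would need the magnetometer, is not covered.

```python
import math
from typing import Iterable, Tuple


def complementary_filter(
    samples: Iterable[Tuple[float, float, float]],
    dt: float,
    k: float = 0.98,
    initial_angle: float = 0.0,
) -> float:
    """Estimate one tilt angle (radians) from (gyro_rate, acc_y, acc_z) samples.

    Hypothetical helper with an assumed axis convention:
    - gyro_rate (rad/s) is integrated: smooth, but drifts over time;
    - the accelerometer gives the tilt relative to gravity: noisy, but drift-free;
    - k weights the gyroscope path, (1 - k) the accelerometer path.
    """
    angle = initial_angle
    for gyro_rate, acc_y, acc_z in samples:
        gyro_angle = angle + gyro_rate * dt       # short-term prediction
        acc_angle = math.atan2(acc_y, acc_z)      # long-term gravity reference
        angle = k * gyro_angle + (1.0 - k) * acc_angle
    return angle
```

With `k` close to 1, the gyroscope dominates over short intervals, while the accelerometer slowly pulls the estimate back toward the gravity-based tilt; this bounds the drift that pure gyroscope integration would accumulate from a sensor bias.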

Figure 1. Workflow of augmented-reality-enabled systems.
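
The tracking step of this workflow yields the device's six-degree-of-freedom pose, from which the user's point of view is computed. A minimal sketch, assuming a pinhole camera that looks along +z (an OpenCV-style convention) and a pose given as a device-to-world rotation plus a position: a VO point in world coordinates is first expressed in the device frame and then projected to pixel coordinates. `Pose6DoF`, `rot_y` and `project` are hypothetical names, and the intrinsics `fx, fy, cx, cy` are illustrative values, not parameters of any specific HMD.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


def rot_y(angle_rad: float) -> Mat3:
    """Rotation about the vertical (y) axis: one of the three rotational DoF."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return ((c, 0.0, s), (0.0, 1.0, 0.0), (-s, 0.0, c))


@dataclass
class Pose6DoF:
    """Hypothetical tracker output: orientation (3 DoF) + position (3 DoF),
    both expressed in the world (room) frame."""
    rotation: Mat3   # device-to-world rotation matrix
    position: Vec3   # device origin in world coordinates

    def world_to_device(self, p: Vec3) -> Vec3:
        # p_device = R^T (p_world - t): undo the translation, then the rotation
        d = [p[i] - self.position[i] for i in range(3)]
        R = self.rotation
        return tuple(sum(R[k][i] * d[k] for k in range(3)) for i in range(3))


def project(p_dev: Vec3, fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Pinhole projection of a device-frame point to pixel coordinates."""
    x, y, z = p_dev
    if z <= 0:
        raise ValueError("point is behind the viewer")
    return (fx * x / z + cx, fy * y / z + cy)
```

For example, with the device at the origin and no rotation, the VO point `(0.5, 0.0, 2.0)` projects to `(520.0, 240.0)` for `fx = fy = 800`, `cx = 320`, `cy = 240`. A renderer would apply the same two steps to every vertex of a VO in each frame, so that the overlay follows the user's viewpoint as the tracked pose changes.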

Figure 2. Example of an HMD: HoloLens 2 (Microsoft, WA, USA).
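
The two HMD types described in this section differ in how VOs are combined with the real scene. A simplified per-pixel sketch, assuming linear RGB intensities in [0, 1]: video see-through is modelled as standard alpha ("over") compositing of the rendered VO onto the camera frame, and optical see-through as an additive combiner with an assumed transmittance. Real optical combiners are more complex; the function names and parameters are hypothetical.

```python
from typing import Tuple

RGB = Tuple[float, float, float]  # assumed: linear intensities in [0, 1]


def video_see_through(camera: RGB, virtual: RGB, alpha: float) -> RGB:
    """Video see-through: the camera frame is blended digitally with the
    rendered VO ('over' compositing) before being shown to the user."""
    return tuple(alpha * v + (1.0 - alpha) * c for c, v in zip(camera, virtual))


def optical_see_through(real: RGB, virtual: RGB, transmittance: float = 1.0) -> RGB:
    """Optical see-through (simplified additive model): the combiner lets a
    fraction of real-world light through and adds the projected light on top,
    clipped to the display range. Light can only be added, never removed."""
    return tuple(min(1.0, transmittance * r + v) for r, v in zip(real, virtual))
```

For example, with a bright background `(0.8, 0.8, 0.8)` and a dark blue VO `(0.0, 0.0, 0.5)` at full opacity, the video blend yields `(0.0, 0.0, 0.5)`, while the optical model yields `(0.8, 0.8, 1.0)`: the VO can only add light, so the real scene stays visible through it.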

The different techniques are summarized in Figure 3.

Figure 3. Summary of the techniques.
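
Landmark-based registration, described in this section as matching tracked real-world features with the corresponding points of the VOs, is often formulated as a least-squares problem over paired points. A minimal sketch using NumPy, assuming the landmark correspondences are already known and the anatomy is treated as rigid (the deformable, fully automatic case is not covered): it estimates the rotation, the translation and, optionally, the uniform scale that manual resizing would otherwise adjust, using Umeyama's SVD-based method. This is a standard textbook technique, not the specific procedure of the cited works, and the function names are hypothetical.

```python
import numpy as np


def register_landmarks(model_pts, patient_pts, with_scale=True):
    """Least-squares similarity transform (Umeyama's SVD method) such that
    patient ≈ s * R @ model + t for every pair of corresponding landmarks.

    model_pts, patient_pts: (N, 3) arrays of corresponding points,
    N >= 3 and not all collinear.
    """
    P = np.asarray(model_pts, dtype=float)
    Q = np.asarray(patient_pts, dtype=float)
    n = len(P)
    mu_p, mu_q = P.mean(axis=0), Q.mean(axis=0)
    Pc, Qc = P - mu_p, Q - mu_q            # centre both landmark sets
    H = Qc.T @ Pc / n                      # 3x3 cross-covariance
    U, S, Vt = np.linalg.svd(H)
    D = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        D[2, 2] = -1.0                     # avoid a reflection: det(R) = +1
    R = U @ D @ Vt
    if with_scale:
        s = float(np.sum(S * np.diag(D)) / (np.sum(Pc ** 2) / n))
    else:
        s = 1.0
    t = mu_q - s * (R @ mu_p)
    return s, R, t


def fiducial_registration_error(model_pts, patient_pts, s, R, t):
    """Root-mean-square distance between transformed model landmarks
    and the corresponding patient landmarks."""
    P = np.asarray(model_pts, dtype=float)
    Q = np.asarray(patient_pts, dtype=float)
    residuals = s * (P @ R.T) + t - Q
    return float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))
```

With noise-free landmarks related by a known rotation, scale and translation, the transform is recovered to numerical precision. With real, noisy landmarks, the root-mean-square residual (often called the fiducial registration error, FRE) gives a first indication of overlay quality, although it does not measure the error away from the landmarks.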

This entry is adapted from the peer-reviewed paper 10.3390/app12146890

References

- Park, B.J.; Hunt, S.J.; Martin, C., III; Nadolski, G.J.; Wood, B.; Gade, T.P. Augmented and Mixed Reality: Technologies for Enhancing the Future of IR. J. Vasc. Interv. Radiol. 2020, 31, 1074–1082.

- Villarraga-Gómez, H.; Herazo, E.L.; Smith, S.T. X-ray computed tomography: From medical imaging to dimensional metrology. Precis. Eng. 2019, 60, 544–569.

- Cutolo, F. Augmented Reality in Image-Guided Surgery. In Encyclopedia of Computer Graphics and Games; Lee, N., Ed.; Springer International Publishing: Cham, Switzerland, 2017; pp. 1–11.

- Allison, B.; Ye, X.; Janan, F. MIXR: A Standard Architecture for Medical Image Analysis in Augmented and Mixed Reality; IEEE Computer Society: Washington, DC, USA, 2020; pp. 252–257.

- Marmulla, R.; Hoppe, H.; Mühling, J.; Eggers, G. An augmented reality system for image-guided surgery: This article is derived from a previous article published in the journal International Congress Series. Int. J. Oral Maxillofac. Surg. 2005, 34, 594–596.

- Peters, T.M. Image-guidance for surgical procedures. Phys. Med. Biol. 2006, 51, R505–R540.

- Eckert, M.; Volmerg, J.S.; Friedrich, C.M. Augmented Reality in Medicine: Systematic and Bibliographic Review. JMIR Publ. 2019, 7, e10967.

- Kim, Y.; Kim, H.; Kim, Y.O. Virtual Reality and Augmented Reality in Plastic Surgery: A Review. Arch. Plast. Surg. 2017, 44, 179–187.

- Desselle, M.R.; Brown, R.A.; James, A.R.; Midwinter, M.J.; Powell, S.K.; Woodruff, M.A. Augmented and Virtual Reality in Surgery. Comput. Sci. Eng. 2020, 22, 18–26.

- Pérez-Pachón, L.; Poyade, M.; Lowe, T.; Gröning, F. Image Overlay Surgery Based on Augmented Reality: A Systematic Review. In Biomedical Visualisation. Advances in Experimental Medicine and Biology; Springer International Publishing: Cham, Switzerland, 2020; Volume 1260, pp. 175–195.

- Zafari, F.; Gkelias, A.; Leung, K.K. A Survey of Indoor Localization Systems and Technologies. IEEE Commun. Surv. Tutor. 2019, 21, 2568–2599.

- Cheng, J.; Chen, K.; Chen, W. Comparison of marker-based AR and markerless AR: A case study on indoor decoration system. In Proceedings of the Lean and Computing in Construction Congress (LC3): Proceedings of the Joint Conference on Computing in Construction (JC3), Heraklion, Greece, 4–7 July 2017; pp. 483–490.

- Thangarajah, A.; Wu, J.; Madon, B.; Chowdhury, A.K. Vision-based registration for augmented reality-a short survey. In Proceedings of the 2015 IEEE International Conference on Signal and Image Processing Applications (ICSIPA), Kuala Lumpur, Malaysia, 19–21 October 2015; pp. 463–468.

- Quero, G.; Lapergola, A.; Soler, L.; Shahbaz, M.; Hostettler, A.; Collins, T.; Marescaux, J.; Mutter, D.; Diana, M.; Pessaux, P. Virtual and Augmented Reality in Oncologic Liver Surgery. Surg. Oncol. Clin. N. Am. 2019, 28, 31–44.

- Tuceryan, M.; Greer, D.S.; Whitaker, R.T.; Breen, D.E.; Crampton, C.; Rose, E.; Ahlers, H.K. Calibration requirements and procedures for a monitor-based augmented reality system. IEEE Trans. Vis. Comput. Graph. 1995, 1, 255–273.

- Maybody, M.; Stevenson, C.; Solomon, S.B. Overview of Navigation Systems in Image-Guided Interventions. Tech. Vasc. Interv. Radiol. 2013, 16, 136–143.

- Makhataeva, Z.; Varol, H.A. Augmented Reality for Robotics: A Review. Robotics 2020, 9, 21.

- Mourtzis, D.; Angelopoulos, J.; Panopoulos, N. Intelligent Predictive Maintenance and Remote Monitoring Framework for Industrial Equipment Based on Mixed Reality. Front. Mech. Eng. 2020, 6, 578379.

- Thomas, B.H. A Survey of Visual, Mixed, and Augmented Reality Gaming. Comput. Entertain. 2012, 10, 1.