The most dangerous factors in a fire scene are smoke and heat, especially smoke. Locating people and guiding them out of a heavy smoke environment is therefore key to surviving an evacuation. A variety of instruments have been studied for use in fire and smoky situations, including visible camera, kinetic depth sensor, LIDAR, night vision, IR camera, radar, and sonar.

- human detection

- smoky fire scene

- firefighter protection

- human rescue

- real-time object detection

- YOLO

- thermal imaging camera

1. Introduction

Fire is one of the biggest workplace safety threats. It is a hazard that should be prevented in advance and, when it does occur, met with a fast emergency response. An effective fire evacuation plan is important to save human lives, protect firefighters, and minimize property loss [1]. It is widely known that the most dangerous factors in a fire scene are smoke and heat, especially smoke. Smoke can reduce visibility to zero, and people can die from smoke inhalation while evacuating a building or during firefighter rescue [3].

In a fire situation, smoke spreads at a speed of 3–5 m/s, while humans’ top movement speed is 0.5 m/s. Where the smoke arrives, the fire will follow. With this in mind, locating people and guiding them out of a heavy smoke environment is the key to surviving an evacuation. Detecting a human in heavy smoke is a challenging task. The most commonly used solutions are laser detection and ranging (LADAR), 3D laser scanning, ultrasonic sensors, and infrared thermal cameras [3,4].

2. Human Detection in Heavy Smoke Scenarios

2.1. Thermal Spectrum and Sensor

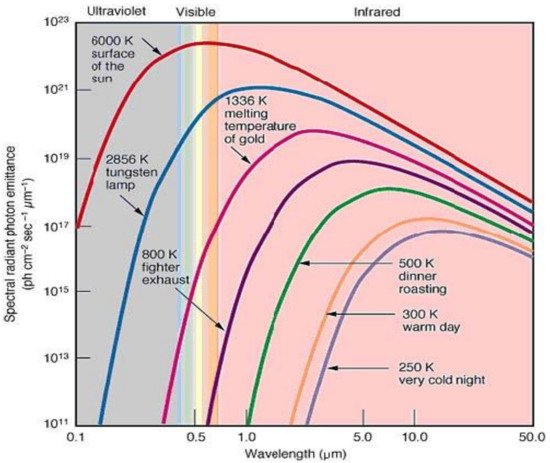

Objects above absolute zero emit infrared radiation across a spectrum of wavelengths, referred to as thermal radiation, as shown in Figure 1. The higher the temperature of the object, the shorter the wavelength at which its emission peaks.

Figure 1. Thermal radiation in different temperatures (Hajebi, 2008; pp. 105–112) [6].
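The relationship between temperature and peak emission wavelength can be illustrated with Wien's displacement law, which is not stated in the entry itself but is the standard black-body result behind curves like those in Figure 1. The sketch below is a minimal illustration; the function name and the sample temperatures are assumptions chosen for the example, and real objects are not ideal black bodies.

```python
# Wien's displacement constant in micrometre-kelvin (rounded).
WIEN_B_UM_K = 2897.77


def peak_wavelength_um(temperature_k: float) -> float:
    """Wavelength (um) at which an ideal black body at temperature_k emits most strongly."""
    if temperature_k <= 0:
        raise ValueError("temperature must be above absolute zero")
    return WIEN_B_UM_K / temperature_k


# Illustrative temperatures (assumed values, not taken from the entry).
samples = [
    ("human skin, about 305 K", 305.0),
    ("hot surface, about 600 K", 600.0),
    ("flame, about 1200 K", 1200.0),
]
for label, temp_k in samples:
    print(f"{label}: emission peaks near {peak_wavelength_um(temp_k):.2f} um")
```

Under these assumptions a human body peaks in the long-wave infrared band, while hotter sources peak at shorter wavelengths.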

A thermal imaging camera (TIC) converts thermal radiation into an electric signal, turning invisible infrared (IR) wavelengths into a visible RGB or grayscale image. This is a commonly used approach to detect heat sources and to render temperatures as a visible digital image.

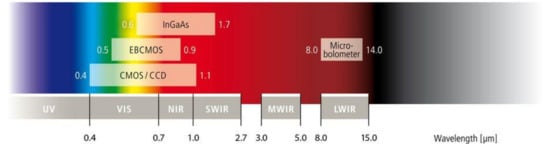

IR from 0.7 μm to 15 μm is invisible to the human eye. Different sensor materials can be used to detect corresponding wavelength ranges, as illustrated in Figure 2 [6]. A micro-bolometer is commonly used as the sensor in long-wave infrared (LWIR) TICs since it does not require low-temperature cooling and provides good contrast for human images both day and night, as described in Table 1.

Figure 2. Camera sensor type responding to different wavelengths.

Table 1. Spectrum wavelength range and properties.

| Spectrum | Wavelength Range | Property |
|---|---|---|
| Visible spectrum | 0.4–0.7 μm | Range visible to the human eye. |
| Near-infrared (NIR) | 0.7–1.0 μm | Corresponds to a band of high-atmosphere transmission, yielding the best imaging clarity and resolution. |
| Short-wave infrared (SWIR) | 1–3 μm | |
| Mid-wave infrared (MWIR) | 3–5 μm | Nearly 100% transmission, with the advantage of lower background noise. |
| Long-wave infrared (LWIR) | 8–15 μm | Nearly 100% transmission on the 9–12 μm band. Offers excellent visibility of most terrestrial objects. |
| Very long-wave infrared (VLWIR) | >15 μm | |
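The band boundaries in Table 1 can be expressed as a small lookup, sketched below. The function name, the choice to treat upper bounds as inclusive (so that 0.7 μm counts as visible), and returning `None` for the 5–8 μm range that the table does not list are all assumptions made for this illustration.

```python
from typing import Optional

# (band name, lower bound in um, upper bound in um), copied from Table 1.
BANDS = [
    ("Visible", 0.4, 0.7),
    ("NIR", 0.7, 1.0),
    ("SWIR", 1.0, 3.0),
    ("MWIR", 3.0, 5.0),
    ("LWIR", 8.0, 15.0),
]


def classify_band(wavelength_um: float) -> Optional[str]:
    """Return the Table 1 band for a wavelength, or None if the table does not cover it."""
    if wavelength_um > 15.0:
        return "VLWIR"
    for name, low, high in BANDS:
        # Assumption: a shared boundary belongs to the shorter-wavelength band.
        if low <= wavelength_um <= high:
            return name
    return None


print(classify_band(10.0))  # a wavelength inside the 8-15 um row
print(classify_band(6.0))   # falls in the gap the table leaves between MWIR and LWIR
```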

2.2. Different Sensors Used in Smoky Fire Scenes

A variety of instruments have been studied that can be used in fire and smoky situations, including visible camera, kinetic depth sensor, LIDAR, night vision, IR camera, radar, and sonar. It has been demonstrated that the most efficient instruments are thermal cameras, radar, and LIDAR.

The time-of-flight technology of LIDAR and radar has the best distance measurement accuracy, which makes it suitable for robot navigation [6,7,8,9].
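The time-of-flight principle itself is simple: the sensor measures how long a pulse takes to reach a target and return, and halves the round-trip path. The sketch below is an idealized illustration, assuming propagation at the vacuum speed of light and ignoring smoke attenuation and timing noise; the function name is hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(round_trip_s: float,
                   propagation_speed_m_s: float = SPEED_OF_LIGHT_M_S) -> float:
    """Distance to a target from the measured round-trip time of a pulse."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the target and back, so halve the total path.
    return propagation_speed_m_s * round_trip_s / 2.0


# A target 10 m away returns the pulse after roughly 66.7 nanoseconds.
round_trip = 2 * 10.0 / SPEED_OF_LIGHT_M_S
print(f"round trip: {round_trip * 1e9:.1f} ns -> {tof_distance_m(round_trip):.2f} m")
```

The nanosecond scale of the round trip is why timing resolution dominates the range accuracy of such sensors.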

Thermal cameras and radar are the best technologies for penetrating heavy smoke and are less affected by heavy smoke and high temperatures.

2.3. NFPA1801 Standard of Thermal Imagers

The National Fire Protection Association (NFPA) defines a consensus standard for the design and performance of thermal imaging cameras (TICs) to be used in smoky fire scenarios [10]. The NFPA defines the standard for TICs because the high-temperature and low-visibility conditions are dangerous for firefighters. It defines criteria for TICs including “interoperability”, “durability”, and “resolution” to make the TIC easy to operate in a smoky fire scene.

Interoperability allows firefighters to operate a TIC without hesitation during a rescue, to save both victims and themselves in a low-visibility scene.

Durability enables the TIC to operate in a high-flame or dusty environment without malfunction.

The most important specifications for TICs on the market for various applications include:

- High resolution (>320 × 240),

- High refresh rate (>25 Hz),

- Wide field of view,

- Low temperature sensitivity (0 °F–650 °F),

- Temperature bar: gray, yellow, orange, and red (Figure 3).

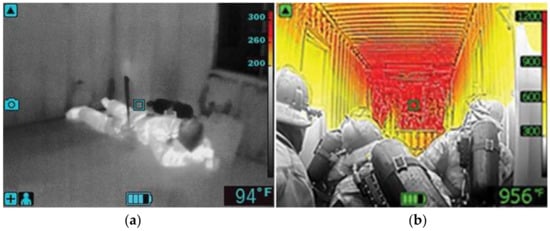

Figure 3. Different sensitivity image comparison (a) Medium sensitivity: 0 °F–300+ °F (b) Low Sensitivity: 0 °F–1200 °F (Source: TechValidate. TVID: B52-065-90D) [11].

Section 6.6.3.1.1 indicates the low sensitivity mode indicator as a basic operational format. The intention of this design is to show high-heat regions in color to enable firefighters to easily distinguish dangerous areas, and to present victims in gray for easy searching, as in Figure 3.

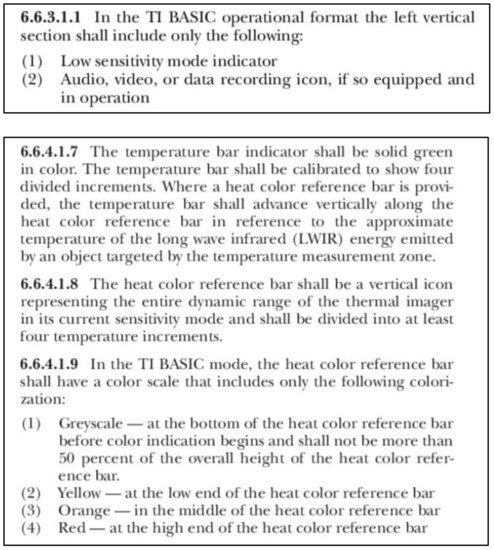

Section 6.6.4 defines the temperature in the color bar to have <50% temperature range in gray and higher temperatures in yellow, orange, and red, respectively, as in Figure 4. TICs that follow NFPA1801(2021) on the market for firefighting purposes commonly define the 25% temperature range in gray, as in Figure 5. In this case, the 25% range for gray will be 162.5 °F (72.5 °C), meaning that humans fall within the grayscale at the 105 gray level, as in Figure 4.

Figure 4. Definition of NFPA1801 for TIC sensitivity and temperature colorization bar [10].
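The arithmetic behind the gray portion of the color bar can be checked with a short sketch. It assumes the 0 °F–650 °F range and the 25% gray fraction quoted above; the function names are hypothetical, and because the entry does not say how the remaining range is divided among yellow, orange, and red, the sketch only distinguishes "gray" from "color".

```python
def fahrenheit_to_celsius(temp_f: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def gray_upper_limit_f(range_min_f: float, range_max_f: float,
                       gray_fraction: float) -> float:
    """Highest temperature still rendered in gray for a given display range."""
    return range_min_f + gray_fraction * (range_max_f - range_min_f)


def display_band(temp_f: float, range_min_f: float = 0.0,
                 range_max_f: float = 650.0, gray_fraction: float = 0.25) -> str:
    """Return 'gray' or 'color' for a temperature (color split is not modeled)."""
    limit_f = gray_upper_limit_f(range_min_f, range_max_f, gray_fraction)
    return "gray" if temp_f <= limit_f else "color"


limit_f = gray_upper_limit_f(0.0, 650.0, 0.25)
print(f"gray up to {limit_f} F = {fahrenheit_to_celsius(limit_f)} C")
print("body temperature (98.6 F):", display_band(98.6))
print("hot region (400 F):", display_band(400.0))
```

With these inputs the gray limit comes out at 162.5 °F (72.5 °C), matching the figure quoted in the text, and body temperature sits well inside the gray range.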

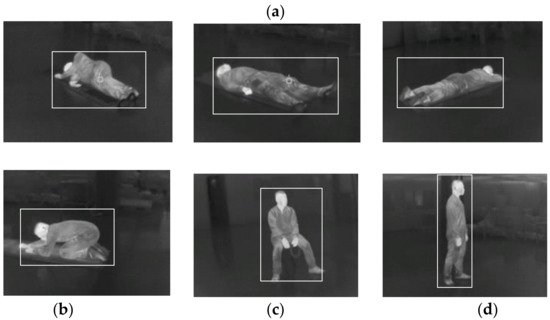

Figure 5. Fluke Ti300+ self-taken images including (a) side lying, lying upward, lying downward, (b) squatting, (c) sitting, and (d) standing postures.

NFPA code 1801: Standard on Thermal Imagers for the Fire Service, 2021 edition. In NFPA National Fire Codes Online. Retrieved from http://codesonline.nfpa.org.

2.4. Different Sensors Used in Smoky Fire Scenes

There is an increasing number of papers demonstrating the ability of firefighting robots with stereo infrared vision, radar, or LIDAR to generate fusion images or environmental measurements to identify and locate objects [8,9,12].

In low-visibility smoky scenes, robots can be used for environment mapping and indoor navigation. Among the studies on this topic, 3D infrared vision is consistently built into the system, where it is used for distance measurement and object detection.

There is related work using FLIR imaging that followed NFPA1801 with a CNN model to help firefighters to navigate in a fire scene [13]. It shows the capability of convolutional neural network models for detecting humans with a thermal imaging camera.

2.5. Convolutional Neural Network (CNN) Object Detection

The task of object detection requires the localization of the objects of interest with coordinates or a bounding box in the image frame.
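A bounding box is usually stored as corner coordinates, and the overlap between a predicted box and a reference box is commonly scored with intersection over union (IoU). IoU is not discussed in this entry; it is introduced here only as a widely used way to judge localization quality. The class and function names below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box in pixel coordinates (corner format)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes; 0.0 when they do not overlap."""
    inter_w = max(0.0, min(a.x_max, b.x_max) - max(a.x_min, b.x_min))
    inter_h = max(0.0, min(a.y_max, b.y_max) - max(a.y_min, b.y_min))
    intersection = inter_w * inter_h
    union = a.area() + b.area() - intersection
    return intersection / union if union > 0 else 0.0


predicted = Box(0, 0, 2, 2)
reference = Box(1, 1, 3, 3)
print(f"IoU = {iou(predicted, reference):.3f}")
```

A detection is typically counted as correct when its IoU with a reference box exceeds a chosen threshold, which varies between benchmarks.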

CNN models give more robust object detection results than traditional computer vision models [14]. They benefit from extracting features over the full image with sliding windows, and from combining multi-scale feature extraction with increasing network depth to capture complex features.

Successful CNN architectures have been proposed recently for object detection tasks, such as R-CNN [15], R-FCN [16], Fast R-CNN [17], Faster R-CNN, Mask R-CNN [18], and YOLO [19].

YOLOv4 has been shown to be a fast, real-time object detection technique with high accuracy, reaching an average precision (AP) of 43.5% on the MS COCO dataset at about 65 FPS (frames per second) on an NVIDIA Tesla V100 GPU [5].

There is also evidence of an LWIR sensor combined with a Faster R-CNN model achieving a mean average precision (mAP) of 87% in military surveillance [20]. For outdoor pedestrian detection, a region-CNN-based model has been proposed that achieves an mAP of 59.91% [21].
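For readers unfamiliar with the metric, the sketch below shows one common way to compute average precision for a single class and then average it across classes to obtain mAP. It is an illustration only: it assumes each detection has already been marked as a true or false positive, uses all-point interpolation of the precision-recall curve, and does not reproduce the exact protocols of the cited works or of MS COCO, which differ in matching thresholds and averaging. All names are hypothetical.

```python
from typing import List, Tuple


def average_precision(detections: List[Tuple[float, bool]],
                      num_ground_truth: int) -> float:
    """AP for one class from (confidence, is_true_positive) pairs."""
    if num_ground_truth <= 0:
        raise ValueError("num_ground_truth must be positive")
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)

    precisions: List[float] = []
    recalls: List[float] = []
    true_pos = false_pos = 0
    for _, is_true_positive in ranked:
        if is_true_positive:
            true_pos += 1
        else:
            false_pos += 1
        precisions.append(true_pos / (true_pos + false_pos))
        recalls.append(true_pos / num_ground_truth)

    # Make precision non-increasing from left to right (interpolation envelope).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    # Sum the area under the interpolated precision-recall curve.
    ap = 0.0
    previous_recall = 0.0
    for precision, recall in zip(precisions, recalls):
        ap += (recall - previous_recall) * precision
        previous_recall = recall
    return ap


def mean_average_precision(per_class_ap: List[float]) -> float:
    """mAP as the unweighted mean of per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)


# Toy example with invented detections: three predictions, two ground-truth objects.
toy = [(0.9, True), (0.8, False), (0.7, True)]
print(f"AP = {average_precision(toy, num_ground_truth=2):.3f}")
```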

This entry is adapted from the peer-reviewed paper 10.3390/s22145351
