

Hand gesture recognition plays a significant role in conveying diverse messages through hand gestures in the digital domain. Real-time hand gesture identification is now possible because of advances in both imaging technology and image-processing algorithms.

- gesture recognition

- feature extraction

- gesture classification

- sign language

- artificial intelligence

1. Types of Hand Gestures

Hand gestures are a kind of body language in which the position and shape of the palm center and the fingers communicate specific information. In general, gestures comprise both static and dynamic hand movements. Static hand gestures rely only on the shape of the hand to convey a message, whereas dynamic hand gestures consist of a series of hand movements and rely on that motion to convey meaning. Because gestures vary across cultures and individuals, different people may describe the same gesture differently. Real-time hand gesture detection is the ability to identify hand motions instantly, without noticeable delay. Real-time and non-real-time hand gesture recognition differ in processing speed, image-processing techniques, acceptable latency in delivering results, and recognition algorithms.
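To make the static/dynamic distinction concrete, here is a minimal sketch, assuming the hand centroid has already been tracked in every frame. It labels a gesture as dynamic when the centroid's total path length exceeds a threshold. The function names and the 20-pixel threshold are hypothetical illustrations, not values from the source.

```python
import math

# Hypothetical threshold (pixels): total centroid travel above this counts as motion.
MOTION_THRESHOLD_PX = 20.0


def path_length(centroids):
    """Total distance travelled by the hand centroid across consecutive frames."""
    return sum(math.dist(p, q) for p, q in zip(centroids, centroids[1:]))


def gesture_type(centroids, threshold=MOTION_THRESHOLD_PX):
    """Label a tracked gesture 'static' (shape only) or 'dynamic' (shape plus motion)."""
    return "dynamic" if path_length(centroids) > threshold else "static"


# Small jitter around one spot vs. a clear horizontal swipe.
print(gesture_type([(100, 100), (101, 100), (100, 101)]))  # static
print(gesture_type([(0, 0), (30, 0), (60, 0)]))            # dynamic
```

A real system would usually combine such a motion cue with shape features rather than rely on a single fixed threshold.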

2. Hand Gesture Recognition Technologies

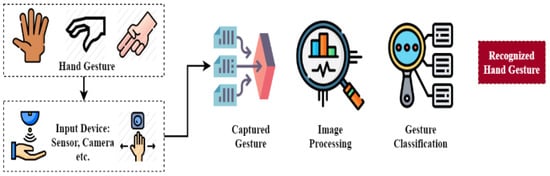

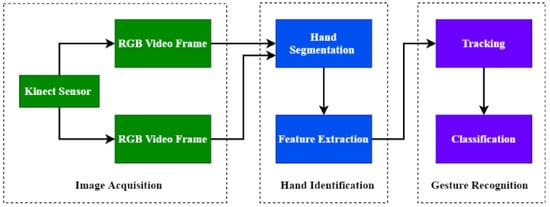

Research shows that hand gesture recognition technologies can be divided into three types: sensor-based, vision-based, and deep learning-based. Sensor-based technology, as its name suggests, uses sensors such as accelerometers and gyroscopes, whereas vision-based technology uses RGB cameras and infrared sensors to extract and identify features from datasets of hand movements. The general framework for hand gesture recognition and the standard framework for hand gesture recognition using Kinect are shown in Figure 5 and Figure 6, respectively.

Figure 5. General framework for hand gesture recognition.

Figure 6. Standard framework for hand gesture recognition using Kinect.

2.1. Sensor-Based Technology

Sensor-based hand gesture recognition algorithms use motion sensors that are either integrated into gloves or built into smart devices such as smartphones, which contain accelerometers and gyroscopes.

The main objective is to collect triaxial (three-axis) motion data and use it in the target application. For gesture recognition on a smart device equipped with a three-axis accelerometer and a gyroscope, Ref. [1] presents a continuous hand gesture (CHG) recognition technique that identifies hand movements continuously. A Samsung ATIV smartphone serves as the device that captures gesture motions, and other smart devices are controlled through the Samsung AllShare protocol. Because the recorded waveforms vary in amplitude and phase, machine learning techniques such as CNNs can be used to recognize them. Ref. [2] presented a feedforward neural network and similarity matching (FNN/SM) hand motion recognition technique for accelerometer-based, pen-type sensing devices. Hand motion acceleration data are captured by a triaxial accelerometer and preprocessed with a moving average filter. A segmentation algorithm then determines the start and end points of each basic motion on the fly. After feature extraction, the basic gestures are classified by an FNN model trained on basic gesture samples. The resulting sequence of basic gestures is then encoded using Johnson codes. Finally, the complex gesture is recognized by comparing the predicted sequence of basic gestures with template sequences.
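The FNN/SM pipeline described above can be sketched roughly as follows. This is a simplified illustration, not the implementation of Ref. [2]: it assumes gravity has already been removed from the accelerometer data, uses a hypothetical magnitude threshold for segmentation, skips the FNN entirely (basic gestures are given directly as integer indices), and matches Johnson-coded sequences against hypothetical templates by Hamming distance.

```python
import math


def moving_average(samples, window=3):
    """Causal moving-average filter over (ax, ay, az) accelerometer samples."""
    smoothed = []
    for i in range(len(samples)):
        chunk = samples[max(0, i - window + 1): i + 1]
        smoothed.append(tuple(sum(axis) / len(chunk) for axis in zip(*chunk)))
    return smoothed


def segment(samples, threshold=0.5):
    """Return (start, end) indices of runs whose acceleration magnitude exceeds
    a hypothetical threshold; assumes gravity has already been removed."""
    segments, start = [], None
    for i, (ax, ay, az) in enumerate(samples):
        active = math.sqrt(ax * ax + ay * ay + az * az) > threshold
        if active and start is None:
            start = i
        elif not active and start is not None:
            segments.append((start, i - 1))
            start = None
    if start is not None:
        segments.append((start, len(samples) - 1))
    return segments


def johnson_code(index, n_bits=3):
    """Johnson (twisted-ring) code word for an index in [0, 2 * n_bits)."""
    if not 0 <= index < 2 * n_bits:
        raise ValueError("index out of range for this code length")
    if index <= n_bits:
        return "0" * (n_bits - index) + "1" * index
    zeros = index - n_bits
    return "1" * (n_bits - zeros) + "0" * zeros


def encode(sequence, n_bits=3):
    """Concatenate the Johnson codes of a sequence of basic-gesture indices."""
    return "".join(johnson_code(i, n_bits) for i in sequence)


def hamming(a, b):
    """Bitwise mismatch count; codes of different length never match."""
    return sum(x != y for x, y in zip(a, b)) if len(a) == len(b) else math.inf


def match_template(sequence, templates, n_bits=3):
    """Name of the template whose encoded sequence is closest to the input."""
    code = encode(sequence, n_bits)
    return min(templates, key=lambda name: hamming(code, encode(templates[name], n_bits)))


templates = {"circle": [0, 2, 5], "zigzag": [1, 3, 4]}  # hypothetical templates
print([johnson_code(i) for i in range(6)])  # ['000', '001', '011', '111', '110', '100']
print(match_template([0, 2, 5], templates))  # circle
```

Adjacent Johnson code words differ in exactly one bit, which is one plausible reason to pair them with a bitwise similarity measure.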

2.1.1. Techniques for Recognizing Hand Gestures Using Impulse Radio Signals

The transmitter (Tx) generates an impulse radio (IR) signal and sends it through its antenna. The receiver (Rx), which consists of an antenna, amplifiers, a low-pass filter, and a high-speed oscilloscope, captures the waveform reflected from the hand. Because the amplitude and phase of the reflected waveforms vary with hand movement, these motion patterns can be used as input to machine learning methods such as CNNs. A figure in the source paper demonstrates that this technique works.
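One simple way to turn received waveforms into amplitude and phase features is sketched below. It is an assumption for illustration, not the processing used in the source: each received frame is treated as a real-valued sample vector, and a single DFT bin `k` (a hypothetical choice) yields one (amplitude, phase) pair per frame. A sequence of such pairs over time is the kind of motion signature that could then be fed to a classifier such as a CNN.

```python
import cmath
import math


def bin_amplitude_phase(frame, k):
    """Amplitude and phase of DFT bin k of one received (real-valued) frame.

    For x[n] = A*cos(2*pi*k*n/N + phi) with 0 < k < N/2, this returns (A, phi).
    """
    n = len(frame)
    x_k = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(frame))
    return 2 * abs(x_k) / n, cmath.phase(x_k)


def feature_sequence(frames, k):
    """One (amplitude, phase) pair per received frame: a slow-time feature track."""
    return [bin_amplitude_phase(frame, k) for frame in frames]


# Synthetic frames whose phase drifts as the hand moves (illustrative data only).
N, K = 64, 5
frames = [[2.0 * math.cos(2 * math.pi * K * i / N + 0.1 * t) for i in range(N)] for t in range(4)]
for amp, phase in feature_sequence(frames, K):
    print(round(amp, 3), round(phase, 3))
```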

2.1.2. Ultrasonic Hand Gesture Recognition Techniques

In this technique, loudspeakers and microphones serve as ultrasonic input/output devices. The technique exploits the Doppler shift of ultrasonic waves reflected from a moving human body. During a gesture, the system continuously samples the ultrasound, producing a time series of frequency variations that captures the distinctive characteristics of each gesture. Subsequent gestures are then classified using a combination of basic patterns and supervised machine learning methods.
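The Doppler relation behind this technique can be illustrated with a short sketch. It uses the standard two-way approximation for a reflector moving at speed v much smaller than the speed of sound c, where the shift is about 2·v·f0/c. The 20 kHz carrier, the speed of sound value, and the 5 Hz dead band for labeling motion direction are hypothetical choices, not parameters from the source.

```python
SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 degrees Celsius


def doppler_shift(velocity, carrier_hz, c=SPEED_OF_SOUND):
    """Approximate two-way Doppler shift (Hz) of a tone reflected by a hand moving
    at `velocity` m/s (positive = toward the device); valid for |velocity| << c."""
    return 2.0 * velocity * carrier_hz / c


def velocity_from_shift(shift_hz, carrier_hz, c=SPEED_OF_SOUND):
    """Invert the approximation to estimate hand velocity from a measured shift."""
    return shift_hz * c / (2.0 * carrier_hz)


def direction_profile(shifts_hz, dead_band_hz=5.0):
    """Map a time series of shifts to coarse motion labels (hypothetical dead band)."""
    return ["toward" if s > dead_band_hz else "away" if s < -dead_band_hz else "still"
            for s in shifts_hz]


carrier = 20_000.0  # hypothetical near-inaudible tone played by the loudspeaker
print(round(doppler_shift(0.5, carrier), 1))  # 58.3
print(direction_profile([40.0, 2.0, -35.0]))   # ['toward', 'still', 'away']
```

A time series of labels or raw shifts like this is the kind of signature a supervised classifier would consume.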

2.2. Vision-Based Technology

The three essential phases of a vision-based method are image acquisition, image segmentation, and finally image recognition. Many researchers have built real-time hand gesture detection systems on these three stages. Dynamic hand gesture recognition identifies a moving hand across a series of motions, whereas static hand gesture recognition identifies the posture of the hand. Hand gestures can be categorized visually using either 3D model-based methods or appearance-based methods. A 3D hand gesture model provides a spatial representation of the human hand in three dimensions over time. Appearance-based hand gesture representations fall into four kinds: color-based, silhouette-based, deformable template-based, and motion-based models.
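As a toy illustration of an appearance-based silhouette representation, the sketch below computes a few shape descriptors from a binary hand mask (1 = hand pixel). The choice of descriptors (area, bounding-box aspect ratio, extent) is an assumption for illustration; the source does not specify which silhouette features are used.

```python
def silhouette_features(mask):
    """Simple shape descriptors of a binary hand silhouette (1 = hand pixel)."""
    coords = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not coords:
        return None
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    width = max(xs) - min(xs) + 1
    height = max(ys) - min(ys) + 1
    area = len(coords)
    return {
        "area": area,                       # number of hand pixels
        "aspect_ratio": width / height,     # bounding-box width over height
        "extent": area / (width * height),  # fraction of the box the hand fills
    }


print(silhouette_features([[1, 0], [1, 1]]))
# {'area': 3, 'aspect_ratio': 1.0, 'extent': 0.75}
```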

Vision-based gesture recognition techniques involve three basic stages: detection, tracking, and recognition. Ref. [3] briefly explains the many sub-techniques employed at each stage. The main cues used in the detection stage are color, shape, pixel values, 3D models, and motion. Correlation-based and contour-based tracking are two kinds of template-based tracking; other tracking techniques include optimal estimation, particle filtering, and CamShift. The final stage, recognition, identifies static and dynamic hand gestures using a range of algorithms and machine learning techniques, such as time delay neural networks, hidden Markov models, dynamic time warping, and finite state machines.
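Of the recognition techniques listed above, dynamic time warping is the simplest to illustrate. The sketch below compares a tracked hand trajectory with stored templates and returns the nearest one; because DTW aligns sequences non-linearly in time, a gesture performed faster or slower than its template can still match. The template gestures and their coordinates are hypothetical.

```python
import math


def dtw_distance(a, b):
    """Dynamic time warping distance between two trajectories of (x, y) points."""
    n, m = len(a), len(b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            # Extend the cheapest of: insertion, deletion, or match.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def classify(trajectory, templates):
    """Nearest-template label under DTW distance."""
    return min(templates, key=lambda label: dtw_distance(trajectory, templates[label]))


templates = {  # hypothetical gesture templates
    "swipe_right": [(0, 0), (1, 0), (2, 0)],
    "swipe_up": [(0, 0), (0, 1), (0, 2)],
}
print(classify([(0, 0), (0.9, 0.1), (2.1, 0)], templates))  # swipe_right
```

The quadratic cost table is fine for short gestures; longer sequences typically restrict the warping path to a band.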

Color detection is most often used to detect the skin color of the hand. For reliable hand segmentation, the image is first converted to the HSV or YCbCr color space. The image is then binarized using skin color threshold values, and noise is reduced with image-processing techniques such as morphological processing. Various feature extraction and recognition techniques are then applied to the segmented hand. For example, Ref. [4] performed robust hand segmentation using the YCbCr color space and K-means clustering.
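The color-based segmentation steps above can be sketched in pure Python as follows: convert each RGB pixel to YCbCr (the full-range BT.601 equations used by JPEG), binarize with a fixed skin range on Cb and Cr, and clean the mask with a 3x3 morphological opening. The Cb range 77 to 127 and Cr range 133 to 173 are commonly cited skin thresholds, used here as an assumption; this fixed-threshold approach is a swapped-in simplification and not the K-means method of Ref. [4].

```python
# Commonly cited skin ranges in YCbCr (an assumption, not values from Ref. [4]).
CB_RANGE = (77, 127)
CR_RANGE = (133, 173)


def rgb_to_ycbcr(r, g, b):
    """Full-range ITU-R BT.601 conversion (as used in JPEG)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def skin_mask(image):
    """Binarize an image (rows of (r, g, b) tuples): 1 where Cb and Cr fall in range."""
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            _, cb, cr = rgb_to_ycbcr(r, g, b)
            is_skin = CB_RANGE[0] <= cb <= CB_RANGE[1] and CR_RANGE[0] <= cr <= CR_RANGE[1]
            mask_row.append(1 if is_skin else 0)
        mask.append(mask_row)
    return mask


def _morph(mask, erode):
    """3x3 erosion (all neighbors set) or dilation (any neighbor set); outside = 0."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            window = [mask[i + di][j + dj] if 0 <= i + di < h and 0 <= j + dj < w else 0
                      for di in (-1, 0, 1) for dj in (-1, 0, 1)]
            out[i][j] = int(all(window)) if erode else int(any(window))
    return out


def opening(mask):
    """Erosion followed by dilation: removes isolated noise pixels."""
    return _morph(_morph(mask, erode=True), erode=False)


print(skin_mask([[(224, 172, 140), (255, 255, 255), (0, 0, 255)]]))  # [[1, 0, 0]]
```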

Tracking is essential for hand gesture recognition because it locates and follows the hand in real time. Many tracking methods have been proposed, including one based on contour moments. To identify the hand center against complex backgrounds, Ref. [5] used a transformation together with contour detection, an approach that can also be applied to hand tracking. The fingertip positions used to detect hand motions were then computed using a convex hull. The three-coin algorithm for computing a convex hull was introduced by Sklansky in 1972.
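The hand-center and convex-hull steps can be sketched as follows, under several stated assumptions. The hand center is taken from raw image moments of a binary mask (m10/m00, m01/m00) rather than the unspecified transformation of Ref. [5]. The hull uses Andrew's monotone chain algorithm, swapped in here for its simplicity; it is not Sklansky's three-coin algorithm. The fingertip rule (hull vertices far from the center) and its `min_ratio` parameter are hypothetical.

```python
import math


def centroid_from_moments(mask):
    """Hand center from raw image moments: (m10 / m00, m01 / m00)."""
    m00 = m10 = m01 = 0
    for y, row in enumerate(mask):
        for x, v in enumerate(row):
            if v:
                m00 += 1
                m10 += x
                m01 += y
    return None if m00 == 0 else (m10 / m00, m01 / m00)


def _cross(o, a, b):
    """z-component of (a - o) x (b - o); positive means a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Andrew's monotone chain; hull vertices in counter-clockwise order (y-up frame)."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and _cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and _cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def fingertip_candidates(hull, center, min_ratio=0.8):
    """Hull vertices at least min_ratio times the farthest hull distance from the
    center; min_ratio is a hypothetical tuning parameter."""
    dists = [math.dist(p, center) for p in hull]
    far = max(dists)
    return [p for p, d in zip(hull, dists) if d >= min_ratio * far]


hull = convex_hull([(0, 0), (10, 0), (5, 20), (5, 5), (4, 6)])
print(hull)                                # [(0, 0), (10, 0), (5, 20)]
print(fingertip_candidates(hull, (5, 5)))  # [(5, 20)]
```

In practice the hull would be computed from the segmented hand's contour points, and convexity defects between hull vertices are often used to separate adjacent fingers.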

This entry is adapted from the peer-reviewed paper 10.3390/jimaging8060153
