Information security and cybersecurity management play a key role in modern enterprises. There is a plethora of standards, frameworks, and tools, with ISO 27000 and the NIST Cybersecurity Framework being two relevant families of international Information Security Management Standards (ISMSs). Globally, these standards are implemented by dedicated tools that collect and further analyze the results of the information security auditing carried out in an enterprise. The overall goal of the auditing is to evaluate and mitigate information security risk. The risk assessment is grounded in auditing processes, which examine and assess a list of predefined controls covering a wide variety of cybersecurity and information security subjects. For each control, a checklist of actions is applied and a set of corrective measures is proposed, in order to mitigate the flaws and to increase the level of compliance with the standard being used. The auditing process can apply different ISMSs in the same time frame. However, as these processes are time-consuming, involve on-site interventions, and require specialized consulting teams, enterprises usually adopt a single ISMS and its existing tools and frameworks. This strategy reduces the flexibility and diversity of the auditing process and, consequently, of the assessment results of the audited enterprise.

1. Proposed Architecture

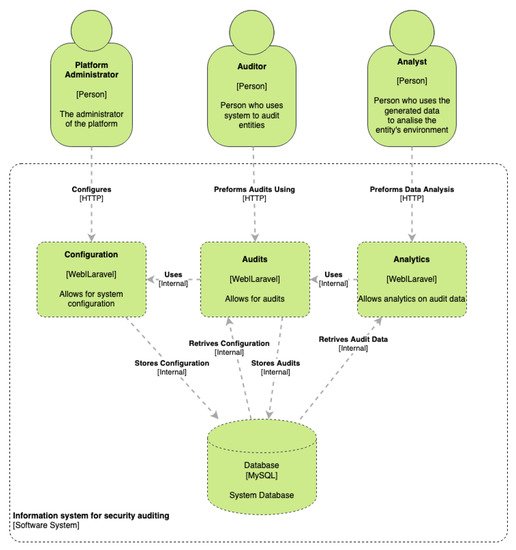

Figure 1 depicts the auditing process, namely the collection of evidence by the auditing team, the automatic processing of auditing reports, and the analysis of the results. The web application is versatile and customizable, as it receives a predefined checklist of actions and a list of corrections to be applied. The whole auditing process is stored in a database, starting with the auditing and intervention records, going through the intermediate updates and the delivery of reports, and finishing with the analysis of the global results. The application achieved three main goals: (1) to harmonize the auditing process; (2) to automatically generate auditing and intervention reports; (3) to process and analyze the aggregated results at different stages of the auditing process.

Figure 1. The C4 Level 2 diagram of the application architecture.

The application is organized into three layers: web access through a web browser; an application layer developed in Laravel; and a data layer implemented in a MySQL database. Three major profiles were defined, according to the roles in the auditing process, namely audited enterprise, auditing team, and project manager. In each auditing process, the auditor can carry out multiple interventions. Each intervention is essentially the auditor’s annotation of the results obtained at each visit to the enterprise. Mitigation measures associated with the controls that did not pass are also registered in the application. The report summarizes all the interventions made, the controls that passed and failed, and the mitigation actions to be applied to each control to raise its compliance level. This report can be generated whenever needed, which allows continuous monitoring of the auditing process. Nevertheless, the most common approach is to generate the report at the end of each intervention (when the scenario supports it) and at the final stage of the auditing process, to visualize the final results.
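The report described above can be illustrated with a minimal sketch. It is written in Python for brevity and is not the project's Laravel code; the class names, field names, and the rule that a later intervention overrides an earlier result for the same control are all assumptions made for illustration, and the control identifiers are only sample values.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ControlResult:
    """Outcome recorded for one control during a visit (hypothetical shape)."""
    control_id: str
    passed: bool
    mitigations: List[str] = field(default_factory=list)


@dataclass
class Intervention:
    """One visit to the enterprise; visit_date is an ISO 8601 date string."""
    visit_date: str
    results: List[ControlResult]


def build_report(interventions: List[Intervention]) -> Dict:
    """Summarize the latest recorded state of every control.

    Assumption: when a control is assessed in several interventions,
    the most recent assessment wins.
    """
    latest: Dict[str, ControlResult] = {}
    # ISO dates sort chronologically when compared as strings.
    for intervention in sorted(interventions, key=lambda i: i.visit_date):
        for result in intervention.results:
            latest[result.control_id] = result

    passed = sorted(cid for cid, res in latest.items() if res.passed)
    failed = {cid: res.mitigations
              for cid, res in sorted(latest.items()) if not res.passed}
    return {
        "interventions": len(interventions),
        "passed": passed,
        "failed": failed,  # failed control -> mitigation actions to apply
    }
```

Because the report is computed from the stored interventions rather than stored itself, it can be regenerated at any point in the auditing process, which matches the continuous-monitoring use described in the text.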

The project was developed with two main purposes in mind. The first was to make the collected data uniform, which is vital for the aim of the project. The second was to allow the automatic generation of reports during the process and, at the end, to assess the outcomes. The information system (IS) was implemented in the Laravel framework, since the aim was to have a web application that could be easily accessed by the auditing teams. Before starting a software implementation, it is important to ensure that all necessary prerequisites have been met, or have at least progressed far enough to provide a solid foundation for the requirements. If the prerequisites are not satisfied, the software is likely to be unsatisfactory, even if it is complete. Therefore, the first phase of development was extremely important, as it made it possible to fully understand the users’ needs and the level of data that needed to be collected.

The application has three architectural and conceptual layers: a data layer, where the data model is defined; a logic layer, which defines the business processes that are implemented; and a view layer, which implements the data reporting functionalities for the user. The Model–View–Controller (MVC) design pattern integrates well with this architecture and is one of the most used in software engineering. Originating in the late 1970s [1] in the then-revolutionary Smalltalk programming language, it is still being researched today [2]. This pattern separates the responsibilities of the three architectural layers, keeping each component easy to evolve, isolated, independent, and highly reusable.
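The separation of responsibilities in MVC can be shown with a small, framework-free sketch. It is written in Python rather than Laravel, and every class and method name here is hypothetical; it only illustrates the pattern, not the structure of the actual application.

```python
class ControlModel:
    """Model: owns the data and knows nothing about presentation."""

    def __init__(self):
        self._status = {}

    def set_status(self, control_id, passed):
        self._status[control_id] = passed

    def all_statuses(self):
        # Return a copy so callers cannot mutate the model's state directly.
        return dict(self._status)


class TextView:
    """View: turns model data into output and holds no business logic."""

    def render(self, statuses):
        return "\n".join(
            f"{control_id}: {'pass' if ok else 'fail'}"
            for control_id, ok in sorted(statuses.items())
        )


class AuditController:
    """Controller: maps user input to model updates and selects the view."""

    def __init__(self, model, view):
        self.model = model
        self.view = view

    def record(self, control_id, passed):
        self.model.set_status(control_id, passed)
        return self.view.render(self.model.all_statuses())
```

Swapping `TextView` for a view that renders HTML or a PDF would not require touching the model or the controller, which is the reuse and isolation benefit the pattern is valued for.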

There are several options in today’s web development to implement this kind of architecture. Some of them show great promise and interesting features, but at the cost of a great deal of volatility in their ecosystems. PHP is among the most-used web languages, it is very stable, and it has a few well-established frameworks that fit these architectural needs. PHP’s Laravel framework was chosen for this project because of its strong relationship with the MVC design pattern and the maturity of its codebase [3][4].

3. Database Model

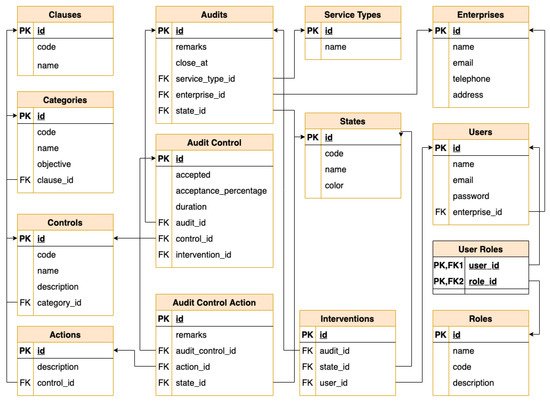

The data model design included the ISO 27001:2013 standard controls, lists of actions, and mitigation task lists, but without making the model explicitly dependent on them. With this strategy, any other auditing standard can be loaded into the database without changes to the data model. The data model entities are represented in the database schema depicted in Figure 4. As can be seen, there is only one direct dependency between the ISO 27001:2013 parts of the data model and the controls’ identification. This dependency can safely be assumed to be present in any standard; that is, the entities Clauses, Categories, and Controls (upper left of Figure 4) are parts of the standards and are mapped to corresponding tables in the data model.
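A standard-agnostic schema of this kind might look like the following sketch. It uses Python with an in-memory SQLite database as a stand-in for MySQL. The table and column names, the separate `standards` table, and the mapping of NIST CSF functions, categories, and subcategories onto clauses, categories, and controls are all assumptions for illustration; the actual schema is the one in Figure 4.

```python
import sqlite3

# Hypothetical schema: nothing in it is specific to ISO 27001:2013.
# SQLite does not enforce REFERENCES unless foreign keys are enabled;
# they are kept here to document the relationships.
SCHEMA = """
CREATE TABLE standards  (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE clauses    (id INTEGER PRIMARY KEY,
                         standard_id INTEGER NOT NULL REFERENCES standards(id),
                         code TEXT NOT NULL);
CREATE TABLE categories (id INTEGER PRIMARY KEY,
                         clause_id INTEGER NOT NULL REFERENCES clauses(id),
                         code TEXT NOT NULL);
CREATE TABLE controls   (id INTEGER PRIMARY KEY,
                         category_id INTEGER NOT NULL REFERENCES categories(id),
                         code TEXT NOT NULL);
CREATE TABLE actions          (id INTEGER PRIMARY KEY,
                               control_id INTEGER NOT NULL REFERENCES controls(id),
                               description TEXT NOT NULL);
CREATE TABLE mitigation_tasks (id INTEGER PRIMARY KEY,
                               control_id INTEGER NOT NULL REFERENCES controls(id),
                               description TEXT NOT NULL);
"""


def open_db():
    """Create an empty in-memory database with the sketch schema."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def _get_or_create(conn, table, where):
    """Return the id of the row matching `where`, inserting it if absent.

    `table` and the keys of `where` are trusted constants from this module.
    """
    cols = list(where)
    values = tuple(where.values())
    condition = " AND ".join(f"{col} = ?" for col in cols)
    row = conn.execute(
        f"SELECT id FROM {table} WHERE {condition}", values
    ).fetchone()
    if row:
        return row[0]
    placeholders = ", ".join("?" for _ in cols)
    cursor = conn.execute(
        f"INSERT INTO {table} ({', '.join(cols)}) VALUES ({placeholders})",
        values,
    )
    return cursor.lastrowid


def add_control(conn, standard, clause, category, control):
    """Insert one control, creating its parent rows when needed."""
    std_id = _get_or_create(conn, "standards", {"name": standard})
    clause_id = _get_or_create(
        conn, "clauses", {"standard_id": std_id, "code": clause})
    category_id = _get_or_create(
        conn, "categories", {"clause_id": clause_id, "code": category})
    return _get_or_create(
        conn, "controls", {"category_id": category_id, "code": control})
```

Loading a second standard is then only a matter of inserting rows, for example `add_control(conn, "NIST CSF", "PR", "PR.AC", "PR.AC-1")`, with no change to the tables themselves.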

The uniformization of the data collection process was initially performed by a “true/false” classification of the controls, which tends to make auditors perform rounded (and, in some way, binary) calculations during the control’s analysis. A better uniformization was achieved by applying the “true/false” classification to specific actions (i.e., some specific element to be checked) and grouping a set of actions for each control. This change made the calculation of the acceptance criteria more dynamic, as it is inferred from the actions’ checklist instead of the control itself. The list of mitigation tasks related to each control, which should be applied to the controls that did not fully pass, is provided to the auditors. The application associates this list with the auditing, which makes it possible to report the mitigation tasks to the management board for further implementation, which may increase the compliance level of the corresponding control.
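The move from a binary verdict per control to a verdict derived from its actions can be sketched as follows. The text does not give the exact acceptance formula, so the ratio of passed actions used here is an assumption, as are the function names and the default threshold.

```python
def control_compliance(action_results):
    """Return the fraction of checklist actions that passed (0.0 to 1.0).

    `action_results` maps an action label to True (passed) or False (failed).
    Assumption: every action carries the same weight.
    """
    if not action_results:
        raise ValueError("a control needs at least one action")
    passed = sum(1 for ok in action_results.values() if ok)
    return passed / len(action_results)


def needs_mitigation(action_results, threshold=1.0):
    """Flag a control whose compliance falls below the threshold.

    The default of 1.0 mirrors the text: mitigation tasks apply to
    controls that did not fully pass.
    """
    return control_compliance(action_results) < threshold
```

With four actions of which three pass, the control scores 0.75 instead of a flat "false", so the auditor's judgment is replaced by a value that follows directly from the checklist.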

The database mimics the overall concepts of an information security standard, namely its associated controls, actions’ checklists, and mitigation tasks’ list. The information system developed organizes the inputs by categories; each one is composed of controls, and each control has clauses. These entities represent the data template that will be presented to the auditors for a specific intervention.

The platform was developed to deliver a customizable way to import the data entities previously described. These automation and parameterization features allow the automatic import and ingestion of these data entities from well-known structured formats, such as CSV. This format is easily adjusted to the checklist used by the information security standard, and it can be imported automatically and applied in different scenarios. Therefore, the platform can be set up to support different types of standards that follow the same correlation between controls and actions.
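A CSV import of this kind can be sketched with Python's standard `csv` module. The column names `control` and `action` are hypothetical, since the text does not specify the file layout; the only structure assumed is the correlation between controls and actions that the text describes.

```python
import csv
import io
from collections import defaultdict

REQUIRED_COLUMNS = {"control", "action"}  # hypothetical column names


def load_checklist(csv_text):
    """Parse CSV text into a mapping of control id -> list of actions."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = REQUIRED_COLUMNS - set(reader.fieldnames or [])
    if missing:
        raise ValueError(f"missing columns: {sorted(missing)}")

    checklist = defaultdict(list)
    for row in reader:
        # Each row contributes one action to the control it names.
        checklist[row["control"].strip()].append(row["action"].strip())
    return dict(checklist)
```

Because the loader depends only on the control-to-action relationship, a file prepared for a different standard can be ingested in the same way as long as it uses the same two columns.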

Besides the standard entities imported from the CSV file, the data model incorporates other entities and their respective relationships, which are needed to store the auditing activity. As an example, the auditing interventions collect the controls validated by the auditor, which brings an additional management tool to evaluate and control the auditing as a whole.

This entry is adapted from the peer-reviewed paper 10.3390/app12094102