Distributed edge intelligence is a disruptive research area that enables the execution of machine learning and deep learning (ML/DL) algorithms close to where data are generated. Since edge devices are more limited and heterogeneous than typical cloud devices, many hindrances have to be overcome to fully extract the potential benefits of such an approach (such as data-in-motion analytics).

- machine learning

- artificial intelligence

- distributed

- edge intelligence

- fog intelligence

- Internet of Things

1. Introduction

- High latency [8]: offloading intelligence tasks to the edge enables achievement of faster inference, decreasing the inherent delay in data transmission through the network backbone;

- Security and privacy issues [9][10]: it is possible to train and infer on sensitive data fully at the edge, preventing their risky propagation throughout the network, where they are susceptible to attacks. Moreover, edge intelligence can derive non-sensitive information that could then be submitted to the cloud without further processing;

- The need for continuous internet connection: in locations where connectivity is poor or intermittent, the ML/DL could still be carried out;

- Bandwidth degradation: edge computing can perform part of processing tasks on raw data and transmit the produced data to the cloud (filtered/aggregated/pre-processed), thus saving network bandwidth. Transmitting large amounts of data to the cloud burdens the network and impacts the overall Quality of Service (QoS) [11];

- Power waste [12]: unnecessary raw data being transmitted through the internet demands power, decreasing energy efficiency on a large scale.

2. Related Work

|

Scope |

|||

|---|---|---|---|

|

Paper |

Challenges |

Group of Techniques |

Different Application Domains |

|

Al-Rakhami et al. [13] |

0/6 |

2/8 |

1/6 |

|

Wang et al. [14] |

1/6 |

4/8 |

4/6 |

|

Verbraeken et al. [16] |

1/6 |

0/8 |

0/6 |

|

Zhou et al. [15] |

2/6 |

4/8 |

0/6 |

|

Dianlei Xu et al. [10] |

6/6 |

3/8 |

0/6 |

|

The researchers' work |

6/6 |

8/8 |

6/6 |

3. Research Methodology

4. Answering the RQs

4.1. RQ1—Research Challenges in Edge Intelligence (EI)

|

Challenges |

|

|---|---|

|

CH1 |

Running ML/DL on devices with limited resources |

|

CH2 |

Ensuring energy efficiency without compromising the accuracy |

|

CH3 |

Communication efficiency |

|

CH4 |

Ensuring data privacy and security |

|

CH5 |

Handling failure in edge devices |

|

CH6 |

Heterogeneity and low quality of data |

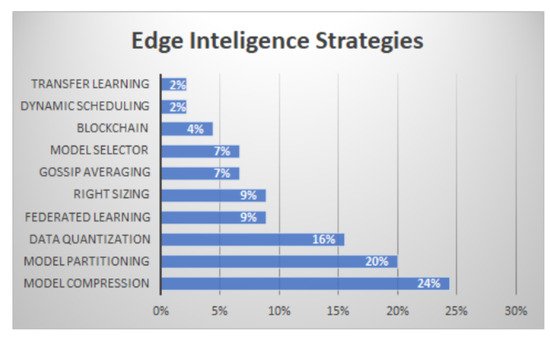

4.2. RQ2—Techniques and Strategies

4.3. RQ3—Frameworks for Edge Intelligence

|

Framework |

Groups of Techniques or Strategies |

Comments |

|---|---|---|

|

Neurosurgeon [73] |

Model Partitioning |

Lightweight scheduler to automatically partition DNN computation between edge devices and cloud at the granularity of NN layers |

|

JointDNN [74] |

Model Partitioning |

JointDNN provides an energy- and performance-efficient method of querying some layers on the mobile device and some layers on the cloud server. |

|

H. Li et al. [32] |

Model Partitioning |

They divide the NN layers and deploy the part with the lower ones (closer to the input) into edge servers and the part with higher layers (closer to the output) into the cloud for offloading processing. They also propose an offline and an online algorithm that schedules tasks in Edge servers. |

|

Musical chair [75] |

Model Partitioning |

Musical Chair aims at alleviating the compute cost and overcoming the resource barrier by distributing their computation: data parallelism and model parallelism. |

|

AAIoT [76] |

Model Partitioning |

Accurate segmenting NNs under multi-layer IoT architectures |

|

MobileNet [43] |

Model Compression Model Selector |

Presented by Google Inc., the two hyperparameters introduced allow the model builder to choose the right sized model for the specific application. |

|

Squeezenet |

Model Compression |

It is a reduced DNN that achieves AlexNet-level accuracy with 50 times fewer parameters |

|

Tiny-YOLO |

Model Compression |

Tiny Yolo is a very lite NN and is hence suitable for running on edge devices. It has an accuracy that is comparable to the standard AlexNet for small class numbers but is much faster. |

|

BranchyNet |

Right sizing |

Open source DNN training framework that supports the early-exit mechanism. |

|

TeamNet [77] |

Model Compression Transfer Learning |

TeamNet trains shallower models using the similar but downsized architecture of a given SOTA (state of the art) deep model. The master node compares its uncertainty with the worker’s and selects the one with the least uncertainty as to the final result. |

|

OpenEI [43] |

Model Compression Data Quantization Model Selector |

The algorithms are optimized by compressing the size of the model, quantizing the weight. The model selector will choose the most suitable model based on the developer’s requirement (the default is accuracy) and the current computing resource. |

|

TensorFlow Lite [78] |

Data Quantization |

TensorFlow’s lightweight solution, which is designed for mobile and edge devices. It leverages many optimization techniques, including quantized kernels, to reduce the latency. |

|

QNNPACK (Quantized Neural Networks PACKage) [79] |

Data Quantization |

Developed by Facebook, is a mobile-optimized library for high-performance NN inference. It provides an implementation of common NN operators on quantized 8-bit tensors. |

|

ProtoNN [80] |

Model Compression |

Inspired by k-Nearest Neighbor (KNN) and could be deployed on the edges with limited storage and computational power. |

|

EMI-RNN [81] |

Right Sizing |

It requires 72 times less computation than standard Long Short term Memory Networks (LSTM) and improves its accuracy by 1%. |

|

CoreML [82] |

Model Compression Data Quantization |

Published by Apple, it is a deep learning package optimized for on-device performance to minimize memory footprint and power consumption. Users are allowed to integrate the trained machine learning model into Apple products, such as Siri, Camera, and QuickType. |

|

DroNet [34] |

Model Compression Data Quantization |

The DroNet topology was inspired by residual networks and was reduced in size to minimize the bare image processing time (inference). The numerical representation of weights and activations reduces from the native one, 32-bit floating-point (Float32), down to a 16-bit fixed point one (Fixed16). |

|

Stratum [83] |

Model Selector Dynamic Scheduling |

Stratum can select the best model by evaluating a series of user-built models. A resource monitoring framework within Stratum keeps track of resource utilization and is responsible for triggering actions to elastically scale resources and migrate tasks, as needed, to meet the ML workflow’s Quality of Services (QoS). ML modules can be placed on the edge of the Cloud layer, depending on user requirements and capacity analysis. |

|

Efficient distributed deep learning (EDDL) [54] |

Model Compression Model Partitioning Right-Sizing |

A systematic and structured scheme based on balanced incomplete block design (BIBD) used in situations where the dataflows in DNNs are sparse. Vertical and horizontal model partition and grouped convolution techniques are used to reduce computation and memory. To speed up the inference, BranchyNet is utilized. |

|

In-Edge AI [5] |

Federated Learning |

Utilizes the collaboration among devices and edge nodes to exchange the learning parameters for better training and inference of the models. |

|

Edgence [84] |

Blockchain |

Edgence (EDGe + intelligENCE) is proposed to serve as a blockchain-enabled edge-computing platform to intelligently manage massive decentralized applications in IoT use cases. |

|

FederatedAveraging (FedAvg) [85] |

Federated Learning |

Combines local stochastic gradient descent (SGD) on each client with a server that performs model averaging. |

|

SSGD [86] |

Federated Learning |

System that enables multiple parties to jointly learn an accurate neural network model for a given objective without sharing their input datasets. |

|

BlockFL [87] |

Blockchain Federated Learning |

Mobile devices’ local model updates are exchanged and verified by leveraging blockchain. |

|

Edgent [6] |

Model Partitioning Right-Sizing |

Adaptively partitions DNN computation between the device and edge, in order to leverage hybrid computation resources in proximity for real-time DNN inference. DNN right-sizing accelerates DNN inference through the early exit at a proper intermediate DNN layer to further reduce the computation latency. |

|

PipeDream [88] |

Model Partitioning |

PipeDream keeps all available GPUs productive by systematically partitioning DNN layers among them to balance work and minimize communication. |

|

GoSGD [89] |

Gossip Averaging |

Method to share information between different threads based on gossip algorithms and showing good consensus convergence properties. |

|

Gossiping SGD [90] |

Gossip Averaging |

Asynchronous method that replaces the all-reduce collective operation of synchronous training with a gossip aggregation algorithm. |

|

GossipGraD [91] |

Gossip Averaging |

Asynchronous communication of gradients for further reducing the communication cost. |

|

INCEPTIONN [92] |

Data Quantization |

Lossy-compression algorithm for floating-point gradients. The framework reduces the communication time by 70.9 80.7% and offers 2.2 3.1× speedup over the conventional training system while achieving the same level of accuracy. |

|

Minerva [93] |

Data Quantization Model compression |

Quantization analysis minimizes bit widths without exceeding a strict prediction error bound. Compared to a 16-bit fixed-point baseline, Minerva reduces power consumption by 1.5×. Minerva identifies operands that are close to zero and removes them from the prediction computation such that model accuracy is not affected. Selective pruning further reduces power consumption by 2.0× on top of bit width quantization. |

|

AdaDeep [94] |

Model Compression |

Automatically selects a combination of compression techniques for a given DNN that will lead to an optimal balance between user-specified performance goals and resource constraints. AdaDeep enables up to 9.8× latency reduction, 4.3× energy efficiency improvement, and 38× storage reduction in DNNs while incurring negligible accuracy loss. |

|

JALAD [95] |

Data Quantization Model Partitioning |

Data compression by jointly considering compression rate and model accuracy. A latency-aware deep decoupling strategy to minimize the overall execution latency is employed. Decouples a deep NN to run a part of it at edge devices and the other part inside the conventional cloud. |

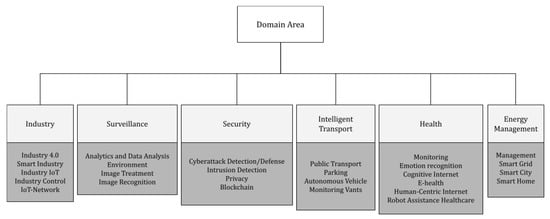

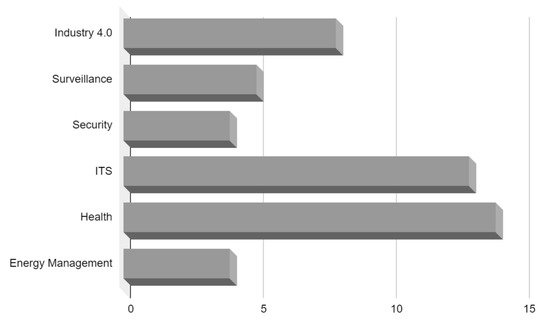

4.4. RQ4—Edge Intelligence Application Domains

This entry is adapted from the peer-reviewed paper 10.3390/s22072665