Virtual reality has been shown to facilitate perception and navigation inside 3D models, while stimulating creativity and enhancing architect/client interaction. In this scenario, to better explore the paths along the design space that this interaction suggests, it is important to support quick updates to the model while still immersed in it. Algorithmic design, an approach to architectural design that uses parametric algorithms to represent a design space rather than a single design instance, provides such support.

We present a novel architectural design process based on the integration of live coding with virtual reality, promoting an immersive approach to algorithmic design. The proposed workflow entails the use of an algorithmic design tool embedded in a virtual environment, where the architect not only creates the design but also interacts with said design, changing it by live coding its algorithmic representation from within virtual reality. In this paper, we explain the challenges faced and solutions devised for the implementation of the proposed workflow. Moreover, we discuss the applicability of algorithmic design in virtual reality to different stages of the architectural design process and the future developments that may arise from this proposal.

1. Introduction

Current digital representation tools for architectural design employ a visualization strategy that conveys the building’s form through plans, sections, elevations, and perspective views. This forces architects and clients not only to combine separate views of the model into a mental model of the entire scenario [1], but also to scale it to real size in their imagination. Virtual Reality (VR) technology can considerably improve this process by allowing users to inhabit the building and perceive it at its natural scale [2].

Despite the advantages VR brings to architectural design, it has an important limitation: most of the ideas suggested during a VR session cannot be implemented and tested during that session. In fact, most approaches for changing the design, with or without VR, are limited to manual model manipulation and, thus, cannot have extensive effects on the design. Algorithmic Design (AD) addresses this problem through the algorithmic description of the model, which allows parametric changes to the design that preserve its internal logic and thus ensure the consistency of the modified design. However, AD has not yet been used in combination with VR and, consequently, designers cannot update the algorithmic descriptions of their models while immersed in a Virtual Environment (VE).

To address this problem, in this paper, we propose Algorithmic Design in Virtual Reality (ADVR), a workflow based on the use of algorithms to represent designs that supports extensive design changes while immersed in VR. Using ADVR, architects can quickly experiment with design variations without leaving the VE. However, this workflow also entails considerable challenges, which we discuss in the following sections.

2. Related Work

In 1965, Ivan Sutherland envisioned the ultimate display [3], a reality within which the computer controls the existence of matter itself. Although we are still far from achieving this radical vision, VR technology can already provide enough realism and immersion to make it an appealing tool for various professions, and architecture is no exception. AD uses algorithms to describe architectural designs, producing complex, parametric results that benefit greatly from immersive visualization. With this in mind, several approaches have been developed that allow architects to access and change their AD programs from within the VE. This section presents the current state of the art on the integration of parametric and algorithmic design solutions with VR.

2.1. Virtual Reality in Architecture

The architectural industry is rapidly embracing VR, since this technology helps users understand building designs, particularly when compared to the traditional alternative, which is based on flat projections that must be combined and scaled to form a mental model of the design [1].

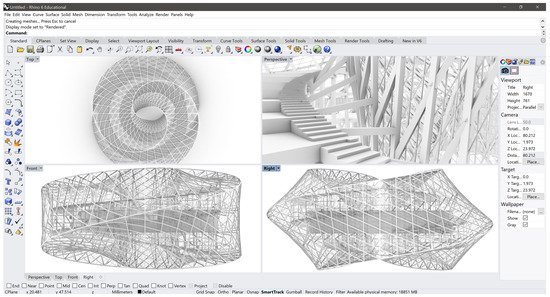

Figure 1 illustrates this scenario for the case of the Astana National Library (ANL) project. This building, originally designed by Bjarke Ingels Group in 2008, has the shape of a 3D Moebius strip, a rather complex form to understand through projections alone. Although architects are known for their 3D visualization skills and can therefore work within these constraints, their clients often cannot. For this reason, early adoption of VR technologies in this industry was mostly driven by contractors and real estate owners [4], but nowadays more and more architectural studios also use them to support design processes [2,5,6,7,8].

Figure 1. Rendered ANL model in Rhinoceros 3D shown in the 4 default viewports (top, perspective, front, and right view).

Despite the advantages of VR for understanding a building design, there is one significant limitation: most applications of VR in architecture focus on model visualization only [9,10,11], and the few existing approaches for changing the design while in the VE require manual manipulation of the virtual model [12,13] using VR controllers and the associated transformation operations. This modeling workflow tends to be slower than the traditional (digital) one, which is itself already quite slow when systematic changes are needed. There is one approach, however, that overcomes this problem: AD.

2.2. Algorithmic Design

AD is the creation of architectural designs through algorithmic descriptions, implemented as computer programs [14,15]. AD allows architects (1) to model complex geometries that would otherwise take a considerable amount of time to produce, (2) to automate repetitive and time-consuming tasks, and (3) to quickly generate diverse design solutions without having to rework the model for every iteration [16,17].
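To make point (2) concrete, the following Python sketch shows how a short program can replace a repetitive modeling task. It is a hypothetical, minimal example (the `Column` type and the `colonnade` function are illustrative and not part of any AD tool discussed here): changing a single parameter regenerates every column consistently, instead of requiring each element to be moved by hand.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Column:
    """Hypothetical column description: base position plus dimensions."""
    x: float
    y: float
    radius: float
    height: float


def colonnade(length: float, n_columns: int,
              radius: float = 0.3, height: float = 4.0) -> List[Column]:
    """Place n_columns evenly spaced columns along a straight line."""
    if n_columns < 2:
        raise ValueError("a colonnade needs at least two columns")
    spacing = length / (n_columns - 1)  # derived from the parameters, never hand-placed
    return [Column(i * spacing, 0.0, radius, height) for i in range(n_columns)]


# Changing one parameter regenerates the whole row while keeping its logic:
# the first and last columns always sit at the ends, with equal spacing.
five = colonnade(20.0, 5)  # columns at x = 0, 5, 10, 15, 20
nine = colonnade(20.0, 9)  # columns at x = 0, 2.5, ..., 20
```

The design intent (equal spacing, columns at both ends) lives in the program, so it survives every change of `n_columns`, which is the consistency property that manual editing cannot guarantee.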

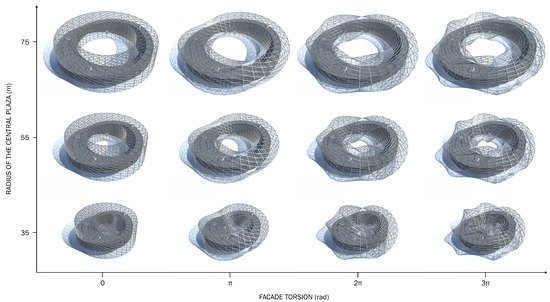

Figure 2 presents several variations of the ANL model, obtained by manipulating the radius of the central plaza and the building’s facade torsion.

Figure 2. Variations of the ANL model produced by changing selected parameters in the program: radius of the central plaza and the building’s facade torsion.
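The kind of variation shown in Figure 2 can be sketched with a parametric surface. The Python code below is a simplified, hypothetical stand-in for the ANL program, not the authors’ actual model: it samples points on a generalized Moebius strip, where `plaza_radius` sets the size of the central opening and `torsion` is the total rotation of the cross-section over one full loop (torsion = π gives the classic Moebius strip, and π, 2π, and 4π are the values used in Figure 6). Sweeping both parameters yields a family of variations from a single program.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def twisted_ring(plaza_radius: float, torsion: float, width: float = 10.0,
                 n_u: int = 64, n_v: int = 5) -> List[Point]:
    """Sample points on a twisted ring (a generalized Moebius strip).

    plaza_radius: distance from the ring's center line to the plaza center.
    torsion: total cross-section rotation (radians) over one loop; pi = Moebius.
    """
    points = []
    for i in range(n_u):
        u = 2 * math.pi * i / n_u              # angular position around the ring
        twist = torsion * u / (2 * math.pi)    # cross-section rotation at u
        for j in range(n_v):
            v = -width / 2 + width * j / (n_v - 1)  # position across the band
            r = plaza_radius + v * math.cos(twist)
            points.append((r * math.cos(u), r * math.sin(u), v * math.sin(twist)))
    return points


# Design space exploration: the same program, different parameter values.
variations = {
    (radius, torsion): twisted_ring(radius, torsion)
    for radius in (40.0, 60.0)
    for torsion in (math.pi, 2 * math.pi, 4 * math.pi)
}
```

Each entry of `variations` is a complete, internally consistent model; in an AD tool, the sampled points would be turned into surfaces or building elements rather than returned as a list.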

Traditionally, AD entails the incremental development of the program that describes a building design and the visualization of the generated model to confirm the correctness of the program. In VR, however, the design process needs to become real-time and interactive. Real-time interaction between the program and the model requires a high-performance AD tool, so that the designer gets immediate visual feedback on the impact of the changes applied to the program. Grasshopper [18], a visual programming environment, and Luna Moth [19], a textual programming one, are good examples of tools that have this liveness [20] property. Nevertheless, they were not designed for VR, and known integrations with this technology remain within the sphere of static visualization [21], failing to take advantage of AD’s interactive potential.

2.3. Live Coding

Live coding is a technique centered on writing interactive programs on the fly [20]. In the case of VR, we envision live coding in a scenario where the architect codes the algorithmic description of the model alongside the resulting geometry. The idea is similar to the workflow supported by AD tools such as Dynamo [22] and Luna Moth [19], where the code (or visual components) is manipulated in the same environment in which the model is concurrently updated.
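A minimal sketch of such a re-evaluation loop, written in Python, is shown below. It assumes a hypothetical convention in which the program text defines a `design()` function that returns the model; whenever the text changes, it is re-executed and the new model replaces the old one. Keeping the last valid model when an edit fails, as done here, is one common live coding design choice (a half-typed line should not wipe the scene); it is not taken from any of the tools cited above.

```python
from typing import Any, Optional


class LiveSession:
    """Re-evaluates a design program whenever its source text changes."""

    def __init__(self) -> None:
        self.source: Optional[str] = None       # last successfully evaluated text
        self.model: Any = None                  # model produced by that text
        self.error: Optional[Exception] = None  # last evaluation error, if any

    def update(self, source: str) -> Any:
        if source == self.source:
            return self.model                   # unchanged program: keep current model
        namespace: dict = {}
        try:
            exec(source, namespace)             # (re)define the program; runs arbitrary code
            model = namespace["design"]()       # hypothetical entry-point convention
        except Exception as exc:                # syntax errors, runtime errors, missing design()
            self.error = exc
            return self.model                   # keep the last valid model on screen
        self.source, self.model, self.error = source, model, None
        return self.model


session = LiveSession()
session.update("def design():\n    return [('box', i) for i in range(3)]")
session.update("def design():\n    return [('box', i) for i in range(5)]")  # edit: 5 boxes
session.update("def design(:")  # half-typed edit: the 5-box model is kept
```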

Using VR for coding is not new either. The Primitive tool [23], for instance, has demonstrated the use of VR for collaborative visualization in software analysis. Specific coding applications for VR have also been developed, such as NeosVR’s LogiX [24], a multiplayer visual programming system for VR; Rumpus [25], a live coding playground for room-scale VR; Fenek [26], a live coding JavaScript framework that allows developers to modify the underlying render engine while immersed in the VE; and RiftSketch [27] and CodeChisel3D [28], both browser-based live coding environments for VR. However, most of these tools were only tested with simple graphical models, and none were applied to the architectural context.

2.4. Parametric Design Solutions for Virtual Reality

Within the specific context of architectural design, transporting the programming environment to the VE allows architects and clients to manipulate the models in VR. Given that, in this industry, the production line involves multiple iterations of solutions discussed by several stakeholders, having these discussions take place synchronously with the project’s development can resolve the existing asymmetric collaboration [29]. This, in turn, can considerably accelerate the ideation process, saving time and resources.

Solutions that allow for the modification of programs in VR have also been developed. Parametric Modelling Within Immersive Environments [30], Shared Immersive Environments for Parametric Model Manipulation [31], and Immersive Algorithmic Design [32] present solutions to connect AD tools to a VE, where architects are immersed in their models, apply changes to the program, and visualize the corresponding impacts in real time.

All three solutions adopt a visual programming approach, with the first two [30,31] only allowing parameter manipulation. This means users do not have access to the entire program in VR, but only to a chosen set of parameters, which they can change via sliders. For this reason, these solutions fall within the scope of parametric design only, not AD, according to the definitions proposed in [33]. The third approach [32] goes further, also supporting textual programming and full control over the program. Nevertheless, although that paper presents multiple code manipulation solutions, it does not discuss their implementation within the proposed system, nor does it formally evaluate the proposal.

3. Algorithmic Design in Virtual Reality

ADVR aims to aid the algorithmic design process by integrating live coding in VR. In this workflow, architects use a Head-Mounted Display (HMD) and an AD tool integrated in a VE to code their designs while immersed in them. In the VE, the generated design is concurrently updated to reflect the changes made to its algorithmic description. Seeing these updates in near real time allows designers to conduct an iterative process of concurrent programming and visualization of the generated model in VR, improving the project with each cycle.

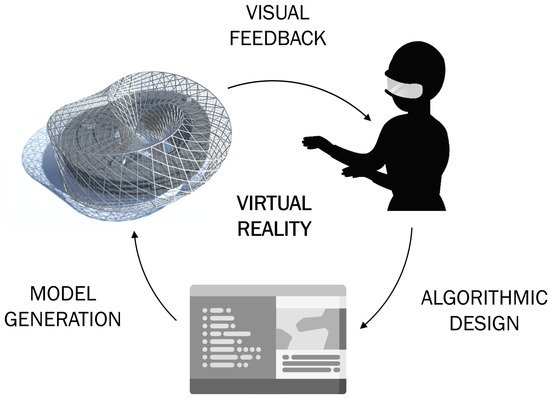

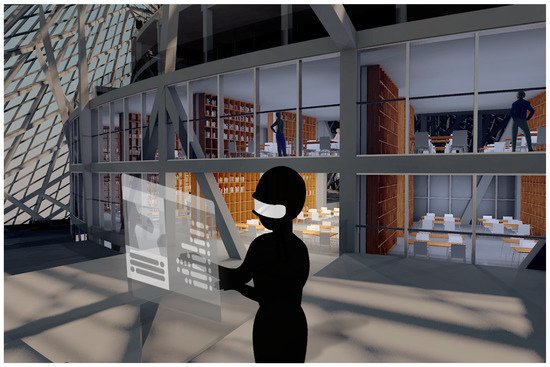

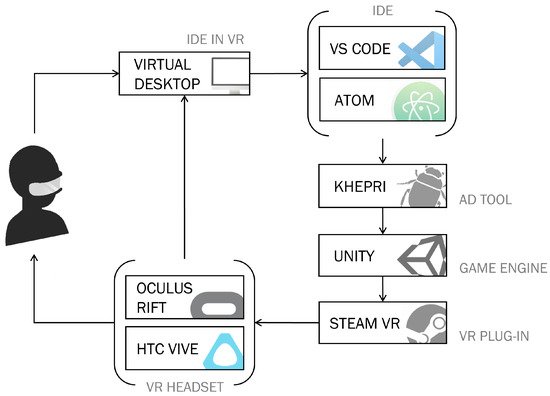

Figure 3 presents a conceptual scheme of this loop: architects develop the algorithmic description of the design, using an Integrated Development Environment (IDE) or a programming editor to input the coding instructions into the AD tool, which then generates the corresponding model. From within the VE, designers then evaluate the results and, possibly, modify the algorithmic description, thus repeating the loop. Figure 4 presents a mock-up of the corresponding VR experience.

Figure 3. Conceptual scheme of the architect/program/model loop happening in VR: architects develop the algorithmic description of the design in VR, generating the corresponding model around them, whose visualization motivates further changes to the description.

Figure 4. Mock-up of the ADVR experience: the architect live codes the algorithmic description of the design from within the VE.

To provide an efficient coding platform in the VE that enables this workflow, the following components are required: (1) an interactive AD tool that allows for the generation of complex architectural models; (2) a VR tool that can be coupled to the AD framework, which must be a sufficiently high-performance visualizer, such as a game engine, to guarantee near real-time feedback; (3) a mechanism that allows designers to code while immersed, i.e., an IDE or a programming editor; (4) text input mechanisms; (5) language and IDE considerations; and, finally, (6) the ability to smoothly update the model even when its complexity hinders performance. The implementations we chose for each of these items are described below. Figure 5 presents the implementation workflow, featuring the chosen tools.

Figure 5. ADVR implementation: while immersed in the VE, the user accesses the IDE through the headset’s virtual desktop application. The AD tool is responsible for translating the given instructions into operations recognized by the game engine, which is connected to the HMD through the VR plug-in.

3.1. AD Tool

For the AD tool, we opted for Khepri [36], a portable AD tool that integrates multiple backends for different design purposes, namely Computer-Aided Design (CAD), Building Information Modeling (BIM), game engines, rendering, analysis, and optimization tools. Using multiple tools throughout the development process is motivated by their different benefits: CAD tools outperform the rest in free-form modeling [37]; BIM tools are essential for dealing with construction information [38]; game engines present a good alternative for fast visualization and navigation [39]; rendering tools offer realistic but time-consuming representations of models for presentation; and, finally, analysis and optimization tools inform and guide the design process based on the model’s performance [40].
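The benefit of a portable AD tool is that one design program can drive different backends. The Python sketch below illustrates that idea with a structural `Protocol`. It is not Khepri’s API (Khepri is written in Julia), and `CADBackend` and `GameEngineBackend` are hypothetical stand-ins that merely record what a real backend would create.

```python
from typing import List, Protocol, Tuple

Point = Tuple[float, float, float]


class Backend(Protocol):
    """Operations a design program may request from any backend."""
    def box(self, corner: Point, dx: float, dy: float, dz: float) -> None: ...


class CADBackend:
    """Hypothetical stand-in for a CAD tool: records solid-modeling commands."""
    def __init__(self) -> None:
        self.commands: List[str] = []

    def box(self, corner: Point, dx: float, dy: float, dz: float) -> None:
        self.commands.append(f"BOX {corner} {dx} {dy} {dz}")


class GameEngineBackend:
    """Hypothetical stand-in for a game engine: records mesh instances to render."""
    def __init__(self) -> None:
        self.instances: List[Tuple[str, Point, Point]] = []

    def box(self, corner: Point, dx: float, dy: float, dz: float) -> None:
        self.instances.append(("cube", corner, (dx, dy, dz)))


def row_of_blocks(backend: Backend, n: int, size: float = 2.0, gap: float = 1.0) -> None:
    """A design program written once, independent of the target backend."""
    for i in range(n):
        backend.box((i * (size + gap), 0.0, 0.0), size, size, size)


# The same program drives both backends.
cad, engine = CADBackend(), GameEngineBackend()
row_of_blocks(cad, 3)
row_of_blocks(engine, 3)
```

Because the design program only depends on the `Backend` operations, switching from a CAD tool during modeling to a game engine for immersive visualization does not require rewriting the design.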

3.2. VR Tool

For the game engine, we chose Unity [41], since it provides good visual quality (including lighting, shadows, and physics), good visualization performance for average-scale architectural projects, platform independence, availability of assets, and VR integration. Through the SteamVR plug-in, Unity communicates with the two tested headsets: Oculus Rift and HTC Vive. Note, however, that despite the fast response of the game engine, the capacity for real-time feedback will always depend on the model’s complexity.
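A back-of-the-envelope calculation shows why complexity matters. Assuming a 90 Hz refresh rate (that of the original Oculus Rift and the HTC Vive), each frame must be produced within the budget below, so a model regeneration lasting, for illustration, 2 s spans roughly 180 displayed frames:

$$
t_{\text{frame}} = \frac{1}{f} = \frac{1}{90\ \text{Hz}} \approx 11.1\ \text{ms},
\qquad
\frac{2\ \text{s}}{11.1\ \text{ms}} \approx 180\ \text{frames}.
$$

This is why model updates must not block rendering, a problem addressed in Section 3.6.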

3.3. IDE Projection

For architects to be able to program from inside the VE, they need to access their preferred IDE while immersed in VR. To this end, we use the virtual desktop application provided by most HMDs (including the ones used for this implementation), which mirrors the user’s desktop in VR, allowing the use of any application and, more specifically, of any IDE.

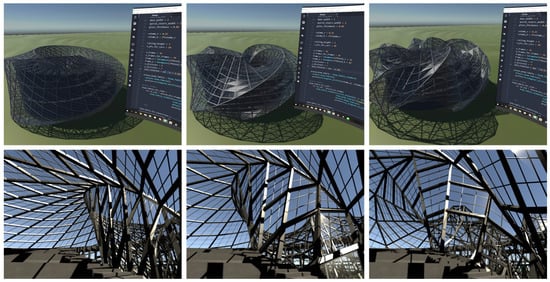

Figure 6 presents the workflow with the ANL model being live coded in VR. In this case, a change in the facade torsion parameter can be observed. The pictures in the first row also show that the mirrored desktop partially blocks the view of the scene. However, this two-dimensional representation fails to convey the full 360° experience the user has in the VE. Moreover, the screen can be moved, scaled, or hidden at the designer’s will, as shown in the second row of images in Figure 6.

Figure 6. ADVR of the ANL model: manipulation of the facade torsion parameter (π, 2π, and 4π).

3.4. Text Input

Regarding textual input, several solutions are currently available, based on either virtual or physical keyboards. Based on the results of previous studies on the matter [42,43], we opted for a physical keyboard in this implementation.

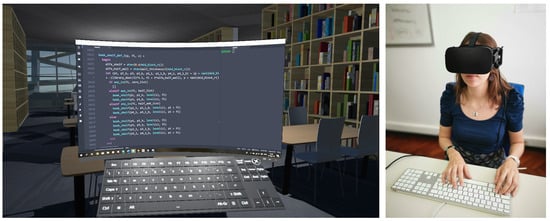

Figure 7 presents a use-case of the assembled workflow, showcasing both the IDE display along with the generated model of ANL in VR, and the interaction mechanisms in action, inside and outside the VE.

Figure 7. ADVR workflow: on the left, the VE where the model, the IDE, and the responsive virtual keyboard are visible; on the right, the architect typing on the physical keyboard.

We stress that, although the chosen solution performs better than the alternatives, it does not yet match typing on a normal keyboard outside the VE, particularly for users who cannot touch type and, thus, rely heavily on both visual and haptic feedback. This issue is particularly relevant in the present context, as most programming architects are, in fact, inexperienced typists. Hence, other solutions to the problem must be sought, and we believe the industry will soon provide them, as some interesting new concepts are already starting to emerge [44].

3.5. Language and Editor

To speed up typing, we opted for a dynamically typed programming language, as these tend to be more concise than statically typed ones. Although the latter offer better run-time performance and can detect static semantic errors, they force the user to provide type information, which makes programs considerably more verbose. The chosen IDE can also help the user with typing, particularly by providing automatic completion for names and for entire syntactic structures, such as function definitions. For this implementation, we used the Julia language in the Atom editor with the Juno plugin, a combination that considerably increases the user’s typing speed. As shown in Figure 5, the Visual Studio Code editor was also tested, although the lack of a user-friendly menu with shortcut buttons forced users to type more in order to run commands.

3.6. Model Update

ADVR takes advantage of game engines’ ability to efficiently process geometry. As a result, we can generate large-scale models, such as the ANL, in a matter of seconds. When applying changes to the model in VR, however, even these few seconds are troublesome, since the AD tool deletes and regenerates the entire model in each iteration, regardless of the number of changes applied. Consequently, as the model grows, a lag becomes noticeable, and the sudden reshaping of the entire VE is disorienting, making it difficult to understand the effects of the applied changes.

To solve these problems, we implemented a multi-buffering approach that keeps the user in an outdated but consistent model, while a new model is invisibly being generated. When finished, the new model replaces the old one, allowing the user to immediately visualize the impact of the changes. It is also possible to have several models available simultaneously, in different buffers, for the user to switch back and forth between them, facilitating comparisons and improving the decision-making process. Figure 6 illustrates this by showing two different views of the variations created.
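The multi-buffering idea can be illustrated with the following Python sketch, under simplifying assumptions: a model is any Python object, generation runs in a background thread, and "showing" a model just means updating which buffer index is visible. In a game engine, the swap would instead toggle the visibility of scene objects. `ModelBuffers` and its methods are hypothetical names, not part of Khepri or Unity.

```python
import threading
from typing import Any, Callable, List, Optional


class ModelBuffers:
    """Keeps a consistent model visible while a new one is generated off-screen."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.buffers: List[Any] = []        # finished models, oldest first
        self.visible: Optional[int] = None  # index of the buffer shown in the VE

    def _generate(self, generator: Callable[..., Any], params: dict) -> None:
        model = generator(**params)  # slow step; the old model stays visible meanwhile
        with self._lock:
            self.buffers.append(model)
            self.visible = len(self.buffers) - 1  # swap in a single step

    def regenerate(self, generator: Callable[..., Any], **params: Any) -> threading.Thread:
        """Start generating a new model in the background and return the worker."""
        worker = threading.Thread(target=self._generate, args=(generator, params))
        worker.start()
        return worker

    def show(self, index: int) -> None:
        """Switch back and forth between finished variations."""
        with self._lock:
            if not 0 <= index < len(self.buffers):
                raise IndexError("no such buffer")
            self.visible = index

    @property
    def current(self) -> Any:
        with self._lock:
            return None if self.visible is None else self.buffers[self.visible]
```

Calling `regenerate` returns immediately; until the worker finishes, `current` still returns the previous model, which is the behavior described above. Keeping every finished model in `buffers` is what enables comparisons between variations; a real implementation would probably cap the number of buffers to bound memory use.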

This entry is adapted from the peer-reviewed paper 10.3390/architecture2010003