Subjects:

Oncology

Lung cancer is the leading cause of malignancy-related mortality worldwide. AI has the potential to support lung cancer care from detection and diagnosis through decision making to prognosis prediction. AI could reduce the workload of reading low-dose computed tomography (LDCT) scans, chest X-rays (CXR), and pathology slides. As a second reader of LDCT and CXR images, AI reduces the effort required of radiologists and increases the accuracy of nodule detection.

- artificial intelligence

- machine learning

- lung cancer

1. Introduction

Lung cancer accounts for the largest portion of malignancy-related deaths worldwide [1]. It is also the leading cause of malignancy-related death in Taiwan [2,3]. The majority of patients diagnosed with lung cancer are at a late stage and therefore have a poor prognosis. In addition to the late stage at diagnosis, the heterogeneity of the imaging features and histopathology of lung cancer also makes it challenging for clinicians to choose the best treatment option.

The imaging features of lung cancer vary from a single tiny nodule to ground-glass opacity, multiple nodules, pleural effusion, lung collapse, and multiple opacities [4]; simple and small lesions are extremely difficult to detect [5]. Histopathological types include adenocarcinoma, squamous cell carcinoma, small cell carcinoma, and many other rare histological types. The histological subtypes vary even more. For example, at least six common subtypes and a total of eleven subtypes of adenocarcinoma were reported in the 2015 World Health Organization classification of lung tumors [6], with more subtypes added in the 2021 version [7]. Treatment options depend heavily on the clinical staging, histopathology, and genomic features of the lung cancer. In the era of precision medicine, clinicians need to collect all of these features and decide whether to administer chemotherapy, targeted therapy, or immunotherapy, alone or combined with surgery or radiotherapy.

Whether or not to treat the disease is always a question in daily practice. Clinicians would like to know the true relationship between the observations and interventions (inputs) and the results (outputs). In other words, they seek a model for disease detection, classification, or prediction. Currently, this knowledge is based on clinical trials and the experience of doctors. Doctors become exhausted by repeatedly reading images and/or pathology slides to make an accurate diagnosis. Reviewing charts to determine the best treatment options for patients also consumes a considerable amount of time. A good prediction/classification model would simplify the entire process. This is where artificial intelligence (AI) comes in.

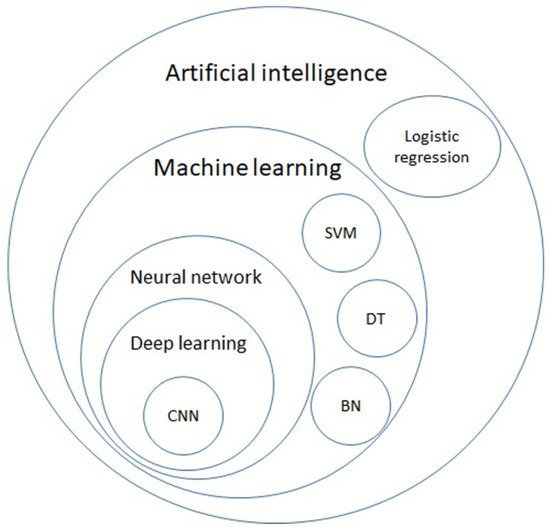

AI is a general term that does not have a strict definition. Broadly, AI is an algorithm driven by existing data to predict or classify objects [8]. The main components include the dataset used for training, the pretreatment method, the algorithm used to generate the prediction model, and a pre-trained model that speeds up model building and carries over previous experience. Machine learning (ML) is a subclass of AI and is the science of obtaining algorithms that solve problems without being explicitly programmed; examples include decision trees (DTs), support vector machines (SVMs), and Bayesian networks (BNs). Deep learning is a further subclass of ML, characterized by multiple layers, that achieves feature selection and model fitting at the same time [9]. The hierarchical relationship between these terms is displayed in Figure 1.

Figure 1. Venn diagram of artificial intelligence (AI), machine learning (ML), neural network, deep learning, and further algorithms in each category. AI is a general term for a program that predicts an answer to a certain problem, where one of the conventional methods is logistic regression. ML learns the algorithm through input data without explicit programming. ML includes algorithms such as decision trees (DTs), support vector machines (SVMs), and Bayesian networks (BNs). A neural network uses each ML algorithm as a neuron with multiple inputs and a single output, forming a structure that mimics the human brain. Deep learning is formed from multiple layers of neural networks, and the convolutional neural network (CNN) is one of its best-known architectures.

2. Diagnosis

When a nodule is detected, clinicians need to know the properties of the lung nodule. The gold standard is to acquire tissue samples via either biopsy or surgery. Imaging features offer a way to estimate the properties of the lung nodule through radiomics, as mentioned in the previous section. Aside from imaging features, the histopathological features also affect further treatment. Following the path of digital radiology, whole slide imaging (WSI) has started the trend toward digital histopathology. With digitized WSI data, AI can help pathologists with daily tasks and beyond, ranging from tumor cell recognition and segmentation [47], histological subtype classification [48,49,50,51], and PD-L1 scoring [52] to tumor-infiltrating lymphocyte (TIL) counting [53].

2.1. Radiomics

Following the idea of radiomics in nodule detection and malignancy risk stratification, radiomics has also been applied to predict the histopathological features of lung nodules/masses [40]. Researchers used logistic regression on radiomic and clinical features to distinguish small cell lung cancer from non-small cell lung cancer (NSCLC) with an AUC of 0.94 and an accuracy of 86.2% [41]. A LASSO logistic regression model was used to classify adenocarcinomas and squamous cell carcinomas in the NSCLC group [42]. Further molecular features such as Ki-67 [43], epidermal growth factor receptor (EGFR) [44], anaplastic lymphoma kinase (ALK) [45], and programmed cell death 1 ligand 1 (PD-L1) [46] were also shown to be predictable with AI-analyzed radiomics, a non-invasive and simple method.
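The AUC and accuracy figures quoted for such classifiers can be made concrete with a small calculation. The following is a minimal sketch, not the method of the cited studies: the labels and scores are hypothetical, and the function names `auc_score` and `accuracy` are introduced here only for illustration. AUC is computed as the fraction of positive/negative pairs in which the positive case receives the higher score, with ties counted as half.

```python
def auc_score(labels, scores):
    """AUC as the probability that a random positive case outranks a random negative one."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # a tie counts as half a win
    return wins / (len(pos) * len(neg))


def accuracy(labels, scores, threshold=0.5):
    """Fraction of cases classified correctly at a fixed probability threshold."""
    predicted = [1 if s >= threshold else 0 for s in scores]
    correct = sum(1 for y, p in zip(labels, predicted) if y == p)
    return correct / len(labels)


# Hypothetical data: 1 = small cell lung cancer, 0 = NSCLC (illustration only).
labels = [1, 1, 1, 0, 0, 0, 1, 0]
scores = [0.91, 0.78, 0.42, 0.35, 0.55, 0.12, 0.66, 0.08]

auc = auc_score(labels, scores)
acc = accuracy(labels, scores)
print(f"AUC = {auc:.4f}, accuracy = {acc:.2f}")  # AUC = 0.9375, accuracy = 0.75
```

AUC does not depend on a decision threshold, whereas accuracy does, which is why the two numbers are usually reported together.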

2.2. WSI

The emergence of WSI is a landmark in modern digital pathology. WSI depends on a slide scanner that transforms glass slides into digital images at the desired resolution. Once the images are stored on the server, pathologists can view them on their personal computers or handheld devices. Following a path similar to that of DICOM in diagnostic radiology, in 2017 the FDA approved WSI systems from two vendors for primary diagnosis [115,116]. Meanwhile, the DICOM standard also planned support for WSI in PACS to facilitate the adoption of digital pathology in hospitals and further information exchange [117,118]. These features enable the building of a digital pathology network to share expertise for consultations and make education across the country possible [119].

Each WSI digital slide is a large image. It may contain more than 4 billion pixels and may exceed 15 GB when scanned at a resolution of 0.25 micrometers/pixel, referred to as 40× magnification [118,120]. With recent advances in AI and DL in image classification, segmentation, and transformation, digitized WSI offers another broad field of application. There are many applications for deep learning in cytopathology.
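The size figures above follow from simple arithmetic. The sketch below is illustrative only: the 20 mm × 20 mm scanned area and the 3 bytes per pixel (uncompressed 24-bit RGB) are assumptions that do not come from the cited sources, and the function names are hypothetical.

```python
def wsi_pixel_count(width_mm, height_mm, microns_per_pixel):
    """Number of pixels needed to cover a scanned area at a given resolution."""
    px_w = round(width_mm * 1000 / microns_per_pixel)  # 1 mm = 1000 micrometers
    px_h = round(height_mm * 1000 / microns_per_pixel)
    return px_w * px_h


def uncompressed_size_gb(pixel_count, bytes_per_pixel=3):
    """Raw size in gigabytes (10**9 bytes), assuming uncompressed RGB pixels."""
    return pixel_count * bytes_per_pixel / 1e9


# Assumed scanned area of 20 mm x 20 mm at 0.25 micrometers/pixel (40x).
pixels = wsi_pixel_count(20, 20, 0.25)
size_gb = uncompressed_size_gb(pixels)
print(f"{pixels / 1e9:.1f} billion pixels")  # 6.4 billion pixels
print(f"{size_gb:.1f} GB uncompressed")      # 19.2 GB uncompressed
```

Halving the resolution to 0.5 micrometers/pixel cuts the pixel count by a factor of four, which is one reason scan resolution is chosen per use case. Stored files are typically compressed and therefore smaller than this raw estimate.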

2.3. Histopathology

Detecting cancerous regions is the most basic and essential task of deep learning in pathology. Some models combine detection, segmentation, and histological subtyping [47,48,49]. Accuracy depends on the quality and quantity of the data and on how well the range of malignant cell differentiation states is represented. It is difficult to perform histological subtyping of lung cancer without special immunohistochemistry (IHC) staining, which causes inter-observer disagreement when reading H&E-stained slides. While the agreement between pathologists reached a kappa value of 0.485, a trained AI model can achieve a kappa value of up to 0.525 when compared with a pathologist [48]. In the detection of lymph node metastasis, a well-trained AI model can help reduce human workload and prevent errors [121]. Under time constraints, it performs better than a pathologist and has a higher detection rate for single-cell metastasis or micro-metastasis [121].
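Kappa values such as those quoted above measure agreement beyond what chance alone would produce. The following is a minimal sketch that assumes unweighted Cohen's kappa for two raters; the source does not state which kappa variant the cited study used, and the subtype labels below are hypothetical.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa: (observed - expected) / (1 - expected)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of cases")
    n = len(rater_a)
    # Observed agreement: fraction of cases given the same label by both raters.
    observed = sum(1 for a, b in zip(rater_a, rater_b) if a == b) / n
    # Expected chance agreement from each rater's label frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)


# Hypothetical subtype calls on ten slides (illustration only).
pathologist = ["acinar", "acinar", "lepidic", "solid", "papillary",
               "acinar", "solid", "lepidic", "acinar", "solid"]
model = ["acinar", "lepidic", "lepidic", "solid", "acinar",
         "acinar", "solid", "acinar", "acinar", "papillary"]

kappa = cohen_kappa(pathologist, model)
print(f"kappa = {kappa:.2f}")  # kappa = 0.42
```

In this toy example the raters agree on 6 of 10 slides, but 0.31 of that agreement is expected by chance, so kappa is noticeably lower than the raw agreement rate.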

Although WSI with H&E-stained slides is designed to view the morphology of tissues, with the aid of AI, researchers have designed methods to predict specific gene mutations, PD-L1 expression level, treatment response, and even the prognosis of patients. Focusing on lung adenocarcinoma, Coudray et al. developed an AI application using Inception-V3 for the prediction of frequently appearing gene mutations including STK11, EGFR, FAT1, SETBP1, KRAS, and TP53 [50]. The AUC of this prediction reached 0.754 for EGFR and 0.814 for KRAS, which can be treated with effective targeted agents. Sha et al. used ResNet-18 as the backbone to predict the PD-L1 status in NSCLC [55]. Their model showed an AUC between 0.67 and 0.81, depending on the PD-L1 cutoff level chosen. They suggested that morphological features may be related to the PD-L1 expression level.

Next-generation sequencing (NGS) plays an important role in modern lung cancer treatment [122]. Successful NGS testing depends on a sufficient number of tumor cells and a sufficient amount of tumor DNA. AI can assist in determining tumor cellularity [123,124]. In addition, a trained AI can help count immune cells, provided that the tissue specimen is adequately stained for special surface markers [53]. Since the PD-L1 expression level is the key predictor for immunotherapy in lung cancer, AI has been trained to calculate the proportion score for PD-L1 expression [52,125]. When slides are properly stained, computer-aided PD-L1 scoring and quantitative tumor microenvironment analysis may meet the needs of pathologists, eliminate inter-observer variation, and help achieve precise lung cancer treatment [126].
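The proportion score mentioned above reduces to a ratio of cell counts once an algorithm has classified the tumor cells. The sketch below assumes the commonly used tumor proportion score (TPS), defined as the percentage of viable tumor cells showing PD-L1 membrane staining, and assumes cutoffs of 1% and 50%. The source does not specify the scoring scheme or cutoffs, so the function names, cutoffs, and cell counts here are illustrative assumptions.

```python
def tumor_proportion_score(positive_tumor_cells, total_tumor_cells):
    """TPS in percent: PD-L1-positive tumor cells over all viable tumor cells."""
    if total_tumor_cells <= 0:
        raise ValueError("total_tumor_cells must be positive")
    if not 0 <= positive_tumor_cells <= total_tumor_cells:
        raise ValueError("positive count must lie between 0 and the total")
    return 100.0 * positive_tumor_cells / total_tumor_cells


def pd_l1_category(tps, cutoffs=(1.0, 50.0)):
    """Map a TPS to a category using assumed cutoffs of 1% and 50%."""
    low, high = cutoffs
    if tps < low:
        return "negative"
    if tps < high:
        return "low"
    return "high"


# Hypothetical cell counts produced by a detection model (illustration only).
tps = tumor_proportion_score(120, 400)
print(f"TPS = {tps:.1f}% -> {pd_l1_category(tps)}")  # TPS = 30.0% -> low
```

Because the category changes at fixed cutoffs, small counting differences near 1% or 50% can change the result, which is where inter-observer variation matters most.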

However, there are several barriers to the translation of AI applications into clinical services. First, AI applications may not work well when applied to other pathology laboratories, scanners, or diverse protocols [127]. Second, most AI applications are designed for their own unique functions, so users must launch several applications for different purposes and spend a lot of time transferring data. Third, medical devices powered by AI applications require regulatory approval. Fourth, most published articles and works were in-house studies and laboratory-developed tests. All of these barriers may restrict the deployment of trained AI models in daily clinical practice [119].

2.4. Cytology

WSI for cytology differs from WSI for histopathology. Cytology slides are not evenly sliced flat layers. Instead, entire cells lie on the glass, often in multiple cell layers. Cytologists therefore tend to use the focus function to look through the cells. When a cytology glass slide is digitized, the focus function is simulated through the Z-stack function, which captures multiple layers at different focal planes [128,129]. This method yields a larger WSI file, approximately 10 times that of a typical histological case. Multiple image layers also increase complexity and pose challenges to AI applications.

Few articles have discussed AI in cytology, and even fewer have focused on lung cancer. For thyroid cancer, Lin et al. proposed a DL method for thyroid fine-needle aspiration (FNA) samples and ThinPrep (TP) cytological slides for detecting papillary thyroid carcinoma [130]. The authors did not claim the ability to detect other cell types of thyroid cancer using their method. AI could be applied to various cytology samples from lung cancer patients, including pleural effusion, lymph node aspiration, tissue aspiration samples, and endobronchial ultrasound-guided transbronchial needle aspiration (EBUS-TBNA) of mediastinal lymph nodes.

3. Decision Making and Prognosis Prediction

Oncologists would like to push this technique to its limits, and there are many exciting possibilities for the use of AI. By predicting treatment response, including survival and adverse events, AI has shown the potential to play a role in clinical decision making [13], to help surgeons select the specific groups of patients who should receive surgery, and to aid radiotherapists in planning the radiation zone.

3.1. Medication Selection

In late-stage lung cancer, the identification of driver mutations, PD-L1 expression, and tumor oncogenes most strongly affects the choice of treatment. Using WSI and radiomics, AI could help to identify EGFR mutations [44,50], ALK [45], and PD-L1 expression [46,55,56]. EGFR mutation subtypes have also been classified using radiomic features [57].

Another line of research is the use of radiomics, WSI, and clinical data to directly predict cancer treatment response or survival [131]. Dercle et al. retrospectively analyzed data from prospective clinical trials and found that an AI model based on the random forest algorithm and CT-based radiomic features predicted treatment sensitivity to nivolumab with an AUC of 0.77, to docetaxel with an AUC of 0.67, and to gefitinib with an AUC of 0.82 [58]. CT-based radiomics models have also been reported to predict the overall survival of lung cancer patients [59,60].

One patent application publication claimed that radiomic features of segmented lung cancer cell nuclei can predict responses to immunotherapy with an AUC of up to 0.65 in the validation dataset [132]. Although there is no specific survival prediction model for lung cancer, Ellery et al. developed a risk prediction model using the TCGA Pan-Cancer WSI database, which includes lung cancer [133]. However, the DL algorithm did not provide acceptable predictive power for lung adenocarcinoma or lung squamous cell carcinoma.

3.2. Surgery

The gold standard for the treatment of early-stage lung cancer is surgical resection. AI has been applied to pre-surgical evaluation [61,62] and to prognosis prediction after surgery, and could help identify patients who are suitable to receive adjuvant chemotherapy after surgery [54].

In pre-surgical evaluation, radiologist-level AI could help predict visceral pleural invasion [62] and identify early-stage lung adenocarcinomas suitable for sub-lobar resection [61]. After surgery, AI could play a role in predicting prognosis. A model based on radiomic feature nomograms could identify high-risk groups whose postsurgical tumor recurrence risk is 16-fold higher than that of the low-risk group [134]. A CNN model pre-trained with a radiotherapy dataset successfully predicted 2-year overall survival after surgery [135]. A model integrating genomic and clinicopathological features was able to identify patients who were at risk for recurrence and suitable to receive adjuvant therapy [54].

3.3. Radiotherapy

Stereotactic body radiotherapy (SBRT) is currently the standard of care to treat early-stage lung cancer and/or provide local control for patients who are medically inoperable or refuse surgery. Radiomics-based models have been reported to successfully predict 1-year tumor recurrence from CT scans performed 3 and 6 months after SBRT [63]. Lewis and Kemp also developed a model trained on the TCGA dataset to predict cancer resistance to radiation [64]. Radiation pneumonitis, a well-known side effect of radiotherapy, can be lethal, and clinicians would like to prevent it. An AI model based on pretreatment CT radiomics was superior to the traditional model using dosimetric and clinical predictors in predicting radiation pneumonitis [65]. Another algorithm, an artificial neural network (ANN) trained with radiomics extracted from a 3D dose map of radiotherapy, has been shown to predict acute and late pulmonary toxicities with an accuracy of 0.69 [66]. A well-designed prediction model may help to prevent radiation pneumonitis in the future.

This entry is adapted from the peer-reviewed paper 10.3390/cancers14061370
