Fabric-handling robots could be used by individuals as home-assistive robots. In most industries, the majority of processes are automated, and human workers have either been replaced by robots or work alongside them. Industries that handle limp materials such as fabrics, clothes, and garments have changed far less than today’s technological progress would suggest. Integrating robots into these industries is demanding, mostly because of the natural and mechanical properties of limp materials.

- sensors

- limp materials

- garments

- fabrics

- automation

- grippers

- robot

1. Introduction

Textile industries have a relatively low level of automation in their production lines, which constantly transport, handle, and process limp materials. In 1990, the challenges of developing a fully automated garment manufacturing process were stated in [1]. According to that article, one of the most common mistakes is to approach the problem without an engineering mindset, characterizing materials as soft, harsh, slippery, etc., instead of using quantitative values. The article suggests that fabrics be carefully studied and, if needed, redesigned to fulfil the desired specifications. As for the machinery used to manipulate fabrics, the soft and sensitive surface of such materials, combined with their lack of rigidity, demands sophisticated, specially designed grippers and robots that can handle limp materials accurately, dexterously, and quickly. Integrating robots into production aims to improve the quality of the final product, so technology that might harm the material surface, such as the bulk machines used in other industries, is not adopted.
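The contrast between qualitative labels and quantitative values can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the property names (`areal_density_g_m2`, `bending_rigidity_uNm`, `friction_coefficient`), the `GripperLimits` envelope, and all numeric values are hypothetical and do not come from [1] or from any measured fabric.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class FabricSpec:
    """Quantitative description of a fabric (fields are illustrative assumptions)."""
    name: str
    areal_density_g_m2: float    # mass per unit area
    bending_rigidity_uNm: float  # resistance to bending, micro-newton-metres
    friction_coefficient: float  # fabric-on-gripper friction, dimensionless


@dataclass(frozen=True)
class GripperLimits:
    """Hypothetical operating envelope of a gripper."""
    max_areal_density_g_m2: float
    min_bending_rigidity_uNm: float
    min_friction_coefficient: float


def violations(fabric: FabricSpec, limits: GripperLimits) -> List[str]:
    """Return the names of the properties that fall outside the envelope."""
    problems = []
    if fabric.areal_density_g_m2 > limits.max_areal_density_g_m2:
        problems.append("areal_density_g_m2")
    if fabric.bending_rigidity_uNm < limits.min_bending_rigidity_uNm:
        problems.append("bending_rigidity_uNm")
    if fabric.friction_coefficient < limits.min_friction_coefficient:
        problems.append("friction_coefficient")
    return problems


if __name__ == "__main__":
    # All numbers below are made up for the example.
    limits = GripperLimits(300.0, 5.0, 0.3)
    fabric_a = FabricSpec("fabric A", 120.0, 3.0, 0.2)
    fabric_b = FabricSpec("fabric B", 280.0, 40.0, 0.6)
    for fabric in (fabric_a, fabric_b):
        print(fabric.name, violations(fabric, limits))
```

A record of this kind replaces a label such as "slippery" with a number that can be compared against a specification, and it points to which property would need to change if a fabric were to be redesigned.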

2. Sensor Analysis

2.1. Working Principle

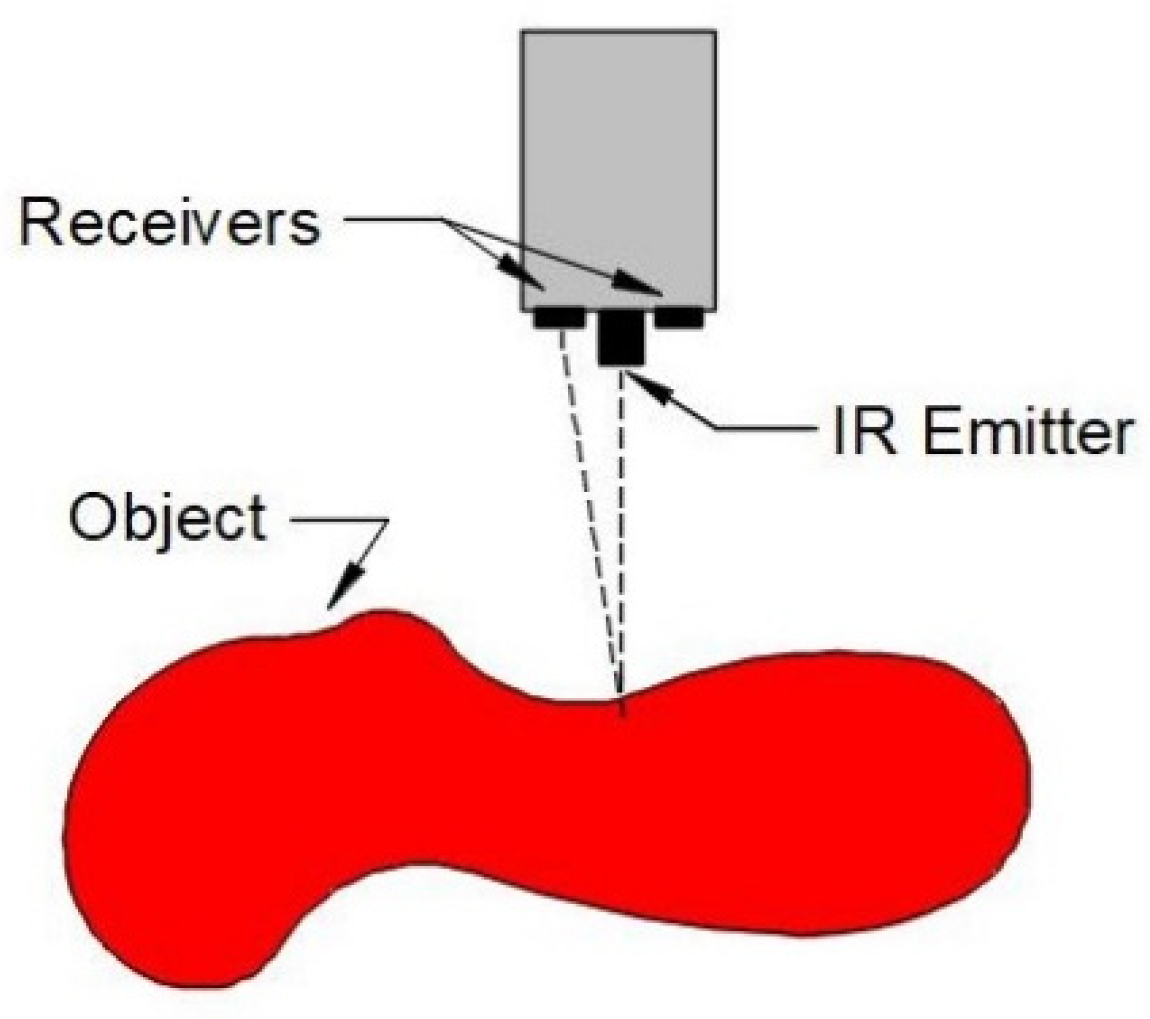

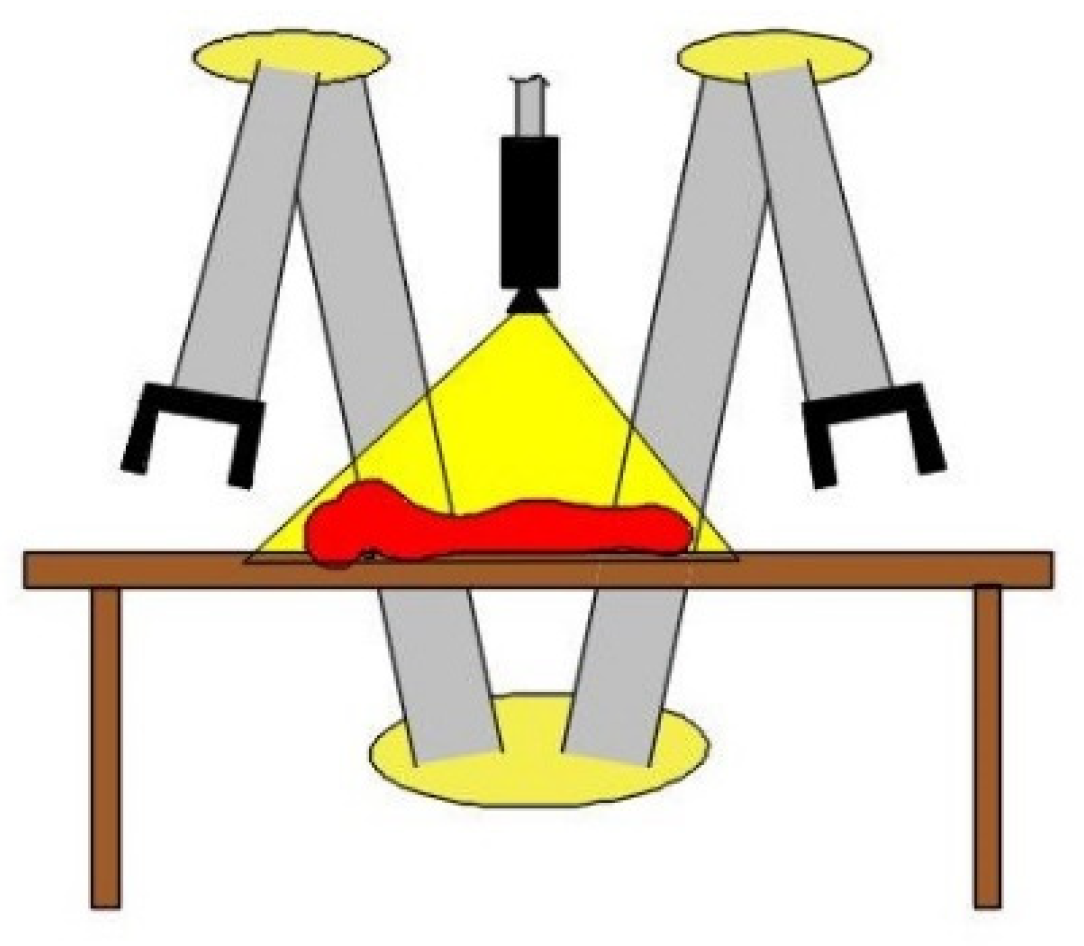

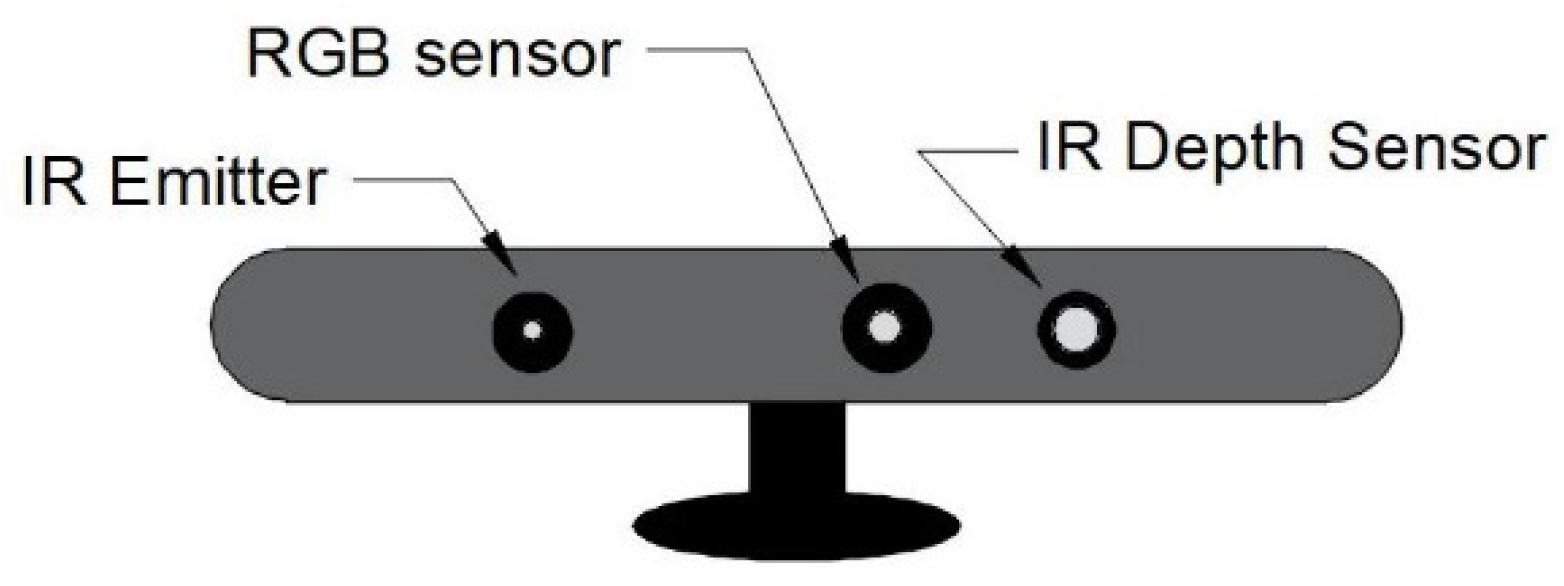

2.1.1. Visual Sensors

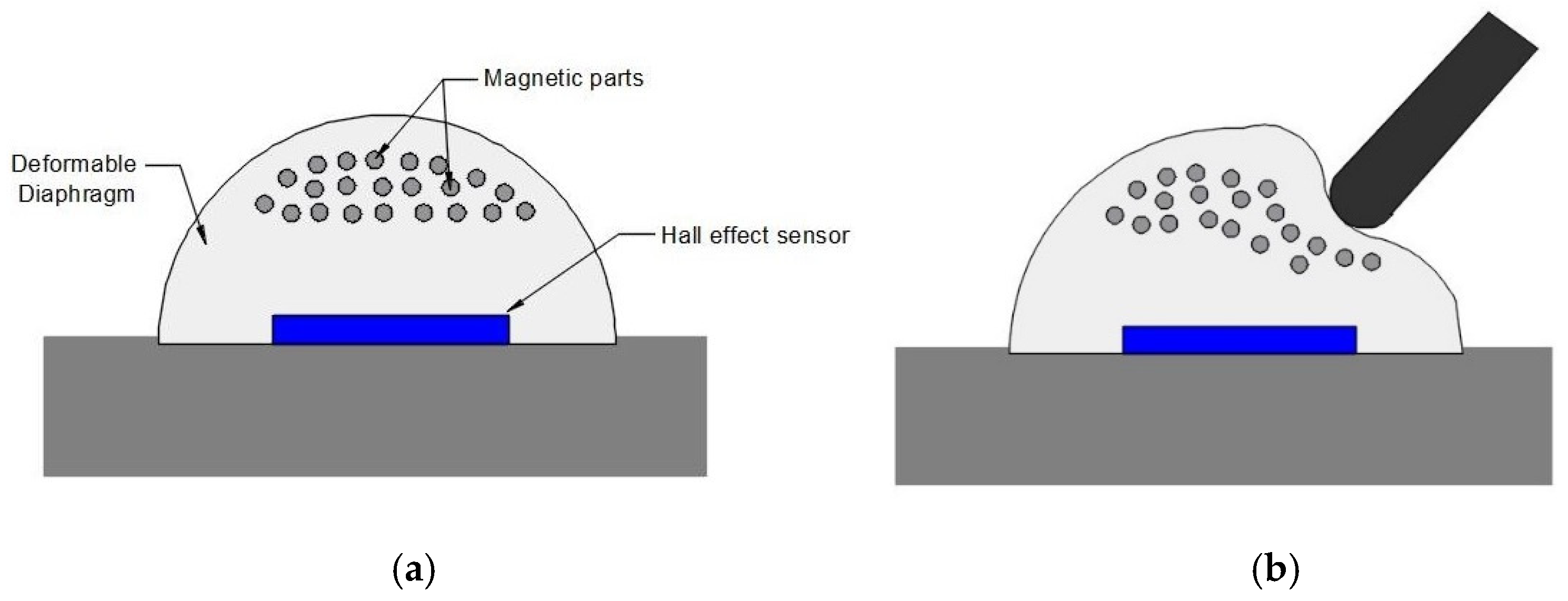

2.1.2. Tactile Sensors

2.1.3. Body Sensors

2.1.4. Wearable Sensors

2.2. Data Processing and a Reference to the Type of Manipulated Object

2.2.1. Object Detection

2.2.2. Control

2.2.3. Object Classification

3. Conclusions

This entry is adapted from the peer-reviewed paper 10.3390/machines10020101

References

- Seesselberg, H.A. A Challenge to Develop Fully Automated Garment Manufacturing. In Sensory Robotics for the Handling of Limp Materials; Springer: Berlin/Heidelberg, Germany, 1990; pp. 53–67.

- Koustoumpardis, P.N.; Aspragathos, N.A. A Review of Gripping Devices for Fabric Handling. In Proceedings of the International Conference on Intelligent Manipulation and Grasping IMG04, Genova, Italy, 1–2 July 2004; pp. 229–234.

- Seita, D.; Jamali, N.; Laskey, M.; Kumar Tanwani, A.; Berenstein, R.; Baskaran, P.; Iba, S.; Canny, J.; Goldberg, K. Deep Transfer Learning of Pick Points on Fabric for Robot Bed-Making. arXiv 2018, arXiv:1809.09810.

- Osawa, F.; Seki, H.; Kamiya, Y. Unfolding of Massive Laundry and Classification Types by Dual Manipulator. J. Adv. Comput. Intell. Intell. Inform. 2007, 11, 457–463.

- Kita, Y.; Kanehiro, F.; Ueshiba, T.; Kita, N. Clothes handling based on recognition by strategic observation. In Proceedings of the 2011 11th IEEE-RAS International Conference on Humanoid Robots, Bled, Slovenia, 26–28 October 2011; pp. 53–58.

- Bersch, C.; Pitzer, B.; Kammel, S. Bimanual Robotic Cloth Manipulation for Laundry Folding. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 1413–1419.

- Miller, S.; Berg, J.V.D.; Fritz, M.; Darrell, T.; Goldberg, K.; Abbeel, P. A geometric approach to robotic laundry folding. Int. J. Robot. Res. 2011, 31, 249–267.

- Maitin-Shepard, J.; Cusumano-Towner, M.; Lei, J.; Abbeel, P. Cloth grasp point detection based on multiple-view geometric cues with application to robotic towel folding. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–7 May 2010; pp. 2308–2315.

- Osawa, F.; Seki, H.; Kamiya, Y. Clothes Folding Task by Tool-Using Robot. J. Robot. Mechatron. 2006, 18, 618–625.

- Yamazaki, K.; Inaba, M. A Cloth Detection Method Based on Image Wrinkle Feature for Daily Assistive Robots. In Proceedings of the MVA2009 IAPR International Conference on Machine Vision Applications, Yokohama, Japan, 20–22 May 2009; pp. 366–369. Available online: http://www.mva-org.jp/Proceedings/2009CD/papers/11-03.pdf (accessed on 19 April 2021).

- Twardon, L.; Ritter, H. Interaction skills for a coat-check robot: Identifying and handling the boundary components of clothes. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 3682–3688.

- Li, Y.; Hu, X.; Xu, D.; Yue, Y.; Grinspun, E.; Allen, P.K. Multi-Sensor Surface Analysis for Robotic Ironing. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 5670–5676.

- Yu, W.; Kapusta, A.; Tan, J.; Kemp, C.C.; Turk, G.; Liu, C.K. Haptic Simulation for Robot-Assisted Dressing. In Proceedings of the IEEE International Conference on Robotics and Automation, Singapore, 29 May–3 June 2017; pp. 6044–6051.

- Kruse, D.; Radke, R.J.; Wen, J.T. Collaborative Human-Robot Manipulation of Highly Deformable Materials. In Proceedings of the IEEE International Conference on Robotics and Automation, Seattle, WA, USA, 26–30 May 2015; pp. 3782–3787.

- Yamazaki, K.; Oya, R.; Nagahama, K.; Okada, K.; Inaba, M. Bottom Dressing by a Life-Sized Humanoid Robot Provided Failure Detection and Recovery Functions. In Proceedings of the 2014 IEEE/SICE International Symposium on System Integration, Tokyo, Japan, 13–15 December 2014; pp. 564–570.

- Joshi, R.P.; Koganti, N.; Shibata, T. A framework for robotic clothing assistance by imitation learning. Adv. Robot. 2019, 33, 1156–1174.

- Tamei, T.; Matsubara, T.; Rai, A.; Shibata, T. Reinforcement learning of clothing assistance with a dual-arm robot. In Proceedings of the 2011 11th IEEE-RAS International Conference on Humanoid Robots, Bled, Slovenia, 26–28 October 2011; pp. 733–738.

- Erickson, Z.; Clever, H.M.; Turk, G.; Liu, C.K.; Kemp, C.C. Deep Haptic Model Predictive Control for Robot-Assisted Dressing. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 1–8.

- King, C.H.; Chen, T.L.; Jain, A.; Kemp, C.C. Towards an Assistive Robot That Autonomously Performs Bed Baths for Patient Hygiene. In Proceedings of the IEEE/RSJ 2010 International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 319–324.

- Clothes Perception and Manipulation (CloPeMa)—HOME—CloPeMa—Clothes Perception and Manipulation. Available online: http://clopemaweb.felk.cvut.cz/clothes-perception-and-manipulation-clopema-home/ (accessed on 17 May 2021).

- Balaguer, B.; Carpin, S. Combining Imitation and Reinforcement Learning to Fold Deformable Planar Objects. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 1405–1412.

- Sahari, K.S.M.; Seki, H.; Kamiya, Y.; Hikizu, M. Clothes Manipulation by Robot Grippers with Roller Fingertips. Adv. Robot. 2010, 24, 139–158.

- Taylor, P.M.; Taylor, G.E. Sensory robotic assembly of apparel at Hull University. J. Intell. Robot. Syst. 1992, 6, 81–94.

- Kolluru, R.; Valavanis, K.P.; Steward, A.; Sonnier, M.J. A flat surface robotic gripper for handling limp material. IEEE Robot. Autom. Mag. 1995, 2, 19–26.

- Kolluru, R.; Valavanis, K.P.; Hebert, T.M. A robotic gripper system for limp material manipulation: Modeling, analysis and performance evaluation. In Proceedings of the 1999 IEEE International Conference on Robotics and Automation (Cat. No.99CH36288C), Albuquerque, NM, USA, 25 April 1997; Volume 1, pp. 310–316.

- Hebert, T.; Valavanis, K.; Kolluru, R. A robotic gripper system for limp material manipulation: Hardware and software development and integration. In Proceedings of the 1999 IEEE International Conference on Robotics and Automation (Cat. No.99CH36288C), Albuquerque, NM, USA, 25 April 1997; Volume 1, pp. 15–21.

- Doulgeri, Z.; Fahantidis, N. Picking up flexible pieces out of a bundle. IEEE Robot. Autom. Mag. 2002, 9, 9–19.

- Schrimpf, J.; Wetterwald, L.E. Experiments towards automated sewing with a multi-robot system. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, Saint Paul, MN, USA, 14–18 May 2012; pp. 5258–5263.

- Kelley, R.B. 2D Vision Techniques for the Handling of Limp Materials. In Sensory Robotics for the Handling of Limp Materials; Springer: Berlin/Heidelberg, Germany, 1990; pp. 141–157.

- Triantafyllou, D.; Koustoumpardis, P.; Aspragathos, N. Type independent hierarchical analysis for the recognition of folded garments’ configuration. Intell. Serv. Robot. 2021, 14, 427–444.

- Doumanoglou, A.; Kargakos, A.; Kim, T.-K.; Malassiotis, S. Autonomous active recognition and unfolding of clothes using random decision forests and probabilistic planning. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 987–993.

- Platt, R.; Permenter, F.; Pfeiffer, J. Using Bayesian Filtering to Localize Flexible Materials during Manipulation. IEEE Trans. Robot. 2011, 27, 586–598.

- Koustoumpardis, P.N.; Nastos, K.X.; Aspragathos, N.A. Underactuated 3-Finger Robotic Gripper for Grasping Fabrics. In Proceedings of the 2014 23rd International Conference on Robotics in Alpe-Adria-Danube Region (RAAD), Smolenice, Slovakia, 3–5 September 2014.

- Kondratas, A. Robotic Gripping Device for Garment Handling Operations and Its Adaptive Control. Fibres Text. East. Eur. 2005, 13, 84–89.

- Paraschidis, K.; Fahantidis, N.; Vassiliadis, V.; Petridis, V.; Doulgeri, Z.; Petrou, L.; Hasapis, G. A robotic system for handling textile materials. In Proceedings of the 1995 IEEE International Conference on Robotics and Automation, Nagoya, Japan, 21–27 May 1995; Volume 2, pp. 1769–1774.

- Yamazaki, K.; Inaba, M. Clothing Classification Using Image Features Derived from Clothing Fabrics, Wrinkles and Cloth Overlaps. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 2710–2717.

- Bouman, K.L.; Xiao, B.; Battaglia, P.; Freeman, W.T. Estimating the Material Properties of Fabric from Video. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013.