Artificial intelligence (AI) and machine learning (ML) have improved radically in recent years and are now employed in almost every application domain to develop automated or semi-automated systems. Many of these highly accurate models, however, offer little explainability or interpretability. To make such systems more acceptable to humans, the field of explainable artificial intelligence (XAI) has grown significantly over the last couple of years. In the XAI literature, methods are mostly developed for safety-critical domains worldwide; deep learning and ensemble models are exploited more than other types of AI/ML models; visual explanations are more acceptable to end-users; and robust evaluation metrics are being developed to assess the quality of explanations.

1. Introduction

With the recent developments in artificial intelligence (AI) and machine learning (ML) algorithms, people from various application domains have shown increasing interest in taking advantage of these algorithms. As a result, AI and ML are used today in many application domains. Different AI/ML algorithms are employed to complement humans’ decisions in various tasks from diverse domains, such as education, construction, health care, news and entertainment, travel and hospitality, logistics, manufacturing, law enforcement, and finance [1]. While these algorithms are meant to help users in their daily tasks, they still face acceptability issues. Users often remain doubtful about the proposed decisions. In worse cases, users oppose the decisions of AI/ML models because the inference mechanisms of these models are mostly opaque, unintuitive, and incomprehensible to humans. For example, deep learning (DL) models today demonstrate convincing results with improved accuracy compared to established algorithms. This outstanding performance hides one major drawback: the underlying inference mechanism remains unknown to the user. In other words, DL models function as a black box [2]. In general, almost all prevailing expert systems built with AI/ML models provide no additional information to support the inference mechanism, which makes the systems nontransparent. Thus, it has become essential to investigate how the inference mechanism or the decisions of AI/ML models can be made transparent to humans so that these intelligent systems can become more acceptable to users from different application domains [3].

Upon realising the need to explain AI/ML model-based intelligent systems, a few researchers started exploring and proposing methods long ago. The bibliographic databases contain the earliest published evidence on the association between expert systems and the term

explanation from the mid-eighties [

4]. Over time, the concept evolved to be an immense growing research domain of explainable artificial intelligence (XAI). However, researchers did not pay much attention to XAI until 2017/2018 which can be justified by the trend of publications per year with the keyword

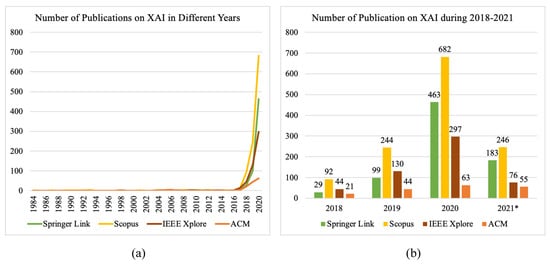

explainable artificial intelligence in titles or abstracts from different bibliographic databases illustrated in

Figure 1a. The increased attention paid by researchers towards XAI from all the domains utilising systems developed with AI/ML models was caused by three major incidents. First of all, the funding of the “Explainable AI (XAI) Program” was funded in early 2017 by the Defense Advanced Research Projects Agency (DARPA) [

5]. After a couple of months in mid-2017, the Chinese government released “The Development Plan for New Generation of Artificial Intelligence” to encourage the high and strong extensibility of AI [

6]. Last but not least, in mid-2018, the European Union granted their citizens a “Right to Explanation” if they were affected by algorithmic decision making by publishing the “General Data Protection Regulation” (GDPR) [

7]. The impact of these events is prominent among the researchers since the search results from the significant bibliographic databases depict a rapidly increasing number of publications related to XAI during recent years (

Figure 1b). The bibliographic databases that were considered to assess the number of publications per year on XAI were found to be the main sources of the research articles from the AI domain.

Figure 1. Number of published articles (y axis) on XAI made available through four bibliographic databases in recent decades (x axis): (a) Trend of the number of publications from 1984 to 2020. (b) Specific number of publications from 2018 to June 2021. The illustrated data were extracted on 1 July 2021 from four renowned bibliographic databases. The asterisk (*) with 2021 refers to the partial data on the number of publications on XAI until June.

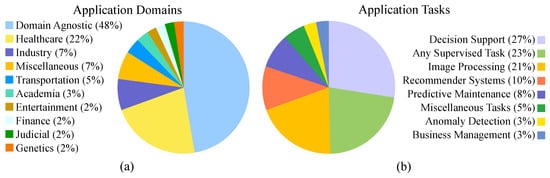

The continuously increasing momentum of publications in the domain of XAI is producing an abundance of knowledge from various perspectives, e.g., philosophy, taxonomy, and development. However, this knowledge is scattered, and closely related taxonomies are used interchangeably, so the field calls for organisation and clearly defined boundaries through a systematic literature review (SLR), which provides a structured procedure for conducting the review with provisions for assessing the outcome against a predefined goal. Figure 2 presents the distribution of articles on XAI methods across application domains and tasks. Figure 2a shows that most XAI methods today are developed to be domain-agnostic. However, the most influential use of XAI is in the healthcare domain, possibly because of the demand for explanations from the end-user perspective. In many application domains, AI and ML methods are used in decision support systems, and the need for XAI is high for decision support tasks, as can be seen in Figure 2b. Despite the increasing number of publications, some challenges have not yet been addressed, for example, user-centric explanations and explanations that incorporate domain knowledge.

Figure 2. Percentage of the selected articles on different XAI methods for different application (a) domains and (b) tasks.

2. Theoretical Background

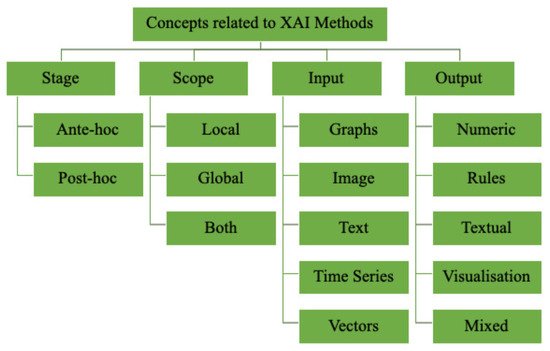

Figure 3 summarises the prime concepts behind developing XAI applications, which were adopted from the recent review studies by Vilone and Longo [8,9].

Figure 3. Overview of the different concepts on developing methodologies for XAI, adapted from the review studies by Vilone and Longo [8,9].

2.1. Stage of Explainability

The AI/ML models learn the fundamental characteristics of the supplied data and subsequently try to cluster, predict or classify unseen data. The stage of explainability refers to the point in this process at which a model generates the explanation for the decision it provides. According to Vilone and Longo, the stages are ante hoc and post hoc [8,9]. Brief descriptions of the stages are as follows:

- Ante hoc methods generally generate the explanation for the decision from the very beginning of training on the data while aiming to achieve optimal performance. These methods mostly generate explanations for transparent models, such as fuzzy models and tree-based models;

- Post hoc methods comprise an external or surrogate model and the base model. The base model remains unchanged, and the external model mimics the base model’s behaviour to generate an explanation for the users. Generally, these methods are associated with models whose inference mechanism remains unknown to users, e.g., support vector machines and neural networks. Post hoc methods are further divided into two categories: model-agnostic and model-specific. Model-agnostic methods apply to any AI/ML model, whereas model-specific methods are confined to particular models.
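The post hoc, model-agnostic idea can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: `black_box` is a hypothetical stand-in for an opaque base model, and the surrogate is a one-feature threshold rule (a decision stump) chosen only because it is easy to read. The surrogate is fitted to the base model's predictions, and its fidelity is the fraction of those predictions it reproduces.

```python
import random

def black_box(x):
    """Hypothetical opaque base model (stands in for e.g. a neural network)."""
    return 1 if 0.8 * x[0] + 0.2 * x[1] ** 2 > 0.5 else 0

def fit_stump(X, y):
    """Fit a one-feature threshold rule that best reproduces the labels y.

    Returns (accuracy, feature index, threshold, label predicted above threshold).
    """
    best = None
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            for above in (0, 1):
                preds = [above if row[j] > t else 1 - above for row in X]
                acc = sum(p == yi for p, yi in zip(preds, y)) / len(y)
                if best is None or acc > best[0]:
                    best = (acc, j, t, above)
    return best

rng = random.Random(0)
X = [[rng.random(), rng.random()] for _ in range(200)]
y_bb = [black_box(x) for x in X]  # the base model is only queried, never modified

# Fidelity is measured on the same samples used to fit the surrogate.
fidelity, feature, threshold, above = fit_stump(X, y_bb)
print(f"surrogate rule: predict {above} if x[{feature}] > {threshold:.2f}, else {1 - above}")
print(f"fidelity to the black box: {fidelity:.2f}")
```

Because the surrogate only calls `black_box` as a function, the same procedure would work for any base model, which is what makes the sketch model-agnostic. A model-specific method would instead rely on internals of one model family.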

2.2. Scope of Explainability

The scope of explainability defines the extent of an explanation produced by an explainable method. Two recent literature studies covering more than 200 scientific articles published on XAI deduced that the scope of explainability can be either global or local [8,9]. With a global scope, the whole inferential technique of a model is made transparent or comprehensible to the user, as with a decision tree. An explanation with a local scope, on the other hand, explicitly explains a single instance of inference to the user; for a decision tree, a single branch can be termed a local explanation.
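The decision-tree example can be made concrete with a small sketch. The tree, its feature names and its thresholds are hypothetical and hand-written for illustration only. The global explanation lists every root-to-leaf rule, while the local explanation returns just the branch followed by one instance.

```python
# Hypothetical hand-written decision tree; features and thresholds are invented.
tree = {
    "feature": "age", "threshold": 40,
    "left": {"leaf": "low risk"},
    "right": {
        "feature": "blood_pressure", "threshold": 140,
        "left": {"leaf": "medium risk"},
        "right": {"leaf": "high risk"},
    },
}

def global_explanation(node, conditions=()):
    """Global scope: return every root-to-leaf rule of the tree."""
    if "leaf" in node:
        return [(list(conditions), node["leaf"])]
    f, t = node["feature"], node["threshold"]
    return (global_explanation(node["left"], conditions + (f"{f} <= {t}",))
            + global_explanation(node["right"], conditions + (f"{f} > {t}",)))

def local_explanation(node, instance):
    """Local scope: return only the branch that this one instance follows."""
    conditions = []
    while "leaf" not in node:
        f, t = node["feature"], node["threshold"]
        if instance[f] <= t:
            conditions.append(f"{f} <= {t}")
            node = node["left"]
        else:
            conditions.append(f"{f} > {t}")
            node = node["right"]
    return conditions, node["leaf"]

for rule, outcome in global_explanation(tree):
    print(" AND ".join(rule), "=>", outcome)

patient = {"age": 55, "blood_pressure": 150}
print(local_explanation(tree, patient))
```

The local explanation is always one of the rules in the global explanation, which is why a transparent model such as a tree can serve both scopes.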

2.3. Input and Output

Along with the core concepts, stages and scopes of explainability, input and output formats were also found to be significant in developing XAI methods [2,8,9]. The mechanisms of explainable models unquestionably differ when learning different input data types, such as images, numbers, and texts. In addition to these basic forms of input, several others are utilised in different studies, which are discussed in detail in Section 5.3.1. Finally, the prime concern of XAI, the output format or form of explanation, varies according to the solution to the prior problems. The forms of explanation also vary with the circumstances and expertise of the end-users. The most common forms of explanations are numeric, rules, textual, visual and mixed.

3. Related Studies

During the past couple of years, research on developing the theories, methodologies and tools of XAI has been very active, and the popularity of XAI as a research domain has continued to increase. Before XAI attracted massive attention from researchers, the earliest review that could be found in the literature was that by Lacave and Díez [10]. They reviewed the then-prevailing explanation methods specifically for Bayesian networks. In the article, the authors referred to the level and methods of explanations followed by several techniques that were mostly probabilistic. Later, Ribeiro et al. reviewed interpretable models, such as additive models, decision trees, attention-based networks, and sparse linear models, that had been suggested as a solution to the problem of adding explainability to AI/ML models [11]. Subsequently, they proposed a model-agnostic technique that involves developing an interpretable model from the predictions of a black-box model and perturbing the inputs to observe how the black-box model reacts [12].
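A perturbation-based local surrogate of this kind can be sketched as follows. This is a simplified illustration under stated assumptions, not the implementation from [12]: `black_box` is a hypothetical model, the Gaussian perturbations, the proximity kernel and its `width` are arbitrary choices, and the interpretable model is a weighted linear fit solved by hand.

```python
import math
import random

def black_box(x):
    """Hypothetical opaque model returning a score in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-(3.0 * x[0] - 2.0 * x[1] ** 2)))

def solve(A, b):
    """Solve A w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    w = [0.0] * n
    for r in reversed(range(n)):
        w[r] = (M[r][n] - sum(M[r][k] * w[k] for k in range(r + 1, n))) / M[r][r]
    return w

def explain_locally(model, x0, n_samples=500, scale=0.3, width=0.5, seed=0):
    """Fit a proximity-weighted linear model around x0 from perturbed queries."""
    rng = random.Random(seed)
    d = len(x0)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x0]      # perturbed input
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x0))
        rows.append([1.0] + [zi - xi for zi, xi in zip(z, x0)])
        targets.append(model(z))                           # black-box reaction
        weights.append(math.exp(-dist2 / width ** 2))      # nearer samples count more
    # Weighted least squares via the normal equations.
    A = [[sum(w * r[i] * r[j] for w, r in zip(weights, rows)) for j in range(d + 1)]
         for i in range(d + 1)]
    b = [sum(w * r[i] * t for w, r, t in zip(weights, rows, targets))
         for i in range(d + 1)]
    coef = solve(A, b)
    return coef[0], coef[1:]  # local prediction at x0, per-feature slopes

x0 = [0.5, 1.0]
intercept, slopes = explain_locally(black_box, x0)
print("local prediction:", round(intercept, 3))
print("feature effects near x0:", [round(s, 3) for s in slopes])
```

The signs and sizes of `slopes` indicate how each feature pushes the score near `x0` only. A different instance can yield a different explanation, which is the sense in which the explanation is local.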

With the remarkable implications of GDPR, an enormous number of works have been published in recent years. The initial works covered the notion of explainability and its use from different points of view. Alonso et al. accumulated bibliometric information on the XAI domain to understand the research trends, identify the potential research groups and locations, and discover possible research directions [13]. Gobel et al. discussed older concepts and linked them to newer concepts such as deep learning [14]. Black-box models were compared with white-box models based on their advantages and disadvantages from a practical point of view [3]. Additionally, survey articles were published advocating that explainable models replace black-box models for high-stakes decision-making tasks [1,15]. Surveys were also conducted on the methods of explainability, addressing the philosophy behind their usage from the perspective of different domains [16,17,18] and stakeholders [19]. Some works included specific definitions of technical terms, possible applications, and challenges towards attaining responsible AI [6,20,21].

This entry is adapted from the peer-reviewed paper 10.3390/app12031353