Augmented Reality (AR) applications have become prevalent within smart industry manufacturing and wider popular culture sectors over the last decade, yet the use of AR technology within an agricultural setting is still in its infancy.

- augmented reality

- agriculture

- precision farming

1. Introduction

Augmented Reality (AR) applications have become prevalent within smart industry manufacturing [1] and wider popular culture sectors over the last decade [2]. This is largely due to the growing accessibility of game engine-based Software Development Kits (SDKs) (e.g., Vuforia [3], Aryzon [4], Zappar [5]) and the affordability of hardware devices for content display. Several libraries (e.g., AR.js [6]) now also cater for web-based development. AR is now firmly established within many smart city application domains, with smart production line engineering [7], preventative maintenance [8] and education-based applications in particular benefitting from the lower barrier to entry for AR development.

Within agriculture-based research, digital visualisation technologies are contributing to the betterment of both precision livestock [9] and crop farming by increasing efficiency and reducing supervisory costs [10]. It is within this domain that AR finds an essential use in an agricultural setting. The reason AR is developing a particular role, more so than related optical technologies such as Virtual Reality (VR), is that the physical world is enhanced by 3D assets or projected data insights, meaning the user’s interaction with the physical environment is not inhibited but rather upgraded through an extension of reality. For example, as Huuskonen et al. demonstrate, AR can provide a way-finding process to guide farmers during traditionally laborious soil sampling [11]. Gains are also achieved by overlaying visualisations of simulated crop growth models [12], and by projecting data onto real-world objects for real-time decision support, such as insect or disease species identification. In all examples, the virtual assets seamlessly co-exist with the visual perception of the real world [13].

It is clear that AR is displaying potential for the enrichment of both crop and livestock management processes within a precision farming setting [10]. However, even though many works specify the benefits AR offers within agriculture [11,14,15,16], it must be emphasised that the advantages of AR for precision farming rely on a symbiotic relationship with other core smart city-based technologies, such as machine learning or GPS integration, to provide a site-specific management service.

Both AR software and hardware face unique requirements when used within an agriculture-based setting compared with other sectors, such as entertainment or manufacturing. The role of the farmer is practical in nature, meaning that AR users will tend to work long hours and in remote locations [17]. Therefore, head-mounted displays or hand-held devices should be lightweight and weather resistant, should not restrict movement, and should be capable of running multiple applications on the same hardware.

2. AR for Crop and Livestock Management

The basis of the precision farming concept within crop management is the achievement of higher production using fewer resources through technological enhancements, by supporting management spatially and temporally through industry 4.0 technologies [21,24]. This may include working with existing equipment, such as human-driven machines, but adding remote sensing or data collection methods that provide data-driven insight, unlocking an optimisation of resources or a layer of automation [9]. More advanced smart city technological solutions, such as drones and digital twin technologies, further enhance solutions by providing autonomous services using intelligent data insights. A comprehensive range, including yield mapping, parallel running of machinery and automated soil sampling, is provided by Klepacki et al. [25]. However, there are added values aside from increased agricultural output, namely better operational economics, reliability and general insight into the systematic operation of the farm as a whole. Notably, as demonstrated in this article, AR has the flexibility to be deployed comfortably within each precision farming setting. By coupling with existing equipment, working in parallel with the diverse industry 4.0 applications, and integrating into new autonomous machine solutions, AR is able to synergise virtual objects with the existing physical world. This makes the technology particularly suited to practical crop farming, as discussed in the following examples.

Yet, in order for AR to fulfil a data visualisation function, integration is required with other services, namely a platform capable of autonomously controlling machines when in the field. This is also the case in [12], where AR provides a visual outlet for the integration of other industry 4.0 digital solutions such as sensors, network infrastructure, GPRS, Wi-Fi, compass, crop information, a decision layer for crop image detection and database matching technological services [12,26].

Within crop farming, AR’s adaptability suits a widespread distribution model. Relevant and timely information is a powerful tool for farmers, even more so when the data is integrated with the real world. For instance, smartphones, which have become a highly accessible technology (and provide network access when working in remote locations), mean that widespread deployment is possible. AR can be positioned successfully where more immersion-heavy solutions, such as VR, may not prevail.

Regarding livestock management, precision farming principles are based on the production and management of animals, driven through sensors and data services. In this setting, precision livestock farming concerns the implementation of technologies to enable real-time monitoring for a per-animal approach. Demand and consumption of animal products are projected to increase in the coming years [28]. As a result, less manpower is available to manage larger numbers of animals, making individual management and subject identification a difficult task. The implementation of technological tools, such as radio frequency identification devices (RFID) and livestock monitoring systems (LMS), offers solutions for this per-animal identification and management concept [13,16]. However, such an implementation is limited without a visual or interactive outlet for the information. As with the crop management applications, AR opens up the possibility of a real-time data-driven display of individual animals in the farmer’s view, both in dynamic scenarios (e.g., grazing cows) and more static scenarios (e.g., lactating cows). For example, Maria et al. [16] showcase the advantages of using AR through smart glasses to retrieve information (feeding, milking, breeding, health, etc.) per subject in real time. The technology provides a window onto the remote sensor data at a per-animal level. A further example is presented by Zhao et al., who developed a mobile application to locate (track) and manage large numbers of cattle in extensive areas [13]. The application combines GPS measurements and computer vision algorithms to deliver information on cow locations in the field.
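
The per-animal retrieval described above can be illustrated with a minimal Python sketch. It assumes that a tag identifier has already been read (for instance from an RFID reader or a scanned marker) and that records are held in a simple in-memory dictionary. The record fields, tag identifiers and function names are hypothetical and are not taken from the systems in [13,16].

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class AnimalRecord:
    """Hypothetical per-animal record; the fields are illustrative only."""
    tag_id: str
    last_milking_litres: float
    feed_kg_today: float
    health_note: str


# Hypothetical herd database keyed by tag identifier (assumed data).
HERD: Dict[str, AnimalRecord] = {
    "TAG-0001": AnimalRecord("TAG-0001", 12.5, 21.0, "healthy"),
    "TAG-0002": AnimalRecord("TAG-0002", 9.8, 18.4, "check left hind hoof"),
}


def overlay_text(tag_id: str, herd: Dict[str, AnimalRecord] = HERD) -> str:
    """Build the one-line label an AR view could draw next to an animal."""
    record = herd.get(tag_id)
    if record is None:
        # Unknown tags still yield a label so the user sees the failed lookup.
        return f"{tag_id}: no record"
    return (
        f"{record.tag_id} | milk {record.last_milking_litres:.1f} L | "
        f"feed {record.feed_kg_today:.1f} kg | {record.health_note}"
    )


if __name__ == "__main__":
    print(overlay_text("TAG-0001"))
    print(overlay_text("TAG-9999"))
```

In a deployed system the dictionary would likely be replaced by a query to a livestock monitoring service, with the AR layer only responsible for drawing the returned label.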

3. AR Types and Coupled Technologies

AR has flexibility within different farming contexts. While it is clear that AR is employed as a means to communicate information visually, it is the integration of AR with other technologies that enables the tailored functionality for precision farming and the visual-based modality [10,31]. The coupled technologies used are diverse, but core commonalities are present, such as the use of online databases, machine learning algorithms and sensor/IoT connectivity (e.g., humidity, temperature and weather sensors). The choice of technologies depends on the context and domain in which the application will be used, with both foundational differences and overlaps between the two application areas of crop farming and livestock management.

Whilst each AR application addresses a specific need (often unique to its deployment context), so that aspects notable for one domain may not be relevant for the other, AR technologies do share common requirements. For example, QR codes can facilitate management tasks in environments where movement is confined to a small area, such as indoor dairy farming or greenhouse management [16]. However, when dealing with a more dynamic scenario, for instance livestock dispersed over a wide area or large-field crop management, AR deployments may combine GPS and computer vision algorithms, as demonstrated in [13].

Yet one consideration is apparent in all deployment scenarios: location is key. Whether it is a local coordinate system enabled by cameras, a geographic coordinate system using GPS sensors [15], or a hybrid approach [13], applications are only able to properly convey information through AR with an appropriate level of location awareness and suitable coupling technologies to enable the process.
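
To illustrate how a geographic coordinate system can be related to a local one, the following Python sketch converts a GPS fix into east/north offsets in metres from a chosen origin, such as a field corner. It is a minimal sketch that assumes a spherical Earth and a flat-earth (equirectangular) approximation, which is only reasonable over short distances. The function name and the sample coordinates are hypothetical and are not drawn from [13] or [15].

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical approximation


def geo_to_local(lat_deg: float, lon_deg: float,
                 origin_lat_deg: float, origin_lon_deg: float) -> tuple:
    """Return (east_m, north_m) of a GPS fix relative to a local origin.

    Uses an equirectangular approximation, so accuracy degrades as the
    distance from the origin grows.
    """
    origin_lat = math.radians(origin_lat_deg)
    d_lat = math.radians(lat_deg - origin_lat_deg)
    d_lon = math.radians(lon_deg - origin_lon_deg)
    # Longitude lines converge towards the poles, hence the cosine factor.
    east_m = EARTH_RADIUS_M * d_lon * math.cos(origin_lat)
    north_m = EARTH_RADIUS_M * d_lat
    return east_m, north_m


if __name__ == "__main__":
    # Assumed sample values: a point 0.001 degrees north of the origin.
    east, north = geo_to_local(52.001, 5.0, 52.0, 5.0)
    print(f"east = {east:.1f} m, north = {north:.1f} m")
```

A production system would more likely rely on a geodesy library and a proper map projection; the approximation is used here only to keep the example self-contained.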

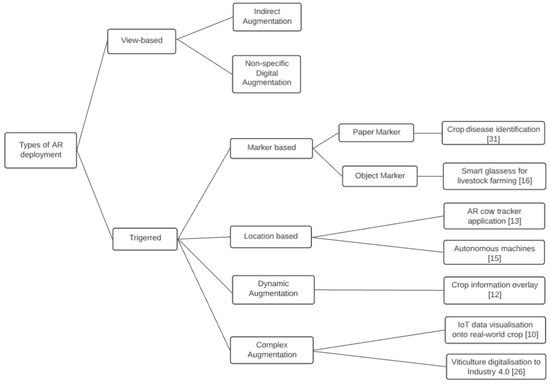

The type of coupled technology provides insights into the nature of the application for precision farming. In that regard, it is possible to classify the applications into specific AR types. Edwards-Stewart et al., for example, categorize AR applications based on their functional characteristics and propose two main categories: (A) triggered and (B) view-based AR applications [32]. Triggered AR applications, as the name suggests, need to be triggered by a stimulus to deliver the augmentation of reality. In contrast, view-based applications can augment content without the need for a reference in the view.

Triggered applications can be of four types: (1) marker-based, (2) marker-less (location-based), (3) dynamic augmentation and (4) complex augmentation. Marker-based applications use a QR code pattern to initiate the AR projection. Marker-less applications do not require a QR code, and in some instances the user is required to tap on the screen to create the projection at their desired location. The latter two types (dynamic and complex augmentation) are specific types of marker-less AR applications. Figure 1 displays an overview of the reviewed works in Section 2.1 divided by AR type, based on the four classifications in [32], to provide an example of the triggered applications currently deployed within an agriculture setting.

Figure 1. Types of AR deployment within crop and livestock management, adapted from the model in [32].

Coupled technologies are facilitators for AR. For example, without GPS to facilitate location tracking [15], it would not be possible to create a marker-less AR approach. Similarly, without the combination of more than one coupled technology, higher location accuracy might be unattainable, which would affect the success of the applications in [10,12,14,16,26]. For marker-less applications, position is often not the only factor involved in the correct overlay of data. For example, the multirobot system developed by Huuskonen et al. requires information on the orientation of the AR headset used by the driver to correctly overlay objects in the real world [11,15]. The accuracy of the internal headset orientation sensor was, however, not sufficient for this application; therefore, an extra sensor was introduced into the framework.
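
The dependence of a marker-less overlay on both position and orientation can be sketched as follows. The Python example takes a target's east/north offset from the user, computes its compass bearing, and compares that bearing with the device heading to decide whether and where the target falls in the horizontal field of view. This is a simplified illustration and not the method of [11,15]: the function names, the 60 degree field of view, the 1280 pixel display width and the linear angle-to-pixel mapping are all assumptions.

```python
import math
from typing import Optional


def bearing_deg(east_m: float, north_m: float) -> float:
    """Compass bearing from user to target (0 = north, 90 = east)."""
    return math.degrees(math.atan2(east_m, north_m)) % 360.0


def relative_angle_deg(bearing: float, heading: float) -> float:
    """Signed angle of the target from the view direction, in [-180, 180).

    Positive values mean the target lies to the right of the view centre.
    """
    return (bearing - heading + 180.0) % 360.0 - 180.0


def screen_x(bearing: float, heading: float,
             fov_deg: float = 60.0, width_px: int = 1280) -> Optional[int]:
    """Horizontal pixel position of the overlay, or None if out of view.

    Assumes a linear mapping from angle to pixels, which ignores lens
    projection and is only a rough approximation.
    """
    rel = relative_angle_deg(bearing, heading)
    if abs(rel) > fov_deg / 2.0:
        return None
    return round((rel / fov_deg + 0.5) * width_px)


if __name__ == "__main__":
    target_bearing = bearing_deg(east_m=10.0, north_m=0.0)  # due east
    print(screen_x(target_bearing, heading=90.0))  # facing the target
    print(screen_x(target_bearing, heading=0.0))   # facing north
```

Because the overlay position depends directly on the heading value, any error in the orientation sensor shifts the overlay on screen, which is consistent with the need for an additional sensor reported above.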

This entry is adapted from the peer-reviewed paper 10.3390/smartcities4040077
