Modern navigation aids for the visually impaired are an applied science for people with special needs. They are a key sociotechnical aid that helps users navigate independently and access needed resources indoors and outdoors.

- assistive navigation aids

- visual impairment

1. Introduction

Visual impairment refers to the congenital or acquired impairment of visual function, resulting in decreased visual acuity or an impaired visual field. According to the World Health Organization, approximately 188.5 million people worldwide have mild visual impairment, 217 million have moderate to severe visual impairment, and 36 million are blind, with the number of blind people estimated to reach 114.6 million by 2050 [1]. In daily life, it is challenging for people with visual impairments (PVI) to travel, especially in unfamiliar places. Although there have been remarkable efforts worldwide toward barrier-free infrastructure and ubiquitous services, people with visual impairments still have to rely on relatives or personal travel aids to navigate in most cases. In the post-epidemic era, independent living and independent travel have grown in importance, since people have to maintain social distance from each other. Research has therefore consistently concentrated on coupling technology and tools with human-centric design to extend the guidance capabilities of navigation aids.

CiteSpace is a bibliometric analysis tool with a graphical user interface (GUI), developed by Chen [2]. It has been widely adopted to analyze co-occurrence networks with rich elements, including authors, keywords, institutions, countries, and subject categories, as well as cited authors, cited literature, and citation networks of cited journals [3][4]. It has been applied to analyze research features and trends in information science, regenerative medicine, lifecycle assessment, and other active research fields. Burst detection, betweenness centrality, and heterogeneous networks are the three core concepts of CiteSpace; they help to identify research frontiers, influential keywords, and emerging trends, along with sudden changes over time [3]. The visual knowledge graph derived by CiteSpace consists of nodes and relational links. In this graph, the size of a node indicates the co-occurrence frequency of an element, the thickness and color of its rings indicate the time slices in which the element co-occurs, and the thickness of a link between two nodes indicates how strongly the two elements co-occur [5]. Additionally, a purple outer ring represents the centrality of an element: the thicker the purple ring, the stronger the centrality. Nodes with high centrality are usually regarded as turning points or pivotal points in the field [6].

In this work, we answer the following questions: What are the most influential publication sources? Who are the most active and influential authors? What are their research interests and primary contributions to society? What are the featured key studies in the field? What are the most popular topics and research trends, as described by keywords? Moreover, we closely investigate milestone works that use different multisensor fusion methods, which help to illustrate machine perception, intelligence, and human-machine interaction in renowned cases and reveal how frontier technologies influence PVI navigation aids. By conducting narrative studies on representative works with unique multisensor combinations or representative multimodal interaction mechanisms, we aim to inform upcoming researchers by illustrating the state-of-the-art multimodal trials conducted by their predecessors.
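
To make the co-occurrence and betweenness centrality notions described for CiteSpace more concrete, the following is a minimal Python sketch. It is not CiteSpace's implementation: the keyword lists in `records` are hypothetical illustration data rather than results from this study, and the function names `cooccurrence` and `betweenness` are our own. Centrality is computed with Brandes' algorithm on an unweighted, undirected keyword graph, which is one common way to obtain the quantity.

```python
from collections import Counter, deque
from itertools import combinations


def cooccurrence(records):
    """Count how often each unordered keyword pair appears in the same record."""
    pairs = Counter()
    for keywords in records:
        for a, b in combinations(sorted(set(keywords)), 2):
            pairs[(a, b)] += 1
    return pairs


def betweenness(pairs):
    """Normalized betweenness centrality (Brandes) on the keyword graph."""
    adj = {}
    for a, b in pairs:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    score = dict.fromkeys(adj, 0.0)
    for s in adj:
        # Breadth-first search from s: count shortest paths and predecessors.
        dist = {s: 0}
        sigma = dict.fromkeys(adj, 0)
        sigma[s] = 1
        preds = {v: [] for v in adj}
        order = []
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adj[v]:
                if w not in dist:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Walk back from the farthest nodes, accumulating path dependencies.
        delta = dict.fromkeys(adj, 0.0)
        for w in reversed(order):
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                score[w] += delta[w]
    n = len(adj)
    # Each undirected pair is visited from both endpoints, so the raw sum is
    # twice the pair count; dividing by (n - 1)(n - 2) normalizes to [0, 1].
    norm = (n - 1) * (n - 2) if n > 2 else 1
    return {v: c / norm for v, c in score.items()}


# Hypothetical keyword lists, one per publication (illustration only).
records = [
    ["navigation", "ultrasonic", "obstacle avoidance"],
    ["navigation", "RGB-D", "obstacle avoidance"],
    ["navigation", "smartphone", "BLE beacon"],
    ["smartphone", "haptic feedback"],
]

pairs = cooccurrence(records)
centrality = betweenness(pairs)
for keyword, value in sorted(centrality.items(), key=lambda kv: -kv[1]):
    print(f"{keyword}: {value:.3f}")
```

In this toy data, "navigation" bridges the sensing keywords and the smartphone-related keywords, so it receives the highest centrality; this is the kind of node that would be drawn with a thick purple ring and read as a pivotal point.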

2. Narrative Study of Navigation Aids for PVI

| Year | Title of Reference |
|---|---|
| 2012 | NAVIG: augmented reality guidance system for the visually impaired [7] |
| 2012 | An indoor navigation system for the visually impaired [8] |
| 2013 | Multichannel ultrasonic range finder for blind people navigation [9] |
| 2013 | New indoor navigation system for visually impaired people using visible light communication [10] |
| 2013 | Blind navigation assistance for visually impaired based on local depth hypothesis from a single image [11] |
| 2013 | A system-prototype representing 3D space via alternative-sensing for visually impaired navigation [12] |
| 2014 | Navigation assistance for the visually impaired using RGB-D sensor with range expansion [13] |
| 2015 | Design, implementation and evaluation of an indoor navigation system for visually impaired people [14] |
| 2015 | An assistive navigation framework for the visually impaired [15] |
| 2016 | NavCog: turn-by-turn smartphone navigation assistant for people with visual impairments or blindness [16] |
| 2016 | ISANA: wearable context-aware indoor assistive navigation with obstacle avoidance for the blind [17] |
| 2018 | PERCEPT navigation for visually impaired in large transportation hubs [18] |
| 2018 | Safe local navigation for visually impaired users with a time-of-flight and haptic feedback device [19] |
| 2019 | An astute assistive device for mobility and object recognition for visually impaired people [20] |
| 2019 | Wearable travel aid for environment perception and navigation of visually impaired people [21] |
| 2019 | An ARCore based user centric assistive navigation system for visually impaired people [22] |
| 2020 | Integrating wearable haptics and obstacle avoidance for the visually impaired in indoor navigation: A user-centered approach [23] |
| 2020 | ASSIST: Evaluating the usability and performance of an indoor navigation assistant for blind and visually impaired people [24] |
| 2020 | V-eye: A vision-based navigation system for the visually impaired [25] |

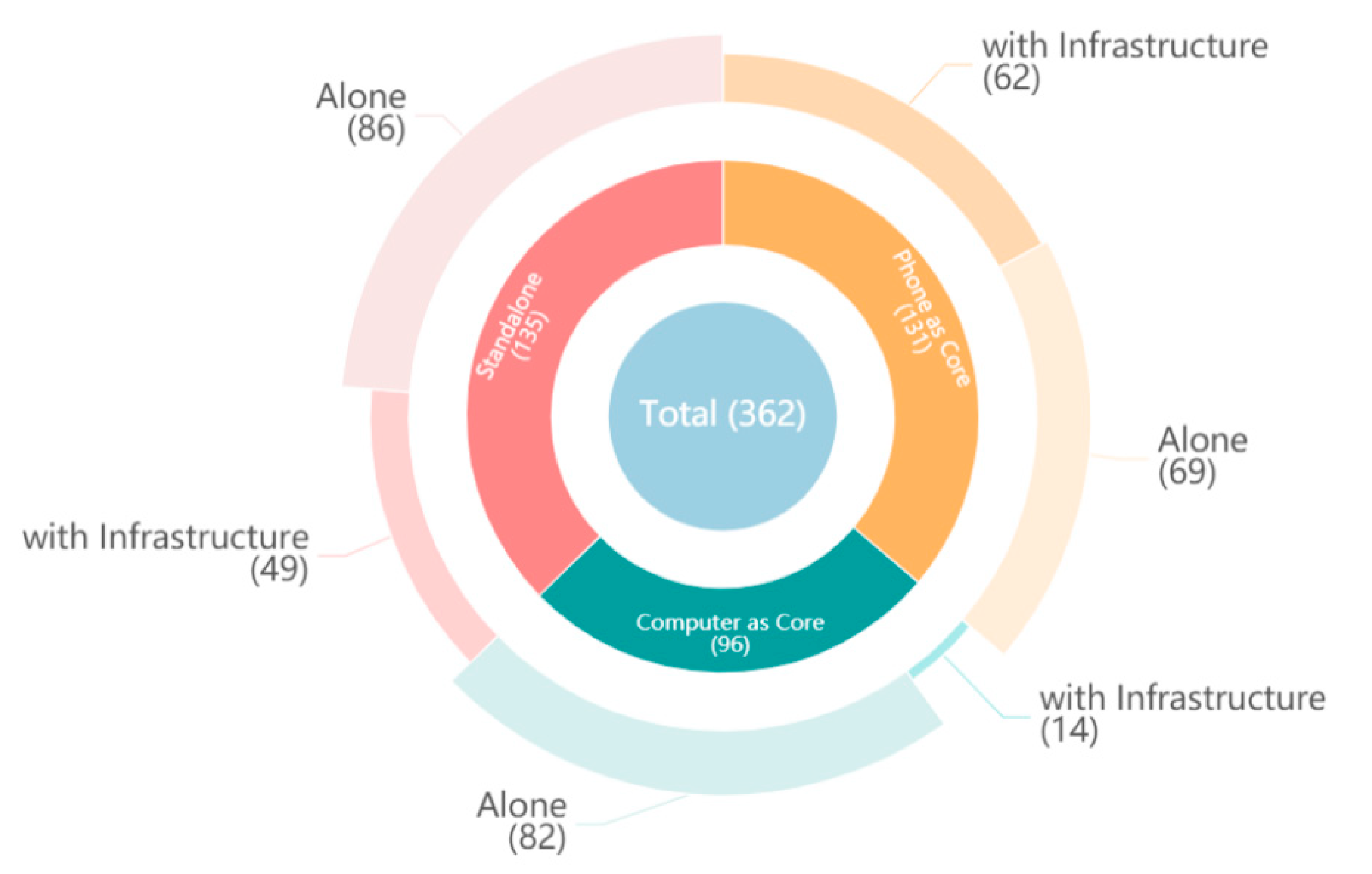

2.1. Hardware Composition

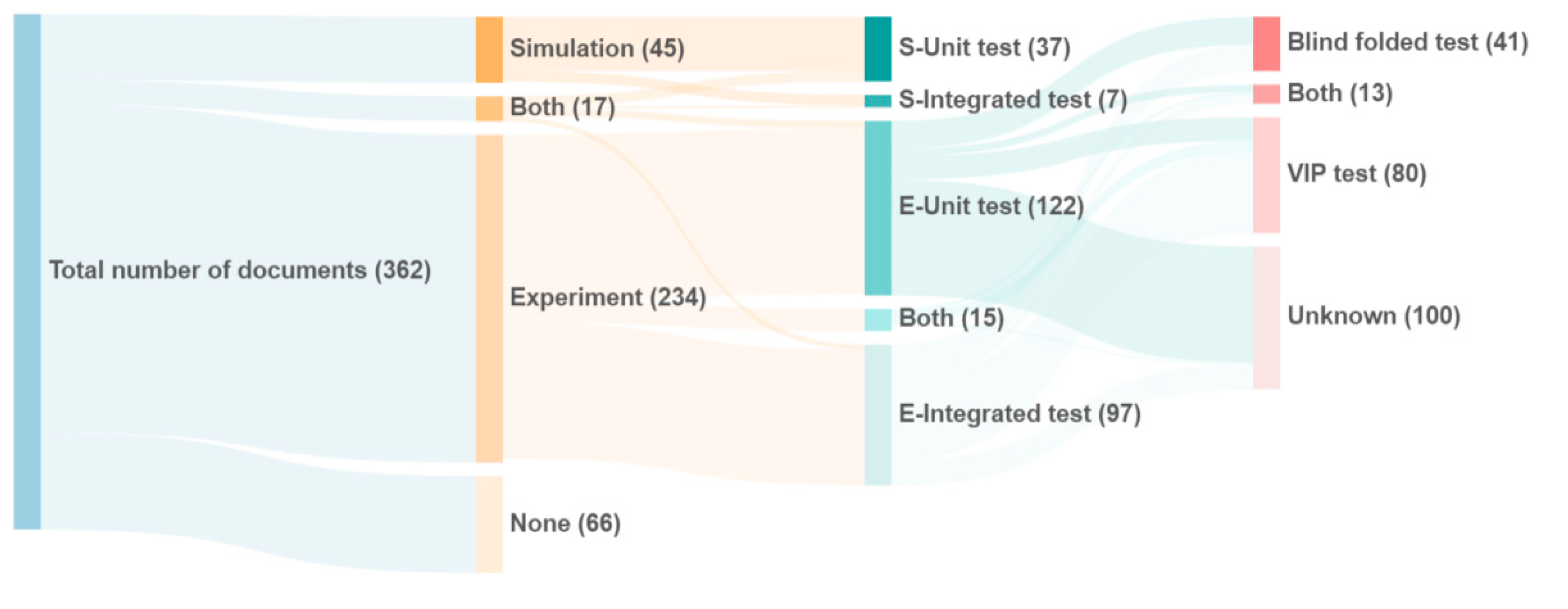

2.2. Validation Methods

3. Conclusions

This entry is adapted from the peer-reviewed paper 10.3390/su13168795

References

- Bourne, R.R.; Flaxman, S.R.; Braithwaite, T.; Cicinelli, M.V.; Das, A.; Jonas, J.B.; Keeffe, J.; Kempen, J.H.; Leasher, J.; Limburg, H.; et al. Magnitude, temporal trends, and projections of the global prevalence of blindness and distance and near vision impairment: A systematic review and meta-analysis. Lancet Glob. Health 2017, 5, e888–e897.

- Ping, Q.; He, J.; Chen, C. How many ways to use CiteSpace? A study of user interactive events over 14 months. J. Assoc. Inf. Sci. Technol. 2017, 68, 1234–1256.

- Chen, C. CiteSpace II: Detecting and visualizing emerging trends and transient patterns in scientific literature. J. Am. Soc. Inf. Sci. Technol. 2006, 57, 359–377.

- Liu, Z.; Yin, Y.; Liu, W.; Dunford, M. Visualizing the intellectual structure and evolution of innovation systems research: A bibliometric analysis. Scientometrics 2015, 103, 135–158.

- Chen, C.; Hu, Z.; Liu, S.; Tseng, H. Emerging trends in regenerative medicine: A scientometric analysis in CiteSpace. Expert Opin. Biol. Ther. 2012, 12, 593–608.

- Chen, C. The centrality of pivotal points in the evolution of scientific networks. In Proceedings of the 10th International Conference on Intelligent User Interfaces, San Diego, CA, USA, 10–13 January 2005; pp. 98–105.

- Katz, B.F.G.; Kammoun, S.; Parseihian, G.; Gutierrez, O.; Brilhault, A.; Auvray, M.; Truillet, P.; Denis, M.; Thorpe, S.; Jouffrais, C. NAVIG: Augmented reality guidance system for the visually impaired. Virtual Real. 2012, 16, 253–269.

- Guerrero, L.A.; Vasquez, F.; Ochoa, S.F. An indoor navigation system for the visually impaired. Sensors 2012, 12, 8236–8258.

- Gelmuda, W.; Kos, A. Multichannel ultrasonic range finder for blind people navigation. Bull. Pol. Acad. Sci. 2013, 61, 633–637.

- Nakajima, M.; Haruyama, S. New indoor navigation system for visually impaired people using visible light communication. EURASIP J. Wirel. Commun. Netw. 2013, 2013, 37.

- Praveen, R.G.; Paily, R.P. Blind navigation assistance for visually impaired based on local depth hypothesis from a single image. Procedia Eng. 2013, 64, 351–360.

- Bourbakis, N.; Makrogiannis, S.K.; Dakopoulos, D. A system-prototype representing 3D space via alternative-sensing for visually impaired navigation. IEEE Sens. J. 2013, 13, 2535–2547.

- Aladren, A.; López-Nicolás, G.; Puig, L.; Guerrero, J.J. Navigation assistance for the visually impaired using RGB-D sensor with range expansion. IEEE Syst. J. 2014, 10, 922–932.

- Martinez-Sala, A.S.; Losilla, F.; Sánchez-Aarnoutse, J.C.; García-Haro, J. Design, implementation and evaluation of an indoor navigation system for visually impaired people. Sensors 2015, 15, 32168–32187.

- Xiao, J.; Joseph, S.L.; Zhang, X.; Li, B.; Li, X.; Zhang, J. An assistive navigation framework for the visually impaired. IEEE Trans. Hum.-Mach. Syst. 2015, 45, 635–640.

- Ahmetovic, D.; Gleason, C.; Kitani, K.M.; Takagi, H.; Asakawa, C. NavCog: Turn-by-turn smartphone navigation assistant for people with visual impairments or blindness. In Proceedings of the 13th Web for All Conference, Montreal, QC, Canada, 11–13 April 2016; pp. 1–2.

- Li, B.; Munoz, J.P.; Rong, X.; Xiao, J.; Tian, Y.; Arditi, A. ISANA: Wearable context-aware indoor assistive navigation with obstacle avoidance for the blind. In European Conference on Computer Vision; Springer: Berlin, Germany, 2016; pp. 448–462.

- Ganz, A.; Schafer, J.; Tao, Y.; Yang, Z.; Sanderson, C.; Haile, L. PERCEPT navigation for visually impaired in large transportation hubs. J. Technol. Pers. Disabil. 2018, 6, 336–353.

- Katzschmann, R.K.; Araki, B.; Rus, D. Safe local navigation for visually impaired users with a time-of-flight and haptic feedback device. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 583–593.

- Meshram, V.V.; Patil, K.; Meshram, V.A.; Shu, F.C. An astute assistive device for mobility and object recognition for visually impaired people. IEEE Trans. Hum.-Mach. Syst. 2019, 49, 449–460.

- Bai, J.; Liu, Z.; Lin, Y.; Li, Y.; Lian, S.; Liu, D. Wearable travel aid for environment perception and navigation of visually impaired people. Electronics 2019, 8, 697.

- Zhang, X.; Yao, X.; Zhu, Y.; Hu, F. An ARCore based user centric assistive navigation system for visually impaired people. Appl. Sci. 2019, 9, 989.

- Barontini, F.; Catalano, M.G.; Pallottino, L.; Leporini, B.; Bianchi, M. Integrating wearable haptics and obstacle avoidance for the visually impaired in indoor navigation: A user-centered approach. IEEE Trans. Haptics 2020, 14, 109–122.

- Nair, V.; Olmschenk, G.; Seiple, W.H.; Zhu, Z. ASSIST: Evaluating the usability and performance of an indoor navigation assistant for blind and visually impaired people. Assist. Technol. 2020, 1–11.

- Duh, P.J.; Sung, Y.C.; Chiang, L.Y.F.; Chang, Y.J.; Chen, K.W. V-eye: A vision-based navigation system for the visually impaired. IEEE Trans. Multimed. 2020, 23, 1567–1580.

- Rodrigo-Salazar, L.; Gonzalez-Carrasco, I.; Garcia-Ramirez, A.R. An IoT-based contribution to improve mobility of the visually impaired in Smart Cities. Computing 2021, 103, 1233–1254.

- Mishra, G.; Ahluwalia, U.; Praharaj, K.; Prasad, S. RF and RFID based Object Identification and Navigation system for the Visually Impaired. In Proceedings of the 2019 32nd International Conference on VLSI Design and 2019 18th International Conference on Embedded Systems (VLSID), Delhi, India, 5–9 January 2019; pp. 533–534.

- Ganz, A.; Schafer, J.M.; Tao, Y.; Wilson, C.; Robertson, M. PERCEPT-II: Smartphone based indoor navigation system for the blind. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014; pp. 3662–3665.

- Kahraman, M.; Turhan, C. An intelligent indoor guidance and navigation system for the visually impaired. Assist. Technol. 2021, 1–9.

- Pełczyński, P.; Ostrowski, B. Automatic calibration of stereoscopic cameras in an electronic travel aid for the blind. Metrol. Meas. Syst. 2013, 20, 229–238.

- Song, M.; Ryu, W.; Yang, A.; Kim, J.; Shin, B.S. Combined scheduling of ultrasound and GPS signals in a wearable ZigBee-based guidance system for the blind. In Proceedings of the 2010 Digest of Technical Papers International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 9–13 January 2010; pp. 13–14.