Blind and visually impaired people (BVIP) face a range of practical difficulties when undertaking outdoor journeys as pedestrians. Over the past decade, a variety of assistive devices have been researched and developed to help BVIP navigate more safely and independently. In addition, research in overlapping domains is addressing the problem of automatic environment interpretation using computer vision and machine learning approaches, particularly deep learning. Our aim in this article is to present a comprehensive review of research directly in, or relevant to, assistive outdoor navigation for BVIP. We break down the navigation area into a series of navigation phases and tasks. We then use this structure for our systematic review of research, analysing articles, methods, datasets and current limitations by task. We also provide an overview of commercial and non-commercial navigation applications targeted at BVIP. Our review contributes to the body of knowledge by providing a comprehensive, structured analysis of work in the domain, including the state of the art, and guidance on future directions. It will support both researchers and other stakeholders in the domain to establish an informed view of research progress.

- assistive systems

- navigation systems

- visually impaired people

- smart cities

- planning journeys

- independent children navigation

1. Introduction

According to the World Health Organization (WHO), at least 1 billion people were visually impaired in 2020 [1]. There are various causes of vision impairment and blindness, including uncorrected refractive errors, neurological defects from birth, and age-related cataracts [1]. For those who suffer from vision impairment, both independence and confidence in undertaking activities of daily living are impacted. Assistive systems exist to help BVIP in various activities of daily living, such as recognizing people [2], distinguishing banknotes [3][4], choosing clothes [5], and navigation support, both indoors and outdoors [6].

2. A Taxonomy of Outdoor Navigation Systems for BVIP

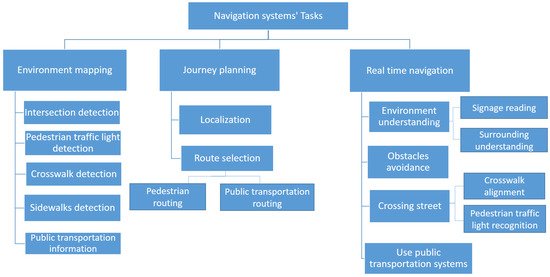

Assistive navigation systems in an urban environment focus on any aspect of supporting pedestrian BVIP in moving in a controlled and safe way along a particular route. The first step in analysing this domain is to develop and apply a clear view on both the scope and terminology involved in outdoor pedestrian navigation systems. We present a taxonomy of outdoor navigation in Figure 1. At the top level, we identify the three main sequenced phases which encompass the area of outdoor navigation systems, from environment mapping, through journey planning, to navigating the journey in real time. Each of these phases consists of a task breakdown structure. The tasks comprise the range of actions and challenges that a visually impaired person needs to succeed at in order to move from an initial point to a selected destination safely and efficiently. In effect, the phases represent higher-level research areas, while the task breakdown structure for each phase shows the research sub-domains.

Figure 1. A taxonomy of navigation support tasks for BVIP pedestrians.

Looking at each phase in Figure 1, the environment mapping phase provides appropriate and relevant location-specific information to support BVIP pedestrians in journey planning and real-time journey support. It defines the locations and information of static street elements such as intersections, public transportation stations, and traffic lights. The environment mapping phase is an off-line, up-front data gathering and processing phase that underpins the remaining navigation phases. The second phase, journey planning, begins by determining the start location. It then selects the optimal route to the user’s destination, taking account of safety and routing, using the information from the environment mapping phase. Finally, BVIP need support for challenges in real-time navigation, including real-time environment understanding, crossing a street, obstacle avoidance, and using public transportation. We explain each of the taxonomy entries in more detail:

Environment mapping phase:

Existing map applications do not provide the level of information needed to support the BVIP community when planning and undertaking pedestrian journeys. This phase addresses the tasks associated with enriching available maps with useful information for such journeys. Pre-determined location and information about sidewalks, public transportation, road intersections, appropriate crossing points (crosswalks), and availability of traffic lights are all essential points of information for this user group. We identify five tasks or sub-domains within the environment mapping phase.

- Intersection detection: detects the location of road intersections. An intersection is defined as a point where two or more roads meet, and represents a critical safety point of interest to BVIP.

- Pedestrian traffic light detection: detects the location and orientation of pedestrian traffic lights. These are traffic lights that have stop/go signals designed for pedestrians, as opposed to solely vehicle drivers.

- Crosswalk detection: detects an optimal marked location where visually impaired users can cross a road, such as a zebra crosswalk.

- Sidewalk detection: detects the existence and location of the pedestrian sidewalk (pavement) where BVIP can walk safely.

- Public transportation information: defines the locations of public transportation stops and stations, and information about the degree of accessibility of each one.

Journey planning phase:

For the BVIP community, journey planning is a critical part of building the confidence and knowledge to undertake a pedestrian journey to a new destination. This phase supports the planning of journeys, so as to select the safest and most efficient route from a BVIP’s location to their destination. It builds upon the enriched mapping information from the environment mapping phase, and consists of the following two tasks:

- Localization: determines the start point of the user’s journey.

- Route selection: finds the best route to reach a specified destination.
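To make the route selection task concrete, the following minimal Python sketch treats the enriched map as a weighted graph and applies Dijkstra's algorithm with a cost that combines walking distance and a hazard penalty. It is an illustration only, not a method taken from the reviewed systems: the function `safest_route`, the `hazard_weight` parameter, the node names and all numeric values are assumptions introduced here.

```python
import heapq


def safest_route(graph, start, goal, hazard_weight=50.0):
    """Dijkstra search where edge cost = length_m + hazard_weight * hazard.

    `graph` maps a node to a list of (neighbour, length_m, hazard) tuples.
    `hazard` is a hypothetical non-negative score, e.g. higher for a
    crossing that has no pedestrian traffic light.
    """
    best = {start: 0.0}   # cheapest known cost to each node
    prev = {}             # back-pointers used to rebuild the path
    queue = [(0.0, start)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == goal:
            break
        if cost > best.get(node, float("inf")):
            continue  # stale queue entry
        for neighbour, length_m, hazard in graph.get(node, []):
            new_cost = cost + length_m + hazard_weight * hazard
            if new_cost < best.get(neighbour, float("inf")):
                best[neighbour] = new_cost
                prev[neighbour] = node
                heapq.heappush(queue, (new_cost, neighbour))
    if goal not in best:
        return None  # goal is unreachable
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]


# Hypothetical enriched map: the unsignalled crossing is shorter but riskier.
graph = {
    "home": [("unsignalled_crossing", 40.0, 0), ("signalled_crossing", 90.0, 0)],
    "unsignalled_crossing": [("shop", 30.0, 3)],
    "signalled_crossing": [("shop", 60.0, 0)],
}

route = safest_route(graph, "home", "shop")
shortest = safest_route(graph, "home", "shop", hazard_weight=0.0)
```

With the default weight, the sketch prefers the longer route through the signalled crossing; setting `hazard_weight=0.0` reduces it to a plain shortest-path search, which picks the unsignalled crossing.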

Real-time navigation phase:

The final phase is about supporting the BVIP while undertaking their journey. Real-time navigation support recognises the dynamic factors during the journey. We identify the following four tasks or sub-domains:

- Environment understanding: helps BVIP to understand their surroundings, including reading signage and understanding the physical surroundings.

- Avoiding obstacles: detects the obstacles on a road and helps BVIP to avoid them.

- Crossing street: helps BVIP in crossing a road when at a junction. This task helps the individual to align with the location of a crosswalk. Furthermore, it recognizes the status of a pedestrian traffic light to determine the appropriate time to cross, so they can cross safely.

- Using public transportation systems: assists BVIP in using public transportation systems such as a bus or train.
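The three phases and their tasks can be summarised as a simple ordered mapping. The sketch below is one possible Python encoding of the taxonomy described above; the names `TAXONOMY` and `phase_of` are illustrative assumptions and not part of the reviewed work.

```python
# Phases in their sequenced order, each with its task breakdown.
# (Python dicts preserve insertion order, so the phase sequence is kept.)
TAXONOMY = {
    "Environment mapping": [
        "Intersection detection",
        "Pedestrian traffic light detection",
        "Crosswalk detection",
        "Sidewalk detection",
        "Public transportation information",
    ],
    "Journey planning": [
        "Localization",
        "Route selection",
    ],
    "Real-time navigation": [
        "Environment understanding",
        "Avoiding obstacles",
        "Crossing street",
        "Using public transportation systems",
    ],
}


def phase_of(task):
    """Return the phase that contains `task`, or None if it is not listed."""
    for phase, tasks in TAXONOMY.items():
        if task in tasks:
            return phase
    return None


total_tasks = sum(len(tasks) for tasks in TAXONOMY.values())
```

A lookup such as `phase_of("Route selection")` returns the phase name, which is convenient when tagging articles by task during a review.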

3. Overview of Navigation Systems by Device

Research literature on navigation systems differs substantially along two particular lines: (1) the scope and depth of the functionality (akin to tasks) offered across these systems and (2) the nature of the hardware/device provided to the user, which gathers (perceives) data about the environment. This data may be a captured image or other sensor feedback. Assistive navigation systems extract useful information from this data, such as the type and location of obstacles, to help BVIP during navigation. We divide assistive systems for BVIP into five categories, based on the device used for data gathering:

- Sensors-based: this category collects data through various sensors such as ultrasonic sensors, liquid sensors, and infrared (IR) sensors.

- Electromagnetic/radar-based: radar is used to receive information about the environment, particularly objects in the environment.

- Camera-based: cameras capture a scene to produce more detailed information about the environment, such as an object’s colour and shape.

- Smartphone-based: in this case, the BVIP has their own device with a downloaded application. Some applications utilise just the phone camera, with others using the phone camera and other phone sensors such as GPS, compass, etc.

- Combination: in this category, two types of data gathering methods are used to combine the benefits of both, such as sensor and smartphone, sensor and camera, and camera and smartphone.
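As a small illustration of how this categorisation could be applied when cataloguing systems, the Python sketch below maps the set of data gathering methods used by a system to one of the categories above. The identifiers `SINGLE_SOURCE` and `device_category`, and the short source labels, are hypothetical names chosen for this example only.

```python
# Hypothetical short labels for the four single-source categories.
SINGLE_SOURCE = {
    "sensor": "Sensors-based",
    "radar": "Electromagnetic/radar-based",
    "camera": "Camera-based",
    "smartphone": "Smartphone-based",
}


def device_category(sources):
    """Map the data gathering methods of a system to a device category."""
    sources = frozenset(sources)
    unknown = sources - set(SINGLE_SOURCE)
    if not sources or unknown:
        raise ValueError(f"unsupported data sources: {sorted(unknown)}")
    if len(sources) == 1:
        return SINGLE_SOURCE[next(iter(sources))]
    # More than one method: a combination system, named after its parts.
    return "Combination: " + " and ".join(sorted(sources))


camera_only = device_category({"camera"})
mixed = device_category({"sensor", "smartphone"})
```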

To establish a broad-brush view of the BVIP-specific literature on navigation systems, we present Table 1.

Research works are classified across the phases/tasks of navigation systems and the type of device/hardware system, as shown in Table 1. From this table, we note first that the tasks that have received the most attention from the research community are obstacle avoidance and localization. Secondly, while the environment mapping phase is a critical part of BVIP navigation systems, it has not been addressed in the research base on navigation systems for BVIP, so it is not included here. Thirdly, we note that previous navigation systems work has not included signage reading as a focus area, with just two published works. Although using public transportation systems has a significant effect on the mobility and employment of BVIP, it is not included in the majority of navigation systems. None of the previous articles addresses all tasks for real-time navigation, so no single system presents a complete navigation solution to the BVIP community. We note that while most hardware/device systems aim to address aspects of both journey planning and real-time navigation, sensor and camera based systems focus solely on the task of obstacle avoidance. In addition, there is only one smartphone-based system in the literature that uses a separate camera, suggesting that smartphone solutions rely on the in-built camera.

Having examined the distribution of BVIP navigation systems research effort across navigation functions, we now analyse the research base at a more detailed level using our phase and task taxonomy. As our focus is by task, we include both BVIP and non-BVIP literature.

Table 1. Task coverage of published navigation systems, by data collection device.

| Devices | Journey Planning: Localization | Journey Planning: Route Selection | Real-Time Navigation: Signage Reading (Environment Understanding) | Real-Time Navigation: Surrounding Understanding (Environment Understanding) | Real-Time Navigation: Obstacle Avoidance | Real-Time Navigation: Pedestrian Traffic Lights Recognition (Crossing Street) | Real-Time Navigation: Crosswalk Alignment (Crossing Street) | Real-Time Navigation: Using Public Transportation |
|---|---|---|---|---|---|---|---|---|
| Sensors-based | [7][8][9] | | | | [7][8][10][11][12][13][14][15][16] | | | [17] |
| Electromagnetic/radar-based | | | | | [18][19][20] | | | |
| Camera-based | [21][22][23][24] | | [25][26] | [27][28] | [24][29][30][31][32][33][34][35] | [36][37][38] | [36] | |
| Smartphone-based | [39][40][41][42][43][44] | [39][40][42] | | [42][43] | [42][45][46] | [42][47][48][49] | [47][50] | [51] |
| Sensor and camera based | | | | | [52][53][54][55][56] | | | |
| Electromagnetic/radar-based and camera based | | | | | [57] | | | |
| Sensor and smartphone based | [58][59][60] | [59] | | | [58][60][61] | [62] | [62] | [63][64] |
| Camera and smartphone based | [65] | [65] | | | [65] | | | |

This entry is adapted from the peer-reviewed paper 10.3390/s21093103

References

- WHO. Visual Impairment and Blindness. Available online: (accessed on 25 November 2020).

- Mocanu, B.C.; Tapu, R.; Zaharia, T. DEEP-SEE FACE: A Mobile Face Recognition System Dedicated to Visually Impaired People. IEEE Access 2018, 6, 51975–51985.

- Dunai Dunai, L.; Chillarón Pérez, M.; Peris-Fajarnés, G.; Lengua Lengua, I. Euro banknote recognition system for blind people. Sensors 2017, 17, 184.

- Park, C.; Cho, S.W.; Baek, N.R.; Choi, J.; Park, K.R. Deep Feature-Based Three-Stage Detection of Banknotes and Coins for Assisting Visually Impaired People. IEEE Access 2020, 8, 184598–184613.

- Tateno, K.; Takagi, N.; Sawai, K.; Masuta, H.; Motoyoshi, T. Method for Generating Captions for Clothing Images to Support Visually Impaired People. In Proceedings of the 2020 Joint 11th International Conference on Soft Computing and Intelligent Systems and 21st International Symposium on Advanced Intelligent Systems (SCIS-ISIS), Hachijo Island, Japan, 5–8 December 2020; pp. 1–5.

- Aladren, A.; López-Nicolás, G.; Puig, L.; Guerrero, J.J. Navigation Assistance for the Visually Impaired Using RGB-D Sensor with Range Expansion. IEEE Syst. J. 2016, 10, 922–932.

- Kaushalya, V.; Premarathne, K.; Shadir, H.; Krithika, P.; Fernando, S. ‘AKSHI’: Automated help aid for visually impaired people using obstacle detection and GPS technology. Int. J. Sci. Res. Publ. 2016, 6, 110.

- Meshram, V.V.; Patil, K.; Meshram, V.A.; Shu, F.C. An astute assistive device for mobility and object recognition for visually impaired people. IEEE Trans. Hum. Mach. Syst. 2019, 49, 449–460.

- Alghamdi, S.; van Schyndel, R.; Khalil, I. Accurate positioning using long range active RFID technology to assist visually impaired people. J. Netw. Comput. Appl. 2014, 41, 135–147.

- Jeong, G.Y.; Yu, K.H. Multi-section sensing and vibrotactile perception for walking guide of visually impaired person. Sensors 2016, 16, 1070.

- Chun, A.C.B.; Al Mahmud, A.; Theng, L.B.; Yen, A.C.W. Wearable Ground Plane Hazards Detection and Recognition System for the Visually Impaired. In Proceedings of the 2019 International Conference on E-Society, E-Education and E-Technology, Taipei, Taiwan, 15–17 August 2019; pp. 84–89.

- Rahman, M.A.; Sadi, M.S.; Islam, M.M.; Saha, P. Design and Development of Navigation Guide for Visually Impaired People. In Proceedings of the IEEE International Conference on Biomedical Engineering, Computer and Information Technology for Health (BECITHCON), Dhaka, Bangladesh, 28–30 November 2019; pp. 89–92.

- Chang, W.J.; Chen, L.B.; Chen, M.C.; Su, J.P.; Sie, C.Y.; Yang, C.H. Design and Implementation of an Intelligent Assistive System for Visually Impaired People for Aerial Obstacle Avoidance and Fall Detection. IEEE Sens. J. 2020, 20, 10199–10210.

- Kwiatkowski, P.; Jaeschke, T.; Starke, D.; Piotrowsky, L.; Deis, H.; Pohl, N. A concept study for a radar-based navigation device with sector scan antenna for visually impaired people. In Proceedings of the 2017 First IEEE MTT-S International Microwave Bio Conference (IMBIOC), Gothenburg, Sweden, 15–17 May 2017; pp. 1–4.

- Sohl-Dickstein, J.; Teng, S.; Gaub, B.M.; Rodgers, C.C.; Li, C.; DeWeese, M.R.; Harper, N.S. A device for human ultrasonic echolocation. IEEE Trans. Biomed. Eng. 2015, 62, 1526–1534.

- Patil, K.; Jawadwala, Q.; Shu, F.C. Design and construction of electronic aid for visually impaired people. IEEE Trans. Hum. Mach. Syst. 2018, 48, 172–182.

- Sáez, Y.; Muñoz, J.; Canto, F.; García, A.; Montes, H. Assisting Visually Impaired People in the Public Transport System through RF-Communication and Embedded Systems. Sensors 2019, 19, 1282.

- Cardillo, E.; Di Mattia, V.; Manfredi, G.; Russo, P.; De Leo, A.; Caddemi, A.; Cerri, G. An electromagnetic sensor prototype to assist visually impaired and blind people in autonomous walking. IEEE Sens. J. 2018, 18, 2568–2576.

- Pisa, S.; Pittella, E.; Piuzzi, E. Serial patch array antenna for an FMCW radar housed in a white cane. Int. J. Antennas Propag. 2016, 2016.

- Kiuru, T.; Metso, M.; Utriainen, M.; Metsävainio, K.; Jauhonen, H.M.; Rajala, R.; Savenius, R.; Ström, M.; Jylhä, T.N.; Juntunen, R.; et al. Assistive device for orientation and mobility of the visually impaired based on millimeter wave radar technology—Clinical investigation results. Cogent Eng. 2018, 5, 1450322.

- Cheng, R.; Hu, W.; Chen, H.; Fang, Y.; Wang, K.; Xu, Z.; Bai, J. Hierarchical visual localization for visually impaired people using multimodal images. Expert Syst. Appl. 2021, 165, 113743.

- Lin, S.; Cheng, R.; Wang, K.; Yang, K. Visual localizer: Outdoor localization based on convnet descriptor and global optimization for visually impaired pedestrians. Sensors 2018, 18, 2476.

- Fang, Y.; Yang, K.; Cheng, R.; Sun, L.; Wang, K. A Panoramic Localizer Based on Coarse-to-Fine Descriptors for Navigation Assistance. Sensors 2020, 20, 4177.

- Duh, P.J.; Sung, Y.C.; Chiang, L.Y.F.; Chang, Y.J.; Chen, K.W. V-Eye: A Vision-based Navigation System for the Visually Impaired. IEEE Trans. Multimed. 2020.

- Hairuman, I.F.B.; Foong, O.M. OCR signage recognition with skew & slant correction for visually impaired people. In Proceedings of the International Conference on Hybrid Intelligent Systems (HIS), Malacca, Malaysia, 5–8 December 2011; pp. 306–310.

- Devi, P.; Saranya, B.; Abinayaa, B.; Kiruthikamani, G.; Geethapriya, N. Wearable Aid for Assisting the Blind. Methods 2016, 3.

- Bazi, Y.; Alhichri, H.; Alajlan, N.; Melgani, F. Scene Description for Visually Impaired People with Multi-Label Convolutional SVM Networks. Appl. Sci. 2019, 9, 5062.

- Mishra, A.A.; Madhurima, C.; Gautham, S.M.; James, J.; Annapurna, D. Environment Descriptor for the Visually Impaired. In Proceedings of the International Conference on Advances in Computing, Communications and Informatics (ICACCI), Bangalore, India, 19–22 September 2018; pp. 1720–1724.

- Lin, Y.; Wang, K.; Yi, W.; Lian, S. Deep learning based wearable assistive system for visually impaired people. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Seoul, Korea, 27 October–2 November 2019.

- Younis, O.; Al-Nuaimy, W.; Rowe, F.; Alomari, M.H. A smart context-aware hazard attention system to help people with peripheral vision loss. Sensors 2019, 19, 1630.

- Elmannai, W.; Elleithy, K.M. A novel obstacle avoidance system for guiding the visually impaired through the use of fuzzy control logic. In Proceedings of the IEEE Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 12–15 January 2018; pp. 1–9.

- Yang, K.; Wang, K.; Bergasa, L.M.; Romera, E.; Hu, W.; Sun, D.; Sun, J.; Cheng, R.; Chen, T.; López, E. Unifying terrain awareness for the visually impaired through real-time semantic segmentation. Sensors 2018, 18, 1506.

- Kang, M.C.; Chae, S.H.; Sun, J.Y.; Lee, S.H.; Ko, S.J. An enhanced obstacle avoidance method for the visually impaired using deformable grid. IEEE Trans. Consum. Electron. 2017, 63, 169–177.

- Kang, M.C.; Chae, S.H.; Sun, J.Y.; Yoo, J.W.; Ko, S.J. A novel obstacle detection method based on deformable grid for the visually impaired. IEEE Trans. Consum. Electron. 2015, 61, 376–383.

- Poggi, M.; Mattoccia, S. A wearable mobility aid for the visually impaired based on embedded 3d vision and deep learning. In Proceedings of the IEEE Symposium on Computers and Communication (ISCC), Messina, Italy, 27–30 June 2016; pp. 208–213.

- Cheng, R.; Wang, K.; Lin, S. Intersection Navigation for People with Visual Impairment. In International Conference on Computers Helping People with Special Needs; Springer: Berlin/Heidelberg, Germany, 2018; pp. 78–85.

- Cheng, R.; Wang, K.; Yang, K.; Long, N.; Bai, J.; Liu, D. Real-time pedestrian crossing lights detection algorithm for the visually impaired. Multimed. Tools Appl. 2018, 77, 20651–20671.

- Li, X.; Cui, H.; Rizzo, J.R.; Wong, E.; Fang, Y. Cross-Safe: A computer vision-based approach to make all intersection-related pedestrian signals accessible for the visually impaired. In Science and Information Conference; Springer: Berlin/Heidelberg, Germany, 2019; pp. 132–146.

- Chen, Q.; Wu, L.; Chen, Z.; Lin, P.; Cheng, S.; Wu, Z. Smartphone Based Outdoor Navigation and Obstacle Avoidance System for the Visually Impaired. In International Conference on Multi-disciplinary Trends in Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2019; pp. 26–37.

- Velazquez, R.; Pissaloux, E.; Rodrigo, P.; Carrasco, M.; Giannoccaro, N.I.; Lay-Ekuakille, A. An outdoor navigation system for blind pedestrians using GPS and tactile-foot feedback. Appl. Sci. 2018, 8, 578.

- Spiers, A.J.; Dollar, A.M. Outdoor pedestrian navigation assistance with a shape-changing haptic interface and comparison with a vibrotactile device. In Proceedings of the 2016 IEEE Haptics Symposium (HAPTICS), Philadelphia, PA, USA, 8–11 April 2016; pp. 34–40.

- Bai, J.; Liu, D.; Su, G.; Fu, Z. A cloud and vision-based navigation system used for blind people. In Proceedings of the 2017 International Conference on Artificial Intelligence, Automation and Control Technologies, Wuhan, China, 7–9 April 2017; pp. 1–6.

- Cheng, R.; Wang, K.; Bai, J.; Xu, Z. Unifying Visual Localization and Scene Recognition for People With Visual Impairment. IEEE Access 2020, 8, 64284–64296.

- Gintner, V.; Balata, J.; Boksansky, J.; Mikovec, Z. Improving reverse geocoding: Localization of blind pedestrians using conversational ui. In Proceedings of the 2017 8th IEEE International Conference on Cognitive Infocommunications (CogInfoCom), Debrecen, Hungary, 11–14 September 2017; pp. 000145–000150.

- Shadi, S.; Hadi, S.; Nazari, M.A.; Hardt, W. Outdoor navigation for visually impaired based on deep learning. Proc. CEUR Workshop Proc. 2019, 2514, 97–406.

- Lin, B.S.; Lee, C.C.; Chiang, P.Y. Simple smartphone-based guiding system for visually impaired people. Sensors 2017, 17, 1371.

- Yu, S.; Lee, H.; Kim, J. Street Crossing Aid Using Light-Weight CNNs for the Visually Impaired. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Korea, 27–28 October 2019; pp. 2593–2601.

- Ghilardi, M.C.; Simoes, G.S.; Wehrmann, J.; Manssour, I.H.; Barros, R.C. Real-Time Detection of Pedestrian Traffic Lights for Visually-Impaired People. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–8.

- Ash, R.; Ofri, D.; Brokman, J.; Friedman, I.; Moshe, Y. Real-time pedestrian traffic light detection. In Proceedings of the IEEE International Conference on the Science of Electrical Engineering in Israel (ICSEE), Eilat, Israel, 12–14 December 2018; pp. 1–5.

- Ghilardi, M.C.; Junior, J.J.; Manssour, I.H. Crosswalk Localization from Low Resolution Satellite Images to Assist Visually Impaired People. IEEE Comput. Graph. Appl. 2018, 38, 30–46.

- Yu, C.; Li, Y.; Huang, T.Y.; Hsieh, W.A.; Lee, S.Y.; Yeh, I.H.; Lin, G.K.; Yu, N.H.; Tang, H.H.; Chang, Y.J. BusMyFriend: Designing a bus reservation service for people with visual impairments in Taipei. In Proceedings of Companion Publication of the 2020 ACM Designing Interactive Systems Conference; ACM: New York, NY, USA, 2020; pp. 91–96.

- Ni, D.; Song, A.; Tian, L.; Xu, X.; Chen, D. A walking assistant robotic system for the visually impaired based on computer vision and tactile perception. Int. J. Soc. Robot. 2015, 7, 617–628.

- Joshi, R.C.; Yadav, S.; Dutta, M.K.; Travieso-Gonzalez, C.M. Efficient Multi-Object Detection and Smart Navigation Using Artificial Intelligence for Visually Impaired People. Entropy 2020, 22, 941.

- Vera, D.; Marcillo, D.; Pereira, A. Blind guide: Anytime, anywhere solution for guiding blind people. In World Conference on Information Systems and Technologies; Springer: Berlin/Heidelberg, Germany, 2017; pp. 353–363.

- Islam, M.M.; Sadi, M.S.; Bräunl, T. Automated walking guide to enhance the mobility of visually impaired people. IEEE Trans. Med. Robot. Bionics 2020, 2, 485–496.

- Martinez, M.; Roitberg, A.; Koester, D.; Stiefelhagen, R.; Schauerte, B. Using technology developed for autonomous cars to help navigate blind people. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 1424–1432.

- Long, N.; Wang, K.; Cheng, R.; Hu, W.; Yang, K. Unifying obstacle detection, recognition, and fusion based on millimeter wave radar and RGB-depth sensors for the visually impaired. Rev. Sci. Instrum. 2019, 90, 044102.

- Meliones, A.; Filios, C. Blindhelper: A pedestrian navigation system for blinds and visually impaired. In Proceedings of the ACM International Conference on PErvasive Technologies Related to Assistive Environments, Corfu Island, Greece, 29 June–1 July 2016; pp. 1–4.

- Ahmetovic, D.; Gleason, C.; Ruan, C.; Kitani, K.; Takagi, H.; Asakawa, C. NavCog: A navigational cognitive assistant for the blind. In Proceedings of the 18th International Conference on Human-Computer Interaction with Mobile Devices and Services, Florence, Italy, 6–9 September 2016; pp. 90–99.

- Elmannai, W.; Elleithy, K.M. A Highly Accurate and Reliable Data Fusion Framework for Guiding the Visually Impaired. IEEE Access 2018, 6, 33029–33054.

- Mocanu, B.C.; Tapu, R.; Zaharia, T.B. When Ultrasonic Sensors and Computer Vision Join Forces for Efficient Obstacle Detection and Recognition. Sensors 2016, 16, 1807.

- Shangguan, L.; Yang, Z.; Zhou, Z.; Zheng, X.; Wu, C.; Liu, Y. Crossnavi: Enabling real-time crossroad navigation for the blind with commodity phones. In Proceedings of ACM International Joint Conference on Pervasive and Ubiquitous Computing; ACM: New York, NY, USA, 2014; pp. 787–798.

- Flores, G.H.; Manduchi, R. A public transit assistant for blind bus passengers. IEEE Pervasive Comput. 2018, 17, 49–59.

- Shingte, S.; Patil, R. A Passenger Bus Alert and Accident System for Blind Person Navigational. Int. J. Sci. Res. Sci. Technol. 2018, 4, 282–288.

- Bai, J.; Liu, Z.; Lin, Y.; Li, Y.; Lian, S.; Liu, D. Wearable travel aid for environment perception and navigation of visually impaired people. Electronics 2019, 8, 697.