Blind and visually impaired people (BVIP) face a range of practical difficulties when undertaking outdoor journeys as pedestrians.

- assistive systems

- navigation systems

- visually impaired people

- smart cities

- planning journeys

- independent children navigation

1. Introduction

According to the World Health Organization (WHO), at least 1 billion people were visually impaired in 2020 [1]. There are various causes of vision impairment and blindness, including uncorrected refractive errors, neurological defects from birth, and age-related cataracts [1]. For those who suffer from vision impairment, both independence and confidence in undertaking activities of daily living are impacted. Assistive systems exist to help BVIP in various activities of daily living, such as recognizing people [2], distinguishing banknotes [3,4], choosing clothes [5], and navigating, both indoors and outdoors [6].

2. A Taxonomy of Outdoor Navigation Systems for BVIP

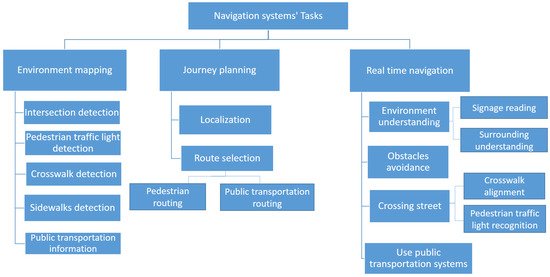

Assistive navigation systems in an urban environment focus on any aspect of supporting pedestrian BVIP in moving in a controlled and safe way along a particular route. The first step in analysing this domain is to develop and apply a clear view of both the scope and the terminology involved in outdoor pedestrian navigation systems. We present a taxonomy of outdoor navigation in Figure 1. At the top level, we identify the three main sequenced phases which encompass the area of outdoor navigation systems: environment mapping, journey planning, and navigating the journey in real time. Each of these phases consists of a task breakdown structure. The tasks comprise the range of actions and challenges that a visually impaired person needs to succeed at in order to move from an initial point to a selected destination safely and efficiently. In effect, the phases represent higher-level research areas, while the task breakdown structure for each phase shows the research sub-domains.

Figure 1. A taxonomy of navigation support tasks for BVIP pedestrians.
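As a compact companion to Figure 1, the phases and tasks of the taxonomy can be written down as a nested structure. The sketch below is only an illustration: the phase and task names come from the taxonomy, while the dictionary layout and the helper functions `phase_of` and `uncovered_tasks` are assumptions introduced here.

```python
# Phases and tasks as named in the taxonomy; the dictionary layout is an assumption.
TAXONOMY = {
    "environment mapping": [
        "intersection detection",
        "pedestrian traffic light detection",
        "crosswalk detection",
        "sidewalk detection",
        "public transportation information",
    ],
    "journey planning": ["localization", "route selection"],
    "real-time navigation": [
        "environment understanding",
        "avoiding obstacles",
        "crossing street",
        "using public transportation systems",
    ],
}


def phase_of(task):
    """Return the phase that contains the given task, or None if it is unknown."""
    for phase, tasks in TAXONOMY.items():
        if task in tasks:
            return phase
    return None


def uncovered_tasks(supported_tasks):
    """List (phase, task) pairs a system does not support, in taxonomy order."""
    return [
        (phase, task)
        for phase, tasks in TAXONOMY.items()
        for task in tasks
        if task not in supported_tasks
    ]
```

For a hypothetical system that supports only localization and obstacle avoidance, `uncovered_tasks({"localization", "avoiding obstacles"})` lists the remaining nine tasks, which is one way to make gaps in task coverage explicit.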

Looking at each phase in Figure 1, the environment mapping phase provides appropriate and relevant location-specific information to support BVIP pedestrians in journey planning and real-time journey support. It defines the locations of, and information about, static street elements such as intersections, public transportation stations, and traffic lights. The environment mapping phase is an off-line, up-front data gathering and processing phase that underpins the remaining navigation phases. The second phase, journey planning, begins by determining the start location. It then selects the optimal route to the user’s destination, taking both safety and efficiency into account, using the information from the environment mapping phase. Finally, BVIP need support for challenges in real-time navigation, including real-time environment understanding, crossing a street, obstacle avoidance, and using public transportation. We explain each of the taxonomy entries in more detail:

Environment mapping phase:

Existing map applications do not provide the level of information needed to support the BVIP community when planning and undertaking pedestrian journeys. This phase addresses the tasks associated with enriching available maps with useful information for such journeys. Pre-determined location and information about sidewalks, public transportation, road intersections, appropriate crossing points (crosswalks), and availability of traffic lights are all essential points of information for this user group. We identify five tasks or sub-domains within the environment mapping phase.

- Intersection detection: detects the location of road intersections. An intersection is defined as a point where two or more roads meet, and represents a critical safety point of interest to BVIP.

- Pedestrian traffic light detection: detects the location and orientation of pedestrian traffic lights. These are traffic lights that have stop/go signals designed for pedestrians, as opposed to solely vehicle drivers.

- Crosswalk detection: detects an optimal marked location where visually impaired users can cross a road, such as a zebra crosswalk.

- Sidewalk detection: detects the existence and location of the pedestrian sidewalk (pavement) where BVIP can walk safely.

- Public transportation information: defines the locations of public transportation stops and stations, and information about the degree of accessibility of each one.
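The definition of an intersection as a point where two or more roads meet can be illustrated on map data. The following is a minimal sketch, assuming a map in which each road is stored as an ordered list of coordinate nodes and roads that meet share an identical node; the road names, coordinates, and the function `find_intersections` are hypothetical, and the taxonomy itself does not prescribe how intersections are detected.

```python
from collections import defaultdict


def find_intersections(roads):
    """Return {node: sorted road names} for nodes shared by two or more roads."""
    roads_at_node = defaultdict(set)
    for road_name, nodes in roads.items():
        for node in nodes:
            roads_at_node[node].add(road_name)
    return {
        node: sorted(names)
        for node, names in roads_at_node.items()
        if len(names) >= 2
    }


# Hypothetical map extract: each road is an ordered list of (latitude, longitude) nodes.
ROADS = {
    "Main Street": [(53.3400, -6.2600), (53.3410, -6.2600), (53.3420, -6.2600)],
    "Mill Lane": [(53.3410, -6.2610), (53.3410, -6.2600), (53.3410, -6.2590)],
    "Canal Road": [(53.3420, -6.2600), (53.3420, -6.2590)],
}

intersections = find_intersections(ROADS)
```

Here `intersections` holds the two nodes where Main Street meets Mill Lane and Canal Road. Nodes that belong to a single road are ignored, which matches the definition above but would miss intersections where the map data does not share a node between roads.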

Journey planning phase:

For the BVIP community, journey planning is a critical part of building the confidence and knowledge to undertake a pedestrian journey to a new destination. This phase supports the planning of journeys, so as to select the safest and most efficient route from a BVIP’s location to their destination. It builds upon the enriched mapping information from the environment mapping phase, and consists of the following two tasks:

- Localization: determines the start point of the user's journey.

- Route selection: finds the best route to reach a specified destination.
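Route selection that balances safety and efficiency can be framed as a shortest-path search over a street graph whose edge costs combine length with a safety penalty. The sketch below uses Dijkstra's algorithm under that assumption; the cost model (length plus a weighted penalty expressed in metres), the graph `STREETS`, and the function `safest_route` are illustrative choices made here, not the method of any particular system.

```python
import heapq


def safest_route(graph, start, goal, risk_weight=1.0):
    """Dijkstra search where edge cost = length_m + risk_weight * risk_penalty_m."""
    best = {start: 0.0}
    previous = {}
    queue = [(0.0, start)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == goal:
            break  # the first time the goal is popped, its cost is final
        if cost > best.get(node, float("inf")):
            continue  # stale queue entry
        for neighbour, length_m, risk_penalty_m in graph.get(node, []):
            new_cost = cost + length_m + risk_weight * risk_penalty_m
            if new_cost < best.get(neighbour, float("inf")):
                best[neighbour] = new_cost
                previous[neighbour] = node
                heapq.heappush(queue, (new_cost, neighbour))
    if goal not in best:
        return None, float("inf")
    # Walk back from the goal to rebuild the path.
    path = [goal]
    while path[-1] != start:
        path.append(previous[path[-1]])
    path.reverse()
    return path, best[goal]


# Hypothetical graph: node -> [(neighbour, length in metres, risk penalty in metres)].
STREETS = {
    "home": [("unsignalled_crossing", 50, 0), ("signalled_crossing", 120, 0)],
    "unsignalled_crossing": [("shop", 40, 300)],
    "signalled_crossing": [("shop", 60, 20)],
}

route, cost = safest_route(STREETS, "home", "shop")
```

With the default `risk_weight`, the longer route through the signalled crossing is chosen; setting `risk_weight=0.0` reduces the search to plain shortest distance and selects the unsignalled crossing instead. The penalty values would in practice have to come from the enriched map data, which is why the environment mapping phase underpins this task.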

Real-time navigation phase:

The final phase is about supporting the BVIP while undertaking their journey. Real-time navigation support recognises the dynamic factors during the journey. We identify the following four tasks or sub-domains:

- Environment understanding: helps BVIP to understand their surroundings, including reading signage and understanding the physical surroundings.

- Avoiding obstacles: detects the obstacles on a road and helps BVIP to avoid them.

- Crossing street: helps BVIP in crossing a road when at a junction. This task helps the individual to align with the location of a crosswalk. Furthermore, it recognizes the status of a pedestrian traffic light to determine the appropriate time to cross, so they can cross safely.

- Using public transportation systems: assists BVIP in using public transportation systems such as a bus or train.
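The crossing street task combines two sub-tasks, crosswalk alignment and pedestrian traffic light recognition. How their outputs might be merged into a single instruction is sketched below. Everything in it is an assumption made for illustration: the `LightState` values, the 10-degree alignment tolerance, the sign convention for the heading offset, and the wording of the messages. The perception steps that would produce these inputs are outside the sketch.

```python
from enum import Enum


class LightState(Enum):
    GO = "go"
    STOP = "stop"
    UNKNOWN = "unknown"


def crossing_guidance(heading_offset_deg, light_state, tolerance_deg=10.0):
    """Return a short instruction for a pedestrian waiting at a crosswalk.

    heading_offset_deg is the signed angle between the user's heading and the
    crosswalk direction; positive means the crosswalk lies to the user's right.
    """
    # Alignment comes first: the light status only matters once the user
    # is facing along the crosswalk.
    if abs(heading_offset_deg) > tolerance_deg:
        side = "right" if heading_offset_deg > 0 else "left"
        return f"Turn {side} by {abs(heading_offset_deg):.0f} degrees to face the crosswalk."
    if light_state is LightState.GO:
        return "Aligned with the crosswalk. The pedestrian light shows go."
    if light_state is LightState.STOP:
        return "Aligned with the crosswalk. Wait, the pedestrian light shows stop."
    return "Aligned with the crosswalk. Light status unknown."
```

For example, `crossing_guidance(25, LightState.GO)` asks the user to turn right before any light status is reported, while `crossing_guidance(-3, LightState.STOP)` tells an aligned user to wait.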

3. Overview of Navigation Systems by Device

The navigation systems research literature differs substantially along two particular lines: (1) the scope and depth of the functionality (akin to tasks) offered across these systems, and (2) the nature of the hardware/device provided to the user, which gathers (perceives) data about the environment. This data may be a captured image or other sensor feedback. Assistive navigation systems extract useful information from this data, such as the type and location of obstacles, to help BVIP during navigation. We divide assistive systems for BVIP into five categories, based on the device used for data gathering:

- Sensors-based: this category collects data through various sensors such as ultrasonic sensors, liquid sensors, and infrared (IR) sensors.

- Electromagnetic/radar-based: radar is used to receive information about the environment, particularly objects in the environment.

- Camera-based: cameras capture a scene to produce more detailed information about the environment, such as an object’s colour and shape.

- Smartphone-based: in this case, the BVIP has their own device with a downloaded application. Some applications utilise just the phone camera, with others using the phone camera and other phone sensors such as GPS, compass, etc.

- Combination: in this category, two data gathering methods are used together to combine the benefits of both, such as sensor and smartphone, sensor and camera, and camera and smartphone.

To establish a broad-brush view of the BVIP-specific navigation systems literature, we present Table 1.

Research works are classified across the phases/tasks of navigation systems and the type of device/hardware system, as shown in Table 1. From this table, we note first that the tasks that have received the most attention from the research community are obstacle avoidance and localization. Secondly, while the environment mapping phase is a critical part of BVIP navigation systems, it has not been addressed in the BVIP navigation systems research base, so it is not included here. Thirdly, we note that previous navigation systems work has not included signage reading as a focus area, with just two published works. Although using public transportation systems has a significant effect on the mobility and employment of BVIP, it is not included in the majority of navigation systems. None of the previous articles address all tasks for real-time navigation, so no single system presents a complete navigation solution to the BVIP community. We note that while most hardware/device systems aim to address aspects of both journey planning and real-time navigation, sensor and camera based systems focus solely on the task of obstacle avoidance. In addition, there is only one smartphone-based system in the literature that uses a separate camera, suggesting that smartphone solutions rely on the in-built camera.

Having examined the distribution of BVIP navigation systems research effort across navigation functions, we now analyse the research base at a more detailed level using our phase and task taxonomy. As our focus is by task, we include both BVIP and non-BVIP literature.

Table 1. Task coverage of published navigation systems, by data collection device.

| Devices | Journey Planning | Real-Time Navigation | ||||||

|---|---|---|---|---|---|---|---|---|

| Localization | Route Selection | Environment Understanding | Obstacle Avoidance | Crossing Street | Using Public Transportation | |||

| Signage Reading | Surrounding Understanding | Pedestrian Traffic Lights Recognition | Crosswalk Alignment | |||||

| Sensors-based | [22,23,24] | [22,23,25,26,27,28,29,30,31] | [32] | |||||

| Electromagnetic/radar-based | [33,34,35] | |||||||

| Camera-based | [36,37,38,39] | [40,41] | [42,43] | [39,44,45,46,47,48,49,50] | [51,52,53] | [51] | ||

| Smartphone-based | [54,55,56,57,58,59] | [54,55,57] | [57,58] | [57,60,61] | [57,62,63,64] | [62,65] | [66] | |

| Sensor and camera based | [67,68,69,70,71] | |||||||

| Electromagnetic/radar-based and camera based | [72] | |||||||

| Sensor and smartphone based | [73,74,75] | [74] | [73,75,76] | [77] | [77] | [78,79] | ||

| Camera and smartphone based | [80] | [80] | [80] | |||||

This entry is adapted from the peer-reviewed paper 10.3390/s21093103