
Please note this is an old version of this entry, which may differ significantly from the current revision.

Subjects:

Automation & Control Systems


- transport

- railway

- vehicles

3. Systems Developed by Manufacturers of Non-Rail Surface Vehicles

3.1. Trucks

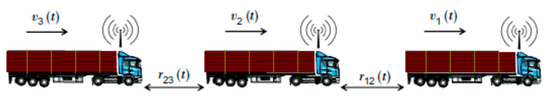

MAN PLATOONING by MAN and DB Schenker is a technical system that turns multiple vehicles into a convoy of trucks (Figure 1). The technology relies on driver assistance and control systems. Drivers keep their hands on the wheel at all times, even when a following truck reacts directly, without active intervention from its driver. A specially designed display provides drivers with additional information about the convoy. Additionally, camera and radar systems continuously scan the surroundings.

Figure 1. Concept of trucks platooning [1].

HIGHWAY PILOT by Daimler [2] is an autopilot mechanism for trucks. It consists of assistance and connectivity systems enabling autonomous driving on highways by adapting the speed of trucks to traffic density. Overtaking maneuvers, lane changes and exiting the highway remain prerogatives of the driver. The user interface continuously informs the driver about the activation status and can manage received instructions, including activation and deactivation, meaning that the system can be overridden at any time. In addition, control is returned to the driver whenever the onboard system is no longer able to detect safety-relevant aspects, as in cases of roadworks, extreme weather conditions or an absence of lane markings.

3.2. Cars

AUTOPILOT 2.5 by Tesla [3] equipment includes:

- 8 × 360° cameras;

- 12 ultrasonic sensors for the detection of hard and soft objects at a distance and with double the accuracy of previous systems;

- A forward-facing radar system, with high processing capabilities, providing additional data on the surrounding environment on different wavelengths in order to counteract the effects of heavy rain, fog, dust and other cars.

The autopilot function suggests when to change lanes in order to optimize the route, and makes adjustments to avoid remaining behind slow vehicles. It also automatically guides the vehicle through junctions and onto highway exits based on the selected destination.

iNEXT-COPILOT by BMW (Figure 2) may be activated for autonomous driving or deactivated for traditional driving. The interior of the vehicle is readjusted according to touch or voice commands:

Figure 2. iNEXT-COPILOT system [4].

- The steering wheel retracts, providing more room for passengers;

- The pedals retract, creating a flat surface in the footwell;

- The driver and front passenger can turn back towards other passengers in the rear seats;

- Various displays provide information about the surrounding area.

3.3. Ships

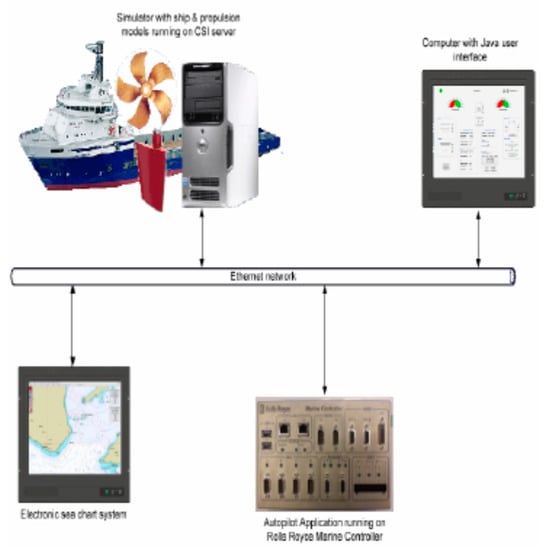

THE FALCO by Finferries and Rolls-Royce allows the vessel to detect objects through a fusion of sensors and artificial intelligence for collision avoidance. It is the culmination of studies launched in 2008 (Figure 3) [5]; since then, its realism has improved greatly thanks to advanced sensors which provide real-time images of the surroundings with a level of accuracy higher than that of the human eye. Based on this, the vessel is able to alter course and speed automatically during the approach to a quay, and to dock in a fully automated maneuver without human intervention. A unified bridge provides the operator with a functional HMI with control levers, touch screens for calls and control, as well as logically presented information on system status. During maneuvers outside congested harbor areas, the operator can control operations remotely using a joystick or supervise them via onboard situation awareness systems. Various autonomy levels can operate selectively or in combination, depending on the vessel situation and external conditions.

Figure 3. Autopilot system concept based on dynamic positioning [5].

4. Simulators of Transport Systems

4.1. Rail Simulators

The PSCHITT-RAIL simulator [6] (Figure 4) is designed as an additional support for research and experimentation in the field of transport. Funded by the European Union through the European Regional Development Fund (ERDF) and by the Hauts-de-France Region, it aims to integrate new modular equipment through the study of driver behavior in risky situations. Its main functionalities are:

Figure 4. PSCHITT-RAIL simulator interface.

- Immersion in a sonic and visual environment;

- Integration between real components and a simulated environment;

- Management of driver information.

The equipment includes an Alstom Citadis Dualis desk, a six-degrees-of-freedom motion system, OKSimRail software, five screens providing a 225° view, three eye trackers, cameras, a 6.1 audio system, and scripting. These are synthesized into a dynamic platform by means of measurement systems, such as motion capture systems, physiological measurement sensors, etc.

SPICA RAIL, by the University of Technology of Compiègne [6], is a supervision platform that recreates real accident scenarios in the laboratory in order to analyze human behaviors and decisions in such situations. It is able to analyze and simulate the entire network by integrating traffic control functions. Moreover, to simulate crises, personnel can start from an automatic control level and progressively insert disturbances on the network.

OKTAL SYDAC simulators [7] cover solutions for trams, light rail, metro, freight (complex systems), high-speed trains, trucks, buses and airports. The full cab or desk is an exact replica of a real cab. The compact desk simulators offer a training solution where space is limited.

The IFFSTAR-RAIL by IFFSTAR [8] is a platform designed to simulate rail traffic for the assessment of some European Rail Traffic Management System (ERTMS) components. It includes three subsystems:

- A driving simulator desk used in combination with a 3D representation of tracks;

- A traffic simulator, acting both as a single train management tool and for railway traffic control;

- A test bench connected with onboard ERTMS equipment, in compliance with specifications and rules.

4.2. Car Simulators

IFFSTAR TS2, located in Bron and Salon-de-Provence, is a simulator of the internal and external factors influencing driver behavior and of the human-machine interface. It is capable of analyzing driver behavior relative to internal factors (e.g., anger, sadness) or external environmental factors, and of studying driver–vehicle interactions. The instrumentation includes:

- Sensors for the control, communication and processing of dashboard information;

- Images of road scenes projected on five frontal screens in a visual field covering 200° × 40°;

- A device providing rear-view images;

- Quadraphonic sound reproducing internal (motor, rolling, starter) and external traffic noises.

NVIDIA DRIVE [9] is an open platform providing an interface that integrates environmental, vehicle and sensor models with traffic scenario and data managers. It includes two servers: a simulator, which generates output from virtual car sensors, and a computer, which contains the DRIVE AGX Pegasus AI car computer that runs the complete, binary-compatible autonomous vehicle software stack. The car computer processes the simulated data as if it were coming from the sensors of a car actually driving on the road: it receives the sensor data, makes decisions and sends vehicle control commands back to the simulator in a closed-loop process, enabling bit-accurate, timing-accurate hardware-in-the-loop testing. The kit also enables the development of Artificial Intelligence assistants for both drivers and passengers. The HMI uses data from sensors tracking the drivers and the surrounding environment to keep drivers alert, anticipate passenger needs and provide insightful visualizations of journeys. The system uses deep learning networks to track head movements and gaze, and can communicate verbally via advanced speech recognition, lip reading and natural language understanding.
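The closed-loop principle described above (simulated sensor data in, control commands out, world state updated, repeat) can be illustrated with a minimal sketch. Everything in it is a hypothetical stand-in written for this entry: the `Simulator` and `DrivingStack` classes, the gap-keeping rule and all numeric values are assumptions, and none of it reflects the actual NVIDIA DRIVE interfaces.

```python
from dataclasses import dataclass


@dataclass
class SensorFrame:
    """Hypothetical virtual sensor output: gap to the lead vehicle and own speed."""
    gap_m: float
    speed_mps: float


@dataclass
class ControlCommand:
    """Hypothetical control command: requested longitudinal acceleration."""
    accel_mps2: float


class Simulator:
    """Stand-in for the simulation server: holds world state, emits sensor frames."""

    def __init__(self, gap_m: float, speed_mps: float, lead_speed_mps: float):
        self.gap_m = gap_m
        self.speed_mps = speed_mps
        self.lead_speed_mps = lead_speed_mps

    def sense(self) -> SensorFrame:
        return SensorFrame(self.gap_m, self.speed_mps)

    def step(self, cmd: ControlCommand, dt: float) -> None:
        # Advance the simulated world by one time step using the received command.
        self.speed_mps = max(0.0, self.speed_mps + cmd.accel_mps2 * dt)
        self.gap_m += (self.lead_speed_mps - self.speed_mps) * dt


class DrivingStack:
    """Stand-in for the software under test running on the car computer."""

    def __init__(self, target_gap_m: float = 30.0):
        self.target_gap_m = target_gap_m

    def decide(self, frame: SensorFrame) -> ControlCommand:
        # Toy proportional rule (an assumption): brake if too close, speed up if too far.
        error = frame.gap_m - self.target_gap_m
        accel = max(-3.0, min(1.5, 0.1 * error))
        return ControlCommand(accel)


def run_closed_loop(sim: Simulator, stack: DrivingStack, steps: int, dt: float = 0.1):
    """Run sense -> decide -> act for a number of steps and log each exchange."""
    log = []
    for _ in range(steps):
        frame = sim.sense()        # simulator -> car computer
        cmd = stack.decide(frame)  # decision made by the stack under test
        sim.step(cmd, dt)          # car computer -> simulator
        log.append((frame.gap_m, cmd.accel_mps2))
    return log
```

In a real hardware-in-the-loop bench the two roles run on separate machines and exchange data over a network with strict timing; the sketch only shows the order of the exchange.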

VRX-2019 by OPTIS [10,11] is a dynamic simulator with proportions, shapes, placement and surfaces for the interior which emphasize ergonomics and the comfort of passengers. From the integration of new technologies, such as driving assistance and autonomous driving, to the validation of ergonomics for future drivers and passengers, each step of the interior design has been analyzed and validated. It reproduces the feeling of a cockpit HMI. It is very useful for virtual tests and for the integration of next-generation sensors before their actual release, helping to eliminate expensive real-world tests and reduce time-to-market. Through virtual displays, actuators, visual simulations, eye and finger tracking and haptic feedback, it provides a tool for full HMI evaluation. Moreover, the user can validate safety and comfort improvements for drivers and pedestrians in dangerous scenarios. Key interface features are:

- A finger-tracking system;

- Tactile displays and dynamic content;

- Windshield or glasshouse reflection studies based on physically accurate reflection simulations;

- Testing and validation of head-up displays, specifying and improving optical performance and the quality of the content.

4.3. Aviation Simulators

The CAE 7000XR Series Full-Flight Simulator (FFS) surpasses the operator requirements of Level D regulations. It provides integrated access to advanced functions, such as:

- An intuitive lesson plan builder;

- A 3D map of flight paths with event markers;

- Increased information density;

- Ergonomic redesign of interiors (Figure 5).

Figure 5. Integrated CAE 7000XR flight simulator [12].

The CAE 3000 Series Helicopter flight and mission Simulator [13] is helicopter-specific for training in severe conditions, e.g., offshore, emergency services, high-altitude, etc., based on the following features:

- A visual system with high-definition commercial projectors;

- Up to 220° × 80° field-of-view direct projection dome, with full chin window coverage tailored to helicopter training operations.

The EXCALIBUR MP521 Simulator includes a capsule with a six-axis motion system, visual and instrument displays, touch control panels and hardware for flight control. The user can enter parameters to define an aircraft through a graphical user interface (GUI). The graphics also feature airports and reconfigurable scenic elements, such as runway lighting, approach aids and surroundings, in order to meet the requirements of flight training, with the option of large wall-screen viewing for group lessons.

The ALSIM AL250 simulator [14] includes a visual system equipped with a high quality compact panoramic visual display (minimum frame rate of 60 images/s), 250° × 49° field of view, high definition visual systems for better weather rendering and ultra-realism, new map display, positioning/repositioning system, weather condition adjustment, failure menu, position and weather presets. Optional upset recovery and a touch screen with wider instructor surface and adjustable seats complete the setup.

AIRLINER is a multipurpose hybrid simulator which is able to cover the following training scenarios: Multi Crew Cooperation (MCC), Advanced Pilot Standard (APS), airline selection, preparation and skills tests, aircraft complex systems operation, Line Oriented Flight Training (LOFT), type-rating preparation and familiarization, Upset Prevention Training (UPT). It is based on the hybrid Airbus A320/Boeing B737 philosophy, with versatility to adapt flight training and interchangeable cockpit configuration.

i-VISION by OPTIS is an immersive virtual environment for the design and validation of human-centered aircraft cockpits. It is the result of a project developed and applied to accelerate design, validation and training processes of prototyping aircraft cockpits. The applied methods are also exportable to cars, trucks, boats and trains. i-VISION includes three main technological components (Figure 7), which were integrated and validated in collaboration with relevant industrial partners:

- Human cockpit operations analysis module, with human factor methods demonstrated in the prototype of the project;

- Semantic virtual cockpit, with semantic virtual scene-graph and knowledge-based reasoning of objects and intelligent querying functions, providing a semantic-based scene-graph and human task data processing and management engine;

- Virtual cockpit design environment, with a virtual environment provided by human ergonomics evaluation software based upon the Airbus flight simulator, to develop a new general user interface for cockpits.

4.4. Integrated Simulators

MISSRAIL® and INNORAIL are tools developed entirely by the Université Polytechnique Hauts-de-France in Valenciennes [15,16,17]. They include four main modules (Figure 6): (1) railway infrastructure design module, (2) route design module, (3) driving cab simulation module, (4) control-command room simulation module. A version with an immersive helmet is also available.

Figure 6. MISSRAIL® interface for rail simulation.

MISSRAIL® is a client/server application which is capable of connecting different users at the same time. Several configurations are possible: wired, wireless, portable, fixed. Moreover, MISSRAIL® can be used to design various automated driving assistance tools (e.g., eco-driving assistance, collision avoidance system, cruise control and vigilance control system) and accident scenarios combining pedestrians, trains and cars (Figure 7) [17].

Figure 7. Train, car and pedestrian control simulation on MISSRAIL®.

5. Support Tools for Driver Assistance

5.1. Human Factors and Their Limits

Human control engineering can use several technological means to control human factors, such as workload, attention or vigilance; however, controversies exist about some of them [18]. For example, studies have highlighted that, independently of technology, vigilance can be improved by dieting or fasting [19], or even by chewing gum [20,21]. In addition, two kinds of technological support can influence the human cognitive state: passive tools, i.e., human–machine interaction supports, and active ones, which are capable of making decisions and acting accordingly. Examples include listening to music, which may improve concentration [22,23], and a dedicated decision support system, which can decrease workload by improving performance [24]. Moreover, due to disengagement from the driving situation under monotonous driving conditions, automation might lead operators to become more fatigued than they would during manual driving conditions [25,26].

A great deal of research has reported on the utility of using physiological, behavioral, auditory and vehicle data to detect the mental state of the driver, such as presence/sleep, drowsiness or cognitive workload, with considerable accuracy [27,28,29]. Diverse parameters can provide information on targeted mental states. Physiological measures include signals such as electroencephalogram (EEG) data, electrodermal activity (EDA), heart rate and heart rate variability. Behavioral measures include aspects such as head direction, head movement, gaze direction, pose of the upper part of the body, gaze dispersion, blinking, saccades, PERCLOS, pupil size, eyelid movement, postural adjustment and nonself-centered gestures. Such data may be combined in multimodal approaches with information on vehicle activity and auditory information. However, existing approaches still present clear limitations. EEG, for example, is hardly usable in real contexts due to possible discomfort, an unprovable performance and, in some cases, a high computational cost, which constrains implementation in real environments [30]. Note also that more traditional behavioral measures used in experimental psychology, such as the secondary task paradigm, have been shown to be quite useful in workload studies [31].

Results from studies on human factors in driver automation based on these techniques are often concerned with questions such as how users tackle automated driving and transitions between manual and automated control. Most such studies were motivated by the increasing prevalence of automated control in commercial and public transport vehicles, as well as increases in the degree of automation. Moreover, while automated driving significantly reduces workload, this is not the case for Adaptive Cruise Control (ACC) [32]. For instance, a study using a driving simulator and a vehicle with eye-tracking measures showed that the time required to resume control of a car is about 15 s, and that up to 40 s are needed to stabilize it [33].

Alarms comprising beeps are safer than those comprising sounds with positive or negative emotional connotations [34], and human performance can differ according to whether the means of interaction involve hearing or sight [35]. Moreover, interactions with visual or audio-visual displays are more efficient than those with auditory displays only [36]. In this sense, research on multimodal perception is particularly relevant when studying human factors of driver aid systems [37,38].

Other studies have not observed significant impacts of noise or music on human performance [39] and have even concluded that silence is able to increase attention during human disorder recovery conditions [40].

Moreover, the use of decision support systems can generate ambiguous results by leading to dissonances, affordances, contradictions or interferences with safety-critical behavior [41,42,43], potentially increasing hypo-vigilance and extending human response time [44]. As an example, the well-known Head-Up Display (HUD) system is useful for displaying important information without requiring drivers to move their gaze in several directions, but it is also a means of focusing attention upon a reduced control area [45]. It is, therefore, a tool to prevent accidents, but can also cause problems of focused attention.

Neuropsychological studies generally use sensors connected to the brain to assess neural activities related to cognitive processes, such as perception or problem solving. In this context, eye trackers have been demonstrated to be useful for the study of visual attention or workload via parameters such as closure percentage, blink frequency, fixation duration, etc. [46,47,48,49]. Indeed, the pupil diameter increases with the increasing demand of the performed task and with higher cognitive load [50], while an increase of physical demand does the opposite [51], as do external cues, such as variations of ambient light, use of drugs or strong emotions. Facial recognition is also incapable of detecting emotional dissonances between expressed and felt emotions. Moreover, eye blink frequency decreases as workload increases [52,53], but it increases when a secondary task is required [54,55].
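As an illustration of two of the eye-based parameters mentioned above, the following minimal sketch computes a closure percentage (PERCLOS) and a blink frequency from an eyelid-closure signal. The input format (a list of closure fractions between 0 for fully open and 1 for fully closed, sampled at a fixed rate), the function names and the 0.8 closure threshold are assumptions made for this example; the cited studies may use different definitions.

```python
def perclos(closure, threshold=0.8):
    """Fraction of samples in which the eyelid is at least `threshold` closed."""
    if not closure:
        raise ValueError("closure signal is empty")
    closed = sum(1 for c in closure if c >= threshold)
    return closed / len(closure)


def blink_rate_per_min(closure, sample_rate_hz, threshold=0.8):
    """Blinks per minute, counting each open-to-closed transition as one blink."""
    if not closure:
        raise ValueError("closure signal is empty")
    blinks = 0
    was_closed = closure[0] >= threshold
    for c in closure[1:]:
        is_closed = c >= threshold
        if is_closed and not was_closed:
            blinks += 1
        was_closed = is_closed
    duration_min = len(closure) / sample_rate_hz / 60.0
    return blinks / duration_min
```

Both functions would typically be applied to a sliding window (for example, the last 60 s of data) so that the values track changes in the driver's state over time.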

Eye-trackers can be useful to analyze overt or covert attention: when a subject looks at a point on a scene, the analysis of the corresponding eye movement supposes that the attention is focused on this point, whereas attention can also focus on other points without any eye movement [56].

Variations in heartbeat frequently correspond to variations in the level of workload, stress or emotion [57,58,59,60], but a new hypothesis considers that perceptive ability can depend on the synchronization between the frequency of dynamic events and heartbeats. Recent studies have demonstrated that flashing alarms synchronized with heart rate could reduce the solicitation of the insula, i.e., the part of the brain dedicated to perception, and the ability to detect them correctly [61,62]. This performance-shaping factor based on the synchronization of dynamic events with heartbeats is relevant for human error analysis.
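Variations in heartbeat are commonly summarized with standard heart rate variability statistics. The sketch below computes three of them (mean heart rate, SDNN and RMSSD) from a list of successive beat-to-beat (RR) intervals in milliseconds. The function name and input format are assumptions made for this example, and the choice of these three statistics is illustrative rather than taken from the cited studies.

```python
import math
import statistics


def hrv_features(rr_ms):
    """Return basic time-domain HRV features from RR intervals in milliseconds."""
    if len(rr_ms) < 3:
        raise ValueError("need at least three RR intervals")
    mean_rr = statistics.fmean(rr_ms)
    # Differences between successive RR intervals.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return {
        # Mean heart rate in beats per minute.
        "mean_hr_bpm": 60000.0 / mean_rr,
        # SDNN: sample standard deviation of the RR intervals.
        "sdnn_ms": statistics.stdev(rr_ms),
        # RMSSD: root mean square of successive differences.
        "rmssd_ms": math.sqrt(statistics.fmean([d * d for d in diffs])),
    }
```

For example, `hrv_features([800, 810, 790, 800])` corresponds to a mean heart rate of 75 beats per minute.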

The development of future smart tools to support driving tasks has to consider extended abilities, such as the ability:

- To explain results in a pedagogical way [17];

Significant advances for the prediction of driver drowsiness and workload have been made in association with the use of more sophisticated features of physiological signals, as well as from the application of increasingly sophisticated machine learning models, although extrapolation of such to the context of commercial pilots has not yet been attempted. Some approaches have been based on EDA signal decomposition into tonic and phasic components [66], extraction of features in time, frequency, and time-frequency (wavelet based) domains [67], or the use of signal-entropy related features [68].
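To make the idea of a tonic/phasic decomposition concrete, the sketch below separates an EDA signal into a slowly varying baseline (tonic) and the fast residual (phasic) using a centered moving median. This is a deliberately simple baseline chosen for illustration, not the decomposition method of [66]; the function name, the window length and the input format (a list of conductance samples at a fixed rate) are assumptions.

```python
import statistics


def decompose_eda(signal, sample_rate_hz, window_s=4.0):
    """Split an EDA signal into tonic (moving median) and phasic (residual) parts."""
    if not signal:
        raise ValueError("signal is empty")
    # Half-width of the centered window, in samples (at least one sample).
    half = max(1, int(window_s * sample_rate_hz / 2))
    tonic = []
    for i in range(len(signal)):
        lo = max(0, i - half)
        hi = min(len(signal), i + half + 1)
        # The median is robust to short peaks, so it follows the slow baseline.
        tonic.append(statistics.median(signal[lo:hi]))
    phasic = [s - t for s, t in zip(signal, tonic)]
    return tonic, phasic
```

Features such as the number or amplitude of phasic peaks per minute could then be extracted from the second component and fed to a classifier.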

Moreover, regarding machine-learning models, while the most widely used approach is the support vector machine, artificial neural networks, such as convolutional neural networks, seem to provide better performance for the detection of drowsiness and workload [69,70,71].

The combination of such approaches with multimodal data fusion has been shown to provide a very high degree of accuracy for drowsiness detection [72].

Such approaches are applicable to overcome some of the current limitations in the detection of drowsiness and mental workload in pilots. For instance, the high accuracy accomplished with only a subset of the signals suggests that various predictive models of drowsiness and workload could be trained based on different subsets of features, thereby helping to keep the system useful even when some specific features are momentarily unavailable (e.g., due to occlusion of the eyes or head). Recent advances can also help in the implementation of detection systems with lower computational cost, such as efficient techniques for signal filtering [73] and feature-selection methods to reduce model dimensionality and complexity [74].
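One simple way to keep a detector usable when some signals drop out is to let each modality produce its own score and to fuse only the scores that are currently available. The sketch below shows such a weighted late fusion. The modality names, the weights and the convention that a missing modality is reported as `None` are assumptions made for this example; it is not the fusion scheme used in [72].

```python
def fuse_available(scores, weights):
    """Weighted average of per-modality scores, skipping modalities that are None.

    `scores` maps a modality name to a drowsiness score in [0, 1] or to None when
    that modality is momentarily unavailable. `weights` maps names to weights;
    a modality without an entry gets weight 1.0. Returns None if nothing is available.
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for name, score in scores.items():
        if score is None:
            continue  # e.g., eyes occluded: ignore the eye-based model
        w = weights.get(name, 1.0)
        weighted_sum += w * score
        total_weight += w
    if total_weight == 0.0:
        return None
    return weighted_sum / total_weight


# Hypothetical weights: the eye-based model is trusted twice as much as the others.
WEIGHTS = {"eye": 2.0, "heart": 1.0, "eda": 1.0}
```

With `scores = {"eye": None, "heart": 0.4, "eda": None}`, the fused value falls back to the heart-based score alone, so the system degrades gracefully instead of failing.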

5.2. Gesture Control Technology

Many technologies to control devices by gestures are already on the market. An extended, though not comprehensive, summary of them is presented below.

DEPTHSENSE CARLIB, by Sony, aims to control infotainment by hand movement [75].

EYEDRIVE GESTURE CONTROL by EyeLights is an infrared motion sensor that recognizes simple hand gestures while driving in order to control in-vehicle devices [76].

HAPTIX by Haptix Touch is a webcam-based environment that recognizes any classical hand movement and builds a virtual mouse to control a screen interface [77].

KINECT by Microsoft is a webcam-based device that can capture motion and control devices with body or hand movements [78,79].

LEAP MOTION by Leap Motion Inc. (now UltraHaptics) is an environment for hand movement recognition dedicated to virtual reality. Movement detection is by infrared light, while micro-cameras detect the hands or other objects in 3D [80].

MYO BRACELET by Thalmic Labs proposes an armband to control interfaces with hand or finger movement detected via the electrical activities of activated muscles [74,81,82].

SOLI by Google comprises a mini-radar which is capable of identifying movements, from fingers to the whole body [83,84].

SWIPE by FIBARO is dedicated to home automation; it is controlled via hand motions in front of a simple, contactless tablet [85].

XPERIA TOUCH DEVICE by Sony is a smartphone application for gesture control which is capable of tracking nearby hand gestures via the phone camera [86].

Table 1 summarizes a Strengths, Weaknesses, Opportunities and Threats (SWOT) analysis of three of the systems described above: KINECT, LEAP MOTION and MYO BRACELET. The analysis was developed starting from the results of similar studies [87].

Table 1. SWOT analysis of three gesture control technologies.

| | KINECT | LEAP MOTION | MYO BRACELET |
|---|---|---|---|
| Strengths | | | |
| Weaknesses | | | |
| Opportunities | | | |
| Threats | | | |

This entry is adapted from the peer-reviewed paper 10.3390/machines9020036
