When people communicate, information is sent, received, and interpreted between the sender and the receiver. The information exchange often results in a closed loop, with a back-and-forth transfer of information between sender and receiver. We refer to this as an “interaction”.

Rather than over a single, sequential channel, this information is often transmitted using multiple channels at once. The multiplicity of channels reduces the risk of interruptions, more so since the information channels are used in parallel, thereby increasing the seamlessness of the process. The redundancy afforded by multichannel communication increases the overall reliability of the communication, and because the decoding is distributed over a larger number of modalities and decoding modules such as sight, sound, and touch, the overall effort is reduced, even though in physical interaction there is a greater number of channels to decode.

- verbal and nonverbal communication

- interaction

- explicit and implicit interaction

- human to human interaction

- human to human communication

- human-robot interaction

- human-agent interaction

- human virtual-human interaction

- gaze

- proxemics

- physiological synchrony

1. Introduction

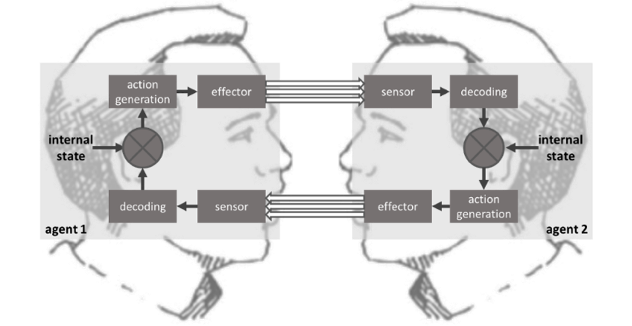

“Communication” refers to a process where information is sent, received, and interpreted. If this information exchange results in a reciprocal influence, i.e., a back and forth between sender and receiver, we refer to it as an “interaction”. Interaction is a socio-cognitive process in which we consider not only the other person but also the surroundings and the social situation. Such a process of interaction between two agents is illustrated in Figure 1. The arrows indicate the transfer of signals between interaction partners. Rather than over a single, sequential channel, information is often transmitted using multiple channels at once (as illustrated by the multiple arrows between the two agents in Figure 1).

Figure 1. The process of interaction between the two agents. Information is sensed and decoded. In conjunction with the internal state, received messages generate actions through the effector block.

In multi-channel communication, stimuli are simultaneously transmitted through different sensory modalities such as sight, sound, and touch. Characteristically, the individual modalities are used for transmitting more than a single message. In the auditory domain, the sender can simultaneously transmit language-based verbal information and prosodic information in the form of the tone of voice. Similarly, the visual domain can be used to transmit language-based messages in the form of sign language and lip-reading. The visual modality also affords a broad range of nonverbal channels in the form of eye gaze and facial expressions; people can make thousands of different facial expressions along with varied eye gaze, and each can communicate a different cue. Smiling, frowning, blinking, squinting, twitching an eyebrow, and flaring the nostrils are some of the most salient facial expressions. Beyond the face, other sources of kinesic information include gestures, head nods, posture, and physical distance. People often “talk using their hands”, emphasizing what they say with hand gestures. How people sit, stand, walk, etc., gives vital cues about how they are perceived by their interaction partners.

2. Human to Human Interaction

Human to human interaction is a seamless, effortless, and satisfactory process. Seamlessness characterizes a bi-directional interaction that happens continuously without interruptions or delays in communication between the interacting parties. For example, human to human interaction in the real physical world is more seamless than an exchange using a video-calling medium; video calling, in turn, is more seamless than talking over a telephone, and speaking on a telephone is more seamless than using a text-based interface. The importance of seamlessness is further supported by research suggesting that interaction delays on conference systems can have a negative impact on users[1]. In addition, delays can lead to people perceiving the responder as less friendly or less focused[2].

Doing any kind of task involves the use of mental resources and requires a certain “effort”. Interacting with others, for instance, requires an effort in order to send and receive information. Low-effort tasks typically require less attention, are easier to switch between, and can be done for a longer period. Studies have shown that face-to-face interaction takes less effort and is less tiring than a video chat[3].

Ultimately, most interactions, whether with humans or with computers, have a purpose. Hence, for an interaction to be deemed “satisfactory”, the purpose of the interaction should be achieved with minimal effort and in a minimum amount of time. Voice-based assistants are an example: users spend more time communicating the same information to a computer than to another human, because they tend to talk slowly and precisely to avoid the need for repetition. Though the purpose of the interaction might eventually be fulfilled, the time taken is longer, which makes the interaction inefficient and, in many cases, leads the user to perceive it as less satisfactory.

3. Dimensions of Human to Human Interaction

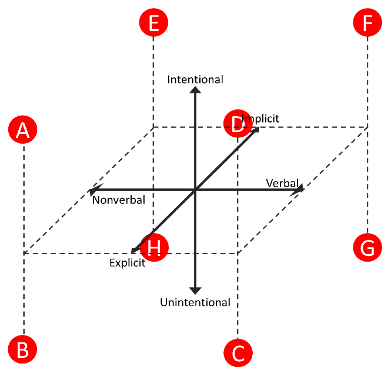

Human to human interaction can be classified along the three dimensions of verbal–nonverbal, intentional–unintentional and implicit–explicit (Figure 2). We have already discussed the dichotomy of verbal vs. nonverbal communication. While in an intentional communicative act the sender is aware of what they want to transmit, unintentional signals are sent without a conscious decision by the sender. Deictic movements to indicate direction are a typical example of the former, while blushing is an example of the latter.

Figure 2. Communication can be classified along the three dimensions of verbal–nonverbal, implicit–explicit, and intentional–unintentional. Head nods during an interaction are an example of nonverbal, explicit, and unintentional communication (A). “Freudian slips” fall in the unintentional, verbal and explicit quadrant (C). An instruction such as “bring me a glass of water” is verbal-explicit-intentional (D). Irony is an example of communication that combines verbal-implicit-intentional (F) with simultaneous nonverbal–implicit–intentional communication (E). Nonverbal–implicit–unintentional cues (H) are closely related to emotion and, therefore, associated with physiological reactions such as sweating and blushing. While some of the examples are straightforward, others seem to be more complicated, e.g., expressions of anger or sadness are often explicit, nonverbal, and unintentional (B).

The third dimension of implicit vs. explicit is defined by the degree to which symbols and signs are used. Explicit interaction is a process that uses an agreed system of symbols and signs, the prime example being the use of spoken language and emblematic gestures. Conversely, implicit interaction is where the content of the information is suggested independent of the agreed system of symbols and signs[4][5]. In an implicit interaction, the sender and the receiver infer each other’s communicative intent from the behaviour.
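The three dimensions can also be read as a small data model in which every signal carries one value per dimension. The following Python sketch is only an illustration of that reading: the class and field names (`Modality`, `Coding`, `Intent`, `Signal`) are hypothetical and not taken from the source, while the three example signals and their classifications are the ones given in the text and in the caption of Figure 2.

```python
from dataclasses import dataclass
from enum import Enum


class Modality(Enum):
    VERBAL = "verbal"
    NONVERBAL = "nonverbal"


class Coding(Enum):
    EXPLICIT = "explicit"  # relies on an agreed system of symbols and signs
    IMPLICIT = "implicit"  # intent is inferred from behaviour


class Intent(Enum):
    INTENTIONAL = "intentional"
    UNINTENTIONAL = "unintentional"


@dataclass(frozen=True)
class Signal:
    """One communicative signal, placed on each of the three dimensions."""
    description: str
    modality: Modality
    coding: Coding
    intent: Intent

    def label(self) -> str:
        # e.g. "verbal-explicit-intentional"
        return f"{self.modality.value}-{self.coding.value}-{self.intent.value}"


# Examples as classified in the text above.
EXAMPLES = [
    Signal('"bring me a glass of water"', Modality.VERBAL, Coding.EXPLICIT, Intent.INTENTIONAL),
    Signal("Freudian slip", Modality.VERBAL, Coding.EXPLICIT, Intent.UNINTENTIONAL),
    Signal("blushing", Modality.NONVERBAL, Coding.IMPLICIT, Intent.UNINTENTIONAL),
]

for signal in EXAMPLES:
    print(f"{signal.description}: {signal.label()}")
```

Treating each dimension as a two-valued field yields the eight combinations shown in Figure 2; real signals may of course sit between the poles rather than exactly on them.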

4. Interaction Configurations

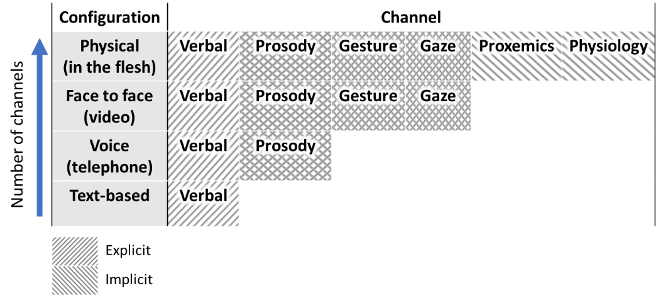

Interactions take place in different configurations (Figure 3). The configurations are distinguished by the number of channels and by the use of implicit/explicit, verbal/nonverbal and intentional/unintentional messages.

Figure 3. Overview of the relationship between communication configuration, number of channels, and channel properties. The number of channels decreases from physical world interaction to video-calling to voice-calling to texting.

The illustration makes it clear why physical interaction trumps text-based interaction: Multiple parallel channels reduce the risk of interruptions, increasing the seamlessness of the interaction[6]. The redundancy afforded by multichannel communication increases the overall reliability of the communication, and because the decoding is distributed over a larger number of modalities and decoding modules, e.g., for explicit and implicit messages, the overall effort is reduced, even though in physical interaction there is a greater number of channels to decode[7][8][9]. Ultimately, the efficiency of the communication and the ability to keep track of the goal achievement progress leads to a more satisfactory interaction.
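The reliability argument can be made concrete with a toy calculation. The sketch below assumes, purely for illustration, that the same message is carried redundantly on every channel and that channels fail independently; neither assumption comes from the source, and the failure probabilities and channel counts are hypothetical numbers, not measurements.

```python
from math import prod


def delivery_probability(channel_failure_probs):
    """Probability that at least one channel delivers the message.

    Simplifying assumption: channels fail independently of each other.
    """
    return 1.0 - prod(channel_failure_probs)


# Hypothetical per-channel failure probabilities, chosen only for illustration.
text_only = [0.2]
voice_call = [0.2, 0.2]              # e.g. words plus tone of voice
face_to_face = [0.2, 0.2, 0.2, 0.2]  # e.g. words, tone, gaze, gesture

for name, probs in [("text", text_only),
                    ("voice call", voice_call),
                    ("face-to-face", face_to_face)]:
    print(f"{name}: {delivery_probability(probs):.4f}")
```

Under these assumptions, each additional redundant channel raises the chance that the message gets through, which is the intuition behind the ordering in Figure 3. The sketch says nothing about decoding effort, which the text treats separately.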

5. The Role of Implicit Interaction

The implicit cues that people send and receive in an interaction are often unintentional and very subtle, to the point that neither the sender nor the receiver is aware of them. However, physiological measurements such as galvanic skin response (GSR, i.e., skin conductance) and heart rate can reveal the effect of these implicit cues, which exert a reciprocal influence on the interaction partners. This can result in a tight coupling of behaviours in the interaction partners. An example is rhythmic clapping, where individuals readily synchronize their behaviour to match stimuli, i.e., to match the neighbour’s claps that precede their clap[10]. A similar, implicit and unintentional form of reciprocal interaction is the synchronizing of physiological states, where both interaction partners fall into a rhythm. This interdependence of physiological activity in interaction partners is known as physiological synchrony[11]. Physiological synchrony is pivotal in nonverbal human–human interaction and helps build and maintain rapport between people[12]. Studies have shown that romantic partners exhibit physiological synchrony in the form of heart rate adaptation[13][14]. Mothers and their infants coordinate their heart rhythms during social interactions. This visuo-affective social synchrony is believed to have a direct effect on the attachment process[15]. In psychology, the phenomenon where interaction partners unintentionally and unconsciously mimic each other’s speech patterns, facial expressions, emotions, moods, postures, gestures, mannerisms, and idiosyncratic movements is known as the “Chameleon Effect”[16]. Interestingly, this mimicking has the effect of increasing the sense of rapport and liking between the interaction partners[12].
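One simple way to illustrate what “falling into a rhythm” could look like in data is a lagged correlation between two heart-rate series. The sketch below is an assumption made for illustration and not the method of the cited studies: the function names and the heart-rate values are hypothetical, and the second series is constructed to echo the first one sample later.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length, non-constant sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def lagged_synchrony(a, b, max_lag):
    """Return (best_lag, correlation); a positive lag means b follows a."""
    best = (0, pearson(a, b))
    for lag in range(1, max_lag + 1):
        candidates = (
            (lag, pearson(a[:-lag], b[lag:])),   # b trails a by `lag` samples
            (-lag, pearson(a[lag:], b[:-lag])),  # a trails b by `lag` samples
        )
        for candidate in candidates:
            if candidate[1] > best[1]:
                best = candidate
    return best


# Hypothetical heart-rate samples in beats per minute. partner_b is built to
# echo partner_a one sample later (plus one beat), apart from its first value.
partner_a = [62, 64, 69, 73, 70, 66, 63, 65, 71, 74]
partner_b = [70, 63, 65, 70, 74, 71, 67, 64, 66, 72]

lag, r = lagged_synchrony(partner_a, partner_b, max_lag=2)
print(f"best lag: {lag} sample(s), correlation: {r:.3f}")
```

For this constructed pair, the highest correlation is found at a lag of one sample, meaning the second partner follows the first. Real recordings are noisy and non-stationary, so a single correlation value of this kind should be read as a rough indicator only.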

6. Conclusions

This entry considered human to human interaction, from direct to mediated interaction, to highlight that interaction is a bi-directional and multichannel process in which the actions of one partner influence the actions of the other. The result is a reciprocal action that the receiver takes in response to the sender’s cues. These cues are transmitted over multiple channels that can be classified along the dimensions of verbal–nonverbal, explicit–implicit, and intentional–unintentional.

The use of multiple channels in an interaction is so effortless that people do not realise they are using all these channels while interacting. The use of the implicit channel is often unintentional, which further reduces the effort required to interact and makes the process more efficient. This is supported by research suggesting that incorporating implicit cues makes the interaction unambiguous[17] and efficient[18]. These unintentional and implicit cues are frequently the drivers of physiological responses and are therefore pivotal in an interaction. In human to human interaction, implicit cues lead to a change in physiological state, with people imitating their partner’s physiology, which increases rapport and bonding between them.

This entry is adapted from the peer-reviewed paper 10.3390/mti4040081

References

- M. Glowatz, D. Malone, and I. Fleming, “Information systems implementation delays and inactivity gaps: The end user perspectives,” ACM Int. Conf. Proceeding Ser., vol. 04-06-Dece, pp. 346–355, 2014, doi: 10.1145/2684200.2684295.

- K. Schoenenberg, A. Raake, and J. Koeppe, “Why are you so slow? - Misattribution of transmission delay to attributes of the conversation partner at the far-end,” Int. J. Hum. Comput. Stud., vol. 72, no. 5, pp. 477–487, 2014, doi: 10.1016/j.ijhcs.2014.02.004.

- M. Jiang, “The reason Zoom calls drain your energy,” BBC, 2020. https://www.bbc.com/worklife/article/20200421-why-zoom-video-chats-are-so-exhausting (accessed Apr. 27, 2020).

- R. Abbott, “Implicit and Explicit Communication.” https://www.streetdirectory.com/etoday/implicit-andexplicit-communication-ucwjff.html (accessed Jul. 20, 2020).

- “Implicit and Explicit Rules of Communication: Definitions & Examples,” 2014. https://study.com/academy/lesson/implicit-and-explicit-rules-of-communication-definitions-examples.html (accessed Jul. 03, 2020).

- W. Severin, “Another look at cue summation,” Audio-v. Commun. Rev., vol. 15, pp. 233–245, 1967, doi: 10.1007/BF02768608.

- D. Moore, J. Burton, and R. Myers, “Multiple-channel communication: The theoretical and research foundations of multimedia,” in Handbook of research for educational communications and technology, 2nd ed., D. H. Jonassen, Ed. Lawrence Erlbaum Associates Publishers, 2004, pp. 979–1005.

- P. Baggett and A. Ehrenfeucht, “Encoding and retaining information in the visuals and verbals of an educational movie,” Educ. Commun. Technol. J., vol. 31, pp. 23–32, 1983, doi: 10.1007/BF02765208.

- R. E. Mayer, “Multimedia learning,” Psychol. Learn. Motiv. - Adv. Res. Theory, vol. 41, pp. 85–139, 2002, doi: 10.5926/arepj1962.41.0_27.

- M. Thomson, K. Murphy, and R. Lukeman, “Groups clapping in unison undergo size-dependent error-induced frequency increase,” Sci. Rep., vol. 8, no. 1, pp. 1–9, 2018, doi: 10.1038/s41598-017-18539-9.

- R. V. Palumbo et al., “Interpersonal Autonomic Physiology: A Systematic Review of the Literature,” Personal. Soc. Psychol. Rev., vol. 21, no. 2, pp. 99–141, 2017, doi: 10.1177/1088868316628405.

- J. L. Lakin, V. E. Jefferis, C. M. Cheng, and T. L. Chartrand, “The chameleon effect as social glue: Evidence for the evolutionary significance of nonconscious mimicry,” J. Nonverbal Behav., vol. 27, pp. 145–162, 2003.

- M. P. McAssey, J. Helm, F. Hsieh, D. A. Sbarra, and E. Ferrer, “Methodological advances for detecting physiological synchrony during dyadic interactions,” Methodology, vol. 9, no. 2, pp. 41–53, 2013.

- E. Ferrer and J. L. Helm, “Dynamical systems modeling of physiological coregulation in dyadic interactions,” Int. J. Psychophysiol., vol. 88, pp. 296–308, 2013.

- R. Feldman, R. Magori-Cohen, G. Galili, M. Singer, and Y. Louzoun, “Mother and infant coordinate heart rhythms through episodes of interaction synchrony,” Infant Behav. Dev., vol. 34, pp. 569–577, 2011.

- T. L. Chartrand and J. A. Bargh, “The chameleon effect: The perception-behavior link and social interaction,” J. Pers. Soc. Psychol., vol. 76, pp. 893–910, 1999, doi: 10.1037/0022-3514.76.6.893.

- J. A. Adams, P. Rani, and N. Sarkar, “Mixed-Initiative Interaction and Robotic Systems,” in AAAI Workshop on Supervisory Control of Learning and Adaptative Systems, 2004, pp. 6–13, [Online]. Available: https://pdfs.semanticscholar.org/6d9d/0d9d096dbebb2d6a7734cbf21b454fe77b3a.pdf.

- E. L. Blickensderfer, R. Reynolds, E. Salas, and J. A. Cannon-Bowers, “Shared expectations and implicit coordination in tennis doubles teams,” J. Appl. Sport Psychol., vol. 22, no. 4, pp. 486–499, 2010.