The problem of autonomous navigation of a ground vehicle in unstructured environments is both challenging and crucial for the deployment of this type of vehicle in real-world applications. We present a review of recent contributions in the robotics literature that adopt learning-based methods to solve the problem of environment perception and interpretation, with the final aim of the autonomous context-aware navigation of ground vehicles in unstructured environments.

- unmanned ground vehicle navigation

- end-to-end navigation

- terrain traversability analysis

- machine learning paradigms

- deep learning for robotics

- off-road navigation

1. Introduction

Unmanned Ground Vehicles (UGVs) are probably the first type of modern autonomous mobile platform to have appeared in robotics research. An early example of an autonomous intelligent mobile wheeled robot dates back to 1949, when W. Grey Walter designed and built the so-called electro-mechanical tortoises, driven by biologically inspired control [1]. Since then, field robotics research has reached several milestones, ranging from the first semi-autonomous planetary rovers [2] to autonomous off-road driverless cars [3]. However, in spite of the rather long history of ground mobile robots, several issues remain open in the autonomous navigation of such vehicles in so-called unstructured environments. According to [4], unstructured environments are those that have not been modified beforehand to simplify the execution of a task by a robot. In this work, the term refers to operating environments that lack clearly viable paths or landmarks useful for supporting the autonomous navigation of the vehicle.

A significant body of literature on autonomous ground navigation has focused mainly on structured indoor environments. In recent years, an important stimulus for research in outdoor environments has come from self-driving cars, which have now become a well-established reality [5,6]. Unlike the indoor scenario, where autonomous navigation has already reached a high technology readiness level (TRL), the goal of a reliable, fully autonomous car cannot yet be considered accomplished, even in the urban scenario, where lane markings or curbs can support the identification of drivable paths. Clearly, other aspects must be addressed in this context, arising mainly from traffic rules and, more importantly, from the coexistence in the same operating environment of many other external agents and vehicles of different types.

In this survey, we focus on the problem of autonomous ground vehicle navigation in unstructured environments, which is both challenging and crucial for the deployment of this type of vehicle in real-world applications. Several well-established robotics research communities deal with such scenarios, including search and rescue robotics, planetary exploration, and agricultural robotics. Perception plays a crucial role in this context, since it provides the information the vehicle needs to be aware of its own status and of its surrounding environment, so that it can interact with that environment properly. The term perception encompasses the overall sensing system, including both the sensing devices and the methods for extracting high-level information from the raw data.

According to this definition, we present a review of recent contributions in the robotics literature that adopt learning-based methods to solve the problem of environment perception and interpretation, with the final aim of the autonomous context-aware navigation of ground vehicles in unstructured environments. We therefore focus on navigation solutions that do not rely on detecting artificial landmarks or typical features of structured environments, such as corridor boundaries in mazes or indoor office-like environments, road lanes, and so on. Several examples for these structured scenarios are available in the literature [7,8,9,10,11,12].

Furthermore, although machine learning has been adopted for decades in off-road autonomous navigation, these methods have recently gained new momentum thanks to the general increase in computing power and the introduction of novel types of sensors on board the vehicles. Moreover, the recent breakthroughs in artificial intelligence and deep learning have driven advances in several learning paradigms, opening up novel solutions for interpreting sensory data and, more importantly in the robotics context, for defining control policies. These methods represent a relevant research stream that deserves further exploration.

The main contribution of this work is a broad, high-level review of the main learning-based approaches to the autonomous navigation of ground vehicles in unstructured environments. To the best of our knowledge, this is the first work attempting such a review with the aim of organising this wide spectrum of approaches. We do not claim to provide a comprehensive survey of all the solutions reported in the literature, as this would require an almost prohibitive effort. Through this review, we wish to help researchers working on this topic obtain a clear overview of the most relevant methods currently available in the literature, and easily identify the most suitable approach for the problem at hand.

2. Related Works and Survey Boundaries

Several surveys and reviews in the literature address the navigation of ground robots, in both structured and unstructured environments, or general machine learning methods for robotics. The works by Chhaniyara et al. [13] and Papadakis [14] provide an early overview of so-called terrain traversability analysis (TTA) methods for UGV navigation over rough terrain. Terrain traversability analysis can be roughly defined as the problem of estimating how difficult it is for a ground vehicle to drive through a given terrain. This approach is conceptually different from a classical obstacle avoidance problem: most of the time no physical obstacle is actually present, and a trade-off between different paths must be made instead. Chhaniyara et al. focus their review on rovers for planetary exploration and on the characterization of soil mechanical properties. Papadakis, on the other hand, provides a general overview of TTA, ranging from classical geometry-based approaches, through proprioceptive and appearance-based methods, to hybrid approaches. Although early learning-based methods are presented, the paper was written before the recent breakthrough of deep learning, especially in computer vision, which has also brought a sudden proliferation of alternatives to classical machine learning approaches.
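The difference from binary obstacle avoidance can be illustrated with a minimal sketch, assuming a hypothetical grid of per-cell traversal costs in which no cell is an obstacle. Dijkstra's algorithm is used here only as a standard way to compare candidate paths; the grid values and the function name `min_cost_path` are illustrative assumptions, not taken from the reviewed works.

```python
import heapq


def min_cost_path(cost, start, goal):
    """Dijkstra on a 4-connected grid; entering cell (r, c) costs cost[r][c].

    Hypothetical helper for illustration: every cell is traversable, so the
    search trades off path length against terrain difficulty.
    """
    rows, cols = len(cost), len(cost[0])
    dist = {start: 0.0}
    prev = {}
    frontier = [(0.0, start)]
    while frontier:
        d, cell = heapq.heappop(frontier)
        if cell == goal:
            break
        if d > dist.get(cell, float("inf")):
            continue  # stale queue entry, a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols:
                nd = d + cost[nr][nc]
                if nd < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = nd
                    prev[(nr, nc)] = cell
                    heapq.heappush(frontier, (nd, (nr, nc)))
    # Walk the predecessor links back from the goal to rebuild the path.
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return dist[goal], path[::-1]


# Hypothetical traversal costs: 1.0 = firm ground, 5.0 = mud; no obstacles.
terrain = [
    [1.0, 1.0, 1.0, 1.0, 1.0],
    [1.0, 5.0, 5.0, 5.0, 1.0],
    [1.0, 5.0, 5.0, 5.0, 1.0],
]
total, path = min_cost_path(terrain, start=(1, 0), goal=(1, 4))
print(total, path)
```

Although every cell is free, the cheapest route (total cost 6) detours along the firm top row instead of crossing the mud directly (total cost 16): the decision is a trade-off between path length and terrain difficulty rather than a yes/no collision check.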

The work by Grigorescu et al. [15], instead, deals specifically with deep learning methods. It gives a detailed perspective on autonomous cars, including decision-making solutions, neural network architectures, and the hardware employed. However, it is essentially confined to the urban on-road scenario. Other very recent surveys of deep learning methods and applications, again in the self-driving car domain, are also available [16,17]. The work by Kuutti et al. [16] is mainly concerned with vehicle control rather than perception, whereas the one by Ni et al. [17], similarly to [15], presents a wide review of the deep learning-based solutions used in self-driving urban vehicle tasks, such as object detection, lane recognition, and path planning. Another work focused on deep learning-based control techniques, for both manipulation and navigation, is that by Tai et al. [18].

A remarkable review of general learning methods for mobile robot navigation is given by Wulfmeier [19]. This work reports a broad discussion on the adoption of machine learning in each module of the navigation pipeline, from perception and mapping to planning and control. However, this work is also mainly oriented towards the urban scenario.

A very recent work by Hu et al. [20] provides an interesting overview of sensing technologies and sensor fusion techniques for off-road environments. Although perception is the core topic of this work, it does not emphasize recent and novel learning-based methods. Finally, other reviews deal with specific learning paradigms, such as learning from demonstration [21,22] or reinforcement learning [23], without particular attention to navigation.

Boundaries

The main goal of this paper is to summarize and classify those solutions leveraging machine learning for the perception and comprehension of unstructured environments for the autonomous navigation of unmanned ground vehicles. According to this goal and for the sake of conciseness, this review purposefully does not include:

- multi-robot approaches, as many other aspects arise in those works, ranging from shared knowledge of the surrounding world to multi-platform coordination and management;

- works based exclusively on environment interaction or proprioceptive data (e.g., analysis of vehicle vibrations or attitude). We focus on environment perception in order to define a traversable path before actually crossing it;

- works dealing purely with path planning algorithms. We include only those solutions where the characteristics of the environment are taken into account;

- platform-specific works not including terrain information, such as prediction of the vehicle’s power consumption or low-level traction or motion control.

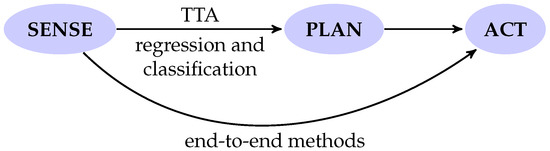

Recalling the well-known sense-plan-act paradigm in autonomous robot navigation, we include essentially all those works where at least the very first step, i.e., sense, is addressed. Therefore, we include works that also perform planning without moving on to the act step, as well as solutions embedding all three steps in a single monolithic block. There is an unavoidable partial overlap between perception and control, and the distinction between the two is becoming increasingly blurred.

Although this scope might look excessively broad, and some of the included approaches may even appear distant from one another, we believe that, since the reported solutions share a common starting point, i.e., the unstructured nature of the perceived environment, it is possible to identify aspects that can be adapted to the different downstream tasks, namely planning and low-level control. We argue that a side-by-side comparison of such solutions can provide useful insights for the reader.

Regarding the survey methodology, the works included in this review were selected from papers collected over the last few years from the main scientific databases (namely Scopus, Web of Science, and Google Scholar). The search terms were ground vehicle, unstructured environments, learning, and traversability. In addition, a thorough search was carried out through the references cited in the reported works.

3. Categorization

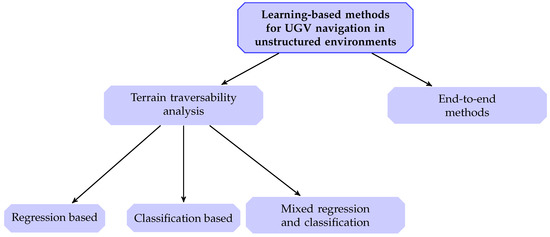

Within the boundaries described in the previous section, two broad categories of learning-based methods for autonomous navigation in unstructured environments can be identified:

- methods based on terrain traversability analysis (TTA);

- end-to-end methods.

These two categories are deeply different. As already partially introduced in Section 2, by traversability we refer to the capability of a ground vehicle to stably reach a terrain region; this capability depends on (1) the terrain model, (2) the vehicle model, (3) the kinematic constraints of the vehicle and, in some cases, (4) some optimization criteria [14]. Methods relying on TTA can in turn be divided into two main approaches: (1) regression of traversal costs and (2) terrain classification. Following machine learning terminology, the former provides a continuous measure encoding the traversal cost (i.e., the expected difficulty the UGV would experience during the traversal), whereas the latter aims at identifying terrain classes with navigation-relevant properties in the environment. Beyond this strict dichotomy, some works adopt a mixed regression-classification approach to TTA. In an autonomous navigation framework, a further processing step is needed to translate the outcome of the traversability assessment into actual motion.
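The two TTA flavours can be contrasted with a minimal sketch. Everything in it is an assumption for illustration: the two geometric features, the toy training values, the class names and their costs (`CLASS_COST`), and the choice of a linear least-squares regressor and a nearest-centroid classifier as deliberately simple stand-ins for the learning methods reviewed in this survey.

```python
import numpy as np

# Hypothetical per-cell features: [slope (degrees), roughness (cm)]
X_train = np.array([[2.0, 1.0], [5.0, 2.0], [10.0, 5.0], [20.0, 10.0], [25.0, 15.0]])
# Hypothetical continuous supervision: normalised traversal effort from past drives
cost_train = np.array([0.1, 0.2, 0.4, 0.8, 1.0])
# Hypothetical categorical supervision: terrain class of the same cells
labels_train = np.array(["flat", "flat", "gravel", "steep", "steep"])


def with_bias(X):
    """Prepend a column of ones so the linear model has an intercept."""
    return np.hstack([np.ones((X.shape[0], 1)), X])


# (1) Regression of traversal costs: least-squares linear model.
def fit_cost_regressor(X, y):
    w, *_ = np.linalg.lstsq(with_bias(X), y, rcond=None)
    return w


def predict_cost(w, X):
    return with_bias(X) @ w


# (2) Terrain classification: nearest-centroid classifier.
def fit_centroids(X, labels):
    return {str(c): X[labels == c].mean(axis=0) for c in np.unique(labels)}


def classify(centroids, X):
    names = list(centroids)
    C = np.stack([centroids[n] for n in names])
    # Euclidean distance from every query cell to every class centroid.
    dists = np.linalg.norm(X[:, None, :] - C[None, :, :], axis=2)
    return [names[i] for i in dists.argmin(axis=1)]


# Classification alone yields labels; a hypothetical lookup turns them into
# costs so that a planner can consume either output as a cost map.
CLASS_COST = {"flat": 0.1, "gravel": 0.4, "steep": 0.9}

w = fit_cost_regressor(X_train, cost_train)
centroids = fit_centroids(X_train, labels_train)

X_query = np.array([[3.0, 1.5], [21.0, 11.0]])
regressed = predict_cost(w, X_query)  # continuous traversal costs
classes = classify(centroids, X_query)  # discrete terrain labels
class_costs = [CLASS_COST[c] for c in classes]
print(regressed, classes, class_costs)
```

Both branches end in a per-cell cost, which is the kind of output the further processing step (e.g., a planner) consumes; the regressor produces it directly, while the classifier needs an extra label-to-cost mapping.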

Conversely, end-to-end methods directly map raw environment perception and/or vehicle state (i.e., raw exteroceptive and proprioceptive sensory data) to navigation control actions, thus fusing the perception and control parts of the navigation framework into a single block. From this perspective, end-to-end methods may also be seen as a particular case of TTA-based methods, since they include both TTA and control. Although end-to-end methods might appear to offer a simpler and more direct solution, they also have some drawbacks, as discussed in Section 6. Recalling again the sequential sense-plan-act pipeline, Figure 1 shows where TTA and end-to-end methods are placed. Finally, for both categories we distinguish between classical learning and deep learning-based solutions. Figure 2 shows the adopted categorization scheme. In the following sections we make extensive use of acronyms, especially in summary tables; to help the reader, a list is provided in the Abbreviations section at the end of this manuscript.
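The structural difference shown in Figure 1 can be sketched as follows, assuming a toy observation made of per-sector readings in front of the vehicle. The function names, the five-sector layout and the tiny two-layer network are hypothetical; the network weights are random here, whereas a real end-to-end policy would learn them, for instance by imitation or reinforcement learning.

```python
import numpy as np

rng = np.random.default_rng(0)


# ---- Modular (TTA-based) pipeline: sense -> plan -> act ----
def sense(raw_obs):
    """Hypothetical TTA stand-in: turn per-sector readings into costs in [0, 1]."""
    return np.clip(raw_obs, 0.0, 1.0)


def plan(costs):
    """Pick the heading sector with the lowest traversal cost."""
    return int(np.argmin(costs))


def act(sector, n_sectors):
    """Map the chosen sector index to a steering command in [-1, 1]."""
    return 2.0 * sector / (n_sectors - 1) - 1.0


def modular_controller(raw_obs):
    costs = sense(raw_obs)  # explicit, inspectable traversability estimate
    return act(plan(costs), len(costs))


# ---- End-to-end policy: raw observation -> control in one block ----
class EndToEndPolicy:
    """Tiny two-layer network mapping observations to (steering, throttle).

    Weights are random for illustration; in practice they would be learned.
    """

    def __init__(self, n_inputs, n_hidden=16):
        self.W1 = rng.normal(scale=0.5, size=(n_hidden, n_inputs))
        self.b1 = np.zeros(n_hidden)
        self.W2 = rng.normal(scale=0.5, size=(2, n_hidden))
        self.b2 = np.zeros(2)

    def __call__(self, raw_obs):
        h = np.tanh(self.W1 @ raw_obs + self.b1)
        steering, throttle = np.tanh(self.W2 @ h + self.b2)  # both in (-1, 1)
        return float(steering), float(throttle)


obs = np.array([0.9, 0.2, 0.7, 0.4, 0.8])  # hypothetical readings, 5 sectors
print(modular_controller(obs))
policy = EndToEndPolicy(n_inputs=len(obs))
print(policy(obs))
```

In the modular controller, the intermediate cost vector produced by `sense` can be inspected and reused by other planners, whereas the end-to-end policy exposes no explicit traversability estimate between its input and its commands.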

Figure 1. Terrain traversability analysis (TTA) and end-to-end methods comparison.

Figure 2. Categorization method adopted in this survey. (UGV—Unmanned Ground Vehicle).

References

- Holland, O. The first biologically inspired robots. Robotica 2003, 21, 351–363.

- Wilcox, B.; Nguyen, T. Sojourner on Mars and Lessons Learned for Future Planetary Rovers. In SAE Technical Paper; SAE International: Danvers, MA, USA, 1998.

- Buehler, M.; Iagnemma, K.; Singh, S. The 2005 DARPA Grand Challenge: The Great Robot Race; Springer: Berlin, Germany, 2007; Volume 36.

- Brock, O.; Park, J.; Toussaint, M. Mobility and manipulation. In Springer Handbook of Robotics; Springer: Cham, Switzerland, 2016; pp. 1007–1036.

- Paden, B.; Čáp, M.; Yong, S.Z.; Yershov, D.; Frazzoli, E. A Survey of Motion Planning and Control Techniques for Self-Driving Urban Vehicles. IEEE Trans. Intell. Veh. 2016, 1, 33–55.

- Badue, C.; Guidolini, R.; Carneiro, R.V.; Azevedo, P.; Cardoso, V.B.; Forechi, A.; Jesus, L.; Berriel, R.; Paixão, T.M.; Mutz, F.; et al. Self-driving cars: A survey. Expert Syst. Appl. 2021, 165, 113816.

- Bojarski, M.; Testa, D.D.; Dworakowski, D.; Firner, B.; Flepp, B.; Goyal, P.; Jackel, L.D.; Monfort, M.; Muller, U.; Zhang, J.; et al. End to End Learning for Self-Driving Cars. arXiv 2016, arXiv:1604.07316.

- Liu, G.H.; Siravuru, A.; Prabhakar, S.; Veloso, M.; Kantor, G. Learning End-to-end Multimodal Sensor Policies for Autonomous Navigation. arXiv 2017, arXiv:1705.10422.

- Gao, W.; Hsu, D.; Lee, W.S.; Shen, S.; Subramanian, K. Intention-Net: Integrating Planning and Deep Learning for Goal-Directed Autonomous Navigation. arXiv 2017, arXiv:1710.05627.

- Pfeiffer, M.; Schaeuble, M.; Nieto, J.; Siegwart, R.; Cadena, C. From perception to decision: A data-driven approach to end-to-end motion planning for autonomous ground robots. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 1527–1533.

- Mirowski, P.; Pascanu, R.; Viola, F.; Soyer, H.; Ballard, A.J.; Banino, A.; Denil, M.; Goroshin, R.; Sifre, L.; Kavukcuoglu, K.; et al. Learning to Navigate in Complex Environments. arXiv 2017, arXiv:1611.03673.

- Devo, A.; Costante, G.; Valigi, P. Deep Reinforcement Learning for Instruction Following Visual Navigation in 3D Maze-Like Environments. IEEE Robot. Autom. Lett. 2020, 5, 1175–1182.

- Chhaniyara, S.; Brunskill, C.; Yeomans, B.; Matthews, M.; Saaj, C.; Ransom, S.; Richter, L. Terrain trafficability analysis and soil mechanical property identification for planetary rovers: A survey. J. Terramechanics 2012, 49, 115–128.

- Papadakis, P. Terrain Traversability Analysis Methods for Unmanned Ground Vehicles: A Survey. Eng. Appl. Artif. Intell. 2013, 26, 1373–1385.

- Grigorescu, S.; Trasnea, B.; Cocias, T.; Macesanu, G. A survey of deep learning techniques for autonomous driving. J. Field Robot. 2020, 37, 362–386.

- Kuutti, S.; Bowden, R.; Jin, Y.; Barber, P.; Fallah, S. A Survey of Deep Learning Applications to Autonomous Vehicle Control. IEEE Trans. Intell. Transp. Syst. 2020, 1–22.

- Ni, J.; Chen, Y.; Chen, Y.; Zhu, J.; Ali, D.; Cao, W. A Survey on Theories and Applications for Self-Driving Cars Based on Deep Learning Methods. Appl. Sci. 2020, 10, 2749.

- Tai, L.; Zhang, J.; Liu, M.; Boedecker, J.; Burgard, W. A Survey of Deep Network Solutions for Learning Control in Robotics: From Reinforcement to Imitation. arXiv 2018, arXiv:1612.07139.

- Wulfmeier, M. On Machine Learning and Structure for Mobile Robots. arXiv 2018, arXiv:1806.06003.

- Hu, J.w.; Zheng, B.y.; Wang, C.; Zhao, C.h.; Hou, X.l.; Pan, Q.; Xu, Z. A survey on multi-sensor fusion based obstacle detection for intelligent ground vehicles in off-road environments. Front. Inf. Technol. Electron. Eng. 2020, 21, 675–692.

- Hussein, A.; Gaber, M.M.; Elyan, E.; Jayne, C. Imitation Learning: A Survey of Learning Methods. ACM Comput. Surv. 2017, 50.

- Ravichandar, H.; Polydoros, A.; Chernova, S.; Billard, A. Recent Advances in Robot Learning from Demonstration. Annu. Rev. Control. Robot. Auton. Syst. 2020, 3.

- Kober, J.; Peters, J. Reinforcement Learning in Robotics: A Survey. In Learning Motor Skills: From Algorithms to Robot Experiments; Springer International Publishing: Cham, Switzerland, 2014; pp. 9–67.

This entry is adapted from the peer-reviewed paper 10.3390/s21010073