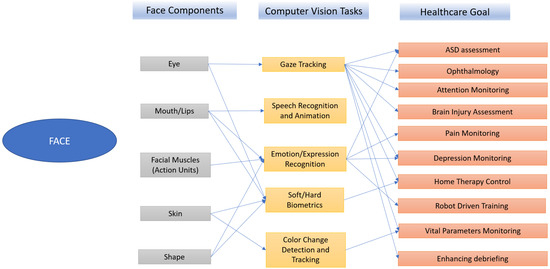

This paper gives an overview of the cutting-edge approaches that perform facial cue analysis in the healthcare area. The document is not limited to global face analysis; it also covers methods related to local cues (e.g., the eyes). A research taxonomy is introduced by dividing the face into its main features: eyes, mouth, muscles, skin, and shape. For each facial feature, the computer vision-based tasks that analyze it and the related healthcare goals that could be pursued are detailed.

- face analysis

- healthcare

- assistive technology

- computer vision

- ambient assisted living technologies

The face conveys very rich information that is critical in many aspects of everyday life. Facial appearance is the primary means of identifying a person. It plays a crucial role in communication and social relations: a face can reveal age, sex, race, and even social status and personality. Skilled observation of the face is also relevant in the diagnosis and assessment of mental or physical diseases. A patient’s facial appearance may indeed provide diagnostic clues to the illness, the severity of the disease, and some of the patient’s vital signs [1][2]. For this reason, since the earliest studies on automatic image processing, researchers have investigated the possibility of automatically analyzing the face, both to speed up these processes and make them independent of human error and of the caregiver’s skill level, and to build new assistive applications.

One of the earliest and most investigated topics in the computer vision community, and one that is still quite active today, is face detection: its primary goal is to determine whether or not there are any faces in the image and, if so, where the corresponding image regions are. Several new methods have emerged in recent years, and they have improved the accuracy of face detection to the point that it can be considered a solved problem in many real applications, even if the detection of partially occluded or unevenly illuminated faces is still a challenge. The most advanced approaches for face detection have been reviewed in [3][4].

Face detection is the basic step for almost all the algorithmic pipelines that in some way aim at analyzing facial cues. The subsequent computer vision approaches involved in face-related algorithmic pipelines are instead still under investigation, and details about recent advancements can be found in several survey papers on face analysis from the technological point of view. They cover algorithmic approaches for biometric identification [5][6] (even in the presence of plastic surgery alterations [7], occlusions [8], or distortion, low resolution, and noise [9]), facial muscle movement analysis [10], and emotion recognition [11].
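The role of face detection as the first stage of a pipeline can be illustrated with a minimal sketch. It is not taken from any of the surveyed works: the names `FaceRegion`, `run_pipeline`, `toy_detector`, and `mean_intensity` are hypothetical, and the detector is a trivial stand-in for a real one. The sketch only shows the data flow, in which the detector answers whether faces are present (an empty list means none) and where they are, and every downstream analyzer works on the detected regions.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Assumption: a grayscale image is a list of rows of pixel intensities.
Image = List[List[int]]


@dataclass(frozen=True)
class FaceRegion:
    """Axis-aligned bounding box of a detected face (hypothetical type)."""
    x: int
    y: int
    width: int
    height: int


def crop(image: Image, region: FaceRegion) -> Image:
    """Return the pixels of the image that fall inside the region."""
    rows = image[region.y:region.y + region.height]
    return [row[region.x:region.x + region.width] for row in rows]


def run_pipeline(
    image: Image,
    detector: Callable[[Image], List[FaceRegion]],
    analyzers: Dict[str, Callable[[Image], object]],
) -> List[Dict[str, object]]:
    """Detect faces first, then run every analyzer on each detected face."""
    results = []
    for region in detector(image):  # an empty list means "no face found"
        face = crop(image, region)
        record: Dict[str, object] = {"region": region}
        for name, analyze in analyzers.items():
            record[name] = analyze(face)
        results.append(record)
    return results


def toy_detector(image: Image) -> List[FaceRegion]:
    """Stand-in detector: reports the whole non-empty image as one face."""
    if not image or not image[0]:
        return []
    return [FaceRegion(0, 0, len(image[0]), len(image))]


def mean_intensity(face: Image) -> float:
    """Stand-in analyzer: average pixel intensity of the face crop."""
    pixels = [p for row in face for p in row]
    return sum(pixels) / len(pixels)


if __name__ == "__main__":
    image = [[0, 10], [20, 30]]
    print(run_pipeline(image, toy_detector, {"mean_intensity": mean_intensity}))
```

In a real system the stand-in detector would be replaced by an actual face detector and the analyzers by cue-specific models; passing them as callables keeps the detection stage independent of the analysis that follows it.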

Looking closely at the works in the literature, it is possible to identify three different levels on which methodological progress moves forward. The first level, which evolves very fast and has therefore produced solutions that reach outstanding accuracy and robustness on benchmark datasets, concerns theoretical research. It mainly deals with the study and introduction of novel neural models, more effective training strategies, and more robust features. At this level, classical classification topics such as object recognition are addressed.

The second level, namely applied research, tries instead to leverage theoretical findings to solve more specific, but still cross-contextual, issues such as robust facial landmark detection, facial action unit estimation, human pose estimation, anomaly detection in video sequences, and so on.

Finally, the third level involves on-field research, which leverages the outcomes of theoretical and applied research to solve contextual issues, i.e., issues related to healthcare, autonomous driving, sports analysis, security, safety, and so on. In context-related research, technological aspects are only a part of the issues to be addressed in order to obtain an effective framework. Often, domain-specific challenges have to be addressed by a multidisciplinary team of researchers who have to find the best trade-off between domain-related constraints and available technologies. This is even more true in the healthcare scenario, as deployment has to take into account how the final users (i.e., physicians, caregivers, or patients) will use the technology and, to do that, clinical, technological, social, and economic aspects have to be weighed [12]. For instance, recent face analysis systems (e.g., those that perform facial emotion recognition) have reached outstanding accuracy by exploiting deep learning techniques. Unfortunately, they have been trained on typically developed persons, and they cannot be used as supplied to evaluate the ability to perform facial expressions in the case of cognitive or motor impairments [13]. In other words, existing approaches may require re-engineering to handle the specific tasks involved in healthcare services. This has to be carried out by drawing on life science knowledge as well as biological, medical, and social background. At the same time, the demand for smart, interactive healthcare services is increasing, as several challenging issues (such as accurate diagnosis, remote monitoring, and cost–benefit rationalization) cannot be effectively addressed by established stakeholders.

From the above, it emerges that it would be very useful to summarize works in the literature that, by exploiting computer vision and machine learning, address specific issues related to healthcare applications. This paper is motivated by the lack of such works in the literature, and its main goal is to fill this gap. In particular, the main objectives of this survey are

- to give an overview of the cutting-edge approaches that perform facial cue analysis in the healthcare area;

- to identify the critical aspects that govern the transfer of knowledge among academic, applied, and healthcare research;

- to pave the way for further research in this challenging domain, starting from the latest findings in machine learning and computer vision; and

- to point out benchmark datasets specifically built for the healthcare scenario.

The document is not limited to global face analysis; it also covers methods related to local cues. A research taxonomy is introduced by dividing the face into its main features: eyes, mouth, muscles, skin, and shape. For each facial feature, the computer vision-based tasks that analyze it and the related healthcare goals that could be pursued are detailed. This leads to the scheme in Figure 1.

Figure 1. A scheme introducing a coarse taxonomy for face analysis in healthcare.

This entry is adapted from the peer-reviewed paper 10.3390/info11030128

References

- Ross, M.A.; Graff, L.G. Principles of observation medicine. Emergency Medicine Clinics 2001, 19, 1–17.

- Leo, M.; Farinella, G.M. Computer Vision for Assistive Healthcare, 1st ed.; Academic Press Ltd.: GBR, 2018.

- J W Healy; What is hazardous? What is safe? Environmental Health Perspectives 1978, 27, 317-321, 10.1289/ehp.7827317.

- Kumar, A.; Kaur, A.; Kumar, M. Face detection techniques: a review. Artificial Intelligence Review 2019, 52, 927–948.

- Alireza Sepas-Moghaddam; Fernando M. Pereira; Paulo Lobato Correia; Face recognition: a novel multi-level taxonomy based survey. IET Biometrics 2020, 9, 58-67, 10.1049/iet-bmt.2019.0001.

- Iacopo Masi; Yue Wu; Tal Hassner; Prem Natarajan; Deep Face Recognition: A Survey. 2018 31st SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI) 2018, 471-478, 10.1109/sibgrapi.2018.00067.

- Tanupreet Sabharwal; Rashmi Gupta; Le Hoang Son; Raghvendra Kumar; Sudan Jha; Recognition of surgically altered face images: an empirical analysis on recent advances. Artificial Intelligence Review 2018, 52, 1009-1040, 10.1007/s10462-018-9660-0.

- Fadhlan Hafizhelmi Kamaru Zaman; Amir Akramin Shafie; Yasir Mohd Mustafah; Robust face recognition against expressions and partial occlusions. International Journal of Automation and Computing 2016, 13, 319-337, 10.1007/s11633-016-0974-6.

- Shyam Singh Rajput; K. V. Arya; Vinay Singh; Vijay Kumar Bohat; Face Hallucination Techniques: A Survey. 2018 Conference on Information and Communication Technology (CICT) 2018, 1-6, 10.1109/infocomtech.2018.8722416.

- Giovanni Maria Farinella; Marco Leo; Gerard G. Medioni; Mohan Trivedi; Learning and recognition for assistive computer vision. Pattern Recognition Letters 2019, in press, https://doi.org/10.1016/j.patrec.2019.11.006.

- Marco Leo; Antonino Furnari; Gerard G. Medioni; Mohan Trivedi; Giovanni Maria Farinella; Deep Learning for Assistive Computer Vision. Leal-Taixé L., Roth S. (eds) Computer Vision – ECCV 2018 Workshops. ECCV 2018. Lecture Notes in Computer Science 2018, 11134, 3-14, 10.1007/978-3-030-11024-6_1.

- Giovanni Maria Farinella; Marco Leo; Gerard G. Medioni; Mohan Trivedi; Learning and recognition for assistive computer vision. Pattern Recognition Letters 2019, in press, https://doi.org/10.1016/j.patrec.2019.11.006.

- Marco Leo; Marco Del Coco; Pierluigi Carcagni; Cosimo Distante; Giuseppe Bernava; Giovanni Pioggia; Giuseppe Palestra; Automatic Emotion Recognition in Robot-Children Interaction for ASD Treatment. 2015 IEEE International Conference on Computer Vision Workshop (ICCVW) 2015, 537-545, 10.1109/iccvw.2015.76.
