Space exploration and society: Space exploration is a pivotal industry for shaping the future, and its significance grows year after year. Space technologies act as catalysts for innovation and permeate nearly every facet of modern life. Space exploration has become an indispensable, integral part of essential sectors such as digitalization, telecommunications, energy, raw materials, logistics, and environmental, transportation, and security technologies, to name just a few. Spillover effects of advances in space exploration extend into diverse realms, making it a foundation of innovative nations. The demand for satellites, payloads, and secure means of transporting them into space is booming. Besides the traditional institutional spaceflight organizations, small and medium-sized enterprises and startups increasingly shape the commercial nature of space exploration. Projections indicate that by 2030, global value creation in space exploration will reach one trillion euros (for comparison, current global value creation in the automotive industry is just below three trillion euros). Public investments are considered judicious, as each euro spent on space exploration (e.g., Germany: 22 euros per capita; France: 45; USA: 76, or 166 including military expenditures) is expected to generate four-fold direct and nine-fold indirect value creation [1].

Space mission peculiarities: Preparing a mission, engineering and manufacturing the system, and finally operating it can take decades. Many missions rely on a single spacecraft, an expensive one-of-a-kind (and also first-of-its-kind) device comprising custom-built, state-of-the-art components. For many missions, there is no second chance if the launch fails or if the spacecraft is damaged or lost for whatever reason, e.g., because a second flight unit is never built (cf. [2]), because of the long flight time to a destination, or because of a rare planetary constellation that occurs only every few decades. Small-series launchers, multi-satellite systems, or satellite constellations, which naturally provide some redundancy, can of course be exceptions to some degree. Yet failure costs can still be very high, and the loss of system parts can still have severe consequences.

Space supply chains: Spaceflight supply chains are known to be large and highly complex. They encompass multiple tiers of suppliers and other forms of technology accommodation, and they involve international, inter-agency, and cross-institutional cooperation spanning governmental agencies, private corporations, research institutions, scientific principal investigators, subcontractors, and regulatory bodies. The integration of advanced technologies, stringent quality control measures, and the inherent risks of space exploration add further layers of intricacy to these supply chains.

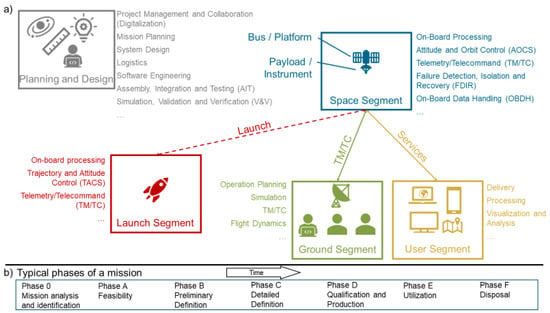

Space technology: A typical spaceflight system is divided into different segments (see Figure 1). The space segment is the spacecraft, which typically consists of an instrument that provides the functional or scientific payload and a satellite platform that provides the flight functions. The space segment is connected to its ground segment via radio links. Both segments are usually designed and built in parallel as counterparts to one another. Services are provided to the user segment either indirectly through the ground segment (e.g., Earth observation data) or directly through corresponding user devices (e.g., navigation signals, satellite television). Finally, the launch segment, actually a spacecraft in its own right, is the rocket that carries the space segment into space.

Figure 1. (a) Software as an indispensable part of a space system (i.e., space, launch, ground, and user segments) and for space project execution. As a subsystem, it assumes vital functions in all segments, acts as “glue” for the different parts, and is essential for engineering activities (cf. [3,4]). The different segments are shown in different colors. (b) Typical project execution phases of a mission from left to right.

The role of software for spaceflight: Today, space exploration cannot be imagined without software, across all lifecycle phases and in all of its segments and subsystems. Software is the “glue” [5] that enables the autonomous parts of systems of systems to work together, regardless of whether these parts are humans, spacecraft components, tools, or other systems. The digitalization of engineering, logistics, management, development, and other processes is only possible through software. Space exploration is renowned for huge masses of data that can only be handled and analyzed through software. The agile development mindset, which originated from and relies heavily on software, is the cornerstone of the New Space movement and its thriving business (cf. [6]). As the brain of each mission, software gives spacecraft the ability to survive autonomously, reacting automatically and instantly to adverse environmental effects and subsystem failures through failure detection, isolation, and recovery (FDIR). Moreover, software updates are currently the only way to make changes to a spacecraft after launch, e.g., to adapt to new objectives or hardware failures. Harland and Lorenz report numerous missions, such as TIMED (Thermosphere Ionosphere Mesosphere Energetics and Dynamics), TERRIERS (Tomographic Experiment using Radiative Recombinative Ionospheric EUV and Radio Sources), and ROSAT (Röntgensatellit), that ran into problems and were rescued through in-flight software patches. Failures, in hardware or software, are the norm [7]. Eberhard Rechtin, a respected authority in aerospace systems (and others, e.g., [8]), attested that software has great potential to save on mission and hardware costs, to add unprecedented value, and to improve reliability [9]. Given that the ratio between hardware and software costs in a mission had shifted from 10:1 to 1:2 since the 1980s, Rechtin predicted in 1997 that software would very soon be at the center of spacecraft systems [9], which the chair of the NASA Aerospace Safety Advisory Panel later confirmed:

“We are no longer building hardware into which we install a modicum of enabling software, we are actually building software systems which we wrap up in enabling hardware. Yet we have not matured to where we are uniformly applying rigorous systems engineering principles to the design of that software.”

(Patricia Sanders, quoted in [10])
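The FDIR principle mentioned above can be illustrated with a minimal Python sketch; it is not flight code. It assumes a hypothetical set of redundant units (e.g., two identical sensors): detection is a simple limit check on the active unit's reading, isolation marks that unit as failed, and recovery switches to the next healthy unit or, if none is left, enters a safe mode. The names `Unit` and `FdirMonitor` and the limit values are illustrative assumptions; real FDIR designs are mission-specific and considerably more elaborate.

```python
from dataclasses import dataclass, field


@dataclass
class Unit:
    """Hypothetical redundant unit, e.g., one of two identical sensors."""
    name: str
    healthy: bool = True


@dataclass
class FdirMonitor:
    """Minimal detect-isolate-recover loop over a set of redundant units."""
    units: list[Unit]
    low: float   # assumed lower plausibility limit of the measured quantity
    high: float  # assumed upper plausibility limit
    active: int = 0
    safe_mode: bool = False
    log: list[str] = field(default_factory=list)

    def detect(self, reading: float) -> bool:
        # Detection: flag a reading of the active unit that is out of limits.
        return not (self.low <= reading <= self.high)

    def isolate(self) -> None:
        # Isolation: mark the active unit as failed so it is no longer used.
        self.units[self.active].healthy = False
        self.log.append(f"isolated {self.units[self.active].name}")

    def recover(self) -> None:
        # Recovery: switch to the next healthy unit, or fall back to safe mode.
        for index, unit in enumerate(self.units):
            if unit.healthy:
                self.active = index
                self.log.append(f"switched to {unit.name}")
                return
        self.safe_mode = True
        self.log.append("entered safe mode")

    def step(self, reading: float) -> None:
        """Process one reading from the currently active unit."""
        if self.safe_mode:
            return
        if self.detect(reading):
            self.isolate()
            self.recover()


# Example run with hypothetical readings from whichever unit is active.
fdir = FdirMonitor(units=[Unit("sensor-A"), Unit("sensor-B")], low=-50.0, high=80.0)
for reading in [21.5, 22.0, 250.0, 23.1, -120.0]:
    fdir.step(reading)
print(fdir.log)
# ['isolated sensor-A', 'switched to sensor-B', 'isolated sensor-B', 'entered safe mode']
```

For brevity, a single out-of-limits sample triggers isolation here; real on-board monitoring commonly requires several consecutive violations before declaring a failure, in order to filter out transients.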

Distinctive features of flight software: Quite heterogeneous software is used in the various segments of a spaceflight system. While software for planning, design, the on-ground user segment, and data exploitation is more like a common information system, software executed onboard a spacecraft, in the launch and space segments, has its own peculiarities. Flight software exhibits the following characteristics:

- Lacks direct user interfaces, requiring interaction through uplink/downlink and complicating problem diagnosis;
- Autonomously manages various hardware devices for monitoring and control;
- Runs on slower, memory-limited processors, demanding specialized expertise from engineers;
- Must meet timing constraints for real-time processing: the right action performed too late is as bad as a wrong action [11].
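The timing constraint in the last item can be made concrete with a small Python sketch in which a result only counts if it is available by its deadline, so a correct value that arrives late is rejected just like a missing or wrong one. The names `TimedResult` and `accept` and the 10 ms cycle are hypothetical. Real flight software typically guarantees deadlines up front, through a real-time operating system and schedulability analysis, rather than by checking them after the fact as done here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TimedResult:
    value: Optional[float]  # computed command, e.g., a hypothetical actuator setpoint
    finished_at_ms: float   # completion time relative to the start of the control cycle


def accept(result: TimedResult, deadline_ms: float) -> bool:
    """Accept a result only if it exists and was produced by the deadline.

    A correct value that arrives after the deadline is rejected: in a hard
    real-time loop, it is as bad as a wrong value.
    """
    return result.value is not None and result.finished_at_ms <= deadline_ms


# Example: a hypothetical 10 ms control cycle.
on_time = TimedResult(value=0.42, finished_at_ms=7.5)
too_late = TimedResult(value=0.42, finished_at_ms=12.0)
print(accept(on_time, deadline_ms=10.0))   # True
print(accept(too_late, deadline_ms=10.0))  # False
```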

The role of software engineering: The NASA study on flight software complexity describes exponential growth in the amount of software in spacecraft, by a factor of ten every ten years, with software acting as a “sponge for complexity” [11]. At the same time, the cost share of software development in the space segment has remained rather constant [11]. Experiences with the reuse of already-flown software and the integration of pre-made software components (commercial off-the-shelf, COTS, software) are “mixed”, i.e., both good and bad, as reuse comes with its own problems [9,11,12]. If rigorously analyzing modified software is prohibitively expensive or beyond the state of the art, then a complete rewrite may be warranted [13]. Moreover, software is often not developed with reuse in mind [14], which limits its reuse. Consequently, software development on a per-mission basis remains important, and software engineers face a rapidly increasing density of software functionality per unit of development cost, which further emphasizes the importance of software engineering and of advances in the field. In fact, software engineering was invented as a reaction to the software crisis, which, put simply, means: bigger programs, bigger problems (cf. [15]). Nonetheless, software engineering struggles to gain acceptance among the traditional space engineering disciplines. Only a few project personnel, especially in management, understand its significance [16]. Some may see it as little more than a small piece of a subsystem or a physicist’s programming exercise:

“Spaceflight technology is historically and also traditionally located in the area of mechanical engineering. This means that software engineering is seen as a small auxiliary discipline. Some older colleagues do not even use the word ‘software’, but electronics.”

(Jasminka Matevska, [17])
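The growth rate reported by the NASA study on flight software complexity, a factor of ten every ten years, can be restated as an annual growth factor and a doubling time. The Python sketch below assumes idealized, smooth exponential growth, S(t) = S0 · 10^(t/10) with t in years; the starting size of 1000 lines is an arbitrary placeholder, not a figure from the study.

```python
import math


def annual_growth_factor(factor_per_decade: float = 10.0) -> float:
    """Per-year growth factor implied by a given growth factor per decade."""
    return factor_per_decade ** (1 / 10)


def doubling_time_years(factor_per_decade: float = 10.0) -> float:
    """Years needed for the code size to double under smooth exponential growth."""
    return 10 * math.log(2) / math.log(factor_per_decade)


def projected_size(start_sloc: float, years: float, factor_per_decade: float = 10.0) -> float:
    """Idealized code size after `years`, assuming S(t) = S0 * factor^(t/10)."""
    return start_sloc * factor_per_decade ** (years / 10)


print(f"{annual_growth_factor():.4f}")    # 1.2589, i.e., about 26% per year
print(f"{doubling_time_years():.2f}")     # 3.01 (years)
print(f"{projected_size(1000, 30):.0f}")  # 1000000 (three decades, three factors of ten)
```

In other words, under this idealization, the amount of flight software roughly doubles every three years.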

A side note on software product assurance: Together with project management and engineering, product assurance is one of three primary project functions in a spaceflight project. Product assurance focuses on quality; simplifying a bit, it aims at making the product reliable, available, maintainable, and safe (RAMS) and, more recently, also secure. It observes and monitors the project, witnesses tests, analyzes, and recommends, but it does not develop or test the product, manage people, or set product requirements. Instead, it has organizational and budgetary independence and reports only to the highest management. There are direct lines of communication between the product assurance personnel of the customer and those of its suppliers. Engineering and product assurance have software-specific forms, called software engineering and software product assurance, respectively (see [2]). However, these are organizational roles; the technical background of both is software engineering. So, when we speak of software engineering here, we mean the technical profession, not the organizational role. Both roles are essential to mission success.

Software cost: The flight software for major NASA missions such as the Mars Exploration Rover (launched in 2003) or the Mars Reconnaissance Orbiter (launched in 2005) had roughly 500,000 source lines of code (SLOC) [11]. As a rule of thumb, a comprehensive classic of space systems engineering [18] estimates development costs of $350 (ground) to $550 (unmanned flight) per new SLOC, whereas refitted code costs less. These costs already include the effort for software quality assurance. Another important cost factor of software is risk. As the famous computer scientist C.A.R. Hoare noted:

“The cost of removing errors discovered after a program has gone into use is often greater, particularly [… when] a large part of the expense is borne by the user. And finally, the cost of error in certain types of program may be almost incalculable—a lost spacecraft, a collapsed building, […].”
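Combining the code size and the per-SLOC rates given above yields a rough order of magnitude for flight software development cost. The Python sketch assumes that all 500,000 SLOC are newly developed at the quoted flight rate; the `reuse_share` and `reuse_cost_factor` parameters, and the scenario that uses them, are hypothetical additions for illustration only. The result inherits the price level of the cited rates and should be read as an estimate, not as actual mission cost.

```python
def development_cost(sloc: int, cost_per_new_sloc: float,
                     reuse_share: float = 0.0, reuse_cost_factor: float = 1.0) -> float:
    """Rough development cost estimate in dollars.

    sloc: total source lines of code.
    cost_per_new_sloc: cost per newly developed line (e.g., 350 ground, 550 flight).
    reuse_share: hypothetical fraction of lines that are refitted rather than new (0 to 1).
    reuse_cost_factor: hypothetical cost of a refitted line relative to a new one.
    """
    if not 0.0 <= reuse_share <= 1.0:
        raise ValueError("reuse_share must be between 0 and 1")
    new_lines = sloc * (1.0 - reuse_share)
    refitted_lines = sloc * reuse_share
    return cost_per_new_sloc * (new_lines + reuse_cost_factor * refitted_lines)


FLIGHT_SLOC = 500_000  # order of magnitude reported for MER and MRO flight software

all_new = development_cost(FLIGHT_SLOC, 550.0)
print(f"all new code: ${all_new / 1e6:.0f} million")  # all new code: $275 million

# Hypothetical scenario: half of the lines refitted at 30% of the cost of new code.
with_reuse = development_cost(FLIGHT_SLOC, 550.0, reuse_share=0.5, reuse_cost_factor=0.3)
print(f"with hypothetical reuse: ${with_reuse / 1e6:.0f} million")
```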

Software risks: The amount of software in space exploration systems is growing, and more and more critical functions are entrusted to software, the spacecraft’s “brain” [20]. Unsurprisingly, this means that software sometimes dooms large missions, causing significant delays or outright failures [16]. A single glitch can destroy equipment worth hundreds of millions of euros. According to Holzmann [21], a very good development process can achieve a defect rate as low as 0.1 residual defects per 1000 lines of code. Given the amount of code in a modern mission, hundreds of defects can still linger in the software after delivery. More importantly, however, there are countless ways in which these defects can contribute to Perrow-class failures (cf. [21,22]). In increasingly complex safety-critical systems, where each defect on its own is countered by carefully designed countermeasures, this “conspiring” of smaller defects and otherwise benign events can lead to system failures and major accidents, e.g., the loss of a spacecraft system, unreachable mission goals, or even human casualties [21]. But benign errors are not the only problem. In the spirit of Belady, sometimes the software-encoded human thoughts that are needed for the mindless machine to act on our behalf are simply missing [5]. According to Prokop (see Section 2), this appears to happen quite frequently [23]. Leveson concludes that software “allows us to build systems with a level of complexity and coupling that is beyond our ability to control” [13]. However, MacKenzie notes that software (across different domains) “has yet to experience its Tay Bridge disaster: a catastrophic accident, unequivocally attributable to a software design fault, in the full public gaze. But, of course, one can write that sentence only with the word ‘yet’ in it” [24].
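Holzmann’s rate translates into an expected number of residual defects by multiplying the code size, in thousands of lines, by the residual defect density. The Python sketch below applies 0.1 defects per 1000 lines to the roughly 500,000 SLOC mentioned above for MER and MRO, which yields about 50 residual defects, and to two larger code sizes that are assumptions chosen for illustration. Because the estimate scales linearly, “hundreds” of residual defects correspond to code bases of a few million lines; the result is only an order-of-magnitude estimate.

```python
def residual_defects(sloc: int, defects_per_kloc: float = 0.1) -> float:
    """Expected number of residual defects after delivery.

    The default of 0.1 defects per 1000 lines is the rate cited for a very
    good development process; it is an average, not a guarantee.
    """
    return sloc / 1000 * defects_per_kloc


# 500,000 SLOC is the order of magnitude given above for MER/MRO;
# the larger sizes are illustrative assumptions.
for sloc in (500_000, 1_000_000, 3_000_000):
    print(f"{sloc:>9,} SLOC -> about {residual_defects(sloc):.0f} residual defects")
# prints about 50, 100, and 300 residual defects, respectively
```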

System view of software failures: Due to system complexity and the interplay of defects and events, failures are often difficult to attribute to specific single sources. Furthermore, spacecraft failures are usually viewed from a spaceflight technology perspective, which, of course, is not wrong per se. But in this view, as discussed above, software is often seen only as “a modicum of enabling software”. For example, the guidance, navigation, and control (GNC) subsystem combines the attitude control system (ACS), the propulsion system, and software for on-orbit flight dynamics. (Many different terms are associated with this group of subsystems, and the terms actually used vary between authors. A selective definition is not attempted here, but Appendix A lists several terms and their possible relationships.) The ACS, in turn, includes sensors, actuators, and software. The GNC may fail due to software, hardware, or a sub-subsystem such as the ACS. But in the system view, an analysis often only concludes that the GNC failed. That the root cause is a software defect in the ACS is only recognized when the failure is viewed from a software perspective, or when asking “why” often enough. There is also the opposite case: some subsystems sound more like software, e.g., onboard data handling (OBDH), and some failure attributions seem obvious, like a “computer glitch” (e.g., Voyager 1, one of humanity’s oldest space probes and the most distant one, was recently jeopardized by a computer hardware failure [25], a stuck bit, and is now about to be software-patched). Failures in these subsystems are too easily attributed to software upon superficial analysis, although they can have hardware or system design causes.
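The difference between the system view and the software view can be sketched as a walk down a component tree. In the hypothetical Python model below, a GNC subsystem contains an ACS, a propulsion system, and flight dynamics software, and the ACS in turn contains sensors, actuators, and control software. A system-level report stops at the first failed subsystem, whereas repeatedly asking “why” descends to the deepest failed component. The tree, the component names, and the injected fault are illustrative assumptions, not data from any mission.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Component:
    name: str
    kind: str                 # simplified label: "system", "subsystem", "hardware", "software"
    failed: bool = False
    children: list[Component] = field(default_factory=list)


def system_view(root: Component) -> Optional[str]:
    """Report only the top-level failed subsystem, as a system-level analysis might."""
    for child in root.children:
        if child.failed:
            return child.name
    return None


def ask_why(node: Component, path: Optional[list[str]] = None) -> list[str]:
    """Descend through failed children until no failed child remains (the root cause)."""
    path = (path or []) + [f"{node.name} ({node.kind})"]
    for child in node.children:
        if child.failed:
            return ask_why(child, path)
    return path


# Hypothetical failure: a defect in the ACS control software propagates upward.
acs_sw = Component("ACS control software", "software", failed=True)
acs = Component("ACS", "subsystem", failed=True, children=[
    Component("star tracker", "hardware"),
    Component("reaction wheels", "hardware"),
    acs_sw,
])
gnc = Component("GNC", "subsystem", failed=True, children=[
    acs,
    Component("propulsion", "hardware"),
    Component("flight dynamics software", "software"),
])
spacecraft = Component("spacecraft", "system", failed=True, children=[gnc])

print(system_view(spacecraft))    # GNC
print(" -> ".join(ask_why(gnc)))  # GNC (subsystem) -> ACS (subsystem) -> ACS control software (software)
```

Real failure analyses are rarely this clean: several components may be implicated at once, in which case all failed branches would have to be examined rather than a single path.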

Types of software failures: There are many different kinds of software-related failures, and assigning a failure to a type often leaves room for interpretation. Of course, there are classical programming errors, e.g., syntax errors, an incorrect implementation of an algorithm, or a runtime failure that crashes the software. In most cases, however, software does not fail in this sense; rather, it does something wrong: it functions according to its specification, which is wrong in the given situation [13]. Is this a software failure, a design fault, both, or neither? Are validation and verification activities to blame for not finding the problem? Or was configuration management negligent? Moreover, MacKenzie finds that human–computer interaction is far deadlier than software design faults (90:3) [24]. Is it an operations failure if the human–computer interface is bad, or if bad configuration parameters are not protected? Is it a software failure if code or software-internal interfaces are poorly written, badly documented, or misleading to other developers? In spaceflight, the natural environment and hardware failures cause random events that software should be able to cope with, for instance, by rebooting or by isolating the failure. Is it a software failure if the software is not intelligent enough to handle such an event correctly, or if it does not even try? In fact, there is no commonly accepted failure taxonomy; a classic attempt at a taxonomy is, for example, that of Avizienis et al. [12]. Our collection of spaceflight mishaps shows how difficult attribution can sometimes be.
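As an illustration of how a taxonomy makes attribution questions explicit, the Python sketch below encodes the eight viewpoints that, as we understand it, underlie the elementary fault classes of Avizienis et al. (phase of creation or occurrence, system boundaries, phenomenological cause, dimension, objective, intent, capability, and persistence) as enumerations. The example classification of a fault in which software works as specified but the specification is wrong is our own hypothetical reading, not one taken from the cited work; other analysts may classify the same fault differently, which is precisely the difficulty described above.

```python
from dataclasses import dataclass, fields
from enum import Enum


class Phase(Enum):
    DEVELOPMENT = "development"
    OPERATIONAL = "operational"

class Boundary(Enum):
    INTERNAL = "internal"
    EXTERNAL = "external"

class Cause(Enum):
    NATURAL = "natural"
    HUMAN_MADE = "human-made"

class Dimension(Enum):
    HARDWARE = "hardware"
    SOFTWARE = "software"

class Objective(Enum):
    MALICIOUS = "malicious"
    NON_MALICIOUS = "non-malicious"

class Intent(Enum):
    DELIBERATE = "deliberate"
    NON_DELIBERATE = "non-deliberate"

class Capability(Enum):
    ACCIDENTAL = "accidental"
    INCOMPETENCE = "incompetence"

class Persistence(Enum):
    PERMANENT = "permanent"
    TRANSIENT = "transient"


@dataclass(frozen=True)
class FaultClass:
    """One elementary fault class: exactly one value per classification viewpoint."""
    phase: Phase
    boundary: Boundary
    cause: Cause
    dimension: Dimension
    objective: Objective
    intent: Intent
    capability: Capability
    persistence: Persistence

    def describe(self) -> str:
        # Join the enum values in field order for a compact, readable label.
        return ", ".join(getattr(self, f.name).value for f in fields(self))


# Hypothetical reading of "software works as specified, but the specification is wrong".
spec_fault = FaultClass(
    phase=Phase.DEVELOPMENT,
    boundary=Boundary.INTERNAL,
    cause=Cause.HUMAN_MADE,
    dimension=Dimension.SOFTWARE,
    objective=Objective.NON_MALICIOUS,
    intent=Intent.NON_DELIBERATE,
    capability=Capability.ACCIDENTAL,
    persistence=Persistence.PERMANENT,
)
print(spec_fault.describe())
# development, internal, human-made, software, non-malicious, non-deliberate, accidental, permanent
```

Whether the `dimension` of such a specification fault is really “software”, or whether it belongs to system design, is exactly the kind of judgment call the questions above raise.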

Contribution of this Entry: Newman [22] notes that it is only human nature that a systems engineer will see the causes of failure in systems engineering terms, a metallurgist will see them in metallurgy, etc. In this Entry, we therefore look at notable space exploration accidents from a software perspective, which is relatively underrepresented in space exploration. We focus on the following:

- Revisiting studies that investigated the role of software in a quantitative, or at least quantifiable, way, in order to give context and explain why a qualitative understanding of accidents is important (see Section 2);
- Reanalyzing the stories and contexts of selected software-related failures from a software background. We provide context, background information, references for further reading, and high-level technical insights to allow readers to make their own critical assessment of whether and how each incident relates to software engineering. This helps software practitioners and researchers grasp which areas of software engineering are affected (see Section 3);
- Concluding this Entry with an outlook on growing software-related concerns (see Section 4).

Understanding the causes and consequences of past accidents fosters a culture of safety and continuous improvement within the spaceflight engineering community. Anecdotal stories of accidents provide valuable insights into past failures, highlighting areas of concern, weaknesses in design or procedures, and lessons learned. They improve our knowledge and understanding of how software has contributed to space exploration accidents, and such knowledge is an important tool for success.

This entry is adapted from the peer-reviewed paper 10.3390/encyclopedia4020061