

Synthetic aperture radar (SAR) is a high-resolution imaging sensor widely deployed on platforms such as aircraft and satellites. In complex electromagnetic environments, radio frequency interference (RFI) severely degrades SAR image quality because its bandwidth varies widely and its emission sources are numerous and often unknown. Although conventional deep learning-based methods have achieved remarkable results by processing SAR images directly as ordinary visual images, the wide coverage and high intensity of RFI leave considerable room for improvement in their performance.

- synthetic aperture radar

- radio frequency interference suppression

- Transformer

- SAR imaging

1. Introduction

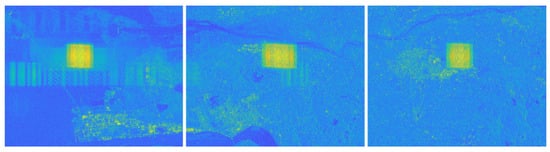

Synthetic aperture radar (SAR) is a high-resolution imaging radar whose resolution can surpass the diffraction limit of the aperture and even reach the centimeter level [1][2]. SAR satellites operating in various frequency bands have been widely deployed, including the European Sentinel satellites, the Chinese Haisi satellites, and the Canadian Radarsat satellites [3]. While SAR satellites hold significant economic value in ocean monitoring, geospatial mapping, and target recognition [4][5][6], they also face complex electromagnetic interference. Common interference sources include co-frequency radars, satellite communication systems, and radar jammers [7][8]. Radio frequency interference (RFI) is a prevalent form of such interference, and because of its high intensity and wide coverage, it severely degrades the quality of SAR images [9]. Figure 1 shows common RFI in Sentinel-1 imagery; the interference region typically exceeds 0.5 million pixels, corresponding to an area larger than 30 square kilometers.

Figure 1. Common RFI in Sentinel-1 satellites.

Cross-interference between satellites and military conflicts can effectively blind SAR satellites. Numerous methods have been proposed to address this problem. Depending on the stage at which they operate, radar anti-interference techniques can be divided into system-level and signal-level approaches. System-level techniques mainly use array antennas to cancel interference and have a long history of development. However, SAR is a single-antenna radar imaging system, and currently deployed SAR satellites generally lack system-level anti-interference capability. In addition, interference signals and radar signals overlap heavily in both time and frequency, so simple filtering algorithms are rarely effective. Signal-level anti-interference techniques therefore have important research value.

Traditional interference suppression methods can be broadly classified into three categories: non-parametric, parametric, and semi-parametric methods [10]. Non-parametric methods mainly include subspace projection [11][12][13] and notch filtering [14][15][16][17][18]. Although simple to implement, they offer no protection for targets. Parametric methods must model the RFI signals explicitly, so in complex imaging scenarios their performance is constrained by the model [19]. Instead of modeling the RFI directly, semi-parametric algorithms set up an optimization model that performs a matrix decomposition. Because they can effectively eliminate interference while preserving targets as far as possible, semi-parametric methods have received widespread attention. With the rise of compressive sensing, sparse reconstruction algorithms have gradually become commonly used semi-parametric methods [20]. The earliest proposals for mitigating RFI with iterative models were made by Nguyen [21] and Nguyen and Tran [22], who exploited the sparsity of scenes and the correlation between transmitted and received signals in the time domain and proposed a sparse recovery theory applicable to SAR images. By exploiting different characteristics in various domains (image, time, Doppler, wavelet, etc.), a range of iterative models has since been explored, including sparse models [23][24], low-rank models [25][26], joint sparse low-rank models [27][28][29][30][31], and variants of robust PCA [32][33]. Although these semi-parametric algorithms achieve excellent performance, they must be run iteratively on each individual data set with specifically chosen hyperparameters, resulting in high computational complexity and poor generalization ability.
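To make the non-parametric family concrete, the following is a minimal NumPy sketch of frequency-domain notch filtering on a toy one-dimensional signal. It is not taken from any of the cited works: the function name `notch_filter`, the median-based threshold, the `threshold_factor` value, and the synthetic echo and interference tone are all assumptions chosen for illustration.

```python
import numpy as np

def notch_filter(echo, threshold_factor=5.0):
    """Zero every spectral bin whose magnitude exceeds
    threshold_factor times the median magnitude (hypothetical rule)."""
    spectrum = np.fft.fft(echo)
    magnitude = np.abs(spectrum)
    mask = magnitude > threshold_factor * np.median(magnitude)
    spectrum[mask] = 0.0  # the notch: RFI and echo energy in the bin are both dropped
    return np.fft.ifft(spectrum), mask

rng = np.random.default_rng(0)
n = 1024
t = np.arange(n)

# Toy wideband "echo": unit-variance complex white noise standing in for the SAR return.
echo = (rng.standard_normal(n) + 1j * rng.standard_normal(n)) / np.sqrt(2)
# Toy narrowband RFI: a strong complex tone centred on FFT bin 100.
rfi = 20.0 * np.exp(2j * np.pi * 100 * t / n)

filtered, mask = notch_filter(echo + rfi)

# Relative error with respect to the clean echo, before and after filtering.
err_before = np.linalg.norm(rfi) / np.linalg.norm(echo)
err_after = np.linalg.norm(filtered - echo) / np.linalg.norm(echo)
print("notched bins:", np.flatnonzero(mask))
print("relative error before / after:", err_before, err_after)
```

Because the notch zeroes the whole bin, the echo energy in that bin is discarded together with the RFI. For a single narrowband tone the loss is small, but it grows with the bandwidth of the interference, which is one way to read the lack of target protection noted above.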

2. Interference Suppression Networks

Because SAR is a microwave imaging radar and RFI appears in its images much like noise, deep learning has naturally been introduced into SAR interference suppression. In the image domain, refs. [34][35] introduce residual networks and attention mechanisms into the networks. However, these algorithms do not exploit SAR principles and treat interference merely as noise, so they perform poorly when the interference intensity is high. In the time-frequency domain, ref. [36] was the first to apply neural networks to interference suppression. The authors of [37] propose injecting properties of RFI and SAR data into the network as prior knowledge; this algorithm outperforms traditional non-deep-learning methods (including semi-parametric methods) and serves as one of the main comparative methods in the paper this entry is adapted from.
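The time-frequency representation mentioned above can be illustrated with a short-time Fourier transform (STFT). The sketch below is a minimal NumPy example on synthetic data, not the pipeline of refs. [36][37]; the helper `stft`, the frame length, the hop size, and the toy signal are assumptions made for illustration.

```python
import numpy as np

def stft(signal, frame_len=64, hop=32):
    """Hann-windowed STFT; returns an array of shape (frame_len, n_frames),
    i.e. frequency bins along rows and time frames along columns."""
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([
        signal[i * hop : i * hop + frame_len] * window
        for i in range(n_frames)
    ])
    return np.fft.fft(frames, axis=1).T

rng = np.random.default_rng(1)
n = 2048
t = np.arange(n)

# Toy echo (complex white noise) plus a narrowband RFI tone at
# 0.25 cycles per sample, which falls on bin 0.25 * 64 = 16.
echo = (rng.standard_normal(n) + 1j * rng.standard_normal(n)) / np.sqrt(2)
rfi = 10.0 * np.exp(2j * np.pi * 0.25 * t)

tf = np.abs(stft(echo + rfi))

# Narrowband RFI shows up as a bright horizontal line in the
# time-frequency map: one frequency row dominates across all frames.
rfi_bin = int(np.argmax(tf.mean(axis=1)))
print("time-frequency map shape:", tf.shape)
print("dominant frequency bin:", rfi_bin)
```

In a map like `tf`, interference occupies a compact, structured region while the echo is spread across the plane, which is the kind of contrast a time-frequency network can exploit.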

3. Transformer

The Transformer has achieved great success in natural language processing (NLP), most visibly in the GPT series [38][39][40] and ChatGPT [41]. Unlike CNN architectures, Transformer-based networks use global attention mechanisms, which makes it easier to capture global information. Pretrained models based on BERT [42][43] have demonstrated state-of-the-art performance on various downstream NLP tasks. These results indicate that the Transformer has excellent feature extraction capabilities, which has naturally inspired its use in other fields such as computer vision. In visual tasks, the pioneering ViT [44][45] achieved state-of-the-art results in image classification.

Although the Transformer has shown great success in many tasks, it has two limitations in visual tasks. First, visual data are large and highly redundant, which results in significant computational costs. Second, local information is often important, but the Transformer lacks the ability to capture it. To address the first limitation, some works [46][47][48] explore local attention windows to reduce computational costs. Other works employ masking mechanisms and discard most of the masked pixels to reduce computational costs [49]. To address the second limitation, some works propose a pyramid Transformer [50][51] to enable multi-dimensional information interaction. Other works combine the Transformer with CNNs in the network architecture to capture both local and global information. Owing to these excellent feature extraction capabilities, a series of Transformer-based models have been proposed for high-dimensional visual tasks [52][53] and low-dimensional generative tasks [48][54].
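The cost argument behind local attention windows can be sketched in a few lines of NumPy. The example below is a simplification and not the design of refs. [46][47][48]: it uses a single head with identity query/key/value projections and non-overlapping one-dimensional token windows rather than two-dimensional image windows, and the function names, token count, and window size are assumptions.

```python
import numpy as np

def softmax(x):
    """Numerically stable softmax over the last axis."""
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def self_attention(tokens):
    """Single-head self-attention with identity Q/K/V projections.

    Returns the attended tokens and the number of attention scores computed.
    """
    d = tokens.shape[-1]
    scores = tokens @ np.swapaxes(tokens, -1, -2) / np.sqrt(d)
    return softmax(scores) @ tokens, scores.size

def window_attention(tokens, window):
    """Attention restricted to non-overlapping windows of `window` tokens."""
    n, d = tokens.shape
    if n % window != 0:
        raise ValueError("token count must be divisible by the window size")
    blocks = tokens.reshape(n // window, window, d)
    out, n_scores = self_attention(blocks)  # batched over windows
    return out.reshape(n, d), n_scores

rng = np.random.default_rng(0)
tokens = rng.standard_normal((1024, 16))  # e.g. a flattened 32 x 32 patch grid

global_out, n_global = self_attention(tokens)
local_out, n_local = window_attention(tokens, window=64)

# Global attention scores every token pair (N * N); windowed attention
# only scores pairs inside the same window (N * window).
print("global scores:", n_global)
print("windowed scores:", n_local)
print("reduction factor:", n_global // n_local)
```

The score count drops from quadratic in the number of tokens to linear for a fixed window size, at the price of each token seeing only its own window. That trade-off is why windowed designs are usually paired with some mechanism for exchanging information across windows or scales.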

This entry is adapted from the peer-reviewed paper 10.3390/rs16061013

References

- Zhu, X.X.; Bamler, R. Very High Resolution Spaceborne SAR Tomography in Urban Environment. IEEE Trans. Geosci. Remote Sens. 2010, 48, 4296–4308.

- Pu, W. Deep SAR Imaging and Motion Compensation. IEEE Trans. Image Process. 2021, 30, 2232–2247.

- Hou, X.; Ao, W.; Song, Q.; Lai, J.; Wang, H.; Xu, F. FUSAR-Ship: Building a high-resolution SAR-AIS matchup dataset of Gaofen-3 for ship detection and recognition. Sci. China-Inf. Sci. 2020, 63, 140303.

- Adeli, S.; Salehi, B.; Mahdianpari, M.; Quackenbush, L.J.; Brisco, B.; Tamiminia, H.; Shaw, S. Wetland Monitoring Using SAR Data: A Meta-Analysis and Comprehensive Review. Remote Sens. 2020, 12, 2190.

- Tebaldini, S.; Manzoni, M.; Tagliaferri, D.; Rizzi, M.; Monti-Guarnieri, A.V.; Prati, C.M.; Spagnolini, U.; Nicoli, M.; Russo, I.; Mazzucco, C. Sensing the Urban Environment by Automotive SAR Imaging: Potentials and Challenges. Remote Sens. 2022, 14, 3602.

- Li, M.-D.; Cui, X.-C.; Chen, S.-W. Adaptive Superpixel-Level CFAR Detector for SAR Inshore Dense Ship Detection. IEEE Geosci. Remote Sens. Lett. 2021, 19, 4010405.

- Li, N.; Lv, Z.; Guo, Z.; Zhao, J. Time-Domain Notch Filtering Method for Pulse RFI Mitigation in Synthetic Aperture Radar. IEEE Geosci. Remote Sens. Lett. 2021, 19, 4013805.

- Cai, Y.; Li, J.; Yang, Q.; Liang, D.; Liu, K.; Zhang, H.; Lu, P.; Wang, R. First Demonstration of RFI Mitigation in the Phase Synchronization of LT-1 Bistatic SAR. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5217319.

- Yang, H.; He, Y.; Du, Y.; Zhang, T.; Yin, J.; Yang, J. Two-Dimensional Spectral Analysis Filter for Removal of LFM Radar Interference in Spaceborne SAR Imagery. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5219016.

- Tao, M.; Su, J.; Huang, Y.; Wang, L. Mitigation of Radio Frequency Interference in Synthetic Aperture Radar Data: Current Status and Future Trends. Remote Sens. 2019, 11, 2438.

- Zhou, F.; Wu, R.; Xing, M.; Bao, Z. Eigensubspace-based filtering with application in narrow-band interference suppression for SAR. IEEE Geosci. Remote Sens. Lett. 2007, 4, 75–79.

- Yang, H.; Li, K.; Li, J.; Du, Y.; Yang, J. BSF: Block Subspace Filter for Removing Narrowband and Wideband Radio Interference Artifacts in Single-Look Complex SAR Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5211916.

- Zhou, F.; Tao, M.; Bai, X.; Liu, J. Narrow-Band Interference Suppression for SAR Based on Independent Component Analysis. IEEE Trans. Geosci. Remote Sens. 2013, 51, 4952–4960.

- Buckreuss, S.; Horn, R. E-SAR P-band SAR subsystem design and RF-interference suppression. In Proceedings of the IGARSS ’98. Sensing and Managing the Environment. 1998 IEEE International Geoscience and Remote Sensing Symposium Proceedings (Cat. No.98CH36174), Seattle, WA, USA, 6–10 July 1998; Volume 1, pp. 466–468.

- Cazzaniga, G.; Guarnieri, A.M. Removing RF interferences from P-band airplane SAR data. In Proceedings of the IGARSS ’96. 1996 International Geoscience and Remote Sensing Symposium, Lincoln, NE, USA, 31 May 1996; Volume 3, pp. 1845–1847.

- Reigber, A.; Ferro-Famil, L. Interference suppression in synthesized SAR images. IEEE Geosci. Remote Sens. Lett. 2005, 2, 45–49.

- Xu, W.; Xing, W.; Fang, C.; Huang, P.; Tan, W. RFI Suppression Based on Linear Prediction in Synthetic Aperture Radar Data. IEEE Geosci. Remote Sens. Lett. 2020, 18, 2127–2131.

- Fu, Z.; Zhang, H.; Zhao, J.; Li, N.; Zheng, F. A Modified 2-D Notch Filter Based on Image Segmentation for RFI Mitigation in Synthetic Aperture Radar. Remote Sens. 2023, 15, 846.

- Yi, J.; Wan, X.; Cheng, F.; Gong, Z. Computationally Efficient RF Interference Suppression Method with Closed-Form Maximum Likelihood Estimator for HF Surface Wave Over-The-Horizon Radars. IEEE Trans. Geosci. Remote Sens. 2012, 51, 2361–2372.

- Shi, J.R.; Zhen, X.Y.; Wei, Z.T.; Yang, W. Survey on algorithms of low-rank matrix recovery. Appl. Res. Comput. 2013, 30, 1601–1605.

- Nguyen, L.H.; Tran, T.; Do, T. Sparse Models and Sparse Recovery for Ultra-Wideband SAR Applications. IEEE Trans. Aerosp. Electron. Syst. 2014, 50, 940–958.

- Nguyen, L.H.; Tran, T.D. Efficient and Robust RFI Extraction Via Sparse Recovery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 2104–2117.

- Liu, H.; Li, D.; Zhou, Y.; Truong, T.-K. Joint Wideband Interference Suppression and SAR Signal Recovery Based on Sparse Representations. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1542–1546.

- Liu, H.; Li, D.; Zhou, Y.; Truong, T.-K. Simultaneous Radio Frequency and Wideband Interference Suppression in SAR Signals via Sparsity Exploitation in Time-Frequency Domain. IEEE Trans. Geosci. Remote Sens. 2018, 56, 5780–5793.

- Su, J.; Tao, H.; Tao, M.; Wang, L.; Xie, J. Narrow-Band Interference Suppression via RPCA-Based Signal Separation in Time–Frequency Domain. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 5016–5025.

- Tao, M.; Li, J.; Su, J.; Fan, Y.; Wang, L.; Zhang, Z. Interference Mitigation for Synthetic Aperture Radar Data using Tensor Representation and Low-Rank Approximation. In Proceedings of the 2020 33rd General Assembly and Scientific Symposium of the International Union of Radio Science, Rome, Italy, 29 August–5 September 2020.

- Joy, S.; Nguyen, L.H.; Tran, T.D. Radio frequency interference suppression in ultra-wideband synthetic aperture radar using range-azimuth sparse and low-rank model. In Proceedings of the 2016 IEEE Radar Conference (RadarConf), Philadelphia, PA, USA, 2–6 May 2016; pp. 433–436.

- Huang, Y.; Liao, G.; Li, J.; Xu, J. Narrowband RFI Suppression for SAR System via Fast Implementation of Joint Sparsity and Low-Rank Property. IEEE Trans. Geosci. Remote Sens. 2018, 56, 2748–2761.

- Lyu, Q.; Han, B.; Li, G.; Sun, W.; Pan, Z.; Hong, W.; Hu, Y. SAR interference suppression algorithm based on low-rank and sparse matrix decomposition in time–frequency domain. IEEE Geosci. Remote Sens. Lett. 2021, 19, 4008305.

- Huang, Y.; Zhang, L.; Yang, X.; Chen, Z.; Liu, J.; Li, J.; Hong, W. An Efficient Graph-Based Algorithm for Time-Varying Narrowband Interference Suppression on SAR System. IEEE Trans. Geosci. Remote Sens. 2021, 59, 8418–8432.

- Huang, Y.; Wen, C.; Chen, Z.; Chen, J.; Liu, Y.; Li, J.; Hong, W. HRWS SAR Narrowband Interference Mitigation Using Low-Rank Recovery and Image-Domain Sparse Regularization. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5217914.

- Huang, Y.; Zhang, L.; Li, J.; Hong, W.; Nehorai, A. A Novel Tensor Technique for Simultaneous Narrowband and Wideband Interference Suppression on Single-Channel SAR System. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9575–9588.

- Chen, S.; Lin, Y.; Yuan, Y.; Li, X.; Hou, L.; Zhang, S. Suppressive Interference Suppression for Airborne SAR Using BSS for Singular Value and Eigenvalue Decomposition Based on Information Entropy. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5205611.

- Wei, S.; Zhang, H.; Zeng, X.; Zhou, Z.; Shi, J.; Zhang, X. CARNet: An effective method for SAR image interference suppression. Int. J. Appl. Earth Obs. Geoinf. 2022, 114, 103019–103026.

- Li, X.; Ran, J.; Zhang, H. ISRNet: An Effective Network for SAR Interference Suppression and Recognition. In Proceedings of the 2022 IEEE 9th International Symposium on Microwave, Antenna, Propagation and EMC Technologies for Wireless Communications (MAPE), Chengdu, China, 26–29 August 2022; pp. 428–431.

- Fan, W.; Zhou, F.; Tao, M.; Bai, X.; Rong, P.; Yang, S.; Tian, T. Interference Mitigation for Synthetic Aperture Radar Based on Deep Residual Network. Remote Sens. 2019, 11, 1654.

- Shen, J.; Han, B.; Pan, Z.; Li, G.; Hu, Y.; Ding, C. Learning Time–Frequency Information With Prior for SAR Radio Frequency Interference Suppression. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5239716.

- Dong, L.; Yang, N.; Wang, W.; Wei, F.; Liu, X.; Wang, Y.; Gao, J.; Zhou, M.; Hon, H.W. Unified language model pre-training for natural language understanding and generation. In Proceedings of the 33rd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; pp. 13063–13075.

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Kocoń, J.; Cichecki, I.; Kaszyca, O.; Kochanek, M.; Szydło, D.; Baran, J.; Bielaniewicz, J.; Gruza, M.; Janz, A.; Kanclerz, K.; et al. ChatGPT: Jack of all trades, master of none. Inf. Fusion 2023, 99, 101861.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. Inf. Syst. Res. 2019.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929.

- Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y.; et al. A Survey on Vision Transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 87–110.

- Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; Volume 2.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; Volume 2.

- Wang, Z.; Cun, X.; Bao, J.; Zhou, W.; Liu, J.; Li, H. Uformer: A general u-shaped transformer for image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 17683–17693.

- He, K.; Chen, X.; Xie, S.; Li, Y.; Dollár, P.; Girshick, R. Masked autoencoders are scalable vision learners. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16000–16009.

- Heo, B.; Yun, S.; Han, D.; Chun, S.; Choe, J.; Oh, S.J. Rethinking Spatial Dimensions of Vision Transformers. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021.

- Wang, W.; Xie, E.; Li, X.; Fan, D.-P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid Vision Transformer: A Versatile Backbone for Dense Prediction without Convolutions. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021.

- Dong, X.; Bao, J.; Chen, D.; Zhang, W.; Yu, N.; Yuan, L.; Chen, D.; Guo, B. Cswin transformer: A general vision transformer backbone with cross-shaped windows. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12124–12134.

- Liu, Y.; Zhang, Y.; Wang, Y.; Hou, F.; Yuan, J.; Tian, J.; Zhang, Y.; Shi, Z.; Fan, J.; He, Z. A survey of visual transformers. IEEE Trans. Neural Netw. Learn. Syst. 2023, 1–21.

- Khan, S.; Naseer, M.; Hayat, M.; Zamir, S.W.; Khan, F.S.; Shah, M. Transformers in Vision: A Survey. ACM Comput. Surv. 2022, 54, 1–41.
