Subjects:

Robotics

The advent of Industry 4.0 has heralded advancements in human–robot collaboration (HRC), necessitating a deeper understanding of the factors influencing human decision making within this domain. An HRC system combines human soft skills such as decision making, intelligence, problem-solving, adaptability and flexibility with robots’ precision, repeatability, and the ability to work in dangerous environments.

- human decision making

- human–robot collaboration

- human–robot interaction

- human factors

- Industry 4.0

1. Introduction

As socio-technical systems, collaborative robots (cobots) are designed to improve productivity, flexibility, and ergonomics, and to support customised rather than mass production. Since the fourth industrial revolution (Industry 4.0), concerns regarding human workers’ role in the production environment [1] have increased, making human–robot collaboration (HRC) an emerging area of robotic and cobotic research in recent years. A popular topic in discussions of the next industrial revolution (Industry 5.0) is human–robot co-working [2], which emphasises bringing human workers back into the production process loop [3]. An HRC system combines human soft skills such as decision making, intelligence, problem-solving, adaptability and flexibility with robots’ precision, repeatability, and the ability to work in dangerous environments [4].

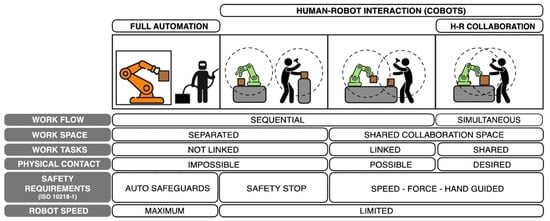

For this reason, cobots are adopted to work and interact safely with humans on shared tasks, in a shared workspace, simultaneously [5,6,7]. Cobots have considerable potential for wider use in many industries. To introduce industrial cobots clearly, [8] proposed a framework that categorises the interaction between humans and robots into four types (Figure 1). The first type is the full automation of conventional industrial robots; the other three types are categorised by the interaction between humans and cobots: coexistence, cooperation, and collaboration. In the coexistence scenario, humans and cobots work sequentially in different workspaces. In the cooperation scenario, humans and cobots work in a shared space, and their tasks are linked. In the collaboration scenario, which is the highest level of interaction, humans and cobots work in the shared space on shared tasks simultaneously.
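The four interaction types can be sketched as a small decision rule. This is only an illustrative reading of the framework in [8]: the boolean flags and the function name `classify_interaction` are assumptions introduced here, not part of the cited framework.

```python
from enum import Enum


class InteractionType(Enum):
    FULL_AUTOMATION = "full automation"
    COEXISTENCE = "coexistence"
    COOPERATION = "cooperation"
    COLLABORATION = "collaboration"


def classify_interaction(human_involved: bool,
                         shared_workspace: bool,
                         shared_task: bool,
                         simultaneous: bool) -> InteractionType:
    """Map a scenario onto one of the four types (hypothetical flags)."""
    if not human_involved:
        # Conventional industrial robot with no human interaction.
        return InteractionType.FULL_AUTOMATION
    if not shared_workspace:
        # Humans and cobots work in different workspaces.
        return InteractionType.COEXISTENCE
    if shared_task and simultaneous:
        # Shared space, shared task, at the same time.
        return InteractionType.COLLABORATION
    # Shared space with linked tasks, but not fully joint work.
    return InteractionType.COOPERATION
```

Real deployments rarely reduce to four booleans, so the flags should be read as a simplification of the criteria described above.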

2. Human Decision Making

Since humans and robots can work together as a team in HRC, they both have the authority to make decisions. Robots can make decisions by using algorithms based on models such as Markov decision processes (MDP) [11], partially observable Markov decision processes (POMDP) [12], the Bayesian Decision Model (BDM) [13], Adaptive Bayesian policy selection (ABPS) [14], and others. The performance of robot decision making therefore depends on these algorithms. With the emergence of technologies such as Artificial Intelligence (AI) and Machine Learning (ML), robots can make decisions on their own and be more intelligent in some specific situations [15]. Unlike robots, humans make decisions based on normative inference, influenced by their previous experiences, unconscious drives, and emotions [16]. Robots are good at making decisions in stable and predictable situations, while human decision making is essential for handling complex and dynamic situations [17].
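To make the idea of an algorithmic robot decision concrete, the following is a minimal sketch of value iteration on a toy MDP. It does not reproduce the method of [11]. The states, actions, probabilities, rewards, and discount factor are all hypothetical values chosen for illustration.

```python
# Toy MDP; every state, action, probability, and reward here is hypothetical.
# TRANSITIONS[state][action] -> list of (probability, next_state, reward)
TRANSITIONS = {
    "part_far": {
        "move": [(0.9, "part_near", -1.0), (0.1, "part_far", -1.0)],
        "grasp": [(1.0, "part_far", -2.0)],
    },
    "part_near": {
        "move": [(1.0, "part_near", -1.0)],
        "grasp": [(0.8, "done", 10.0), (0.2, "part_near", -1.0)],
    },
    "done": {},  # terminal state: no actions available
}


def q_value(values, outcomes, gamma):
    """Expected return of one action given current state-value estimates."""
    return sum(p * (r + gamma * values[s2]) for p, s2, r in outcomes)


def value_iteration(transitions, gamma=0.95, tol=1e-8):
    """Repeat Bellman optimality updates until the values stop changing."""
    values = {s: 0.0 for s in transitions}
    while True:
        delta = 0.0
        for s, actions in transitions.items():
            if not actions:
                continue  # terminal states keep value 0
            best = max(q_value(values, o, gamma) for o in actions.values())
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            return values


def greedy_policy(transitions, values, gamma=0.95):
    """Pick, in each non-terminal state, the action with the highest value."""
    return {
        s: max(actions, key=lambda a: q_value(values, actions[a], gamma))
        for s, actions in transitions.items() if actions
    }


values = value_iteration(TRANSITIONS)
policy = greedy_policy(TRANSITIONS, values)
```

In this toy model the resulting policy is fixed once the model is specified, which illustrates why such decision making suits stable, predictable situations.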

One example of a dynamic situation where humans work with robots is robot-assisted surgery. In this scenario, a surgeon makes decisions based on their knowledge, experience, and the current situation in the operating theatre. Other factors such as communication within the surgical team, situation awareness, and workload are related to the surgeon’s decision making [18]. For instance, during a procedure, a surgeon must carefully choose the right instrument based on the specific needs of the task and the current conditions. They must evaluate whether each step has been completed satisfactorily, be ready with an alternative plan if necessary, and always consider the most appropriate next action. The quality of the surgeon’s decisions regarding patient care, incision placement, and procedure steps directly affects the overall success of the surgery. Another dynamic example is a search and rescue task. In this scenario, humans make decisions based on the data collected by a robot, and effective information helps them make the decisions necessary for the search. For instance, in an Urban Search and Rescue (USAR) task [19], robots provide data continuously to human operators, who analyse the data, update the search strategy, and reassign tasks to ensure efficiency and safety.

Therefore, collaboration between humans and robots necessitates that human operators apply their expertise to make situationally appropriate decisions. This decision-making process aligns with the Naturalistic Decision Making (NDM) theory proposed by Klein et al. in 1993 [20]. This theory elucidates decision-making processes in environments that are both significant and familiar to humans, portraying them as expert decision makers with domain-specific knowledge and experience. Klein et al. further introduced the Recognition-Primed Decision making (RPD) model as a framework for understanding how effective decisions are made [20,21].

This model starts with an assessment of whether the situation is familiar. If it is not, the individual seeks more information and re-assesses the situation. If the situation is familiar, the model predicts that the individual will have expectancies about what is normal for that situation. If these expectancies are not violated, the individual engages in a mental simulation of the action, essentially predicting the outcome of an action without actually performing it. If the mental simulation suggests that the action will succeed, the individual implements the action. If not, they modify the plan and re-evaluate its potential success through another mental simulation. This process repeats until a workable plan is formulated.
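The flow described above can be sketched as a simple loop. This is an illustrative reading, not Klein et al.'s formal specification. All function and parameter names are hypothetical, the `max_steps` guard is added only so the sketch always terminates, and treating violated expectancies like an unfamiliar situation (gather more information and re-assess) is an assumption, since the text above does not describe that branch.

```python
def recognition_primed_decision(situation,
                                is_familiar,
                                gather_information,
                                expectancies_violated,
                                propose_action,
                                will_succeed,
                                modify_action,
                                max_steps=20):
    """Return a workable action, or None if none is found within max_steps."""
    action = None
    for _ in range(max_steps):
        # Unfamiliar situation (or, by assumption, violated expectancies):
        # seek more information and re-assess from scratch.
        if not is_familiar(situation) or expectancies_violated(situation):
            situation = gather_information(situation)
            action = None
            continue
        if action is None:
            action = propose_action(situation)
        # Mental simulation: predict the outcome without acting.
        if will_succeed(situation, action):
            return action  # implement the action
        # Simulation failed: modify the plan and simulate again.
        action = modify_action(situation, action)
    return None
```

Each callable stands in for a cognitive step performed by the expert, so the sketch captures only the control flow of the model, not how recognition or simulation actually works.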

The RPD model, along with the NDM theory, has been applied to various real-world domains, including Unmanned Air Vehicle (UAV) operations by Yesilbas and Cotter in 2019 [22] and human–agent collaboration by Fan et al. in 2005 [23], demonstrating its broad applicability.

3. Cognitive Workload and Human Decision Making

In human decision making, cognitive workload plays a critical role, especially in environments where humans interact with complex systems or technology, such as robotics [24]. This factor is frequently examined alongside human decision making due to its profound impact on performance and outcomes [25]. Effective decision making is a complex cognitive process that necessitates the optimal distribution of an individual’s attention and mental capacity. The quality of decisions heavily relies on the ability to analyse information, evaluate possible outcomes, and choose the best course of action [26,27]. However, cognitive overload can significantly impede this process. When an operator faces an excess of information or task demands that exceed their cognitive resources, they are likely to experience mental fatigue [28,29], which can lead to reliance on simplifying strategies, known as heuristics. While heuristics can be useful for quick judgments, they often ignore much of the available data and the nuance required for high-quality decision making [30,31].

For example, using tools designed to minimize cognitive effort in pattern recognition can significantly improve group-decision outcomes by allowing better resource allocation decisions in dispersed groups [32]. Similarly, the frontal network in the brain responds to uncertainty in decision-making tasks by modulating cognitive resource allocation, which underscores the importance of cognitive control in navigating uncertain situations [33]. In complex operations, cognitive readiness, which includes situation awareness, problem-solving, and decision making, is essential for effective resource allocation. In addition, supporting long-term anticipation in decision making can significantly improve performance in complex environments, and cognitive support tools can enhance the anticipation of future outcomes [34].

4. Factors Related to Human Decision Making

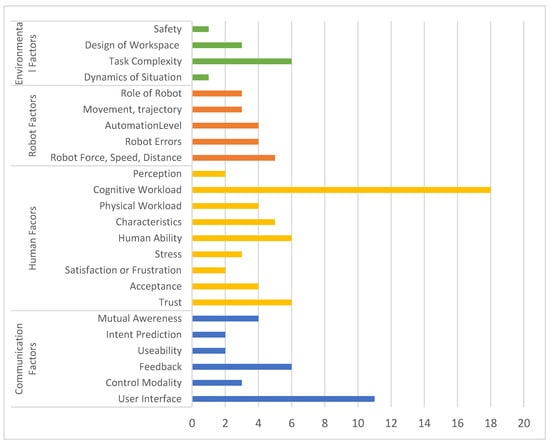

Within the selected studies, 24 factors that influence human decision making during tasks were identified. These factors are categorized into four groups: human factors, robot factors, communication factors, and environmental factors (Figure 2, Table 1).

Figure 2. Factors related to human decision making in HRC.

Table 1. Factors related to human decision making in HRC.

| Human Factors | Robot Factors | Communication Factors | Environmental Factors |
|---|---|---|---|
| trust [17,45,48,57,73,77] | physical attributes of the robot [39,70,72] | user interface [37,38,42,46,51,60,63,64,73] | dynamics of the situation [75] |
| acceptance [17,57,71,77] | robot errors [36,55] | control modality [37,65,71] | task complexity [36,55,66,69,80] |
| human characteristics [39] | trajectory and movement [53,55,78] | feedback [39,48,51,52,62,72] | design of workspace [39,73,77] |
| physical workload [50] | role of the robot [61,76,80] | usability [71,73] | physical safety [42] |
| cognitive workload [40] | automation level [17,44,47,72] | human intent prediction [59,68] | |
| operator’s ability [39,41,67,74,76,79] | | mutual awareness [17,41,46,68] | |
| stress [57,76,77] | | | |
| feeling of satisfaction or frustration [57,73] | | | |
| perception of the situation and environment [45,49] | | | |

4.1. Human Factors

In the realm of human factors impacting decision making in HRC, cognitive workload receives the most attention, as evidenced by its focus in 18 studies. Trust, operator ability, human characteristics, and acceptance are also prominent, having been extensively studied across multiple research works. Additionally, physical workload, stress levels, and emotional responses such as satisfaction or frustration are deemed critical. These factors, together with the perception of the situation and environment, play significant roles in influencing human decision making during collaborative tasks with robots.

4.2. Robot Factors

Robot factors that influence human decision making in collaborative tasks encompass a spectrum of the robot’s physical characteristics and actions. Key aspects such as the force exerted by the robot, its speed, and the distance maintained from human operators are crucial, directly impacting task performance and safety protocols. Additionally, the frequency and nature of robot errors, as well as the trajectory and movement patterns, are vital considerations. The role of the robot, whether as a leader or a follower, alongside the degree of automation implemented, plays a significant part in shaping the human–robot interaction dynamic.

4.3. Communication Factors

Communication factors include user interface design, control modality, feedback mechanisms, usability, human intent prediction, and mutual awareness. These elements facilitate interaction and are foundational for intuitive operation and effective teamwork between humans and robots, as evidenced by numerous studies. The user interface is particularly emphasized, as it directly impacts the efficiency and satisfaction of the operator. Feedback and mutual awareness are also integral, ensuring that both humans and robots can respond adaptively to each other’s actions and intentions.

4.4. Environmental Factors

Environmental factors include the dynamics of the situation, task complexity, workspace design, and physical safety. Among the selected studies, task complexity received the most emphasis. The design of the workspace was also deemed crucial, while the dynamics of the situation and physical safety were likewise noted as important considerations.

5. User Interface

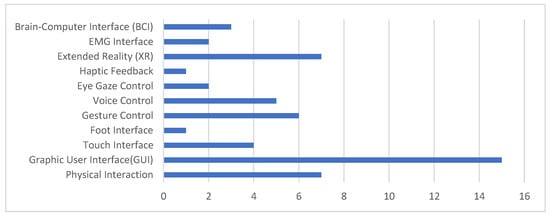

Figure 3 shows that, among all selected studies, the Graphical User Interface (GUI) [37,39,46,54,57,60,63,66,69,71,72,73,75,77,80] was the most utilized type of user interface, implemented in 15 studies. Other interfaces include physical interaction [49,57,69,71,72,73,77] and touch interfaces [46,55,65,71], which facilitate direct engagement with robots. Additional methods such as gesture recognition [38,39,63,65,72,73], voice control [36,38,63,64,65], and eye gaze tracking [65,68] were employed, enhancing the versatility of interactions. Haptic feedback [62] and extended reality (XR) interfaces [38,39,46,52,59,60,63] were noted for their immersive and tactile capabilities. Furthermore, EMG-based interfaces [37,39] and Brain–Computer Interfaces (BCIs) [36,39,40] have been adopted, indicating progress in user command and collaboration with robots. Beyond the commonly used GUI, XR-based interfaces have garnered more attention than other emerging technologies.

Figure 3. Types of user interfaces.
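The per-interface study counts can be recomputed directly from the citation lists given in the text. The sketch below only tallies those bracketed reference numbers. The dictionary name and the shortened interface labels are introduced here for illustration.

```python
# Reference numbers copied from the citation lists in the paragraph above.
INTERFACE_CITATIONS = {
    "GUI": [37, 39, 46, 54, 57, 60, 63, 66, 69, 71, 72, 73, 75, 77, 80],
    "physical interaction": [49, 57, 69, 71, 72, 73, 77],
    "touch": [46, 55, 65, 71],
    "gesture recognition": [38, 39, 63, 65, 72, 73],
    "voice control": [36, 38, 63, 64, 65],
    "eye gaze tracking": [65, 68],
    "haptic feedback": [62],
    "XR": [38, 39, 46, 52, 59, 60, 63],
    "EMG": [37, 39],
    "BCI": [36, 39, 40],
}

# Number of distinct studies citing each interface type.
counts = {name: len(set(refs)) for name, refs in INTERFACE_CITATIONS.items()}

# Interface types ordered from most to least studied.
ranked = sorted(counts.items(), key=lambda item: item[1], reverse=True)

for name, n in ranked:
    print(f"{name}: {n}")
```

Because a single study can use several interfaces, the counts overlap and do not sum to the number of selected studies.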

6. Technologies Related to Human Decision Making

Numerous studies have highlighted a variety of advanced technologies such as XR, gesture control, voice control, and AI-based perception, which significantly influence human decision making in collaborative environments.

Some studies described multimodal interfaces, which combine different sensory inputs to improve interaction and thereby enhance human decision making and collaboration. For example, gesture recognition [38,57,63,65,72], speech recognition [38,63,65], physiological signals such as EEG [36], ECG [55], and fNIRS [75], and force sensing [36] were used in some studies.

XR-based interfaces, including AR [38,52,59,63], VR [46], and MR [60], were discussed in several studies. These technologies can help improve human decision making in HRC by assisting with visual and other perceptions.

Other technologies such as Brain–Computer Interface (BCI) [40], eye gaze [65,68], and AI-based perception [57,72] were also mentioned. Such technologies have the potential to reduce cognitive workload and better accommodate users’ preferences and needs, thereby improving their decision making.

This entry is adapted from the peer-reviewed paper 10.3390/robotics13020030
