Semantic segmentation techniques for remote sensing images (RSIs) have been widely developed and applied. However, when the target scenes differ substantially from the training data, model performance drops significantly. Unsupervised domain adaptation (UDA) for semantic segmentation has therefore been proposed to alleviate the reliance on expensive, densely labeled per-pixel data.

- adversarial perturbation consistency

- self-training

- semantic segmentation

- remote sensing

1. Introduction

Image segmentation has been widely researched as a basic remote sensing intelligent interpretation task [1,2,3,4]. In particular, semantic segmentation based on deep learning plays an important role as a pixel-level classification method in remote sensing interpretation tasks, such as building extraction [5], land cover classification [6], and change detection [7,8]. However, existing fully supervised deep learning approaches perform well only when sufficient annotated data are available and when the training and test data follow the same distribution [9]. Once applied to unseen scenarios with different data distributions, model performance can degrade significantly [10,11,12]. New data must then be annotated and the model retrained to meet performance requirements, which requires considerable labor and time [13].

In practical applications, the domain discrepancy problem is prevalent in remote sensing images (RSIs) [14,15]. Different remote sensing platforms, payload imaging mechanisms, and photographic angles induce variations in image spatial resolution and object features [16]. Owing to variations in season, geographic location, illumination, and atmospheric radiation conditions, even images from the same source may show significant differences in feature distribution [17]. The data distribution shift caused by the combination of these complex factors leads the segmentation network to perform poorly in the unseen target domain.

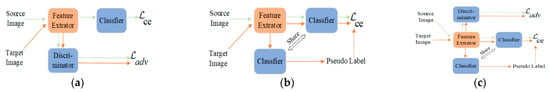

As a transfer learning paradigm [18], unsupervised domain adaptation (UDA) can improve the domain generalization performance of a model by transferring knowledge from annotated source domain data to the target domain [19]. This approach has been extensively researched in computer vision to address the domain discrepancy issue in natural image scenes [20]. Domain adaptation (DA) methods have also gained intensive attention in remote sensing [21]. Compared with natural images, RSIs contain more complex spatial detail and object boundaries, and both homogeneous and heterogeneous phenomena are more common. Additionally, the factors that generate domain discrepancies are more complex and diverse. Thus, solving the problem of domain discrepancies in RSIs is more challenging. Existing research focuses on three main approaches: UDA based on image transfer [17,22], UDA based on deep adversarial training (AT), and UDA based on self-training (ST) [23,24]. Image transfer methods achieve image-level alignment based on generative adversarial networks. AT-based methods (as shown in Figure 1a) reduce the feature distribution discrepancy between the source and target domains by minimizing an adversarial loss, thereby achieving feature-level alignment [25]. The ST approach (as shown in Figure 1b) generates high-confidence pseudolabels in the target domain, which then participate in the iterative training of the model to achieve progressive transfer [26,27].

Figure 1. General paradigms of existing DA training methods. (a) AT-based DA approach. (b) Self-training (ST)-based DA approach. (c) DA approach combining ST and AT.

One general conclusion about the DA performance of these models is that AT + ST > ST > AT [27]. However, as shown in Figure 1c, combining ST and AT methods typically requires strong coupling between submodules, which makes the model unstable during training [28]. Fine-tuning of the network structure and the submodule parameters is therefore generally needed, so model performance depends on specific scenarios and the model loses scalability and flexibility. Recently, several studies have sought to improve this process, for example by functionally decoupling the AT and ST methods through dual-stream networks [28], or by using exponential moving average (EMA) techniques to construct teacher networks that smooth unstable features during training [29]. However, these strategies also complicate the network architecture, increase the spatial computational complexity, and reduce training efficiency.
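As a rough illustration of the EMA teacher idea mentioned above, the following minimal sketch updates a teacher's weights as a moving average of a student's weights. The function name `ema_update`, the dictionary-of-lists weight layout, and the `decay` value are assumptions made for this example and are not taken from the cited works.

```python
def ema_update(teacher, student, decay=0.99):
    """Move each teacher weight toward the matching student weight.

    teacher, student: dicts mapping a parameter name to a list of floats
    (a hypothetical stand-in for real network tensors).
    """
    for name, student_values in student.items():
        teacher_values = teacher[name]
        for i, s in enumerate(student_values):
            # teacher <- decay * teacher + (1 - decay) * student
            teacher_values[i] = decay * teacher_values[i] + (1.0 - decay) * s
    return teacher


teacher = {"w": [0.0, 0.0]}
student = {"w": [1.0, 2.0]}
ema_update(teacher, student, decay=0.9)
print(teacher["w"])  # each weight has moved 10% of the way toward the student
```

Because the teacher changes slowly, its predictions fluctuate less between iterations than the student's, which is the smoothing effect the EMA approach relies on; the cost is a second copy of the weights in memory.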

2. Image-Level Alignment for UDA

Image-level alignment reduces the data distribution shift between the source and target domains through image transfer methods [33,34]. This scheme generates pseudo images that are semantically identical to the source images but whose spectral distribution is similar to that of the target images [17]. Cycle-consistent adversarial domain adaptation (CyCADA) improves the semantic consistency of the image transfer process through a cycle consistency loss [35]. To preserve the semantic invariance of RSIs after transfer, ColorMapGAN designs a color transformation method without a convolutional structure [17]. Many UDA schemes adopt GAN-based style transfer methods [36] to align the data distributions of the source and target domains. ResiDualGAN [22] introduces scale information of RSIs on the basis of DualGAN [37]. Some works also leverage non-adversarial transformation methods, such as the Fourier transform-based FDA [38] and Wallis filtering [39], to reduce image domain discrepancies.
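To make the non-adversarial, Fourier-based route more concrete, the sketch below swaps the low-frequency amplitude spectrum of a source image with that of a target image while keeping the source phase, in the spirit of FDA [38]. It is a simplified illustration, not the reference implementation: the function name, the `beta` band-size parameter as used here, and the assumption that both images share the same shape are choices made for this example.

```python
import numpy as np


def fda_source_to_target(src, tgt, beta=0.05):
    """Give `src` the low-frequency amplitude of `tgt`, keeping the phase of `src`.

    src, tgt: float arrays of shape (H, W, C) with identical shapes (assumed).
    beta: fraction of the smaller image side used as the half-width of the
          swapped low-frequency square (illustrative parameter, 0 <= beta <= 0.5).
    """
    fft_src = np.fft.fft2(src, axes=(0, 1))
    fft_tgt = np.fft.fft2(tgt, axes=(0, 1))
    amp_src, pha_src = np.abs(fft_src), np.angle(fft_src)
    amp_tgt = np.abs(fft_tgt)

    # Shift the zero frequency to the centre so the low band is a central square.
    amp_src = np.fft.fftshift(amp_src, axes=(0, 1))
    amp_tgt = np.fft.fftshift(amp_tgt, axes=(0, 1))

    h, w = src.shape[:2]
    b = int(np.floor(min(h, w) * beta))
    ch, cw = h // 2, w // 2
    amp_src[ch - b:ch + b + 1, cw - b:cw + b + 1] = \
        amp_tgt[ch - b:ch + b + 1, cw - b:cw + b + 1]

    # Undo the shift, recombine amplitude and phase, and return to image space.
    amp_src = np.fft.ifftshift(amp_src, axes=(0, 1))
    mixed = amp_src * np.exp(1j * pha_src)
    return np.real(np.fft.ifft2(mixed, axes=(0, 1)))


rng = np.random.default_rng(0)
source = rng.random((64, 64, 3))
target = rng.random((64, 64, 3)) + 1.0  # brighter "target domain"
stylised = fda_source_to_target(source, target, beta=0.05)
print(stylised.shape)
```

Low frequencies mostly carry global brightness and color, while phase carries structure, so the output keeps the source layout (and therefore its labels) but takes on the target's overall appearance.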

3. Feature-Level Alignment by AT

Adversarial feature alignment methods train additional domain discriminators [19,40] to distinguish target samples from source samples and then train the feature network to fool the discriminator, thus producing a domain-invariant feature space [41]. Many works have made significant progress in using AT to align feature space distributions and reduce the domain discrepancy in RSIs. Wu et al. [42] focused on interdomain category differences and proposed class-aware domain alignment. Deng et al. [23] designed a scale discriminator to detect scale variation in RSIs. Considering regional diversity, Chen et al. [43] focused on difficult-to-align regions through a region-adaptive discriminator. Bai et al. [20] leveraged contrastive learning to align high-dimensional image representations between different domains. Lu et al. [44] designed global-local adversarial learning methods to ensure local semantic consistency across domains.
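The two opposing objectives in adversarial feature alignment can be sketched with plain binary cross-entropy. The example below assumes a discriminator that outputs the probability that a feature comes from the source domain; the function names and the convention "source = 1, target = 0" are illustrative assumptions, not the formulation of any specific cited method.

```python
import math


def bce(prob, label, eps=1e-7):
    """Binary cross-entropy for one predicted probability and a 0/1 label."""
    prob = min(max(prob, eps), 1.0 - eps)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))


def discriminator_loss(d_src, d_tgt):
    """Train the discriminator to output 1 on source and 0 on target features.

    d_src, d_tgt: lists of discriminator outputs P(domain = source).
    """
    losses = [bce(p, 1) for p in d_src] + [bce(p, 0) for p in d_tgt]
    return sum(losses) / len(losses)


def adversarial_loss(d_tgt):
    """Train the feature network so target features are judged as source (1)."""
    return sum(bce(p, 1) for p in d_tgt) / len(d_tgt)


# A discriminator that separates the domains well has a low loss,
# while the feature network's adversarial loss is high in that case.
print(discriminator_loss([0.9, 0.8], [0.2, 0.1]))
print(adversarial_loss([0.2, 0.1]))
```

In training, the two losses are minimized alternately: the discriminator step uses `discriminator_loss`, and the feature-network step uses `adversarial_loss` with the discriminator held fixed.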

4. Self-Training for UDA

Self-training is a form of semi-supervised learning [45] that treats high-confidence predictions as easy-to-transfer pseudolabels, which participate in the next training iteration together with the corresponding target images, progressively realizing knowledge transfer [26,27]. Yao et al. [39] used the ST paradigm to improve model performance for building extraction on unseen data. CBST [26] designs class-balanced selectors for pseudolabels to prevent easy-to-predict classes from becoming dominant. ProDA [46] computes representation prototypes, which represent the centers of category features, to correct pseudolabels. CLUDA [47] constructs contrastive learning between different classes and different domains by mixing source and target domain images. Additionally, several works have attempted to combine ST and adversarial methods to improve domain generalization performance. However, these models are difficult to optimize and often require fine-tuning of the model parameters. Zhang et al. [48] established a two-stage training process of AT followed by ST. DecoupleNet [28] decouples ST and AT through two network branches to alleviate the difficulty of model training.
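The pseudolabel selection step can be sketched as follows. The first function keeps a pixel's predicted class only if its confidence reaches a per-class threshold; the second derives per-class thresholds so that each class keeps roughly the same fraction of its pixels, loosely inspired by the class-balanced idea of CBST [26]. The names, the `IGNORE` index of 255, and the `keep_fraction` parameter are assumptions made for illustration, not details from the cited papers.

```python
IGNORE = 255  # hypothetical label for pixels excluded from the training loss


def argmax(values):
    """Index of the largest entry in a list."""
    return max(range(len(values)), key=values.__getitem__)


def select_pseudolabels(probs, thresholds):
    """probs: per-pixel class-probability lists; thresholds: one value per class."""
    labels = []
    for p in probs:
        cls = argmax(p)
        labels.append(cls if p[cls] >= thresholds[cls] else IGNORE)
    return labels


def class_balanced_thresholds(probs, num_classes, keep_fraction=0.5):
    """Per-class threshold keeping about the top `keep_fraction` of each class."""
    per_class = [[] for _ in range(num_classes)]
    for p in probs:
        cls = argmax(p)
        per_class[cls].append(p[cls])
    thresholds = []
    for confidences in per_class:
        if not confidences:
            thresholds.append(1.0)  # class never predicted: nothing to keep
            continue
        confidences.sort(reverse=True)
        k = max(1, int(len(confidences) * keep_fraction))
        thresholds.append(confidences[k - 1])
    return thresholds


probs = [[0.95, 0.05], [0.60, 0.40], [0.45, 0.55], [0.30, 0.70]]
print(select_pseudolabels(probs, [0.9, 0.9]))   # [0, 255, 255, 255]
balanced = class_balanced_thresholds(probs, num_classes=2)
print(select_pseudolabels(probs, balanced))     # [0, 255, 255, 1]
```

With a single global threshold, the harder class 1 receives no pseudolabels at all in this toy input, whereas the class-balanced thresholds keep its most confident pixel.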

5. Consistency Regularization

Consistency regularization is generally employed to solve semi-supervised problems. Its essential idea is to keep the model output consistent under differently perturbed versions of the same input, thus improving the generalization ability of the model on test data [49,50]. FixMatch [30] establishes two network flows, one with weak and one with strong perturbation augmentation at the image level, using the weak perturbation to ensure high-quality outputs and the strong perturbation to provide better training of the model. FeatMatch [51] extracts class-representative prototypes for feature-level augmentation transformations. Liu et al. [52] constructed dual-teacher networks to provide more rigorous pseudolabels for unlabeled test data. UniMatch [50] provides an auxiliary feature perturbation stream using a simple dropout mechanism. Several recent regularization models have been designed under the ST paradigm but fail to account for domain discrepancy; as a result, pure consistency regularization has not performed well in cross-domain scenes.
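The weak-to-strong consistency idea can be illustrated with a small FixMatch-style loss: the weakly perturbed view supplies a pseudolabel, and the strongly perturbed view is penalized with cross-entropy against it, but only where the weak prediction is confident. This is a minimal sketch under stated assumptions: the function name, the plain-list inputs, and the default `threshold` value are choices made for this example rather than details of the cited implementations.

```python
import math


def consistency_loss(weak_probs, strong_probs, threshold=0.95):
    """Masked cross-entropy between weak-view pseudolabels and strong-view outputs.

    weak_probs, strong_probs: lists of per-sample class-probability lists for
    the weakly and strongly perturbed views of the same inputs.
    """
    if not weak_probs:
        return 0.0
    total = 0.0
    for p_weak, p_strong in zip(weak_probs, strong_probs):
        cls = max(range(len(p_weak)), key=p_weak.__getitem__)
        if p_weak[cls] >= threshold:  # only confident pseudolabels contribute
            total += -math.log(max(p_strong[cls], 1e-12))
    # Average over all samples, including the masked ones.
    return total / len(weak_probs)


weak = [[0.98, 0.02], [0.60, 0.40]]
strong = [[0.50, 0.50], [0.10, 0.90]]
print(consistency_loss(weak, strong))  # only the first sample contributes
```

The second sample is ignored because its weak-view confidence (0.60) falls below the threshold, which is how low-quality pseudolabels are kept out of the training signal.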

This entry is adapted from the peer-reviewed paper 10.3390/rs15235498
