The traditional method of finding missing people involves deploying fixed cameras at selected hotspots to capture images and relying on humans to identify targets in these images. However, this approach incurs high costs, because enough cameras must be deployed to avoid blind spots, and a great deal of time and human effort is spent identifying possible targets. Further, most AI-based search systems focus on improving the human body recognition model, without considering how to speed up the search itself in order to shorten search time and improve search efficiency. As unmanned aerial vehicle (UAV) technology has advanced significantly, a number of applications have been proposed for UAVs owing to their unique characteristics, such as high mobility and flexible integration with different equipment such as sensors and cameras.

- unmanned aerial vehicle

- hierarchical human-weight-first path planning

- artificial intelligence image recognition

1. Introduction

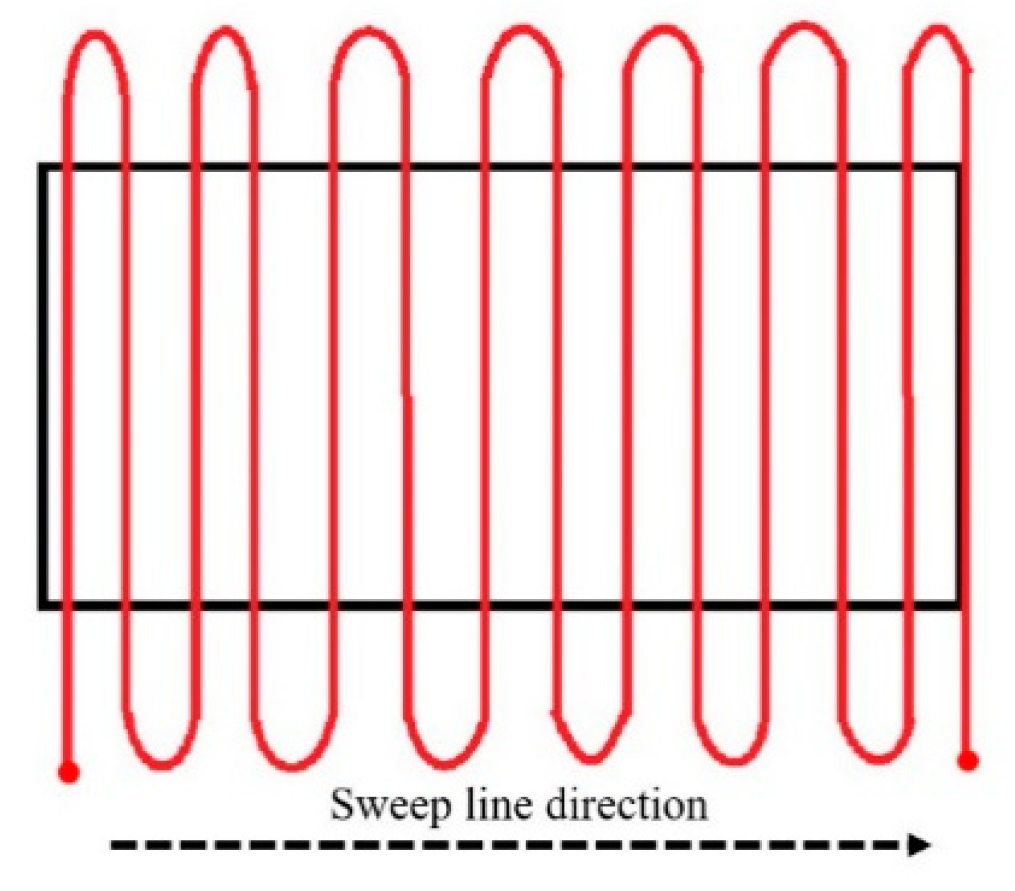

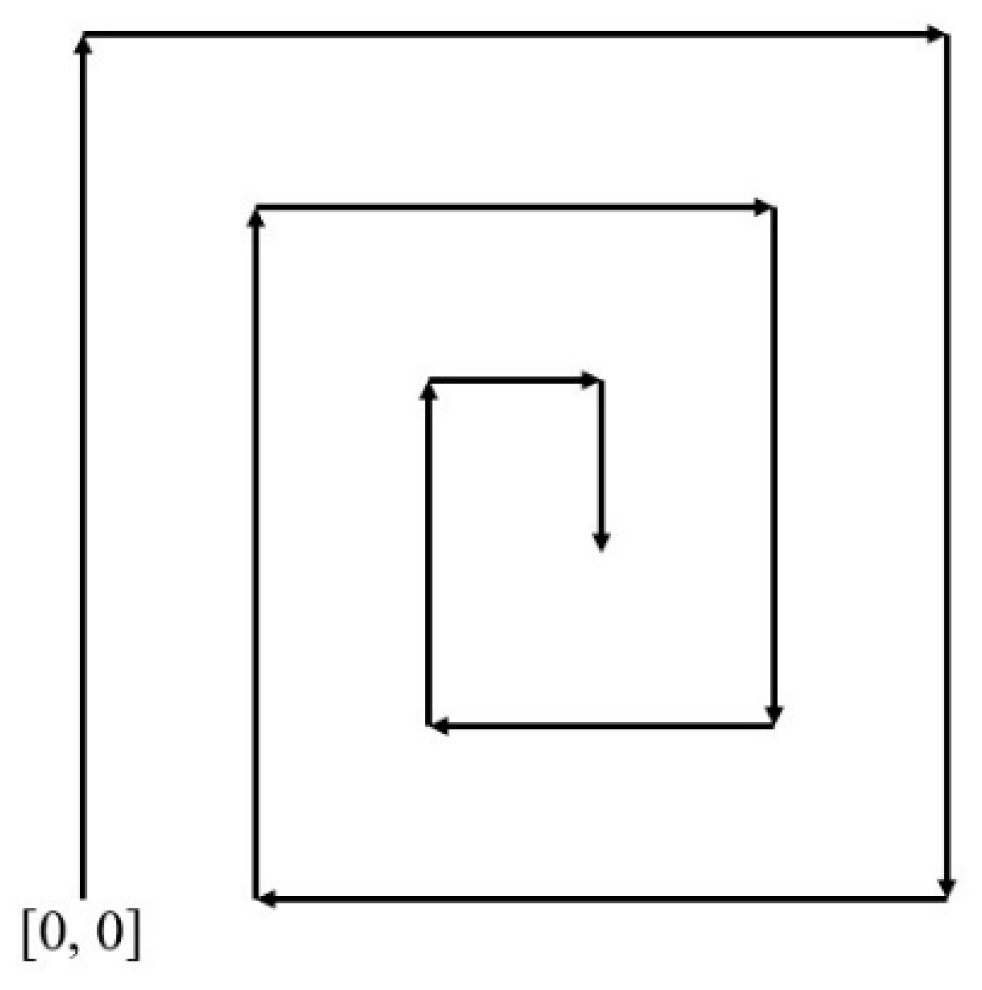

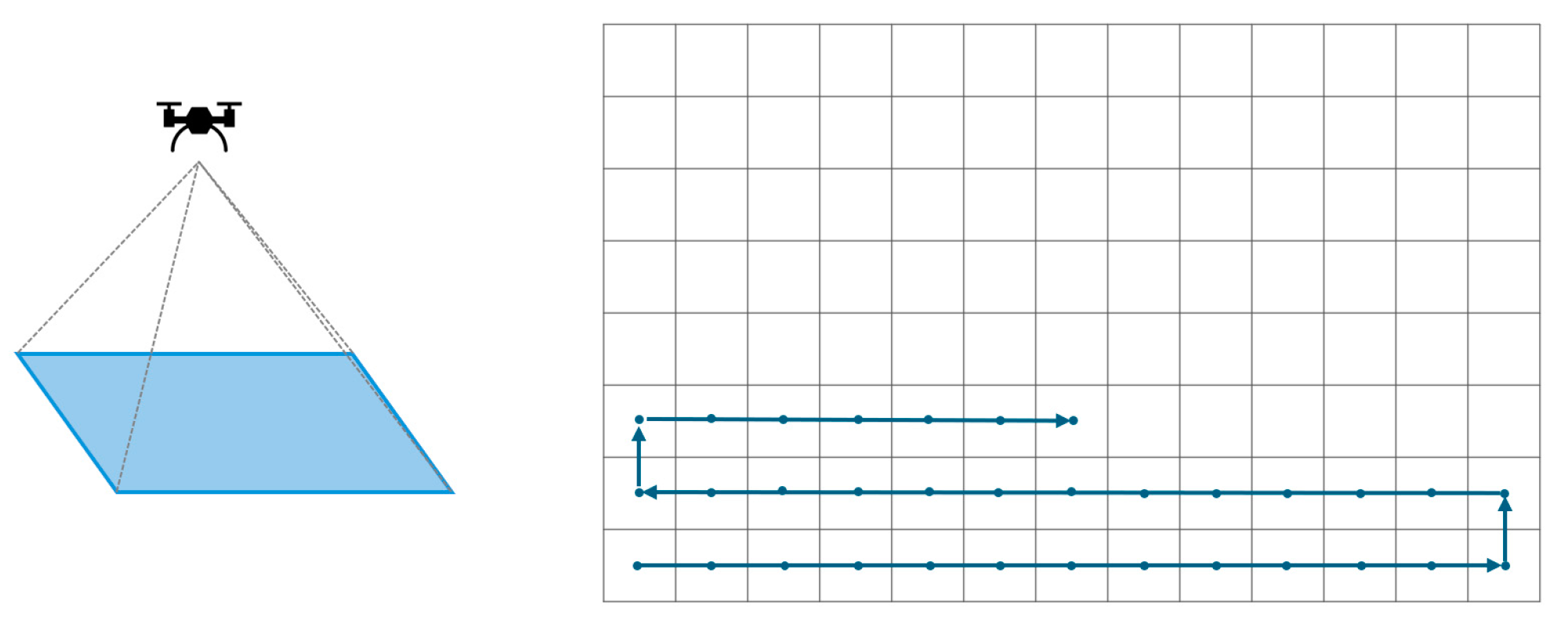

2. Traditional Unmanned Aerial Vehicle Path Planning Methods for Search and Rescue Operations
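A widely used baseline among traditional UAV search patterns is the back-and-forth ("lawnmower" or boustrophedon) sweep. The following is a minimal sketch, assuming the search area has already been divided into a rectangular grid of equal cells, each roughly one camera footprint in size; the function name `boustrophedon_path` and the `(row, col)` grid representation are hypothetical and are not taken from the original paper. It produces a visiting order in which consecutive waypoints are always adjacent cells.

```python
from typing import List, Tuple

Cell = Tuple[int, int]  # (row, col) index of one grid cell (hypothetical representation)


def boustrophedon_path(rows: int, cols: int) -> List[Cell]:
    """Visit every cell of a rows x cols grid in a back-and-forth sweep.

    Even rows are traversed left to right and odd rows right to left,
    so each waypoint is adjacent to the previous one.
    """
    if rows <= 0 or cols <= 0:
        raise ValueError("grid dimensions must be positive")
    path: List[Cell] = []
    for r in range(rows):
        # Alternate the sweep direction on every row.
        cols_in_order = range(cols) if r % 2 == 0 else range(cols - 1, -1, -1)
        for c in cols_in_order:
            path.append((r, c))
    return path


if __name__ == "__main__":
    print(boustrophedon_path(2, 3))
    # -> [(0, 0), (0, 1), (0, 2), (1, 2), (1, 1), (1, 0)]
```

Because the visiting order is fixed before takeoff, a sweep like this covers every cell but does not adapt to anything the UAV observes during the flight.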

3. Search Target Recognition Techniques

3.1. Color Space Exchange

3.2. Extracting Feature Colors of Image
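One common way to extract a feature color from an image region (for example, the clothing area inside a detected person's bounding box) is to quantize each pixel into a coarse color histogram and take the mean color of the most populated bin. The sketch below assumes 8-bit RGB pixels supplied as tuples; the function name `dominant_color` and the default of 4 bins per channel are hypothetical choices for illustration, not the procedure described in the original paper.

```python
from typing import Dict, Iterable, List, Tuple

RGB = Tuple[int, int, int]


def dominant_color(pixels: Iterable[RGB], bins_per_channel: int = 4) -> RGB:
    """Return the mean colour of the most populated coarse RGB histogram bin."""
    if not 1 <= bins_per_channel <= 256:
        raise ValueError("bins_per_channel must be between 1 and 256")
    bins: Dict[Tuple[int, ...], List[RGB]] = {}
    for pixel in pixels:
        # Map each 0-255 channel value to a bin index in 0..bins_per_channel-1.
        key = tuple(ch * bins_per_channel // 256 for ch in pixel)
        bins.setdefault(key, []).append(pixel)
    if not bins:
        raise ValueError("no pixels given")
    # Most populated bin; max() keeps the first-seen bin when counts tie.
    members = max(bins.values(), key=len)
    n = len(members)
    r, g, b = (round(sum(p[i] for p in members) / n) for i in range(3))
    return (r, g, b)


if __name__ == "__main__":
    region = [(250, 10, 10)] * 2 + [(240, 20, 0)] * 2 + [(10, 10, 250)] * 3
    print(dominant_color(region))  # -> (245, 15, 5)
```

Averaging only the pixels in the winning bin keeps a minority of background or shadow pixels from pulling the extracted color toward gray.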

3.3. Transformation of Color Space
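A commonly cited reason for classifying colors in HSV rather than RGB is that hue is separated from brightness, which tends to make color labels less sensitive to lighting changes. The following is a minimal sketch of the standard RGB-to-HSV formula, assuming 8-bit RGB input and reporting hue in degrees and saturation and value in [0, 1]; the function name `rgb_to_hsv` is hypothetical.

```python
from typing import Tuple


def rgb_to_hsv(r: int, g: int, b: int) -> Tuple[float, float, float]:
    """Convert 8-bit RGB to (hue in degrees [0, 360), saturation [0, 1], value [0, 1])."""
    for ch in (r, g, b):
        if not 0 <= ch <= 255:
            raise ValueError("channels must be in 0..255")
    rf, gf, bf = r / 255.0, g / 255.0, b / 255.0
    cmax, cmin = max(rf, gf, bf), min(rf, gf, bf)
    delta = cmax - cmin
    if delta == 0:
        h = 0.0  # achromatic (gray): hue is undefined, 0 by convention
    elif cmax == rf:
        h = 60.0 * (((gf - bf) / delta) % 6)
    elif cmax == gf:
        h = 60.0 * ((bf - rf) / delta + 2)
    else:
        h = 60.0 * ((rf - gf) / delta + 4)
    s = 0.0 if cmax == 0 else delta / cmax
    v = cmax
    return h, s, v


if __name__ == "__main__":
    print(rgb_to_hsv(255, 255, 0))  # yellow -> (60.0, 1.0, 1.0)
```

Libraries use different ranges: Python's `colorsys.rgb_to_hsv` expects and returns values in [0, 1], and OpenCV stores hue as 0–179 for 8-bit images, so any color thresholds must be expressed in whichever convention the pipeline uses.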

3.4. K-Nearest Neighbors (KNN) Color Classification
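K-nearest neighbors classifies a query color by a majority vote among the k labeled training samples closest to it. The sketch below is a minimal illustration, assuming 8-bit RGB feature vectors, Euclidean distance, k = 3, and a tiny hypothetical training set; the source states that the HWF framework uses KNN color recognition, but the features and training data used there are not reproduced here, and the names `knn_classify` and `TRAINING` are hypothetical.

```python
from collections import Counter
from math import dist
from typing import List, Sequence, Tuple

# One labelled training sample: an 8-bit (R, G, B) colour and its colour name.
Sample = Tuple[Tuple[int, int, int], str]

# Hypothetical training set for illustration only; a real system would use
# many samples taken from labelled clothing regions.
TRAINING: List[Sample] = [
    ((250, 20, 20), "red"), ((200, 30, 40), "red"),
    ((20, 200, 30), "green"), ((40, 160, 60), "green"),
    ((20, 30, 220), "blue"), ((40, 60, 180), "blue"),
    ((245, 245, 245), "white"), ((210, 210, 215), "white"),
    ((15, 15, 15), "black"), ((50, 45, 50), "black"),
]


def knn_classify(color: Sequence[float],
                 training: Sequence[Sample] = TRAINING,
                 k: int = 3) -> str:
    """Label `color` by majority vote among its k nearest training samples."""
    if not 1 <= k <= len(training):
        raise ValueError("k must be between 1 and the number of training samples")
    # The k samples with the smallest Euclidean distance to the query colour.
    neighbours = sorted(training, key=lambda sample: dist(sample[0], color))[:k]
    votes = Counter(label for _, label in neighbours)
    top = max(votes.values())
    # Ties: prefer the tied label whose sample is nearest (neighbours is sorted).
    return next(label for _, label in neighbours if votes[label] == top)


if __name__ == "__main__":
    print(knn_classify((230, 25, 30)))  # -> red
```

HSV features could be used instead of RGB, but hue is circular (0° and 360° are the same red), so a plain Euclidean distance on raw hue values would need adjusting.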

3.5. UAV Systems for Human Detection

| Work | Human Body Recognition Model | Dataset Used | Recognition of Human Clothing Types and Colors | Segmentation of the Search Area | Dynamic Route Planning for Search | Integration of Human Body and Clothing/Pant Color Recognition with Dynamic Route Planning |
|---|---|---|---|---|---|---|
| [23] | Motion detection outputs a score of human confidence | No | No | No | No | No |
| [24] | CNN | UCF-ARG dataset | No, proposes a human activity classification algorithm | No | No | No |
| [15] | CNN | Self-developed captured dataset | No | No | No, spiral search | No |
| [25] | DNN with MobileNet V2 SSDLite | COCO dataset | No | No | Yes, estimates the person's position and moves toward them using GPS | |
| [26] | CNN with Tiny YOLOv3 | COCO dataset + self-developed swimmers dataset | No | No | No | No |
| [27] | CNN with modified YOLOv8 | Self-developed UAV-view real-world dataset | No | No | No | No |
| [28] | CNN with YOLOv5 and Haar Cascade classifier | VisDrone dataset + COCO128 dataset | No, proposes a human body region classification algorithm | No | No | No |
| HWF | CNN with YOLOv5 | VisDrone dataset + self-developed drone-clothing dataset | Yes, uses KNN color recognition | Yes | Yes, proposes the hierarchical human-weight-first (HWF) path planning algorithm | Yes, proposes the integrated YOLOv5 and HWF framework |

This entry is adapted from the peer-reviewed paper 10.3390/machines12010065

References

1. Sahingoz, O.K. Networking models in flying ad-hoc networks (FANETs): Concepts and Challenges. J. Intell. Robot. Syst. 2014, 74, 513–527.

2. Menouar, H.; Guvenc, I.; Akkaya, K.; Uluagac, A.S.; Kadri, A.; Tuncer, A. UAV-enabled intelligent transportation systems for the smart city: Applications and challenges. IEEE Commun. Mag. 2017, 55, 22–28.

3. Aasen, H. UAV spectroscopy: Current sensors, processing techniques and theoretical concepts for data interpretation. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 8809–8812.

4. Ezequiel, C.A.F.; Cua, M.; Libatique, N.C.; Tangonan, G.L.; Alampay, R.; Labuguen, R.T.; Favila, C.M.; Honrado, J.L.E.; Canos, V.; Devaney, C.; et al. UAV aerial imaging applications for post-disaster assessment, environmental management and infrastructure development. In Proceedings of the International Conference on Unmanned Aircraft Systems, Orlando, FL, USA, 27–30 May 2017; pp. 274–283.

5. Zhang, Y.; Li, S.; Wang, S.; Wang, X.; Duan, H. Distributed bearing-based formation maneuver control of fixed-wing UAVs by finite-time orientation estimation. Aerosp. Sci. Technol. 2023, 136, 108241.

6. Zheng, Q.; Zhao, P.; Li, Y.; Wang, H.; Yang, Y. Spectrum interference-based two-level data augmentation method in deep learning for automatic modulation classification. Neural Comput. Applic. 2021, 33, 7723–7745.

7. Mao, Y.; Sun, R.; Wang, J.; Cheng, Q.; Kiong, L.C.; Ochieng, W.Y. New time-differenced carrier phase approach to GNSS/INS integration. GPS Solut. 2022, 26, 122.

8. Zhang, X.; Pan, W.; Scattolini, R.; Yu, S.; Xu, X. Robust tube-based model predictive control with Koopman operators. Automatica 2022, 137, 110114.

9. Narayanan, S.S.K.S.; Tellez-Castro, D.; Sutavani, S.; Vaidya, U. SE(3) Koopman-MPC: Data-driven learning and control of quadrotor UAVs. IFAC-PapersOnLine 2023, 56, 607–612.

10. Cao, B.; Zhang, W.; Wang, X.; Zhao, J.; Gu, Y.; Zhang, Y. A memetic algorithm based on two_Arch2 for multi-depot heterogeneous-vehicle capacitated arc routing problem. Swarm Evol. Comput. 2021, 63, 100864.

11. Erdelj, M.; Natalizio, E. UAV-assisted disaster management: Applications and open issues. In Proceedings of the International Conference on Computing, Networking and Communications, Kauai, HI, USA, 15–18 February 2016; pp. 1–5.

12. Mukherjee, A.; De, D.; Dey, N.; Crespo, R.G.; Herrera-Viedma, E. DisastDrone: A Disaster Aware Consumer Internet of Drone Things System in Ultra-Low Latent 6G Network. IEEE Trans. Consum. Electron. 2023, 69, 38–48.

13. Pasandideh, F.; da Costa, J.P.J.; Kunst, R.; Islam, N.; Hardjawana, W.; Pignaton de Freitas, E. A Review of Flying Ad Hoc Networks: Key Characteristics, Applications, and Wireless Technologies. Remote Sens. 2022, 14, 4459.

14. Majeed, A.; Hwang, S.O. A Multi-Objective Coverage Path Planning Algorithm for UAVs to Cover Spatially Distributed Regions in Urban Environments. Aerospace 2021, 8, 343.

15. Das, L.B.; Das, L.B.; Lijiya, A.; Jagadanand, G.; Aadith, A.; Gautham, S.; Mohan, V.; Reuben, S.; George, G. Human Target Search and Detection using Autonomous UAV and Deep Learning. In Proceedings of the IEEE International Conference on Industry 4.0, Artificial Intelligence, and Communications Technology (IAICT), Bali, Indonesia, 7–8 July 2020; pp. 55–61.

16. Bandeira, T.W.; Coutinho, W.P.; Brito, A.V.; Subramanian, A. Analysis of Path Planning Algorithms Based on Travelling Salesman Problem Embedded in UAVs. In Proceedings of the Brazilian Symposium on Computing Systems Engineering (SBESC), Fortaleza, Porto Alegre, Brazil, 3–6 November 2015; pp. 70–75.

17. Cabreira, T.; Brisolara, L.; Ferreira, P.R., Jr. Survey on Coverage Path Planning with Unmanned Aerial Vehicles. Drones 2019, 3, 4.

18. Jünger, M.; Reinelt, G.; Rinaldi, G. The Traveling Salesman Problem. In Handbooks in Operations Research and Management Science; Elsevier B.V.: Amsterdam, The Netherlands, 1995; Volume 7, pp. 225–330.

19. Ali, M.; Md Rashid, N.K.A.; Mustafah, Y.M. Performance Comparison between RGB and HSV Color Segmentations for Road Signs Detection. Appl. Mech. Mater. 2013, 393, 550–555.

20. Haritha, D.; Bhagavathi, C. Distance Measures in RGB and HSV Color Spaces. In Proceedings of the 20th International Conference on Computers and Their Applications (CATA 2005), New Orleans, LA, USA, 16–18 March 2005.

21. Pooja, K.S.; Shreya, R.N.; Lakshmi, M.S.; Yashika, B.C.; Rekha, B.N. Color Recognition using K-Nearest Neighbors Machine Learning Classification Algorithm Trained with Color Histogram Features. Int. Res. J. Eng. Technol. (IRJET) 2021, 8, 1935–1936.

22. Pradeep, A.G.; Gnanapriya, M. Novel Contrast Enhancement Algorithm Using HSV Color Space. Int. J. Innov. Technol. Res. 2016, 4, 5073–5074.

23. Krishna, S.L.; Chaitanya, G.S.R.; Reddy, A.S.H.; Naidu, A.M.; Poorna, S.S.; Anuraj, K. Autonomous Human Detection System Mounted on a Drone. In Proceedings of the 2019 International Conference on Wireless Communications Signal Processing and Networking (WiSPNET), Chennai, India, 21–23 March 2019; pp. 335–338.

24. Mliki, H.; Bouhlel, F.; Hammami, H. Human activity recognition from UAV-captured video sequences. Pattern Recognit. 2020, 100, 107140.

25. Safadinho, D.; Ramos, J.; Ribeiro, R.; Filipe, V.; Barroso, J.; Pereira, A. UAV Landing Using Computer Vision Techniques for Human Detection. Sensors 2020, 20, 613.

26. Lygouras, E.; Santavas, N.; Taitzoglou, A.; Tarchanidis, K.; Mitropoulos, A.; Gasteratos, A. Unsupervised Human Detection with an Embedded Vision System on a Fully Autonomous UAV for Search and Rescue Operations. Sensors 2019, 19, 3542.

27. Do, M.-T.; Ha, M.-H.; Nguyen, D.-C.; Thai, K.; Ba, Q.-H.D. Human Detection Based Yolo Backbones-Transformer in UAVs. In Proceedings of the International Conference on System Science and Engineering (ICSSE), Ho Chi Minh, Vietnam, 27–28 July 2023; pp. 576–580.

28. Wijesundara, D.; Gunawardena, L.; Premachandra, C. Human Recognition from High-altitude UAV Camera Images by AI based Body Region Detection. In Proceedings of the Joint 12th International Conference on Soft Computing and Intelligent Systems and 23rd International Symposium on Advanced Intelligent Systems (SCIS & ISIS), Ise, Japan, 29 November–2 December 2022; pp. 1–4.