A wide range of applications, including sports and healthcare, use human activity recognition (HAR). The Internet of Things (IoT), using cloud systems, offers enormous resources but produces high delays and huge amounts of traffic. This study proposes a distributed intelligence and dynamic HAR architecture using smart IoT devices, edge devices, and cloud computing. These systems were used to train models, store results, and process real-time predictions. Wearable sensors and smartphones were deployed on the human body to detect activities from three positions; accelerometer and gyroscope parameters were utilized to recognize activities. A dynamic selection of models was used, depending on the availability of the data and the mobility of the users.

1. Introduction

The Internet of Things (IoT) has revolutionized the way we interact with our environment, enabling the connection of things to the internet [1,2]. One application of the IoT is human activity recognition (HAR), where smart devices can monitor and recognize human activities for various purposes. HAR is essential in a number of industries, including the sports [3,4], healthcare [5,6,7], and smart environment industries [8,9,10,11]; information about human activities has been collected using smartphones and wearable sensor technologies [12,13,14,15,16].

Machine learning (ML) plays a significant role in HAR systems [11]. ML automatically identifies and classifies different activities performed by individuals based on sensor data or other input sources. Feature extraction derives relevant features from raw sensor data, such as accelerometer or gyroscope readings, to represent different activities. ML models can be trained on labeled data to recognize patterns, make predictions, and classify activities based on the extracted features. Trained models can then be deployed to perform real-time activity recognition, allowing for immediate feedback or intervention. Feature fusion combines information from different sources to improve the accuracy and robustness of activity recognition systems [17,18,19].
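The feature extraction and fusion steps described above can be sketched as follows. This is a minimal illustration, not the feature set used in the paper: the window size, the step, the chosen statistics (per-axis mean, standard deviation, and mean magnitude), and all function names are assumptions made for the example.

```python
import math
import statistics
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[float, float, float]  # one tri-axial reading (x, y, z)


def sliding_windows(samples: Sequence[Sample], size: int, step: int) -> List[Sequence[Sample]]:
    """Split a stream of readings into fixed-size, possibly overlapping windows."""
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, step)]


def window_features(window: Sequence[Sample], prefix: str) -> Dict[str, float]:
    """Per-axis mean and standard deviation, plus mean magnitude, for one window."""
    features: Dict[str, float] = {}
    for axis_index, axis_name in enumerate("xyz"):
        values = [sample[axis_index] for sample in window]
        features[f"{prefix}_{axis_name}_mean"] = statistics.fmean(values)
        features[f"{prefix}_{axis_name}_std"] = statistics.pstdev(values)
    magnitudes = [math.sqrt(x * x + y * y + z * z) for x, y, z in window]
    features[f"{prefix}_mag_mean"] = statistics.fmean(magnitudes)
    return features


def fuse(accel_window: Sequence[Sample], gyro_window: Sequence[Sample]) -> Dict[str, float]:
    """Feature-level fusion: merge accelerometer and gyroscope features into one vector."""
    return {**window_features(accel_window, "acc"), **window_features(gyro_window, "gyr")}
```

Each fused dictionary corresponds to one row of a training table; a classifier would then be trained on these rows together with the activity labels.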

Cloud computing offers virtually unlimited resources for data storage and processing, making it an attractive option for HAR applications that use wearable sensors. The integration of edge and cloud computing offers promising solutions to the challenges associated with processing wearable sensor data. Edge computing provides low-latency and privacy-preserving capabilities, while cloud computing offers scalability and storage advantages [20,21,22].

Researchers have proposed hybrid architectures that combine the strengths of edge and cloud computing [21,22]. These architectures aim to achieve a balance between real-time processing at the edge and the scalability and storage capabilities of the cloud. Several studies reported improved recognition accuracy and reduced response times compared with a solely cloud-based approach [23,24,25].

Distributed intelligence methods that make use of cloud and edge computing have shown promise in addressing these issues [26,27]. The concept of distributed intelligence leverages the power of multiple interconnected devices to perform complex tasks. This paper explores the application of distributed intelligence in the IoT for human activity recognition, specifically focusing on the use of the Raspberry Pi as a platform. Healthcare systems using wearable sensors are an emerging field that aims to understand and classify human activities based on data collected from sensors integrated into wearable devices. These sensors can include accelerometers, gyroscopes, magnetometers, heart rate monitors, blood pressure monitors, and other medical sensors [8,12,27]. In this work, only accelerometers and gyroscopes were used.

Healthcare applications have been improved by combining wearable and mobile sensors with IoT infrastructure, and medical device usability has been improved by combining mobile applications with IoT technology. The IoT is expected to have a particularly significant impact on healthcare, with the potential to improve people’s quality of life in general. The authors of [28] presented a “Stress-Track” system using machine learning. Their device was intended to monitor an individual’s stress levels through measurements of body temperature, perspiration, and movement rate during exercise. The proposed model reached an accuracy of 99.5%, indicating its potential for stress reduction and better health.

Both wearable sensors and computer vision have been employed to recognize activities. Wearable sensors are worn by individuals, allowing for activity recognition in indoor and outdoor environments. They are also portable and collect data continuously, enabling long-term monitoring and analysis. Computer vision algorithms can capture a wider perspective of the environment, including objects, scenes, and interactions with other people, which provides more contextual information for activity recognition. However, precise computer vision-based activity recognition requires sufficient lighting and clear perspectives.

HAR has been implemented with different types of motion sensors; one of them is the MPU6050 inertial measurement unit (IMU) sensor, which includes a tri-axial gyroscope and a tri-axial accelerometer [16,29]. This small sensor module measures 21.2 mm in length, 16.4 mm in width, and 3.3 mm in height and weighs 2.1 g. It is a cheap and popular choice for capturing motion data in different applications, including HAR systems [7,16], sports [30,31], and earthquake detection [29]. The MPU6050 can be used for HAR, but achieving high accuracy requires careful consideration of sensor placement, feature extraction, classification algorithms, training data quality, and environmental factors [32].

Smartphones have also become increasingly popular as a platform for HAR due to their availability, built-in sensors, computational power, and connectivity capabilities. Smartphone sensors have been widely used in machine learning to recognize different categories of daily life activities. Several recent papers used smartphone sensors and focused on enhancing prediction accuracy and optimizing algorithms to speed up processing [14,15,32].

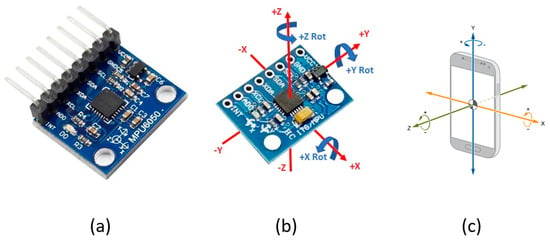

The orientation of these sensors should be considered according to the place of their installation, the nature of movements, and the dataset being used for training [17,33,34]. An MPU6050 module connected to an ESP32 microcontroller was installed vertically on the shin; another module connected to a Raspberry Pi 3 was installed horizontally on the waist, and a smartphone was placed vertically inside the pocket on the thigh. In the vertical installation, the +Y axis of the accelerometer points upward, and in the horizontal installation, the +X axis points upward. The directions of the MPU6050 module are shown in Figure 1a,b, and the smartphone directions are shown in Figure 1c [32,35].

Figure 1. Accelerometer and gyroscope directions in the MPU6050 module and the smartphone: (a,b) MPU6050 directions; (c) smartphone directions.
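The mounting convention described above can be checked in software. The sketch below is an assumption-laden illustration rather than part of the original system: it relies on the fact that an accelerometer at rest reads roughly +1 g along the axis that points upward, it assumes the wearer is standing still, and the `EXPECTED_UP` table (including the +Y entry for the smartphone on the thigh) and both function names are hypothetical.

```python
from typing import Dict, Sequence

# Assumed mapping from body position to the axis expected to point upward.
# Shin (vertical) and waist (horizontal) follow the text; the thigh entry
# assumes the vertically placed smartphone also has +Y upward.
EXPECTED_UP: Dict[str, str] = {"shin": "+Y", "waist": "+X", "thigh": "+Y"}


def upward_axis(mean_accel_g: Sequence[float]) -> str:
    """Return the signed axis (for example '+Y') carrying the largest gravity component."""
    axis = max(range(3), key=lambda i: abs(mean_accel_g[i]))
    sign = "+" if mean_accel_g[axis] >= 0 else "-"
    return sign + "XYZ"[axis]


def mounting_is_consistent(position: str, mean_accel_g: Sequence[float]) -> bool:
    """Compare the detected upward axis with the expected one for this position."""
    return upward_axis(mean_accel_g) == EXPECTED_UP[position]
```

For example, a mean reading of `(0.02, 0.98, -0.05)` g from the shin module would be reported as `+Y` and accepted, whereas the same reading from the waist module would indicate that the module is not mounted horizontally.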

2. Human Activity Recognition Using Wearable Sensors

In recent years, researchers have used public and private datasets for HAR with wearable sensors and feature fusion to recognize various movements. The authors of [8] used two smartphones with the WISDM public dataset. This dataset, which has been used in healthcare applications, contains three-dimensional inertial signals of thirteen time-stamped human activities, including walking, writing, smoking, and other activities. Their HAR system was based on effective hand-crafted features with a random forest classifier. These authors conducted sensitivity analyses of the applied model’s parameters, and the accuracy of their model reached 98.7% on average using two devices, one on the wrist and one in the pocket.

The authors of [16] used a deep learning algorithm with three parallel convolutional neural networks for local feature extraction, establishing feature fusion models with varying kernel sizes to increase the accuracy of HAR. Two datasets were used: the UCI dataset and a self-recorded dataset comprising 21 participants wearing devices on their waists and performing six activities in a laboratory. The accuracy on the UCI dataset and on the self-recorded dataset was 97.49% and 96.27%, respectively.

The authors of [35] used a waist sensor and two graphene/rubber sensors on the knees to detect falls in elderly people. One MPU6050 sensor located at the waist monitored the attitude of the body, and the rubber sensors monitored the movements of the legs by tracking their tilt angles in real time. The authors recorded four activities of daily living and six fall postures. Four basic fall-down postures can be identified with the MPU6050 sensor integrated with the rubber sensors. The accuracy was 93.5% for the recognition of activities of daily living and 90% for fall posture identification.

The authors of [36] proposed a smart e-health framework to monitor the health of elderly and disabled people. The authors generated notifications and performed analyses using edge computing. Three MPU9250 sensors were positioned on the body, on the left ankle, right wrist, and chest, using the MHEALTH dataset. The MPU9250 sensor integrates a magnetometer, a gyroscope, and a 3-axis accelerometer. A random forest machine learning model was used for inertial sensors in HAR. Two levels of analysis were considered: the first level was carried out using scalar sensors embedded in wearable devices, and cameras were used as the second level only if inconsistencies were identified at the first level. A video-based HAR and fall detection module achieved an accuracy of 86.97% on the DML Smart Actions dataset. The authors deployed the proposed HAR model with inertial sensors in a controlled experimental environment and achieved an accuracy of 96%.

The authors of [37] proposed a smart system to improve the quality of life of elderly people. Their IoT system uses large amounts of data, cloud computing, low-power wireless sensing networks, and smart devices to identify falls. Their technology gathered data from elderly people’s movements in real time by integrating an accelerometer into a wearable device. The signals from the sensor were processed and analyzed using a machine learning model on a gateway for fall detection. They employed sensor positioning and multiple channeling information changes in the training set, using the MobiAct public dataset. Their system achieved 95.87% accuracy, and their edge system was able to detect falls in real time.

The majority of these studies focused on improving algorithms, employing different datasets, or adding new features to increase accuracy. Occasionally, specialized hardware was added to improve efficiency and assist with classification. Wearable technology gathers data from sensors worn on the body, so comfort and mobility should be taken into account when sensors are positioned at various body locations.

This paper proposes an architecture to monitor a group of people and recognize their behavior. The system is distributed over various devices, including sensor devices, smart IoT devices, edge devices, and cloud computing. The architecture uses different machine learning models with different numbers of features so that it can handle scenarios where not all the sensors are available. The smart IoT device is a simple version of the Raspberry Pi microcomputer configured to run predictions locally. The Raspberry Pi 4 IoT edge can serve IoT devices, run predictions, and aggregate results in the cloud. The cloud processed requests and achieved an accuracy of 99.23% in training, while the edge and smart end devices achieved 99.19% accuracy with smaller datasets. In real-time scenarios, the measured accuracy was 93.5% when all the features were available. The smart end device processed each request in 6.38 milliseconds on average. The edge was faster, at 1.62 milliseconds on average, and could serve a group of users with a sufficient number of predictions per user. The system can serve more people by adding more edges or smart end devices. The architecture uses distributed intelligence to be dynamic and accurate and to support mobility.
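One way to picture the dynamic selection described above is a lookup keyed by the sensor positions that currently deliver data, combined with a fallback from the edge to the local device. The sketch below is only an illustration under stated assumptions: the one-model-per-subset registry, the model names, the rule "use the edge when it is reachable", and all function names are hypothetical and are not taken from the paper.

```python
from itertools import combinations
from typing import Dict, FrozenSet, Iterable

POSITIONS = ("shin", "waist", "thigh")  # the three sensor positions used in this work


def build_registry() -> Dict[FrozenSet[str], str]:
    """Assume one trained model per non-empty subset of sensor positions."""
    registry: Dict[FrozenSet[str], str] = {}
    for count in range(1, len(POSITIONS) + 1):
        for subset in combinations(POSITIONS, count):
            registry[frozenset(subset)] = "model_" + "_".join(subset)
    return registry


def select_model(available: Iterable[str], registry: Dict[FrozenSet[str], str]) -> str:
    """Pick the model that matches the positions currently delivering data."""
    key = frozenset(available) & frozenset(POSITIONS)
    if not key:
        raise ValueError("no sensor data available")
    return registry[key]


def select_target(edge_reachable: bool) -> str:
    """Assumed policy: predict on the edge when connected, otherwise locally."""
    return "edge" if edge_reachable else "smart_end_device"
```

With this layout, a user whose thigh smartphone stops reporting would be served by the hypothetical `model_shin_waist`, and a user who moves out of range of every edge would keep receiving predictions from the smart end device.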

In addition to its great performance, this proposal provided the following features:

- Integration and cooperation between the devices were efficient.
- The achieved accuracy was 99.19% in training and 93.5% in real time.
- The prediction time was efficient using the smart end and IoT edge devices.
- Dynamic selection worked efficiently in the case of connectivity with the edges.
- Dynamic selection of models worked efficiently in the case of feature availability.
- The architecture is scalable and serves more than 30 users per edge.
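The reported average latencies give a rough feel for the scalability claim. The calculation below is a back-of-the-envelope estimate, not a measurement from the paper: it assumes requests are processed strictly one after another and ignores network transfer, queueing, and feature extraction time. The function names are illustrative.

```python
def predictions_per_second(latency_ms: float) -> float:
    """Upper bound on sequential throughput for a given average latency."""
    return 1000.0 / latency_ms


def per_user_rate(latency_ms: float, users: int) -> float:
    """Predictions per second available to each user if capacity is shared evenly."""
    return predictions_per_second(latency_ms) / users


edge_rate = predictions_per_second(1.62)       # edge device, 1.62 ms per request
smart_end_rate = predictions_per_second(6.38)  # smart end device, 6.38 ms per request
edge_rate_per_user = per_user_rate(1.62, 30)   # 30 users sharing one edge
```

Under these assumptions, an edge could handle roughly 617 predictions per second, or about 20 per second for each of 30 users, while a smart end device could handle roughly 157 predictions per second.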

This entry is adapted from the peer-reviewed paper 10.3390/jsan13010005