Arrays of memristive devices coupled with photosensors can be used for capturing and processing visual information, thereby realizing the concept of “in-sensor computing”. This is a promising concept for the development of compact and low-power machine vision devices, which are crucially important for bionic eye prostheses, on-board image recognition systems for unmanned vehicles, computer vision in robotics, etc. This concept can be applied to the creation of memristor-based neuromorphic analog machine vision systems.

- neuromorphic systems

- memristive devices

- machine vision

- artificial intelligence

- artificial neural networks

- spiking neural networks

1. Introduction

The desire to create machines that are capable of seeing the world around them the way that people see it has motivated the development of computer vision systems for many years. An interesting fact is that the first neurocomputer in the history of mankind, created by Frank Rosenblatt in 1958 [1], was designed specifically to solve computer vision problems, in particular, to recognize the letters of the English alphabet. The work of F. Rosenblatt and many other pioneers in the field of neural network theory, more than half a century ago, demonstrated the high potential of artificial neural networks (ANNs) for solving computer vision problems; however, for a number of reasons, ANNs were not developed intensively at that time and were neglected for many years, a period known as the winter of artificial intelligence.

Currently, computer vision systems are designed in accordance with the conventional principles of creating computing systems with the von Neumann architecture. They have digital processor units (e.g., the RISC (reduced instruction set computer), ARM (advanced RISC machine), or VLIW (very long instruction word) instruction set architectures for DSP (digital signal processor) and VPU (vision processing unit) microprocessors or GPUs (graphics processing units)) for processing visual information, memories for storing commands and data, as well as input devices in the form of photosensors (e.g., CCD (charge-coupled device) or APS (active-pixel sensor) image sensors) with analog-to-digital converters. During the operation of such a system, the captured image of a scene from the outside world undergoes digitization, encoding, software preprocessing, and processing using a machine learning model such as an ANN (e.g., ResNet, VGG, YOLO, etc.). This processing involves storing a large number of parameters of the model itself and making a huge number of memory requests during the model’s inference on digital processor units that process data serially (albeit on multiple cores). Such systems require specialized computing hardware, which makes them complex, energy-hungry, and expensive.

Analyzing the published reviews [2,3], one can find out that memristor-based systems have advantages over modern transistor-based digital processing units in tasks related to the hardware implementation of ANNs. In particular, existing memristor-based chips make it possible to solve image processing problems with a high accuracy, while consuming two to three orders of magnitude less energy than GPUs and digital neuromorphic processors and using the chip area more efficiently. So, the authors of these papers have shown that matrix multiplication, which is the most frequent operation in ANN algorithms, can be performed in an analog form based on Ohm’s and Kirchhoff’s laws with a high level of parallelism, and this makes it possible to create systems that have a high performance and speed and consume little energy.
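The analog matrix multiplication described above can be illustrated with a minimal sketch of an idealized crossbar. It assumes ideal devices and wires (no line resistance, noise, or nonlinearity), and the function name, array size, and numerical values are hypothetical, chosen only for illustration.

```python
def crossbar_output_currents(conductances, voltages):
    """Return the column currents of an idealized memristor crossbar.

    conductances[i][j] is the conductance (siemens) of the device joining
    input row i to output column j; voltages[i] is the voltage (volts)
    applied to row i.
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for j in range(n_cols):
        for i in range(n_rows):
            # Ohm's law gives the current through one device: I = G * V.
            # Kirchhoff's current law sums these currents on the column wire.
            currents[j] += conductances[i][j] * voltages[i]
    return currents


# Hypothetical 3 x 2 array: conductances in siemens, inputs in volts.
G = [[1e-3, 2e-3],
     [2e-3, 1e-3],
     [1e-3, 1e-3]]
V = [0.1, 0.2, 0.3]
I = crossbar_output_currents(G, V)  # one current per output column
```

In a physical array all of these products and sums are formed at once by the circuit itself; the nested loops here only emulate that behavior in software.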

At the same time, the need for analog-to-digital and digital-to-analog conversions diminishes the potential energy gain from using memristors. Because memristive devices open up opportunities for creating neuromorphic systems in which all processing takes place in an analog form, it seems reasonable to exclude analog-to-digital and digital-to-analog conversions from machine vision systems. In this case, the signals from the photosensors can be fed to a memristor-based ANN and processed without digitization, because in a pretrained ANN, the memristors’ conductances form the model of visual information processing and simultaneously perform this processing. Moreover, if visual signals were fed directly to a memristor-based spiking neural network (SNN), its training could be performed during operation, as in biological systems.

2. Memristor-Based Systems for Machine Vision

The memristor, as the fourth passive element of electrical circuits, was proposed in 1971 by Prof. Leon O. Chua [4]. A little later [5], in 1976, Prof. Leon O. Chua proposed a generalized definition of the memristor and described it with a port equation equivalent to Ohm’s law and a set of state equations that describe the dynamics of the internal state variables. The start of the active study of memristors and systems based on these equations can be considered to be in 2008, when the article [6] was published. Currently, memristors are used to create computer memories ReRAM (Resistive Random Access Memory) [7] and CAM (Content-Addressable Memory) [8], hardware implementation of ANNs [9,10], and neuromorphic systems [11,12] (“in-memory computing”). It is important to note that a main feature of these devices is that the signals inside them are processed in an analog form.

The authors of article [13] were among the first to propose an image recognition system based on passive crossbar arrays of 20 × 20 memristors. This is a multilayer feed-forward ANN, trained to recognize images of letters of the Latin alphabet with an accuracy of approximately 97%. This work has shown the potential of using such technologies in the field of creating machine vision systems. This is also confirmed by significant advances in the use of memristors for pattern recognition via convolutional ANNs, reservoir computing models, and ANNs with artificial dendrites, as demonstrated in [14,15,16,17]. For example, popular models including VGG-16 and MobileNet have been successfully implemented and tested on the ImageNet dataset [14], and it was shown that for memristor-based ANNs, the power consumption is more than three orders of magnitude lower than that of a central processing unit and is 70 times lower than that of a typical application-specific integrated circuit chip [17]. In addition to traditional ANN architectures based on memristors, the authors of articles [18,19] presented and shared a human retina simulator that can be used in the development of promising variants of analog vision systems, among others.

The hardware implementation of a Hopfield network based on memristor chips and the result of its operation as an associative memory is described in article [20]. The authors have shown that by using both asynchronous and synchronous refresh schemes, complete-emotion images can be recalled from partial information. In [21], the authors demonstrated the processing of a video stream in real time with the selection of object boundaries using a 3D array of memristive devices based on HfO2, which is designed for programming the binary weights of four convolutional filters and parallel processing of input images. Their achievements in the field of hardware implementation of ANNs for a wide range of image processing tasks based on arrays of memristive devices with a size of 128 × 64 are presented in [22,23], and they have also shown that such devices are several times superior to graphics and signal processors in terms of speed and low power consumption.

The results of comparing systems based on memristors with modern transistor-based ANN hardware accelerators across various indicators are given in the reviews [2,3,24,25,26,27,28]. It can be seen from these articles that despite the analog principles of information processing, the prototypes of such systems provide high inference accuracy for ANN models in image recognition tasks. For example, the NeuRRAM chip [29] provides a 99% accuracy on the MNIST dataset and an 85.7% accuracy on the CIFAR-10 dataset; a 1 Mb resistive random-access memory (ReRAM) nvCIM macro [30] provides an inference accuracy of 98.8% on the MNIST dataset; and a 2 Mb nvCIM macro [31] provides, for ResNet-20, a 90.88% accuracy on the CIFAR-10 dataset and a 65.71% accuracy on the CIFAR-100 dataset.

In order to provide compatibility with modern digital IT infrastructure, systems based on memristive devices must have interfaces that make it possible to receive digital input data and return the results of their analog processing in digital form. For this reason, all the above prototypes of neuromorphic systems based on memristive devices [13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32] contain analog-to-digital converters (ADCs) and digital-to-analog converters (DACs) to interact with digital devices. Such sets of DACs and ADCs can become the bottleneck of memristor-based computing system architectures, just as the computer memory bus is the bottleneck of the von Neumann architecture, which reduces the potential benefits of applying them to the practical problems currently solved by existing computers. For example, if the system uses a 16 × 16 memristor crossbar array, then 256 scalar multiplications can be performed in one clock cycle, provided that all 16 input values are supplied simultaneously. At the same time, pushing the inputs into the DAC and then pulling the outputs from the ADC takes at least 32 clock cycles, which is disproportionately more than the single cycle needed to perform the matrix multiplication on the crossbar array.
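The arithmetic behind the 16 × 16 example can be reproduced with a short sketch. It assumes a simplified cost model in which each DAC or ADC channel transfers one value per clock cycle; the function name and the channel-count parameters are hypothetical and are not taken from the cited prototypes.

```python
def conversion_overhead(rows, cols, dac_channels=1, adc_channels=1):
    """Compare crossbar compute cycles with DAC/ADC transfer cycles.

    Assumes one input value per DAC channel per cycle and one output value
    per ADC channel per cycle (an illustrative model, not a measured one).
    """
    multiplications = rows * cols            # all performed in one analog step
    load_cycles = -(-rows // dac_channels)   # ceiling division
    read_cycles = -(-cols // adc_channels)   # ceiling division
    return {
        "multiplications_per_step": multiplications,
        "compute_cycles": 1,
        "conversion_cycles": load_cycles + read_cycles,
    }


# Single-channel converters, as in the example from the text.
result = conversion_overhead(16, 16)
```

Under this model, `result` reports 256 multiplications in 1 compute cycle against 32 conversion cycles. Adding converter channels shortens the transfer but costs extra circuitry, which is one motivation for removing the conversions altogether.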

That is why a topical area of research in this field is the development of architectures with as few signal conversions as possible. For example, the importance of developing such concepts was stated by the authors of article [8] in 2020, who were the first to propose an analog CAM circuit that takes advantage of the analog tunability of memristor conductance. It enables the processing of analog sensor data without the need for an analog-to-digital conversion step. In their study, a practical circuit implementation composed of six transistors and two memristors was demonstrated in both an experiment and a simulation. The authors note that the analog capability opens up the possibility of directly processing analog signals acquired from sensors, and is particularly attractive for Internet of Things applications due to the potentially low power and energy footprint.

The idea of fully analog computing is not new and existed long before the invention of memristive devices in 2008, and even before their theoretical description in the 1970s. For example, the US patent [33] published in 2006 discloses an analog CAM described in 1991 [34] that employs analog storage cells with programmable analog transfer function capabilities. The use of electrically erasable programmable read-only memory (EEPROM) cells in an analog storage device avoids the need to convert an analog waveform into a digital representation, reducing the complexity embodied in an integrated circuit as well as decreasing the die dimension. An earlier well-known example of a fully analog computer is “Sceptron”, a device for analog signal recognition developed in 1962 [35]. Sceptron not only performed a function that would have required hundreds of conventional filters and a large storage capacity at that time, but also ran a program itself for recognizing a complex signal without detailed knowledge of its characteristics. Among analog computers with a neural network architecture, one of the most famous is the adaptive Adaline neuron, implemented in 1962 [36] using “memistors” (not to be confused with “memristors”). With such an element it was possible to obtain an electronically variable gain control, along with the memory required for storing the system’s training experience. The authors created a neuron capable of being trained on and recognizing 3 × 3 patterns of the letters of the English alphabet. Of course, the earliest example of a fully analog ANN is the Mark-1 neurocomputer created by Frank Rosenblatt [1] in 1958, which was mentioned above.

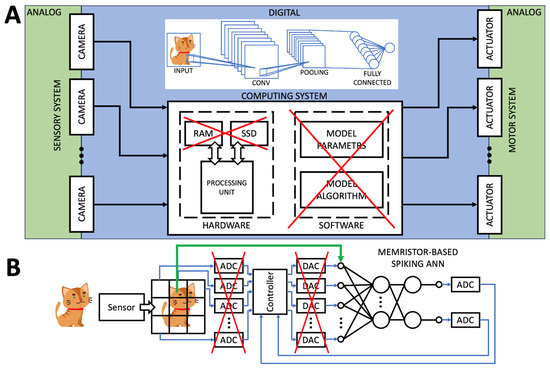

Because the main goal of machine vision systems is not to capture and save images, but to obtain information about objects in the field of vision (e.g., class, segment, ID, etc.), all data processing in such systems can be performed completely in analog form for optimal use of computational resources, as was proposed in [8]. In the photosensors of computer vision systems, information is captured in analog form, and it is reasonable to connect them to memristor-based ANNs without ADCs and DACs [37], implementing the concept of “in-sensor computing” [38]. As a result, there is no need to use software algorithms and models interacting with computer memory to load model parameters and store intermediate results. The system requires significantly fewer electronic components, becoming potentially faster and more energy efficient. In general, any electronic device capable of generating current (or changing the current strength in a circuit) depending on the intensity of illumination can be used as a photosensor in such a system (Figure 1).

Figure 1. The main features of the neuromorphic analog machine vision systems, combining “in-memory computing”, “in-sensor-computing”, and neuromorphic architectures. (A) In comparison with the existing digital computer vision systems, “in-memory computing” makes it possible to process visual information entirely within the hardware when the ANN models work completely in analog form using computers based on memristive devices. (B) In comparison with the existing general memristor-based computers, in the neuromorphic analog machine vision systems, there are no analog-to-digital and digital-to-analog conversions in the process of capturing visual information via photosensors. For these purposes, the devices for “in-sensor computation” can be used in the sensory part of a system for capturing visual information in analog form, which is then fed to an ANN based on memristors.

3. Memristor-Based Spiking ANNs

Throughout the history of its development, the theory of ANNs has been inspired by the results of studies on the principles of the functioning of biological neural networks (BNNs) [47]. The most powerful tool for solving computer vision problems currently is the convolutional ANN. The concept of convolutional ANNs was developed with regard to the peculiarities of the functioning of the visual cortex of the cerebral hemispheres, which processes signals from the optic nerve in a parallel, analog manner. Nevertheless, the same strict mathematical transformations occur in convolutional ANNs as in any other formal ANN architecture: matrix multiplication and activation. The weights of synapses in such ANNs are calculated using the backpropagation method, and visual information is encoded using the amplitude of the signal.

Inside the BNNs, information is transmitted through a network of neurons that have some activation potential [48], using signals called spikes. Spikes are transmitted from one neuron to another using an axon and are characterized by their frequency, duration, and amplitude. Contacts between neurons are formed at strictly defined points called synapses. It is currently believed that the phenomena of memory and learning in living organisms arise due to the mechanisms of synaptic plasticity, which consists of the possibility of changing the strength of the synapse and, accordingly, changing the parameters of transmitted signals. The existence of synaptic plasticity leads to the fact that the human nervous system can independently configure individual groups of neurons to perform various functions.

The resistance of a memristive device changes within the boundaries of its minimum and maximum possible values, which are referred to as the LRS (low resistance state) and the HRS (high resistance state), respectively. The curve describing the transition of a memristive device from one state to another under the influence of voltage pulses with different shapes, amplitudes, and frequencies is similar in appearance to that of experimental measurements of synaptic plasticity in BNNs [49]. Therefore, memristive devices are the most biosimilar artificial analogues of the synapses of neurons in living systems, and thus make it possible to implement neuromorphic ANN architectures in hardware.

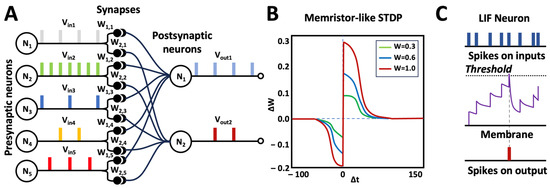

In spiking ANNs (Figure 2A), memristive devices connect presynaptic and postsynaptic neurons. The presynaptic neuron in the input layer of the network acts in this case as a generator of spikes, the frequency or timings of which encode the input information. For example, to process grayscale images, the brightness values of each pixel, represented by numbers from 0 to 255, can be represented by different frequencies ranging from 1 to 100 kHz. Spikes applied to a memristive device over a period of time change its resistance (Figure 2B)—this is a type of self-learning based on the local rules for each synapse.
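The rate coding of pixel brightness mentioned above can be sketched as follows. The linear mapping between brightness and frequency is an assumption made for illustration (the text only gives the two ranges), and the function names are hypothetical.

```python
def brightness_to_frequency_hz(pixel, f_min_hz=1_000.0, f_max_hz=100_000.0):
    """Map an 8-bit brightness value (0..255) to a spike frequency in hertz.

    Assumes a linear mapping: 0 -> f_min_hz and 255 -> f_max_hz.
    """
    if not 0 <= pixel <= 255:
        raise ValueError("pixel must be in the range 0..255")
    return f_min_hz + (f_max_hz - f_min_hz) * pixel / 255


def spike_count(pixel, window_s):
    """Number of complete spike periods that fit into an observation window."""
    return int(brightness_to_frequency_hz(pixel) * window_s)


# A dark pixel and a bright pixel observed over the same 123-microsecond window.
dark_spikes = spike_count(0, 123e-6)
bright_spikes = spike_count(255, 123e-6)
```

Over the same window a brighter pixel delivers more spikes to its memristive synapse, which is how the input intensity can influence the resulting change in resistance.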

Figure 2. Spiking ANNs based on memristive devices. (A) Common architecture includes presynaptic neurons and postsynaptic neurons, connected by artificial synapses implemented with memristors. Presynaptic neurons generate spikes encoding input information. Spikes go through the memristive synapses and locally change their resistances in accordance with the STDP (spike timing-dependent plasticity) rule, providing the self-learning of the whole ANN. (B) The dependence of the change ΔW in synaptic conductance on the interval Δt between a presynaptic spike and a postsynaptic spike for different current synaptic conductance values W. (C) A spiking neuron receives sequences of spikes on its inputs and, under certain conditions, generates a spike at its output; for example, in the LIF (leaky integrate-and-fire) model, each spike contributes to the neuron’s status—its amplitude, which decays over time; if a sufficient number of spikes contributes to the status in a certain time window, the neuron’s amplitude exceeds a threshold, and the neuron generates an output spike [2].

A postsynaptic neuron is a device capable of accumulating charge from all presynaptic neurons (Figure 2C), taking into account the voltage drop across the memristors (the so-called neuron membrane). It has a certain threshold charge value; when the accumulated charge exceeds this threshold, the postsynaptic neuron generates a spike. The final distribution of resistances of memristive devices determines the functionality of such a network of presynaptic and postsynaptic neurons and allows the solving of problems in the field of robotics, prosthetics, telecommunications, etc.
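The accumulate-and-threshold behavior of a postsynaptic neuron can be illustrated with a discrete-time sketch of the LIF model. The time step, synaptic weight, leak factor, threshold, and reset-to-zero rule are all hypothetical simplifications chosen for illustration.

```python
def lif_run(input_spikes, weight=0.4, leak=0.8, threshold=1.0):
    """Simulate one leaky integrate-and-fire neuron in discrete time steps.

    input_spikes: sequence of 0/1 values, one per time step.
    Returns a list of 0/1 output spikes of the same length.
    """
    potential = 0.0
    output = []
    for spike in input_spikes:
        # The stored value decays (leaks), then the weighted input is added.
        potential = potential * leak + weight * spike
        if potential >= threshold:
            output.append(1)
            potential = 0.0  # reset after firing
        else:
            output.append(0)
    return output


dense = lif_run([1, 1, 1, 1])                      # spikes on every step
sparse = lif_run([1, 0, 0, 1, 0, 0, 1, 0, 0, 1])   # spikes on every third step
```

With these values the dense train reaches the threshold on its fourth step, while the sparse train never fires because the leak removes charge faster than the input supplies it.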

In neuroscience, there are currently several mathematical models of spiking neurons [50]: Hodgkin–Huxley [51], FitzHugh–Nagumo [52], Izhikevich [53], Koch and Segev [54], Bower and Beeman [55], Abbott [56], etc. The differences between these models lie in their degree of biological realism, the type of object being modeled (presynaptic neuron, neuronal membrane, neuron’s morphology, etc.), and in their level of computational complexity. The latter property is very important for the hardware implementation of neuromorphic processors, since it directly affects the number of components used, the complexity of electrical circuits, and their energy consumption. The research in [11,17,57,58,59,60,61,62] shows that even simple spike shapes (rectangular or triangular) and neuron models (leaky integrate-and-fire) make it possible to solve recognition problems in memristor-based SNNs, reducing energy consumption and increasing robustness [59] in comparison with formal ANNs. Unsupervised learning in such systems is based on the STDP mechanism and provided by the overlapped waveform of pre-spikes and post-spikes within a certain time window, which determines the behavior of memristive devices during the feedback process [11,58,60].
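The dependence of the conductance change on spike timing can be sketched with a pair-based STDP rule. The exponential window, the soft bounds that make the update depend on the current weight, the sign convention for the interval (postsynaptic time minus presynaptic time), and all parameter values are assumptions made for illustration; they are not taken from the cited works.

```python
import math


def stdp_delta_w(delta_t, w, a_plus=0.1, a_minus=0.12,
                 tau=20e-3, w_min=0.0, w_max=1.0):
    """Return the weight change for one pre/post spike pair.

    delta_t: t_post - t_pre in seconds (assumed sign convention).
    w: current normalized synaptic weight, between w_min and w_max.
    """
    if delta_t > 0:
        # Pre before post: potentiation, smaller as w approaches w_max.
        return a_plus * (w_max - w) * math.exp(-delta_t / tau)
    if delta_t < 0:
        # Post before pre: depression, smaller as w approaches w_min.
        return -a_minus * (w - w_min) * math.exp(delta_t / tau)
    return 0.0


potentiation = stdp_delta_w(5e-3, 0.5)   # pre leads post by 5 ms
depression = stdp_delta_w(-5e-3, 0.5)    # post leads pre by 5 ms
```

In a memristive synapse the corresponding update would come from the overlap of the pre-spike and post-spike waveforms across the device rather than from an explicit formula.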

To generate presynaptic spikes, specialized electrical circuits based on analog switches, operational amplifiers, and current mirrors, such as in [63,64,65], have been developed. They are controlled digitally and externally using an FPGA or microcontroller, initiating spike generation based on the input data. With this approach, the spike generator acts as a device that encodes the input digital information received by the computer through an external interface in the form of a sequence of pulses represented in analog form. This makes it possible to encode any information—tabular, visual, audio, etc. However, in most papers, either the electrical circuits of spike generators are not considered in detail [10,13,15,16,20,22,57,66,67], since the authors pay more attention to signal processing inside the crossbar arrays, or multi-channel DACs are used as a generator while also having external control from an FPGA or microcontroller, as in [9,24,29,61]. Such systems have versatility with respect to the type of information being processed, but require external control and interfaces for receiving data.

Thus, memristive devices make it possible to implement artificial synapses in hardware for different ANN types [57,66], e.g., for formal ANNs, in which the processed information is encoded in the signal amplitude, and for spiking ANNs, in which the processed information is encoded in the signal frequency. However, spiking ANNs are more biologically plausible, because they make it possible to realize unsupervised learning by means of an STDP mechanism or paired-pulse facilitation [68,69]. Therefore, on the way to bringing together “in-sensor computing” and “in-memory computing”, the most promising system architecture should be based on spiking ANN architectures.

This entry is adapted from the peer-reviewed paper 10.3390/app132413309