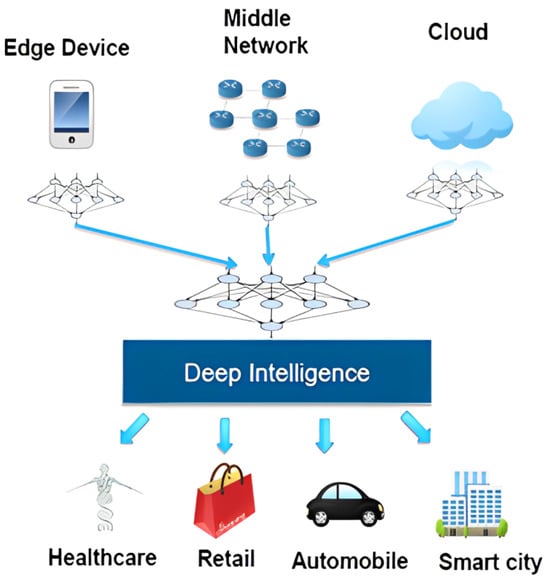

The Internet of Things (IoT) has emerged as a pivotal technological paradigm that interconnects intelligent devices across many domains. The proliferation of IoT devices has produced an unprecedented surge of data, which makes efficient processing, meaningful analysis, and informed decision making difficult. Deep-learning (DL) methods, notably convolutional neural networks (CNNs), recurrent neural networks (RNNs), and deep-belief networks (DBNs), have proven effective at addressing these challenges. They provide robust tools for learning from, and extracting insights out of, the large and diverse data that IoT systems generate.

- Deep Learning

- Healthcare

- Recurrent Neural Networks

- Internet of Things

- Convolutional Neural Networks

- Surveillance

1. Introduction


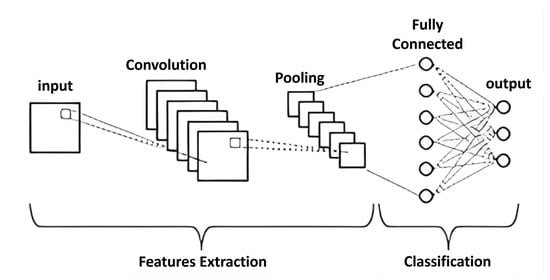

Convolutional neural networks (Figure 2): CNNs are tailored to image and video analysis. They excel at detecting spatial patterns through convolutional layers, which makes them invaluable for tasks such as image classification and object detection. Their strength lies in hierarchical feature extraction. Each convolutional layer slides filters (kernels) across its input and produces feature maps that respond to local features such as edges, textures, and shapes, and deeper layers combine these responses into progressively more complex visual cues [10][11][12][13][14][15][16][17][18][19][20][21][22][23][24][25][26][27].
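To make the filter-scanning idea concrete, the following minimal Python sketch slides a single 3x3 kernel over a toy grayscale image. It assumes stride 1 and no padding, and it uses a hand-picked Sobel-like vertical-edge kernel purely for illustration. In an actual CNN the kernel weights are learned during training and each layer runs many filters on optimized hardware. The function names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` with stride 1 and no padding ("valid" mode).

    Like most deep-learning libraries, this computes cross-correlation
    (the kernel is not flipped), which is what CNN layers call "convolution".
    """
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = ih - kh + 1, iw - kw + 1
    output = [[0.0] * out_w for _ in range(out_h)]
    for r in range(out_h):
        for c in range(out_w):
            # Weighted sum of the image patch currently under the kernel
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            output[r][c] = acc
    return output


def relu(feature_map: Matrix) -> Matrix:
    """Keep positive responses and zero out negative ones."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hand-picked vertical-edge kernel (Sobel-like), for illustration only;
# a trained CNN would learn its kernel weights from data.
VERTICAL_EDGE = [
    [-1.0, 0.0, 1.0],
    [-2.0, 0.0, 2.0],
    [-1.0, 0.0, 1.0],
]

if __name__ == "__main__":
    # 5x6 toy image: dark left half (0), bright right half (1)
    image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]
    feature_map = relu(conv2d_valid(image, VERTICAL_EDGE))
    for row in feature_map:
        print(row)
```

Running the script prints `[0.0, 4.0, 4.0, 0.0]` for each of the three output rows. The nonzero responses sit exactly where the dark-to-bright transition lies, which is the kind of low-level edge feature that deeper layers then combine.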


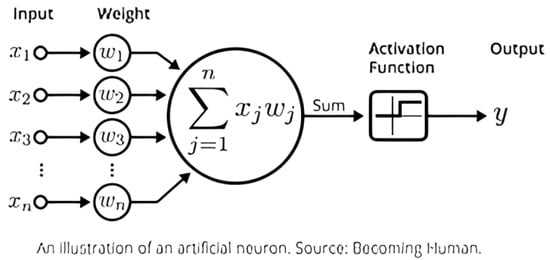

Fully connected neural networks (Figure 3): These networks, also known as multilayer perceptrons (MLPs), connect every neuron in one layer to every neuron in the next. They are versatile and can be used for many tasks, including regression and classification. When placed as the final layers of a deep neural network such as a CNN, fully connected layers play a central role in combining the features produced by the preceding layers into the network's final predictions [59][60][61][62][63][64][65][66][67][68][69][70][71].
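The following minimal Python sketch shows what "fully connected" means computationally: each output unit is a weighted sum of every input plus a bias (y = Wx + b). It stacks two such layers with a ReLU in between and applies a softmax at the end to turn the final layer's scores into class probabilities. The weights are arbitrary toy values chosen for readability, not trained parameters, and the helper names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def dense(x: Vector, weights: Matrix, bias: Vector) -> Vector:
    """Fully connected layer: every output unit sees every input (y = Wx + b)."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
            for row, b_i in zip(weights, bias)]


def relu(v: Vector) -> Vector:
    return [max(0.0, a) for a in v]


def softmax(logits: Vector) -> Vector:
    # Subtract the largest logit before exponentiating for numerical stability
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mlp_classify(features: Vector, layers: Sequence[Tuple[Matrix, Vector]]) -> Vector:
    """Pass features through (weights, bias) pairs, with ReLU between layers
    and softmax after the final (output) layer to get class probabilities."""
    h = features
    for k, (w, b) in enumerate(layers):
        h = dense(h, w, b)
        if k < len(layers) - 1:
            h = relu(h)
    return softmax(h)


if __name__ == "__main__":
    # Toy input, e.g. a flattened feature vector from earlier layers
    features = [1.0, 2.0]
    hidden = ([[1, 0], [0, 1], [1, 1]], [0, 0, -1])  # 2 inputs -> 3 units
    output = ([[1, 0, 0], [0, 0, 1]], [0, 0])        # 3 units -> 2 classes
    probs = mlp_classify(features, [hidden, output])
    print([round(p, 3) for p in probs])
```

With these toy weights the hidden layer outputs `[1.0, 2.0, 2.0]`, the output layer produces the scores `[1.0, 2.0]`, and softmax maps them to roughly `[0.269, 0.731]`, so the second class is predicted.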

2. Overview of Deep-Learning Performance in Typical IoT Applications

2.1. Anomaly Detection

2.2. Human-Activity Recognition

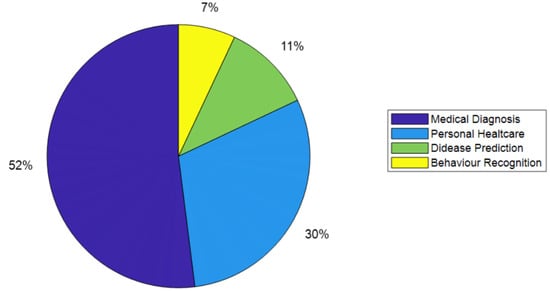

2.3. Healthcare

2.4. IoT for Surveillance Applications


Amazon Rekognition: Amazon Rekognition is a deep-learning-based image- and video-analysis service that can identify objects, people, text, scenes, and activities in real time. In surveillance applications it is used for facial recognition, object detection, and traffic monitoring [77][78][79][80].
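As a rough illustration of how an IoT surveillance pipeline might call the service, the following Python sketch sends a camera snapshot to Rekognition's DetectLabels operation through the AWS SDK for Python (boto3) and counts detected instances of one label, such as people, above a confidence threshold. It assumes boto3 is installed and that AWS credentials and a region are configured. The helper names, the 80% default threshold, the `MaxLabels` value, and the file name `frame.jpg` are hypothetical, illustrative choices rather than recommended settings. The counting helper works on the response dictionary alone, so it can be exercised without an AWS account; the sample response in the demo is hand-written, not real service output.

```python
from typing import Any, Dict


def detect_labels_in_file(path: str, min_confidence: float = 80.0) -> Dict[str, Any]:
    """Send one image (e.g. a camera snapshot) to Amazon Rekognition DetectLabels.

    Requires the boto3 package plus configured AWS credentials and region.
    """
    import boto3  # imported lazily so count_instances() works without boto3

    client = boto3.client("rekognition")
    with open(path, "rb") as f:
        return client.detect_labels(
            Image={"Bytes": f.read()},
            MaxLabels=20,                  # illustrative cap on returned labels
            MinConfidence=min_confidence,
        )


def count_instances(response: Dict[str, Any], label: str,
                    min_confidence: float = 80.0) -> int:
    """Count bounding-box instances of `label` (e.g. "Person", "Car") in a
    DetectLabels response, keeping only instances at or above the threshold."""
    count = 0
    for lab in response.get("Labels", []):
        if lab.get("Name") != label:
            continue
        for inst in lab.get("Instances", []):
            if inst.get("Confidence", 0.0) >= min_confidence:
                count += 1
    return count


if __name__ == "__main__":
    # Hand-written stand-in for a DetectLabels response (not real service output)
    sample = {
        "Labels": [
            {"Name": "Person", "Confidence": 99.2,
             "Instances": [{"Confidence": 99.2}, {"Confidence": 64.0}]},
            {"Name": "Car", "Confidence": 91.5,
             "Instances": [{"Confidence": 91.5}]},
        ]
    }
    print(count_instances(sample, "Person"))  # second person is below 80%
    # On a configured machine (hypothetical file name):
    # count_instances(detect_labels_in_file("frame.jpg"), "Person")
```

Keeping the AWS call and the post-processing in separate functions is a design choice that lets the filtering logic be tested offline and reused with responses obtained by other means.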

3. Summary

This entry is adapted from the peer-reviewed paper 10.3390/electronics12244925.

References

- Kopetz, H.; Steiner, W. Internet of things. In Real-Time Systems: Design Principles for Distributed Embedded Applications; Springer: Berlin/Heidelberg, Germany, 2022; pp. 325–341.

- Liu, W.; Wang, Z.; Liu, X.; Zeng, N.; Liu, Y.; Alsaadi, F.E. A survey of deep neural network architectures and their applications. Neurocomputing 2017, 234, 11–26.

- Saponara, S.; Elhanashi, A.; Gagliardi, A. Reconstruct fingerprint images using deep learning and sparse autoencoder algorithms. In Proceedings of the Real-Time Image Processing and Deep Learning 2021, Brussels, Belgium, 12–16 April 2021; Volume 11736, pp. 9–18.

- Saponara, S.; Elhanashi, A.; Gagliardi, A. Enabling YOLOv2 Models to Monitor Fire and Smoke Detection Remotely in Smart Infrastructures. In Proceedings of the Applications in Electronics Pervading Industry, Environment and Society: APPLEPIES 2020, Virtual Online, 19–20 November 2020; Springer: Berlin/Heidelberg, Germany, 2021; pp. 30–38.

- Dini, P.; Elhanashi, A.; Begni, A.; Saponara, S.; Zheng, Q.; Gasmi, K. Overview on Intrusion Detection Systems Design Exploiting Machine Learning for Networking Cybersecurity. Appl. Sci. 2023, 13, 7507.

- Begni, A.; Dini, P.; Saponara, S. Design and Test of an LSTM-Based Algorithm for Li-Ion Batteries Remaining Useful Life Estimation. In Proceedings of the International Conference on Applications in Electronics Pervading Industry, Environment and Society, Genoa, Italy, 26–27 September 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 373–379.

- Elhanashi, A.; Gasmi, K.; Begni, A.; Dini, P.; Zheng, Q.; Saponara, S. Machine Learning Techniques for Anomaly-Based Detection System on CSE-CIC-IDS2018 Dataset. In Proceedings of the International Conference on Applications in Electronics Pervading Industry, Environment and Society, Genoa, Italy, 26–27 September 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 131–140.

- Dini, P.; Begni, A.; Ciavarella, S.; De Paoli, E.; Fiorelli, G.; Silvestro, C.; Saponara, S. Design and Testing Novel One-Class Classifier Based on Polynomial Interpolation With Application to Networking Security. IEEE Access 2022, 10, 67910–67924.

- Dini, P.; Saponara, S. Analysis, design, and comparison of machine-learning techniques for networking intrusion detection. Designs 2021, 5, 9.

- Budiman, A.; Yaputera, R.A.; Achmad, S.; Kurniawan, A. Student attendance with face recognition (LBPH or CNN): Systematic literature review. Procedia Comput. Sci. 2023, 216, 31–38.

- Dong, J.; He, F.; Guo, Y.; Zhang, H. A Commodity Review Sentiment Analysis Based on BERT-CNN Model. In Proceedings of the 2020 5th International Conference on Computer and Communication Systems (ICCCS), Shanghai, China, 15–18 May 2020; pp. 143–147.

- Dhruv, P.; Naskar, S. Image classification using convolutional neural network (CNN) and recurrent neural network (RNN): A review. In Proceedings of the International Conference on Machine Learning and Information Processing (ICMLIP 2019), Pune, India, 27–28 December 2019; pp. 367–381.

- Li, W.; Zhu, L.; Shi, Y.; Guo, K.; Cambria, E. User reviews: Sentiment analysis using lexicon integrated two-channel CNN–LSTM family models. Appl. Soft Comput. 2020, 94, 106435.

- Yao, G.; Lei, T.; Zhong, J. A review of convolutional-neural-network-based action recognition. Pattern Recognit. Lett. 2019, 118, 14–22.

- Minaee, S.; Azimi, E.; Abdolrashidi, A. Deep-sentiment: Sentiment analysis using ensemble of cnn and bi-lstm models. arXiv 2019, arXiv:1904.04206.

- Rehman, A.U.; Malik, A.K.; Raza, B.; Ali, W. A hybrid CNN-LSTM model for improving accuracy of movie reviews sentiment analysis. Multimed. Tools Appl. 2019, 78, 26597–26613.

- Sindagi, V.A.; Patel, V.M. A survey of recent advances in cnn-based single image crowd counting and density estimation. Pattern Recognit. Lett. 2018, 107, 3–16.

- Li, X.; Li, Q.; Kim, J. A Review Helpfulness Modeling Mechanism for Online E-commerce: Multi-Channel CNN End-to-End Approach. Appl. Artif. Intell. 2023, 37, 2166226.

- Indira, D.N.V.S.L.S.; Goddu, J.; Indraja, B.; Challa, V.M.L.; Manasa, B. A review on fruit recognition and feature evaluation using CNN. Mater. Today Proc. 2023, 80, 3438–3443.

- Liu, Y.; Wang, L.; Shi, T.; Li, J. Detection of spam reviews through a hierarchical attention architecture with N-gram CNN and Bi-LSTM. Inf. Syst. 2022, 103, 101865.

- Bhuvaneshwari, P.; Rao, A.N.; Robinson, Y.H.; Thippeswamy, M. Sentiment analysis for user reviews using Bi-LSTM self-attention based CNN model. Multimed. Tools Appl. 2022, 81, 12405–12419.

- Allmendinger, A.; Spaeth, M.; Saile, M.; Peteinatos, G.G.; Gerhards, R. Precision chemical weed management strategies: A review and a design of a new CNN-based modular spot sprayer. Agronomy 2022, 12, 1620.

- Lu, J.; Tan, L.; Jiang, H. Review on convolutional neural network (CNN) applied to plant leaf disease classification. Agriculture 2021, 11, 707.

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 1–74.

- Kattenborn, T.; Leitloff, J.; Schiefer, F.; Hinz, S. Review on Convolutional Neural Networks (CNN) in vegetation remote sensing. ISPRS J. Photogramm. Remote Sens. 2021, 173, 24–49.

- Alahmari, F.; Naim, A.; Alqahtani, H. E-Learning Modeling Technique and Convolution Neural Networks in Online Education. In IoT-Enabled Convolutional Neural Networks: Techniques and Applications; River Publishers: Aalborg, Denmark, 2023; pp. 261–295.

- Krichen, M. Convolutional neural networks: A survey. Computers 2023, 12, 151.

- Mers, M.; Yang, Z.; Hsieh, Y.A.; Tsai, Y. Recurrent neural networks for pavement performance forecasting: Review and model performance comparison. Transp. Res. Rec. 2023, 2677, 610–624.

- Kaur, M.; Mohta, A. A Review of Deep Learning with Recurrent Neural Network. In Proceedings of the 2019 International Conference on Smart Systems and Inventive Technology (ICSSIT), Tirunelveli, India, 27–29 November 2019; pp. 460–465.

- Al-Smadi, M.; Qawasmeh, O.; Al-Ayyoub, M.; Jararweh, Y.; Gupta, B. Deep Recurrent neural network vs. support vector machine for aspect-based sentiment analysis of Arabic hotels’ reviews. J. Comput. Sci. 2018, 27, 386–393.

- Chen, Y.; Cheng, Q.; Cheng, Y.; Yang, H.; Yu, H. Applications of Recurrent Neural Networks in Environmental Factor Forecasting: A Review. Neural Comput. 2018, 30, 2855–2881.

- Durstewitz, D.; Koppe, G.; Thurm, M.I. Reconstructing computational system dynamics from neural data with recurrent neural networks. Nat. Rev. Neurosci. 2023, 24, 693–710.

- Bonassi, F.; Farina, M.; Xie, J.; Scattolini, R. On recurrent neural networks for learning-based control: Recent results and ideas for future developments. J. Process. Control. 2022, 114, 92–104.

- Zhu, J.; Jiang, Q.; Shen, Y.; Qian, C.; Xu, F.; Zhu, Q. Application of recurrent neural network to mechanical fault diagnosis: A review. J. Mech. Sci. Technol. 2022, 36, 527–542.

- Mao, S.; Sejdić, E. A Review of Recurrent Neural Network-Based Methods in Computational Physiology. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 6983–7003.

- Weerakody, P.B.; Wong, K.W.; Wang, G.; Ela, W. A review of irregular time series data handling with gated recurrent neural networks. Neurocomputing 2021, 441, 161–178.

- Hibat-Allah, M.; Ganahl, M.; Hayward, L.E.; Melko, R.G.; Carrasquilla, J. Recurrent neural network wave functions. Phys. Rev. Res. 2020, 2, 023358.

- Barik, K.; Misra, S.; Ray, A.K.; Bokolo, A. LSTM-DGWO-Based Sentiment Analysis Framework for Analyzing Online Customer Reviews. Comput. Intell. Neurosci. 2023, 2023, 6348831.

- Yu, Y.; Si, X.; Hu, C.; Zhang, J. A review of recurrent neural networks: LSTM cells and network architectures. Neural Comput. 2019, 31, 1235–1270.

- Rao, G.; Huang, W.; Feng, Z.; Cong, Q. LSTM with sentence representations for document-level sentiment classification. Neurocomputing 2018, 308, 49–57.

- Yadav, V.; Verma, P.; Katiyar, V. Long short term memory (LSTM) model for sentiment analysis in social data for e-commerce products reviews in Hindi languages. Int. J. Inf. Technol. 2023, 15, 759–772.

- Ghimire, S.; Deo, R.C.; Wang, H.; Al-Musaylh, M.S.; Casillas-Pérez, D.; Salcedo-Sanz, S. Stacked LSTM sequence-to-sequence autoencoder with feature selection for daily solar radiation prediction: A review and new modeling results. Energies 2022, 15, 1061.

- Sivakumar, M.; Uyyala, S.R. Aspect-based sentiment analysis of mobile phone reviews using LSTM and fuzzy logic. Int. J. Data Sci. Anal. 2021, 12, 355–367.

- Muhammad, P.F.; Kusumaningrum, R.; Wibowo, A. Sentiment analysis using Word2vec and long short-term memory (LSTM) for Indonesian hotel reviews. Procedia Comput. Sci. 2021, 179, 728–735.

- Hossain, N.; Bhuiyan, M.R.; Tumpa, Z.N.; Hossain, S.A. Sentiment Analysis of Restaurant Reviews using Combined CNN-LSTM. In Proceedings of the 2020 11th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kharagpur, India, 1–3 July 2020; pp. 1–5.

- Bacanin, N.; Jovanovic, L.; Zivkovic, M.; Kandasamy, V.; Antonijevic, M.; Deveci, M.; Strumberger, I. Multivariate energy forecasting via metaheuristic tuned long-short term memory and gated recurrent unit neural networks. Inf. Sci. 2023, 642, 119122.

- Santur, Y. Sentiment Analysis Based on Gated Recurrent Unit. In Proceedings of the 2019 International Artificial Intelligence and Data Processing Symposium (IDAP), Malatya, Turkey, 21–22 September 2019; pp. 1–5.

- Chen, J.; Jing, H.; Chang, Y.; Liu, Q. Gated recurrent unit based recurrent neural network for remaining useful life prediction of nonlinear deterioration process. Reliab. Eng. Syst. Saf. 2019, 185, 372–382.

- Wang, Y.; Liao, W.; Chang, Y. Gated recurrent unit network-based short-term photovoltaic forecasting. Energies 2018, 11, 2163.

- Shen, G.; Tan, Q.; Zhang, H.; Zeng, P.; Xu, J. Deep learning with gated recurrent unit networks for financial sequence predictions. Procedia Comput. Sci. 2018, 131, 895–903.

- Shukla, P.K.; Stalin, S.; Joshi, S.; Shukla, P.K.; Pareek, P.K. Optimization assisted bidirectional gated recurrent unit for healthcare monitoring system in big-data. Appl. Soft Comput. 2023, 138, 110178.

- ArunKumar, K.; Kalaga, D.V.; Kumar, C.M.S.; Kawaji, M.; Brenza, T.M. Comparative analysis of gated recurrent units (GRU), long short-term memory (LSTM) cells, autoregressive integrated moving average (ARIMA), seasonal autoregressive integrated moving average (SARIMA) for forecasting COVID-19 trends. Alex. Eng. J. 2022, 61, 7585–7603.

- Lin, H.; Gharehbaghi, A.; Zhang, Q.; Band, S.S.; Pai, H.T.; Chau, K.W.; Mosavi, A. Time series-based groundwater level forecasting using gated recurrent unit deep neural networks. Eng. Appl. Comput. Fluid Mech. 2022, 16, 1655–1672.

- Farah, S.; Humaira, N.; Aneela, Z.; Steffen, E. Short-term multi-hour ahead country-wide wind power prediction for Germany using gated recurrent unit deep learning. Renew. Sustain. Energy Rev. 2022, 167, 112700.

- Zhao, N.; Gao, H.; Wen, X.; Li, H. Combination of Convolutional Neural Network and Gated Recurrent Unit for Aspect-Based Sentiment Analysis. IEEE Access 2021, 9, 15561–15569.

- Zhang, Y.G.; Tang, J.; He, Z.Y.; Tan, J.; Li, C. A novel displacement prediction method using gated recurrent unit model with time series analysis in the Erdaohe landslide. Nat. Hazards 2021, 105, 783–813.

- Sachin, S.; Tripathi, A.; Mahajan, N.; Aggarwal, S.; Nagrath, P. Sentiment analysis using gated recurrent neural networks. Comput. Sci. 2020, 1, 74.

- Tang, D.; Rong, W.; Qin, S.; Yang, J.; Xiong, Z. A n-gated recurrent unit with review for answer selection. Neurocomputing 2020, 371, 158–165.

- Sun, J.; Fard, A.P.; Mahoor, M.H. Xnodr and xnidr: Two accurate and fast fully connected layers for convolutional neural networks. J. Intell. Robot. Syst. 2023, 109, 17.

- Laredo, D.; Ma, S.F.; Leylaz, G.; Schütze, O.; Sun, J.Q. Automatic model selection for fully connected neural networks. Int. J. Dyn. Control. 2020, 8, 1063–1079.

- Petersen, P.; Voigtlaender, F. Equivalence of approximation by convolutional neural networks and fully-connected networks. Proc. Am. Math. Soc. 2020, 148, 1567–1581.

- Wang, Y.; Zhang, F.; Zhang, X.; Zhang, S. Series AC Arc Fault Detection Method Based on Hybrid Time and Frequency Analysis and Fully Connected Neural Network. IEEE Trans. Ind. Inform. 2019, 15, 6210–6219.

- Borovykh, A.; Oosterlee, C.W.; Bohté, S.M. Generalization in fully-connected neural networks for time series forecasting. J. Comput. Sci. 2019, 36, 101020.

- Ganju, K.; Wang, Q.; Yang, W.; Gunter, C.A.; Borisov, N. Property inference attacks on fully connected neural networks using permutation invariant representations. In Proceedings of the 2018 ACM SIGSAC Conference on Computer and Communications Security, Toronto, ON, Canada, 15–19 October 2018; pp. 619–633.

- Honcharenko, T.; Akselrod, R.; Shpakov, A. Information system based on multi-value classification of fully connected neural network for construction management. IAES Int. J. Artif. Intell. 2023, 12, 593.

- Scabini, L.F.; Bruno, O.M. Structure and performance of fully connected neural networks: Emerging complex network properties. Phys. A Stat. Mech. Its Appl. 2023, 615, 128585.

- Yuan, B.; Wolfe, C.R.; Dun, C.; Tang, Y.; Kyrillidis, A.; Jermaine, C. Distributed learning of fully connected neural networks using independent subnet training. Proc. VLDB Endow. 2022, 15, 1581–1590.

- Xue, Y.; Wang, Y.; Liang, J. A self-adaptive gradient descent search algorithm for fully-connected neural networks. Neurocomputing 2022, 478, 70–80.

- Li, Z.; Zhang, Y.; Abu-Siada, A.; Chen, X.; Li, Z.; Xu, Y.; Zhang, L.; Tong, Y. Fault diagnosis of transformer windings based on decision tree and fully connected neural network. Energies 2021, 14, 1531.

- Sudharsan, B.; Salerno, S.; Nguyen, D.D.; Yahya, M.; Wahid, A.; Yadav, P.; Breslin, J.G.; Ali, M.I. TinyML Benchmark: Executing Fully Connected Neural Networks on Commodity Microcontrollers. In Proceedings of the 2021 IEEE 7th World Forum on Internet of Things (WF-IoT), New Orleans, LA, USA, 14 June–31 July 2021; pp. 883–884.

- Basha, S.S.; Dubey, S.R.; Pulabaigari, V.; Mukherjee, S. Impact of fully connected layers on performance of convolutional neural networks for image classification. Neurocomputing 2020, 378, 112–119.

- DeMedeiros, K.; Hendawi, A.; Alvarez, M. A survey of AI-based anomaly detection in IoT and sensor networks. Sensors 2023, 23, 1352.

- Reddy, D.K.; Behera, H.S.; Nayak, J.; Vijayakumar, P.; Naik, B.; Singh, P.K. Deep neural network based anomaly detection in Internet of Things network traffic tracking for the applications of future smart cities. Trans. Emerg. Telecommun. Technol. 2021, 32, e4121.

- Hasan, M.; Islam, M.M.; Zarif, M.I.I.; Hashem, M. Attack and anomaly detection in IoT sensors in IoT sites using machine learning approaches. Internet Things 2019, 7, 100059.

- Jia, Y.; Cheng, Y.; Shi, J. Semi-Supervised Variational Temporal Convolutional Network for IoT Communication Multi-Anomaly Detection. In Proceedings of the 2022 3rd International Conference on Control, Robotics and Intelligent System, Xi’an, China, 23–25 September 2022; pp. 67–73.

- Bock, M.; Hoelzemann, A.; Moeller, M.; Van Laerhoven, K. Investigating (re) current state-of-the-art in human activity recognition datasets. Front. Comput. Sci. 2022, 4, 119.

- Bhatta, A.; Albiero, V.; Bowyer, K.W.; King, M.C. The gender gap in face recognition accuracy is a hairy problem. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–7 January 2023; pp. 303–312.

- Vaishali, A.; Likitha, G.; Srujana, K.; Sunitha, B. Amazon Rekognition. Math. Stat. Eng. Appl. 2020, 69, 449–453.

- Leonor Estévez Dorantes, T.; Bertani Hernández, D.; León Reyes, A.; Elena Miranda Medina, C. Development of a powerful facial recognition system through an API using ESP32-Cam and Amazon Rekognition service as tools offered by Industry 5.0. In Proceedings of the 5th International Conference on Machine Vision and Applications (ICMVA), Singapore, 18–20 February 2022; pp. 76–81.

- Indla, R.K. An Overview on Amazon Rekognition Technology. Master of Science in Information Systems and Technology, California State University San Bernardino 2021. Available online: https://scholarworks.lib.csusb.edu/cgi/viewcontent.cgi?article=2396&context=etd (accessed on 27 November 2023).

- Pimenov, D.Y.; Bustillo, A.; Wojciechowski, S.; Sharma, V.S.; Gupta, M.K.; Kuntoğlu, M. Artificial intelligence systems for tool condition monitoring in machining: Analysis and critical review. J. Intell. Manuf. 2023, 34, 2079–2121.

- Sabato, A.; Dabetwar, S.; Kulkarni, N.N.; Fortino, G. Noncontact Sensing Techniques for AI-Aided Structural Health Monitoring: A Systematic Review. IEEE Sens. J. 2023, 23, 4672–4684.

- Shaik, T.; Tao, X.; Higgins, N.; Li, L.; Gururajan, R.; Zhou, X.; Acharya, U.R. Remote patient monitoring using artificial intelligence: Current state, applications, and challenges. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2023, 13, e1485.

- Han, J.; Jeong, D.; Lee, S. Analysis of the HIKVISION DVR file system. In Proceedings of the Digital Forensics and Cyber Crime: 7th International Conference, ICDF2C 2015, Seoul, Republic of Korea, 6–8 October 2015; Revised Selected Papers 7. Springer: Berlin/Heidelberg, Germany, 2015; pp. 189–199.

- Dragonas, E.; Lambrinoudakis, C.; Kotsis, M. IoT forensics: Analysis of a HIKVISION’s mobile app. Forensic Sci. Int. Digit. Investig. 2023, 45, 301560.

- Hashmi, M.F.; Pal, R.; Saxena, R.; Keskar, A.G. A new approach for real time object detection and tracking on high resolution and multi-camera surveillance videos using GPU. J. Cent. South Univ. 2016, 23, 130–144.

- Cheng, S.; Zhu, Y.; Wu, S. Deep learning based efficient ship detection from drone-captured images for maritime surveillance. Ocean. Eng. 2023, 285, 115440.

- Yang, H.F.; Cai, J.; Liu, C.; Ke, R.; Wang, Y. Cooperative multi-camera vehicle tracking and traffic surveillance with edge artificial intelligence and representation learning. Transp. Res. Part C Emerg. Technol. 2023, 148, 103982.

- Ugli, D.B.R.; Kim, J.; Mohammed, A.F.; Lee, J. Cognitive Video Surveillance Management in Hierarchical Edge Computing System with Long Short-Term Memory Model. Sensors 2023, 23, 2869.

- Ullah, A.; Anwar, S.M.; Li, J.; Nadeem, L.; Mahmood, T.; Rehman, A.; Saba, T. Smart cities: The role of Internet of Things and machine learning in realizing a data-centric smart environment. Complex Intell. Syst. 2023, 1–31.

- Islam, M.R.; Kabir, M.M.; Mridha, M.F.; Alfarhood, S.; Safran, M.; Che, D. Deep Learning-Based IoT System for Remote Monitoring and Early Detection of Health Issues in Real-Time. Sensors 2023, 23, 5204.