Please note this is an old version of this entry, which may differ significantly from the current revision.

There has been a considerable surge in the deployment of assistive robots across various settings such as rehabilitation centers, offices, hotels, and airports. Ensuring safe and efficient navigation in crowded environments is a critical goal for assistive robots.

- crowd navigation

- deep reinforcement learning

- risk-aware

1. Introduction

Assistive robots have dramatically transformed human lives by offering a multitude of services, such as healthcare, cleaning, meal delivery, and transportation, thereby augmenting convenience and comfort [1][2][3][4]. In recent years, there has been a considerable surge in the deployment of assistive robots across various settings such as rehabilitation centers, offices, hotels, and airports [1][2][3][4]. The capability of robots to navigate and evade collisions is of paramount importance, especially in high-density environments. The implementation of effective navigation and collision avoidance strategies is crucial in ensuring agent safety, preventing encounters between robots and humans or other obstacles, and minimizing potential hazards and injuries [5].

Traditional methods struggle to guarantee collision avoidance in dense crowds. Crowded environments combine complex structure with constantly changing scenes, which further complicates navigation. To navigate effectively, a robot must therefore predict and understand human intentions accurately and adopt strategies that proactively evade potential collisions.

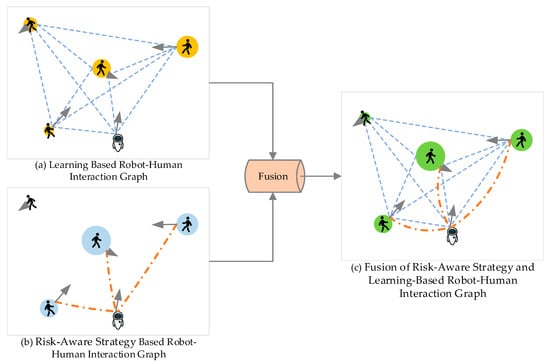

Current navigating and path planning in real-world applications rely on reactive algorithms such as the social force model (SFM) [6] and the dynamic window approach (DWA) [7]. While these methods perform well in simpler environments, they fall short in dynamic, complex environments where humans and robots coexist and struggle with uncertainties [8]. Recently, researchers have been investigating the amalgamation of planning and learning to improve the real-time decision-making and path planning capabilities of robots. For instance, using imitation learning [9] and reinforcement learning algorithms [10][11][12][13][14][15], robots can learn behavioral strategies through environmental interactions, enabling them to learn from data and adapt to environmental changes. Learning-based approaches have shown promising results in crowd navigation problems, but their effectiveness in obstacle avoidance diminishes as crowd density increases. To tackle this, some studies have suggested using graph-based approaches to represent interactions between agents. Chen et al. [16] introduced a graph attention mechanism, but it requires pre-defined network parameters based on human attention in advance. Liu et al. [17] proposed a spatial–temporal graph attention mechanism, which effectively addresses the complex interaction problem between agents by establishing connections and incorporating pedestrian trajectory prediction, as shown in Figure 1a. In [17], the interaction relationship between pedestrians and the robot is learned using a self-attention mechanism. However, this method requires the robot to have a complete connection with all pedestrians within its field of view, leading to non-influential pedestrians impacting the robot’s actions. In crowded scenes, excessive attention to unimportant individuals and neglecting pedestrians with collision risks can result in the freezing robot problem [18].

Figure 1. Illustration of the proposed robot–human interaction method. The proposed method integrates learning-based and risk-aware strategy-based robot–human interaction features. The gray arrow represents the velocity of the agent.

2. Robot Crowd Navigation Methods

The objective of crowd navigation is to successfully reach specific goals while avoiding collisions with other agents as well as static or dynamic obstacles. Existing crowd navigation methods can be broadly classified into three categories: reaction-based methods, learning-based methods, and graph-based methods.

Reaction-based robot navigation in dynamic environments often employs methods such as SFM [6], ORCA [19], and DWA [7]. The SFM method is based on the concept of social forces, which model the interactions between agents as forces. Kamezaki et al. [20] introduced a proactive social motion model that enables mobile service robots to navigate safely and socially in crowded and dynamic environments. The core idea of the ORCA model is to coordinate the velocities of agents to avoid collisions. The DWA method samples the robot’s feasible velocity range, predicts the trajectory that each sampled velocity would produce, checks each trajectory for collisions and scores it, and selects the best-scoring one. These reactive approaches have demonstrated excellent performance in industrial settings. However, as assistive robots are increasingly deployed in environments shared with humans, such as airports and supermarkets, they often encounter crowded scenes with many moving people, which purely reactive methods handle less well.
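The DWA loop described above (sample velocities, roll out trajectories, check collisions, score, select) can be sketched in a few lines. This is a minimal illustration rather than the implementation from [7]: the unicycle motion model, the circular obstacles, the acceleration limits, and the scoring weights are all assumptions chosen for readability.

```python
import math

def simulate(x, y, theta, v, w, horizon=2.0, dt=0.1):
    """Roll a unicycle model forward under constant (v, w); return visited points."""
    path = []
    for _ in range(int(round(horizon / dt))):
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
        theta += w * dt
        path.append((x, y))
    return path

def dwa_step(pose, vel, goal, obstacles, v_max=1.0, w_max=1.5,
             acc_v=1.0, acc_w=2.0, ctrl_dt=0.5, n=11, robot_radius=0.3):
    """Return the best admissible (v, w) in the dynamic window, or None."""
    x, y, theta = pose
    v0, w0 = vel
    # Dynamic window: velocities reachable within one control period.
    v_lo, v_hi = max(0.0, v0 - acc_v * ctrl_dt), min(v_max, v0 + acc_v * ctrl_dt)
    w_lo, w_hi = max(-w_max, w0 - acc_w * ctrl_dt), min(w_max, w0 + acc_w * ctrl_dt)
    best_cmd, best_score = None, -math.inf
    for i in range(n):
        v = v_lo + (v_hi - v_lo) * i / (n - 1)
        for j in range(n):
            w = w_lo + (w_hi - w_lo) * j / (n - 1)
            path = simulate(x, y, theta, v, w)
            # Smallest gap between the robot body and any circular obstacle.
            clearance = min(
                (math.hypot(px - ox, py - oy) - orad - robot_radius
                 for px, py in path for ox, oy, orad in obstacles),
                default=math.inf,
            )
            if clearance <= 0.0:
                continue  # collision detected: discard this trajectory
            ex, ey = path[-1]
            goal_dist = math.hypot(goal[0] - ex, goal[1] - ey)
            # Illustrative weights: progress to goal, clearance, forward speed.
            score = -goal_dist + 0.2 * min(clearance, 1.0) + 0.1 * v
            if score > best_score:
                best_cmd, best_score = (v, w), score
    return best_cmd
```

Obstacles are given as `(x, y, radius)` tuples. Because each obstacle is treated as static during the rollout, the sketch also shows why such planners are called reactive: a pedestrian's future motion plays no part in the score.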

Deep reinforcement learning (DRL) has garnered significant attention in the field of robot navigation due to its remarkable learning capability. Liu et al. [21] employed a deep reinforcement learning framework to address the collision avoidance problem in decentralized multi-agent systems. Their proposed method utilized a policy network that takes into account the relative positions and velocities of agents as inputs, effectively considering the motion features of other agents and generating collision-free velocity vectors. Chen et al. [22] suggested incorporating a pairwise interaction with a self-attention mechanism, jointly modeling human–robot and human–human interactions within the deep reinforcement learning framework. This model provides a more explicit representation of crowd–robot interaction, capturing human-to-human interactions in dense crowds and improving the robot’s navigation capability in crowded environments. However, the unpredictability of human behavior poses a challenge for real-time learning. Samsani et al. [23] introduced a model that considers all possible actions humans might take within a given time, incorporating real-time human behavior by modeling danger zones. The robot achieves safe navigation by training to avoid these danger zones. Wang et al. [24] proposed an efficient interactive reinforcement learning approach that integrates human preferences into the reward model, using them as teacher guidance to explore the underlying aspects of social interaction. The introduction of hybrid experience policy learning enhances sample efficiency and incorporates human feedback. Mun et al. [25] employed variational autoencoders to learn occlusion inference representations and applied deep reinforcement learning techniques to integrate these learned representations into occlusion-aware planning. This approach enhances obstacle avoidance performance by estimating agents in occluded spaces.
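Two ideas recur in the methods above: describing each human by position and velocity relative to the robot, and using attention weights to compress a crowd of any size into a fixed-length feature. The sketch below illustrates both under strong simplifications. The function names are hypothetical, and the linear score in `attention_pool` is only a stand-in for the learned networks that the cited works train; it is not the architecture of [22].

```python
import math

def relative_state(robot, human):
    """Human state relative to the robot: (dx, dy, dvx, dvy)."""
    rx, ry, rvx, rvy = robot
    hx, hy, hvx, hvy = human
    return (hx - rx, hy - ry, hvx - rvx, hvy - rvy)

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(robot, humans, weights):
    """Return attention weights and the attention-weighted crowd feature.

    `weights` is a hypothetical 4-vector that plays the role of a learned
    scoring network: score_i = weights . relative_state_i.
    """
    feats = [relative_state(robot, h) for h in humans]
    scores = [sum(w * f for w, f in zip(weights, feat)) for feat in feats]
    alphas = softmax(scores)
    pooled = tuple(
        sum(a * feat[k] for a, feat in zip(alphas, feats)) for k in range(4)
    )
    return alphas, pooled
```

Whatever the number of humans, `pooled` always has four entries, which is what allows a fixed-size policy network to consume crowds of varying size.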

Furthermore, typical indoor environments present additional challenges due to spatial limitations. Zheng et al. [26] proposed a hierarchical approach that integrates local and global information to ensure the safety and efficiency of exploration and planning. This planning method utilizes reinforcement learning-based obstacle avoidance algorithms, enabling the robot to navigate safely through crowds by following the path generated by the exploration planner. Liu et al. [14] devised a reward function that incorporates social norms to guide the training of the policy. The policy model takes laser scan sequences and the robot’s own state as inputs to differentiate between static and dynamic obstacles and outputs steering commands. The learned policy successfully guides the robot to the target location in a manner consistent with social norms.
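A reward that reflects social norms is often built from a goal term, a collision term, and a penalty for entering a person's comfort zone. The following sketch shows that general shape only. It is not the reward used in the cited works, and every threshold and constant in it is an assumed, illustrative value.

```python
def social_reward(dist_to_goal, min_clearance, goal_tol=0.3, comfort=0.5,
                  r_goal=1.0, r_collision=-0.25, discomfort_gain=0.5):
    """Per-step reward from goal distance and the smallest gap to any human.

    min_clearance is the surface-to-surface distance to the nearest human
    (non-positive means contact). All constants are illustrative assumptions.
    """
    if min_clearance <= 0.0:
        return r_collision              # collision ends the episode badly
    if dist_to_goal <= goal_tol:
        return r_goal                   # goal reached
    if min_clearance < comfort:
        # Linear penalty that grows as the robot intrudes into personal space.
        return -discomfort_gain * (comfort - min_clearance)
    return 0.0
```

For example, `social_reward(5.0, 0.25)` returns `-0.125`: the robot has not collided, but it is 0.25 m inside the assumed 0.5 m comfort distance.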

Previous research has demonstrated the effectiveness of DRL frameworks in training efficient navigation strategies. However, their performance declines as the crowd size increases. Recent advancements have highlighted the potential of graph neural networks to capture local interactions among surrounding objects. Liu et al. [27] proposed a relational graph learning approach that uses the latent features of all agents to infer their relationships. They encode higher-order interactions in the state representation of each agent using graph convolutional networks and use these interactions for state prediction and value estimation, which improves navigation efficiency and reduces collisions. Chen et al. [16] introduced a network that uses graph representations to learn policies. A graph convolutional network was first trained on human gaze data to predict human attention towards different agents during navigation tasks, and the learned attention was then integrated into a graph-based reinforcement learning framework. Liu et al. [28] introduced a decentralized structural recurrent neural network (DS-RNN) that considers the spatial and temporal relationships of pedestrian movements to construct spatiotemporal graphs and employs RNNs to generate the robot’s motion policy. Liu et al. [13] introduced a recurrent graph neural network with an attention mechanism that infers the intentions of dynamic agents by predicting their future trajectories across multiple time steps. These predictions are incorporated into a model-free reinforcement learning framework to prevent the robot from intruding into the intended paths of other agents. Zhang et al. [29] addressed autonomous navigation tasks in large-scale environments by incorporating relational graph learning to capture interactions among agents. They also introduced a globally guided reinforcement learning strategy to achieve fixed-size learning models in large-scale complex environments. A spatiotemporal graph transformer [30] has also been employed to model the interactions between humans and the robot for crowd navigation. Wang et al. [31] proposed a socially aware navigation method named NaviSTAR, which uses a hybrid spatiotemporal graph transformer (STAR) to understand crowd interactions and incorporate latent multimodal information. The policy is trained using off-policy reinforcement learning algorithms and preference learning, with the reward function network trained under supervision.
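The relational graph idea (infer pairwise relations from agent features, then propagate information along them) can be sketched as one generic message-passing step, H' = ReLU(A H W). This is a textbook-style graph convolution written in plain Python, not the architecture of any cited paper. The dot-product similarity, the row softmax, and the helper names are assumptions made for illustration.

```python
import math

def row_softmax(row):
    """Normalize one row of scores into weights that sum to 1."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def relation_matrix(features):
    """Soft adjacency A: row-normalized pairwise dot-product similarity."""
    return [
        row_softmax([sum(a * b for a, b in zip(fi, fj)) for fj in features])
        for fi in features
    ]

def matmul(a, b):
    """Plain nested-list matrix product."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]

def graph_layer(features, weight):
    """One message-passing step: H' = ReLU(A @ H @ W)."""
    adj = relation_matrix(features)
    mixed = matmul(matmul(adj, features), weight)
    return [[max(0.0, v) for v in row] for row in mixed]
```

Applying `graph_layer` twice lets each agent's feature depend on its neighbors' neighbors, which is one way to read the "higher-order interactions" mentioned above.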

Current research suggests that the integration of graph neural networks and reinforcement learning can effectively capture interactions among crowds. Anticipating humans’ intentions in advance can provide improved guidance for robot navigation. However, existing graph neural networks primarily rely on distances between humans to construct graph models, neglecting positional and velocity information among humans. This oversight can lead to potential collision risks and result in the freezing robot problem.
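A small geometric check shows why distance alone can mislead. The sketch below computes the distance at the closest point of approach from relative position and relative velocity, assuming both agents keep a constant velocity. It is one common proxy for collision risk and is not claimed to be the risk measure of the adapted paper; the function names, the 0.6 m safety distance, and the 5 s horizon are assumptions.

```python
import math

def closest_approach(rel_pos, rel_vel, horizon=5.0):
    """Time (clamped to [0, horizon]) and distance at closest approach."""
    px, py = rel_pos
    vx, vy = rel_vel
    speed_sq = vx * vx + vy * vy
    # Minimizer of |p + v t|; with zero relative speed the gap never changes.
    t = 0.0 if speed_sq == 0.0 else -(px * vx + py * vy) / speed_sq
    t = min(max(t, 0.0), horizon)
    return t, math.hypot(px + vx * t, py + vy * t)

def is_risky(robot, human, safe_dist=0.6, horizon=5.0):
    """True if the human is predicted to come within safe_dist of the robot.

    robot and human are (x, y, vx, vy) tuples; constant velocity is assumed.
    """
    rel_pos = (human[0] - robot[0], human[1] - robot[1])
    rel_vel = (human[2] - robot[2], human[3] - robot[3])
    _, dist = closest_approach(rel_pos, rel_vel, horizon)
    return dist < safe_dist
```

With the robot at the origin moving at 1 m/s along +x, a human 2 m ahead walking towards it is flagged as risky, while a human 2 m behind walking away is not, even though both are at the same distance.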

This entry is adapted from the peer-reviewed paper 10.3390/electronics12234744

References

- Brose, S.W.; Weber, D.J.; Salatin, B.A.; Grindle, G.G.; Wang, H.; Vazquez, J.J.; Cooper, R.A. The Role of Assistive Robotics in the Lives of Persons with Disability. Am. J. Phys. Med. Rehab. 2010, 89, 509–521.

- Matarić, M.J.; Scassellati, B. Socially Assistive Robotics. In Springer Handbook of Robotics; Springer: Berlin/Heidelberg, Germany, 2016; Volume 18, pp. 1973–1994.

- Matarić, M.J. Socially Assistive Robotics: Human Augmentation versus Automation. Sci. Rob. 2017, 2, eaam5410.

- Udupa, S.; Kamat, V.R.; Menassa, C.C. Shared Autonomy in Assistive Mobile Robots: A Review. Disabil. Rehabil. Assist. Technol. 2023, 18, 827–848.

- Xiao, X.; Liu, B.; Warnell, G.; Stone, P. Motion Planning and Control for Mobile Robot Navigation Using Machine Learning: A Survey. Auton. Robot. 2022, 46, 569–597.

- Helbing, D.; Molnár, P. Social Force Model for Pedestrian Dynamics. Phys. Rev. E 1995, 51, 4282–4286.

- Zhong, X.; Tian, J.; Hu, H.; Peng, X. Hybrid Path Planning Based on Safe A* Algorithm and Adaptive Window Approach for Mobile Robot in Large-Scale Dynamic Environment. J. Intell. Robot. Syst. 2020, 99, 65–77.

- Guillén-Ruiz, S.; Bandera, J.P.; Hidalgo-Paniagua, A.; Bandera, A. Evolution of Socially-Aware Robot Navigation. Electronics 2023, 12, 1570.

- Qin, L.; Huang, Z.; Zhang, C.; Guo, H.; Ang, M.; Rus, D. Deep Imitation Learning for Autonomous Navigation in Dynamic Pedestrian Environments. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 4108–4115.

- Everett, M.; Chen, Y.F.; How, J.P. Collision Avoidance in Pedestrian-Rich Environments With Deep Reinforcement Learning. IEEE Access 2021, 9, 10357–10377.

- Cui, Y.; Zhang, H.; Wang, Y.; Xiong, R. Learning World Transition Model for Socially Aware Robot Navigation. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 9262–9268.

- Jin, J.; Nguyen, N.M.; Sakib, N.; Graves, D.; Yao, H.; Jagersand, M. Mapless Navigation among Dynamics with Social-Safety-Awareness: A Reinforcement Learning Approach from 2D Laser Scans. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 6979–6985.

- Patel, U.; Kumar, N.K.S.; Sathyamoorthy, A.J.; Manocha, D. DWA-RL: Dynamically Feasible Deep Reinforcement Learning Policy for Robot Navigation among Mobile Obstacles. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 6057–6063.

- Perez-D’Arpino, C.; Liu, C.; Goebel, P.; Martin-Martin, R.; Savarese, S. Robot Navigation in Constrained Pedestrian Environments Using Reinforcement Learning. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1140–1146.

- Samsani, S.S.; Mutahira, H.; Muhammad, M.S. Memory-Based Crowd-Aware Robot Navigation Using Deep Reinforcement Learning. Complex Intell. Syst. 2023, 9, 2147–2158.

- Chen, Y.; Liu, C.; Shi, B.E.; Liu, M. Robot Navigation in Crowds by Graph Convolutional Networks with Attention Learned From Human Gaze. IEEE Robot. Autom. Lett. 2020, 5, 2754–2761.

- Liu, S.; Chang, P.; Huang, Z.; Chakraborty, N.; Hong, K.; Liang, W.; McPherson, D.L.; Geng, J.; Driggs-Campbell, K. Intention Aware Robot Crowd Navigation with Attention-Based Interaction Graph. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 12015–12021.

- Sathyamoorthy, A.J.; Patel, U.; Guan, T.; Manocha, D. Frozone: Freezing-Free, Pedestrian-Friendly Navigation in Human Crowds. IEEE Robot. Autom. Lett. 2020, 5, 4352–4359.

- Van Den Berg, J.; Guy, S.J.; Lin, M.; Manocha, D. Reciprocal N-Body Collision Avoidance. In Robotics Research; Pradalier, C., Siegwart, R., Hirzinger, G., Eds.; Springer Tracts in Advanced Robotics; Springer: Berlin/Heidelberg, Germany, 2011; Volume 70, pp. 3–19. ISBN 978-3-642-19456-6.

- Kamezaki, M.; Tsuburaya, Y.; Kanada, T.; Hirayama, M.; Sugano, S. Reactive, Proactive, and Inducible Proximal Crowd Robot Navigation Method Based on Inducible Social Force Model. IEEE Robot. Autom. Lett. 2022, 7, 3922–3929.

- Han, R.; Chen, S.; Wang, S.; Zhang, Z.; Gao, R.; Hao, Q.; Pan, J. Reinforcement Learned Distributed Multi-Robot Navigation With Reciprocal Velocity Obstacle Shaped Rewards. IEEE Robot. Autom. Lett. 2022, 7, 5896–5903.

- Chen, C.; Liu, Y.; Kreiss, S.; Alahi, A. Crowd-Robot Interaction: Crowd-Aware Robot Navigation With Attention-Based Deep Reinforcement Learning. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 6015–6022.

- Samsani, S.S.; Muhammad, M.S. Socially Compliant Robot Navigation in Crowded Environment by Human Behavior Resemblance Using Deep Reinforcement Learning. IEEE Robot. Autom. Lett. 2021, 6, 5223–5230.

- Wang, R.; Wang, W.; Min, B.-C. Feedback-Efficient Active Preference Learning for Socially Aware Robot Navigation. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 11336–11343.

- Mun, Y.-J.; Itkina, M.; Liu, S.; Driggs-Campbell, K. Occlusion-Aware Crowd Navigation Using People as Sensors. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 12031–12037.

- Zheng, Z.; Cao, C.; Pan, J. A Hierarchical Approach for Mobile Robot Exploration in Pedestrian Crowd. IEEE Robot. Autom. Lett. 2022, 7, 175–182.

- Liu, Z.; Zhai, Y.; Li, J.; Wang, G.; Miao, Y.; Wang, H. Graph Relational Reinforcement Learning for Mobile Robot Navigation in Large-Scale Crowded Environments. IEEE Trans. Intell. Transp. Syst. 2023, 24, 8776–8787.

- Liu, S.; Chang, P.; Liang, W.; Chakraborty, N.; Driggs-Campbell, K. Decentralized Structural-RNN for Robot Crowd Navigation with Deep Reinforcement Learning. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 3517–3524.

- Zhang, Y.; Feng, Z. Crowd-Aware Mobile Robot Navigation Based on Improved Decentralized Structured RNN via Deep Reinforcement Learning. Sensors 2023, 23, 1810.

- He, H.; Fu, H.; Wang, Q.; Zhou, S.; Liu, W. Spatio-Temporal Transformer-Based Reinforcement Learning for Robot Crowd Navigation. arXiv 2023, arXiv:2305.16612.

- Wang, W.; Wang, R.; Mao, L.; Min, B.-C. NaviSTAR: Socially Aware Robot Navigation with Hybrid Spatio-Temporal Graph Transformer and Preference Learning. arXiv 2023, arXiv:2304.05979.
