Federated Learning (FL) is poised to play an essential role in extending the Internet of Things (IoT) and Big Data ecosystems: it lets organisations harness the computational power of private devices while the data stay on those devices, thereby safeguarding user privacy. Despite these benefits, FL is vulnerable to several types of attack, including label-flipping and covert attacks. A label-flipping attack targets the central model by manipulating its decisions for a specific class, which can lead to biased or incorrect results.

- federated learning

- privacy preserving

- data poisoning

- Big Data systems

1. Introduction

2. Data Security Encryption in Federated Learning: Relevant Literature and Approaches

3. Privacy Threats in Federated Learning

4. Privacy and Security in Federated Learning Systems: State-of-the-Art Defensive Mechanisms

| Mechanism | Description | Reference |
|---|---|---|
| FedCC | Employs a robust aggregation algorithm that mitigates both targeted and untargeted model poisoning or backdoor attacks, even in non-IID data scenarios. | [51] |
| Krum | Acts as a server-side defence via an aggregation mechanism, but may be susceptible to Covert Model Poisoning (CMP). | [53] |
| Trimmed Mean | Server-side aggregation that, like Krum, aims to resist model poisoning attacks, yet is also potentially prone to CMP. | [53] |
| MPC-Based Aggregation | Mitigates model inversion attacks by employing a trained autoencoder-based anomaly-detection model during aggregation. | [50] |
| FL-WBC | A client-based strategy that minimises the impact of attacks on the Global Model by perturbing the parameter space during local training. | [54] |
| Romoa | Utilises a logarithm-based normalisation to manage scaled gradients from malicious vehicles, resisting model poisoning attacks. | [55] |
| RFA | Utilises a weighted average of gradients to resist Byzantine attacks, aiming to establish a robust aggregation method. | [56] |
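To make the idea of server-side robust aggregation concrete, the following is a minimal sketch of a coordinate-wise trimmed mean. It is a generic illustration, not the implementation from any of the cited works; the function name `trimmed_mean`, the `trim` parameter, and the toy updates are assumptions chosen for this example.

```python
from typing import List, Sequence


def trimmed_mean(updates: Sequence[Sequence[float]], trim: int) -> List[float]:
    """Coordinate-wise trimmed mean of client updates.

    For every coordinate, the `trim` smallest and `trim` largest values
    are discarded and the remaining values are averaged.
    """
    n = len(updates)
    if n == 0:
        raise ValueError("no updates to aggregate")
    if trim < 0 or 2 * trim >= n:
        raise ValueError("trim must satisfy 0 <= 2 * trim < number of clients")
    dim = len(updates[0])
    if any(len(u) != dim for u in updates):
        raise ValueError("all updates must have the same length")

    aggregated = []
    for j in range(dim):
        column = sorted(u[j] for u in updates)
        kept = column[trim:n - trim]  # drop the extremes on both sides
        aggregated.append(sum(kept) / len(kept))
    return aggregated


# Hypothetical toy round: four honest clients and one scaled (malicious) update.
client_updates = [
    [0.10, -0.20],
    [0.12, -0.18],
    [0.09, -0.22],
    [0.11, -0.21],
    [9.00, 7.00],  # outlier submitted by the attacker
]

robust = trimmed_mean(client_updates, trim=1)
plain = [sum(col) / len(col) for col in zip(*client_updates)]
print("trimmed mean:", robust)
print("plain mean:  ", plain)
```

In this toy round the plain mean is dragged towards the outlier, whereas the trimmed mean stays close to the honest updates. The sketch only handles crude, large-magnitude outliers; as the table notes, covert attacks that keep malicious updates within the honest range may still evade this kind of aggregation.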

4.1. Limitations of Current Defensive Mechanisms in Federated Learning

Computational and Communication Overhead

Knowledge Preservation and Incremental Learning

Security and Privacy Concerns

4.2. Problem Statement

- Massive and rapid IoT data: IoT environments generate immense volumes of data ($D_i$) at high velocity, which makes effective and efficient data management imperative to prevent systemic bottlenecks and to sustain operational performance.

- Preserving privacy with FL: Keeping the data ($D_i$ and $D_t$) localised and uncompromised during Global Model training in FL demands robust methods that prevent leakage or unintended disclosure of sensitive information through model updates ($\mathbf{W}$).

- Label-flipping attacks: These attacks, in which the labels of data instances are maliciously altered ($\tilde{D}_i$ and $\tilde{D}_s$), pose a pronounced threat to model integrity and efficacy in FL. Designing and implementing defensive mechanisms that can detect, mitigate, or recover from such attacks is therefore of paramount importance. The defence is described by a high-level function $\mathcal{H}$ that seeks to minimise the loss function $L$ given the perturbed data and the weight matrix, ensuring that the learned model $\mathbf{W}$ is resilient against the attack.

- Ensuring scalability: The developed FL model must not only counter privacy and security threats, but also scale efficiently to manage and process expansive and diverse IoT Big Data.

- Technological integration and novelty: FATE offers a promising federated learning framework and Apache Spark offers fast in-memory data processing. Integrating these technologies in a way that cohesively addresses IoT Big Data management challenges within an FL paradigm is crucial:

$$\mathcal{H}(D_i, D_t; \mathbf{W}, \Omega) \rightarrow \min_{\mathbf{W}, \Omega} L(D_i, D_t, \mathbf{W}, \Omega)$$

where $\mathcal{H}$ is aimed at minimising the loss function $L$ with respect to the data, the weight matrix $\mathbf{W}$, and the model relationship matrix $\Omega$, ensuring harmonised functionality between the integrated technologies and enabling scalable and efficient data processing and model training across federated nodes.
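The label-flipping threat in the list above can be illustrated with a short sketch that turns a clean local dataset into a poisoned one by relabelling part of one class as another. The helper names `flip_labels` and `class_counts`, the `fraction` parameter, and the toy dataset are assumptions made for this illustration; they are not taken from the source paper.

```python
import random
from typing import Dict, List, Tuple

Sample = Tuple[List[float], int]  # (features, label)


def flip_labels(data: List[Sample], source: int, target: int,
                fraction: float, seed: int = 0) -> List[Sample]:
    """Return a copy of `data` in which a fraction of the samples labelled
    `source` are relabelled as `target`. Features are left untouched."""
    rng = random.Random(seed)
    source_idx = [i for i, (_, y) in enumerate(data) if y == source]
    n_flip = int(round(fraction * len(source_idx)))
    chosen = set(rng.sample(source_idx, n_flip))
    return [(x, target if i in chosen else y) for i, (x, y) in enumerate(data)]


def class_counts(data: List[Sample]) -> Dict[int, int]:
    """Count how many samples carry each label."""
    counts: Dict[int, int] = {}
    for _, y in data:
        counts[y] = counts.get(y, 0) + 1
    return counts


# Hypothetical local dataset: 12 samples, 4 per class (labels 0, 1, 2).
clean = [([float(i)], i % 3) for i in range(12)]

# A malicious client relabels half of its class-1 samples as class 2.
poisoned = flip_labels(clean, source=1, target=2, fraction=0.5)

print("clean:   ", class_counts(clean))
print("poisoned:", class_counts(poisoned))
```

Because only the labels change, the poisoned data look identical to the clean data at the feature level. This is one reason such attacks are hard to spot from the server side, where only model updates are visible.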

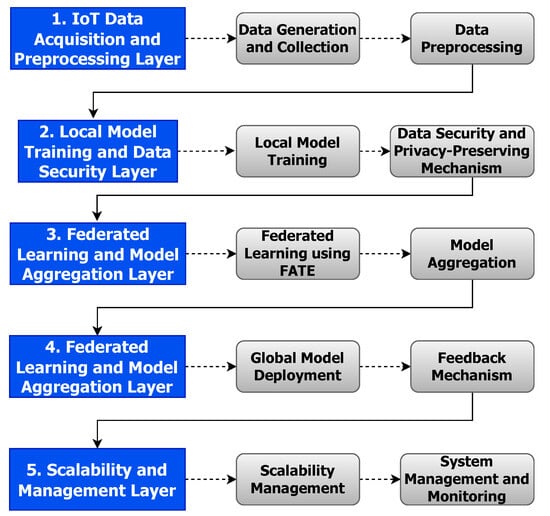

5. Proposed Architecture

- IoT data harvesting and initial processing layer:

    - Dynamic data acquisition: implements a strategy to dynamically harvest, categorise, and preliminarily process extensive IoT data, using Apache Spark's in-memory computation to handle both streaming and batch data.

- In situ model training and data privacy layer:

    - Intrinsic local model training: employs FATE to facilitate localised model training, reinforcing data privacy by ensuring data are processed in situ.

    - Data and model security mechanism: integrates cryptographic and obfuscation techniques to safeguard the data and the model during communication, thus fortifying privacy and integrity.

- Federated learning and secure model consolidation layer:

    - Privacy-aware federated learning: engages FATE to promote decentralised learning, which encourages local model training and securely amalgamates model updates without requiring direct data exchange.

    - Model aggregation and resilience: establishes a secure aggregation node that amalgamates model updates and validates them against potential adversarial actions and possible model poisoning.

- Global Model enhancement and feedback integration layer:

    - Deploy, enhance, and evaluate: applies the Global Model to enhance local models, instigating a comprehensive evaluation and feedback mechanism that informs subsequent training cycles.

- Adaptive scalability and dynamic management layer:

    - Dynamic scalability management: uses Apache Spark to ensure adaptive scalability, accommodating the continuous data and computational demands intrinsic to vast IoT setups.

    - Proactive system management: implements AI-driven predictive management and maintenance mechanisms that aim to anticipate system needs and iteratively optimise for both performance and reliability.
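The interplay between in situ training and the validating aggregation node in the layers above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the architecture's implementation: it uses neither the FATE nor the Spark APIs, the model is a two-parameter linear regression, and the validation rule (rejecting updates that lie far from the coordinate-wise median) as well as all function names and the `tolerance` value are hypothetical choices for this example.

```python
from statistics import median
from typing import List, Tuple

Weights = List[float]               # [intercept, slope]
Dataset = List[Tuple[float, float]]  # (x, y) pairs held locally by a client


def local_train(weights: Weights, data: Dataset,
                lr: float = 0.5, epochs: int = 5) -> Weights:
    """Gradient descent on mean squared error for y ~ w0 + w1 * x.
    Only the resulting weights leave the client, never the data."""
    w0, w1 = weights
    n = len(data)
    for _ in range(epochs):
        g0 = 2.0 / n * sum(w0 + w1 * x - y for x, y in data)
        g1 = 2.0 / n * sum((w0 + w1 * x - y) * x for x, y in data)
        w0, w1 = w0 - lr * g0, w1 - lr * g1
    return [w0, w1]


def validate(updates: List[Weights], tolerance: float) -> List[int]:
    """Indices of updates within `tolerance` (L2 distance) of the
    coordinate-wise median of all updates."""
    centre = [median(col) for col in zip(*updates)]
    kept = []
    for i, u in enumerate(updates):
        dist = sum((a - b) ** 2 for a, b in zip(u, centre)) ** 0.5
        if dist <= tolerance:
            kept.append(i)
    return kept


def fed_avg(updates: List[Weights], sizes: List[int]) -> Weights:
    """Average of the updates, weighted by local dataset size."""
    total = sum(sizes)
    return [sum(u[j] * n for u, n in zip(updates, sizes)) / total
            for j in range(len(updates[0]))]


def run_round(global_w: Weights, clients: List[Dataset],
              tolerance: float = 1.0) -> Tuple[Weights, List[int]]:
    updates = [local_train(global_w, d) for d in clients]
    kept = validate(updates, tolerance)
    new_w = fed_avg([updates[i] for i in kept], [len(clients[i]) for i in kept])
    return new_w, kept


# Hypothetical setup: three honest clients observe y = 2x + 1;
# a fourth client trains on corrupted targets y = -(2x + 1).
honest_xs = [[0.0, 0.5, 1.0], [0.2, 0.4, 0.8], [0.1, 0.6, 0.9]]
clients = [[(x, 2 * x + 1) for x in xs] for xs in honest_xs]
clients.append([(x, -(2 * x + 1)) for x in [0.0, 0.5, 1.0]])

global_w = [0.0, 0.0]
for _ in range(30):
    global_w, kept = run_round(global_w, clients)

print("global weights:", global_w)
print("clients kept in the last round:", kept)
```

In this toy setting the corrupted client's update lies far from the honest cluster, so it is filtered out and the Global Model approaches the honest solution. A distance-to-median rule of this kind is only a stand-in for the validation step; stealthier poisoning would call for the stronger mechanisms surveyed in Section 4.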

This entry is adapted from the peer-reviewed paper 10.3390/electronics12224633

References

- Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated machine learning: Concept and applications. ACM Trans. Intell. Syst. Technol. (TIST) 2019, 10, 1–19.

- Alam, T.; Gupta, R. Federated Learning and Its Role in the Privacy Preservation of IoT Devices. Future Internet 2022, 14, 246.

- Dhiman, G.; Juneja, S.; Mohafez, H.; El-Bayoumy, I.; Sharma, L.K.; Hadizadeh, M.; Islam, M.A.; Viriyasitavat, W.; Khandaker, M.U. Federated Learning Approach to Protect Healthcare Data over Big Data Scenario. Sustainability 2022, 14, 2500.

- Aono, Y.; Hayashi, T.; Wang, L.; Moriai, S. Privacy-preserving deep learning via additively homomorphic encryption. IEEE Trans. Inf. Forensics Secur. 2017, 13, 1333–1345.

- Chen, Y.R.; Rezapour, A.; Tzeng, W.G. Privacy-preserving ridge regression on distributed data. Inf. Sci. 2018, 451, 34–49.

- Fang, H.; Qian, Q. Privacy Preserving Machine Learning with Homomorphic Encryption and Federated Learning. Future Internet 2021, 13, 94.

- Park, J.; Lim, H. Privacy-Preserving Federated Learning Using Homomorphic Encryption. Appl. Sci. 2022, 12, 734.

- Angulo, E.; Márquez, J.; Villanueva-Polanco, R. Training of Classification Models via Federated Learning and Homomorphic Encryption. Sensors 2023, 23, 1966.

- Shen, X.; Jiang, H.; Chen, Y.; Wang, B.; Gao, L. PLDP-FL: Federated Learning with Personalized Local Differential Privacy. Entropy 2023, 25, 485.

- Wang, X.; Wang, J.; Ma, X.; Wen, C. A Differential Privacy Strategy Based on Local Features of Non-Gaussian Noise in Federated Learning. Sensors 2022, 22, 2424.

- Zhao, J.; Yang, M.; Zhang, R.; Song, W.; Zheng, J.; Feng, J.; Matwin, S. Privacy-Enhanced Federated Learning: A Restrictively Self-Sampled and Data-Perturbed Local Differential Privacy Method. Electronics 2022, 11, 4007.

- McMahan, H.B.; Ramage, D.; Talwar, K.; Zhang, L. Learning differentially private recurrent language models. arXiv 2017, arXiv:1710.06963.

- So, J.; Güler, B.; Avestimehr, A.S. Turbo-Aggregate: Breaking the Quadratic Aggregation Barrier in Secure Federated Learning. IEEE J. Sel. Areas Inf. Theory 2021, 2, 479–489.

- Xu, G.; Li, H.; Liu, S.; Yang, K.; Lin, X. VerifyNet: Secure and Verifiable Federated Learning. IEEE Trans. Inf. Forensics Secur. 2020, 15, 911–926.

- Kim, H.; Park, J.; Bennis, M.; Kim, S.L. Blockchained On-Device Federated Learning. IEEE Commun. Lett. 2020, 24, 1279–1283.

- Mahmood, Z.; Jusas, V. Blockchain-Enabled: Multi-Layered Security Federated Learning Platform for Preserving Data Privacy. Electronics 2022, 11, 1624.

- Liu, H.; Zhou, H.; Chen, H.; Yan, Y.; Huang, J.; Xiong, A.; Yang, S.; Chen, J.; Guo, S. A Federated Learning Multi-Task Scheduling Mechanism Based on Trusted Computing Sandbox. Sensors 2023, 23, 2093.

- Mortaheb, M.; Vahapoglu, C.; Ulukus, S. Personalized Federated Multi-Task Learning over Wireless Fading Channels. Algorithms 2022, 15, 421.

- Du, W.; Atallah, M.J. Privacy-preserving cooperative statistical analysis. In Proceedings of the Seventeenth Annual Computer Security Applications Conference, New Orleans, LA, USA, 10–14 December 2001; pp. 102–110.

- Du, W.; Han, Y.S.; Chen, S. Privacy-preserving multivariate statistical analysis: Linear regression and classification. In Proceedings of the 2004 SIAM International Conference on Data Mining, Lake Buena Vista, FL, USA, 22–24 April 2004; pp. 222–233.

- Khan, A.; ten Thij, M.; Wilbik, A. Communication-Efficient Vertical Federated Learning. Algorithms 2022, 15, 273.

- Hardy, S.; Henecka, W.; Ivey-Law, H.; Nock, R.; Patrini, G.; Smith, G.; Thorne, B. Private federated learning on vertically partitioned data via entity resolution and additively homomorphic encryption. arXiv 2017, arXiv:1711.10677.

- Schoenmakers, B.; Tuyls, P. Efficient binary conversion for Paillier encrypted values. In Proceedings of the Advances in Cryptology-EUROCRYPT 2006: 24th Annual International Conference on the Theory and Applications of Cryptographic Techniques, St. Petersburg, Russia, 28 May–1 June 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 522–537.

- Zhong, Z.; Zhou, Y.; Wu, D.; Chen, X.; Chen, M.; Li, C.; Sheng, Q.Z. P-FedAvg: Parallelizing Federated Learning with Theoretical Guarantees. In Proceedings of the IEEE INFOCOM 2021—IEEE Conference on Computer Communications, Vancouver, BC, Canada, 10–13 May 2021; pp. 1–10.

- Barreno, M.; Nelson, B.; Sears, R.; Joseph, A.D.; Tygar, J.D. Can machine learning be secure? In Proceedings of the 2006 ACM Symposium on Information, Computer and Communications Security, Taipei, Taiwan, 21–24 March 2006; pp. 16–25.

- Huang, L.; Joseph, A.D.; Nelson, B.; Rubinstein, B.I.; Tygar, J.D. Adversarial machine learning. In Proceedings of the 4th ACM Workshop on Security and Artificial Intelligence, Chicago, IL, USA, 21 October 2011; pp. 43–58.

- Chu, W.L.; Lin, C.J.; Chang, K.N. Detection and Classification of Advanced Persistent Threats and Attacks Using the Support Vector Machine. Appl. Sci. 2019, 9, 4579.

- Chen, Y.; Hayawi, K.; Zhao, Q.; Mou, J.; Yang, L.; Tang, J.; Li, Q.; Wen, H. Vector Auto-Regression-Based False Data Injection Attack Detection Method in Edge Computing Environment. Sensors 2022, 22, 6789.

- Jiang, Y.; Zhou, Y.; Wu, D.; Li, C.; Wang, Y. On the Detection of Shilling Attacks in Federated Collaborative Filtering. In Proceedings of the 2020 International Symposium on Reliable Distributed Systems (SRDS), Shanghai, China, 21–24 September 2020; pp. 185–194.

- Alfeld, S.; Zhu, X.; Barford, P. Data poisoning attacks against autoregressive models. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.

- Biggio, B.; Nelson, B.; Laskov, P. Poisoning attacks against support vector machines. arXiv 2012, arXiv:1206.6389.

- Zügner, D.; Akbarnejad, A.; Günnemann, S. Adversarial attacks on neural networks for graph data. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining; 2018; pp. 2847–2856. Available online: https://arxiv.org/abs/1805.07984 (accessed on 18 October 2023).

- Zhao, M.; An, B.; Yu, Y.; Liu, S.; Pan, S. Data poisoning attacks on multi-task relationship learning. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- Dhiman, S.; Nayak, S.; Mahato, G.K.; Ram, A.; Chakraborty, S.K. Homomorphic Encryption based Federated Learning for Financial Data Security. In Proceedings of the 2023 4th International Conference on Computing and Communication Systems (I3CS), Shillong, India, 16–18 March 2023; pp. 1–6.

- Jia, B.; Zhang, X.; Liu, J.; Zhang, Y.; Huang, K.; Liang, Y. Blockchain-Enabled Federated Learning Data Protection Aggregation Scheme With Differential Privacy and Homomorphic Encryption in IIoT. IEEE Trans. Ind. Inform. 2022, 18, 4049–4058.

- Zhang, S.; Li, Z.; Chen, Q.; Zheng, W.; Leng, J.; Guo, M. Dubhe: Towards data unbiasedness with homomorphic encryption in federated learning client selection. In Proceedings of the 50th International Conference on Parallel Processing, Lemont, IL, USA, 9–12 August 2021; pp. 1–10.

- Guo, X. Federated Learning for Data Security and Privacy Protection. In Proceedings of the 2021 12th International Symposium on Parallel Architectures, Algorithms and Programming (PAAP), Xi’an, China, 10–12 December 2021; pp. 194–197.

- Fan, C.I.; Hsu, Y.W.; Shie, C.H.; Tseng, Y.F. ID-Based Multireceiver Homomorphic Proxy Re-Encryption in Federated Learning. ACM Trans. Sens. Netw. 2022, 18, 1–25.

- Kou, L.; Wu, J.; Zhang, F.; Ji, P.; Ke, W.; Wan, J.; Liu, H.; Li, Y.; Yuan, Q. Image encryption for Offshore wind power based on 2D-LCLM and Zhou Yi Eight Trigrams. Int. J. Bio-Inspired Comput. 2023, 22, 53–64.

- Li, L.; Li, T. Traceability model based on improved witness mechanism. CAAI Trans. Intell. Technol. 2022, 7, 331–339.

- Lee, J.; Duong, P.N.; Lee, H. Configurable Encryption and Decryption Architectures for CKKS-Based Homomorphic Encryption. Sensors 2023, 23, 7389.

- Ge, L.; Li, H.; Wang, X.; Wang, Z. A review of secure federated learning: Privacy leakage threats, protection technologies, challenges and future directions. Neurocomputing 2023, 561, 126897.

- Zhang, J.; Zhu, H.; Wang, F.; Zhao, J.; Xu, Q.; Li, H. Security and privacy threats to federated learning: Issues, methods, and challenges. Secur. Commun. Netw. 2022, 2022, 2886795.

- Zhang, J.; Li, M.; Zeng, S.; Xie, B.; Zhao, D. A survey on security and privacy threats to federated learning. In Proceedings of the 2021 International Conference on Networking and Network Applications (NaNA), Lijiang City, China, 29 October–1 November 2021; pp. 319–326.

- Li, Y.; Bao, Y.; Xiang, L.; Liu, J.; Chen, C.; Wang, L.; Wang, X. Privacy threats analysis to secure federated learning. arXiv 2021, arXiv:2106.13076.

- Asad, M.; Moustafa, A.; Yu, C. A critical evaluation of privacy and security threats in federated learning. Sensors 2020, 20, 7182.

- Manzoor, S.I.; Jain, S.; Singh, Y.; Singh, H. Federated Learning Based Privacy Ensured Sensor Communication in IoT Networks: A Taxonomy, Threats and Attacks. IEEE Access 2023, 11, 42248–42275.

- Benmalek, M.; Benrekia, M.A.; Challal, Y. Security of federated learning: Attacks, defensive mechanisms, and challenges. Rev. Sci. Technol. L’Inform. Série RIA Rev. D’Intell. Artif. 2022, 36, 49–59.

- Arbaoui, M.; Rahmoun, A. Towards secure and reliable aggregation for Federated Learning protocols in healthcare applications. In Proceedings of the 2022 Ninth International Conference on Software Defined Systems (SDS), Paris, France, 12–15 December 2022; pp. 1–3.

- Abdelli, K.; Cho, J.Y.; Pachnicke, S. Secure Collaborative Learning for Predictive Maintenance in Optical Networks. In Proceedings of the Secure IT Systems: 26th Nordic Conference, NordSec 2021, Virtual Event, 29–30 November 2021; Proceedings 26. Springer International Publishing: Berlin/Heidelberg, Germany, 2021; pp. 114–130.

- Jeong, H.; Son, H.; Lee, S.; Hyun, J.; Chung, T.M. FedCC: Robust Federated Learning against Model Poisoning Attacks. arXiv 2022, arXiv:2212.01976.

- Lyu, X.; Han, Y.; Wang, W.; Liu, J.; Wang, B.; Liu, J.; Zhang, X. Poisoning with cerberus: Stealthy and colluded backdoor attack against federated learning. In Proceedings of the Thirty-Seventh AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023.

- Wei, K.; Li, J.; Ding, M.; Ma, C.; Jeon, Y.S.; Poor, H.V. Covert model poisoning against federated learning: Algorithm design and optimization. IEEE Trans. Dependable Secur. Comput. 2023.

- Sun, J.; Li, A.; DiValentin, L.; Hassanzadeh, A.; Chen, Y.; Li, H. Fl-wbc: Enhancing robustness against model poisoning attacks in federated learning from a client perspective. Adv. Neural Inf. Process. Syst. 2021, 34, 12613–12624.

- Mao, Y.; Yuan, X.; Zhao, X.; Zhong, S. Romoa: Robust Model Aggregation for the Resistance of Federated Learning to Model Poisoning Attacks. In Proceedings of the Computer Security–ESORICS 2021: 26th European Symposium on Research in Computer Security, Darmstadt, Germany, 4–8 October 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 476–496.

- Pillutla, K.; Kakade, S.M.; Harchaoui, Z. Robust Aggregation for Federated Learning. IEEE Trans. Signal Process. 2022, 70, 1142–1154.

- Wang, X.; Liang, Z.; Koe, A.S.V.; Wu, Q.; Zhang, X.; Li, H.; Yang, Q. Secure and efficient parameters aggregation protocol for federated incremental learning and its applications. Int. J. Intell. Syst. 2022, 37, 4471–4487.

- Hao, M.; Li, H.; Xu, G.; Chen, H.; Zhang, T. Efficient, private and robust federated learning. In Proceedings of the Annual Computer Security Applications Conference, Virtual Event, 6–10 December 2021; pp. 45–60.