Your browser does not fully support modern features. Please upgrade for a smoother experience.

Please note this is an old version of this entry, which may differ significantly from the current revision.

Vehicle re-identification research under surveillance cameras has yielded impressive results. However, the challenge of unmanned aerial vehicle (UAV)-based vehicle re-identification (ReID) presents a high degree of flexibility, mainly due to complicated shooting angles, occlusions, low discrimination of top–down features, and significant changes in vehicle scales.

- UAV re-identification

- Vehicle re-identification (ReID)

- UAV

1. Introduction

Vehicle re-identification (ReID) [1,2,3,4] holds great importance in the realm of intelligent transportation systems (ITSs) in the context of smart cities. Vehicle ReID can be regarded as an image retrieval problem. Given a vehicle image, the similarity between each image and the image to be retrieved in the test set is calculated to determine whether the image to be retrieved is in the test set. Traditionally, license plate images have been employed for vehicle identification. However, obtaining clear license plate information can be challenging due to various external factors like obstructed license plates, obstacles, and image blurriness.

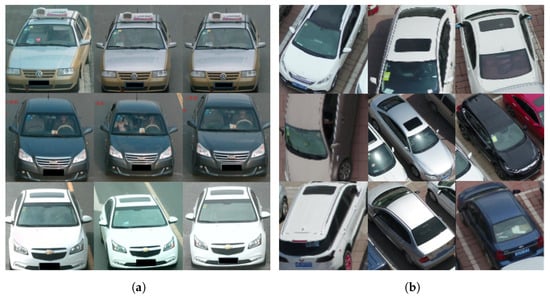

Thanks to the success of deep learning, the vehicle identification algorithm in the field of surveillance cameras again achieved impressive results [5,6,7,8,9]. According to the idea of solving the vehicle ReID problem, the methods of vehicle ReID can be divided into a global feature-based method, local feature-based method, attention mechanism-based method, vehicle perspective-based method, and generative adversarial network-based method. Typically, these methods [6,9,10,11,12] employ a deep metric learning model that relies on feature extraction networks. The objective is to train the model to distinguish between vehicles with the same ID and those with different IDs to accomplish vehicle ReID. However, as shown in Figure 1, there are discernible disparities between vehicle images captured by UAV and those acquired through stationary cameras. The ReID challenge regarding UAV imagery introduces unique complexities stemming from intricate shooting angles, occlusions, limited discriminative power of top–down features, and substantial variations in vehicle scales.

Figure 1. Comparison of two types of vehicle images. There is a significant difference between the vehicle from the UAV perspective and the vehicle from the fixed camera. The vehicle under the fixed camera shooting angle is relatively fixed. In the view of UAVs, the shooting angles of cars are changeable, and there are many top–down shooting angles. (a) Surveillance cameras; (b) UAV cameras.

It is worth mentioning that traditional vehicle ReID methods, primarily designed for stationary cameras, face challenges in delivering optimal performance when adapted to the domain of UAV-based ReID. Firstly, the shooting angle of UAVs is complex. UAVs can shoot at different positions and angles, and the camera’s viewpoint will change accordingly. This viewpoint change may cause the same object or scene to have different appearances and characteristics in different images. Second, the UAV can overlook or squint at a target or scene at different angles, resulting in viewpoint changes in the image. This viewpoint change may cause deformation or occlusion of the target shape, thus causing difficulties for feature extraction. To solve the above problems, it is necessary to add a mechanism [1,13,14,15] that can extract more detailed features when ReID extracts features to deal with the challenges brought by the drone perspective. The change in the UAV viewpoint makes the feature extraction algorithm need a certain robustness, which can correctly identify and describe the target in the case of significant changes in the viewpoint. The difference in the UAV view angle makes the feature extraction algorithm need to have the ability to adapt to shape changes and occlusions to improve the feature reliability and robustness in different views.

In recent years, the attention mechanism has gained significant popularity across multiple domains of deep convolutional neural networks. Its fundamental concept revolves around identifying the most crucial information for a given target task from a vast volume of available data. The attention mechanism selectively focuses on the image’s different regions or feature channels to improve the model’s attention and perception ability for crucial visual content. In the context of UAV-based vehicle ReID, the attention mechanism enables the model to enhance its perception capabilities by selectively highlighting the vehicle’s specific regions or feature channels.

However, most attention mechanisms [15,16,17,18] focus on extracting features only from channels or spaces. The channel attention mechanism can effectively enhance essential channels, but it cannot deal with the problem of slight inter-class similarity. Spatial attention mechanisms can selectively amplify or suppress features in specific regions spatially, but they ignore the relationship between channels. To overcome the shortcomings of a single attention mechanism, recent studies have begun to combine channel and spatial attention [19,20,21]. Such a hybrid attention mechanism can consider the relationship between channel and space at the same time to better capture the critical information in the input feature tensor. By introducing multiple branches of the attention mechanism or fusing different attention weights, the interaction between features can be modeled more comprehensively. Shuffle attention (SA) [19] divides molecular channels to extract key channel features and local spatial fusion features, with each subchannel acquiring channel and spatial fusion attention. The bottleneck attention module (BAM) [20] is a technique that generates an attention map through two distinct pathways: channel and spatial. On the other hand, the dual attention network (DANet) [21] incorporates two different types of attention modules on dilated fully convolutional networks (FCNs). These attention modules effectively capture semantic dependencies in both spatial and channel dimensions.

2. Vehicle Re-Identification

The ReID problem [1,22] is first explored and applied to humans. Compared with pedestrian ReID, vehicle ReID is more challenging. Firstly, vehicles tend to have high similarity in appearance, especially in the case of the same brand, model, or color. A higher similarity makes vehicle re-identification more challenging because relatively few features may distinguish different vehicles, and there is little difference between features. Second, vehicle re-identification may face more significant pose variation than human re-identification. Vehicles may appear at different angles, positions, and rotations, resulting in changes in the geometry and appearance characteristics of the vehicle, which increases the difficulty of matching and alignment. Traditionally, vehicle Re-ID problems have been solved by combining sensor data with other clues [23,24,25,26,27,28], such as vehicle travel time [23] and wireless magnetic sensors [24]. Although the sensor technology can obtain better detection results, it cannot meet the needs of practical applications because of its high detection cost. In theory, based on the vehicle license plate number, feature recognition technology is the most reliable and most accurate again [25,26]. However, the camera’s multi-angle, illumination, and resolution significantly influence license plate identification accuracy. Additionally, criminals block, decorate, forge, or remove license plates, making re-identifying vehicles only by license plate information less reliable. Accordingly, researchers have considered vehicle attributes and appearance characteristics, such as shape, color, and texture [27,28].

With the development of neural networks, deep learning-based approaches have outshone others [5,29]. Significant changes in camera angles can lead to substantial differences in local critical areas for vehicle re-identification, which leads to low precision. The hybrid pyramidal graph network (HPGN) [30] proposes a novel pyramid graph network, targeting features closely connected behind the backbone network to explore multi-scale spatial structural features. Zheng et al. [5] proposed the deep feature representations jointly guided by the meaningful attributes, including camera views, vehicle types and colors (DF-CVTC), a unified depth convolution framework for the joint learning of depth feature representations guided by meaningful attributes, including camera view, vehicle type, and color of vehicle re-identification. Huang et al. [29] raised multi-granularity deep feature fusion with multiple granularity (DFFMG) methods or vehicle re-identification, which uses global and local feature fusion to segment vehicle images along two directions (i.e., vertical and horizontal), and integrates discriminant information of different granularity. Graph interactive transformer (GiT) [31] proposes a structure where charts and transformers constantly interact, enabling close collaboration between global and local features for vehicle re-identification. The efficient multiresolution network (EMRN) [32] proposes a multiresolution feature dimension uniform module to fix dimensional features from images of varying resolutions.

Although the current vehicle ReID method plays a specific role in the fixed camera perspective, the vehicle space photographed from the UAV perspective changes significantly, and extracting features from the top–down vertical angle is difficult. Moreover, the shooting angle of UAVs is complex. UAVs can overlook or squint at a target or scene at different angles, resulting in viewpoint changes in the image. The current method needs to be revised to solve the above problems well, and further research is required.

This entry is adapted from the peer-reviewed paper 10.3390/app132111651

This entry is offline, you can click here to edit this entry!