Artificial intelligence (AI) offers exceptional capabilities, including pattern recognition, predictive analytics, and decision-making, that enable the development of systems able to analyze complex medical data at a scale and precision beyond human capacity. AI has significantly impacted thyroid cancer diagnosis, offering advanced tools and methods that promise to substantially improve patient outcomes.

- artificial intelligence

- thyroid cancer diagnosis

1. AI in Thyroid Cancer Diagnosis

The adoption of AI in healthcare has become a pivotal development, profoundly reshaping medical diagnosis, treatment, and patient care. AI's exceptional capabilities, including pattern recognition, predictive analytics, and decision-making, enable systems that can analyze complex medical data at a scale and precision beyond human capacity [1]. This, in turn, supports early disease detection, facilitates accurate diagnoses, and aids personalized treatment planning. Moreover, AI-driven predictive models can forecast disease outbreaks, enhance the efficiency of hospital operations, and significantly improve patient outcomes [2]. Additionally, AI has the potential to democratize healthcare by bridging the gap between rural and urban health services and making high-quality care more accessible. The importance of AI in healthcare is therefore considerable and is likely to grow as technology advances, leading to more sophisticated applications and better health outcomes for patients worldwide [3][4]. However, these benefits depend on user acceptance, and trust acts as a mediator that shapes how AI-specific factors influence that acceptance. Calisto et al. [5] investigated how security, risk, and trust affect the adoption of AI-powered assistance; empirical tests of their proposed research framework showed that trust plays a pivotal role in determining user acceptance.

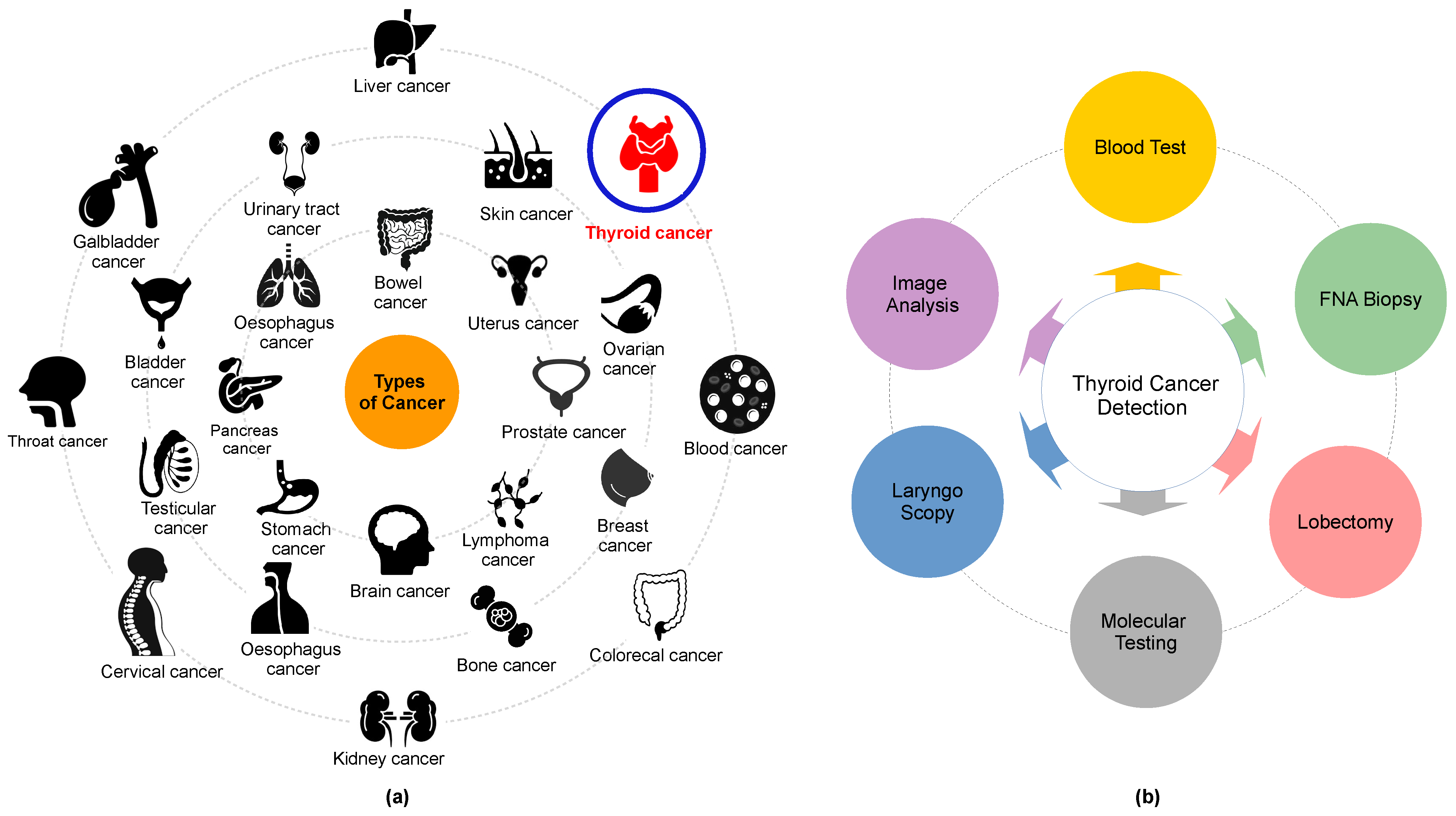

Cancer, a leading cause of death, affects various parts of the body, as depicted in Figure 1a. Among its many types, thyroid carcinoma is one of the most common endocrine cancers worldwide [6][7]. There is growing concern over the rising incidence of thyroid cancer and its associated mortality. Research indicates that thyroid cancer incidence is higher in women aged 15–49 years, in whom it ranks fifth globally, than in men aged 50–69 years [8][9][10].

Figure 1. (a) Some of the common types of cancer and (b) thyroid cancer detection methods.

According to global epidemiological data, the rapid growth of abnormal thyroid nodules is driven by accelerated cellular activity, in which the normal functioning and proliferation of cells are heightened. Thyroid carcinoma can be categorized into four primary subtypes: papillary thyroid carcinoma (PTC) [11], follicular thyroid carcinoma (FTC) [12], anaplastic thyroid carcinoma (ATC) [13], and medullary thyroid carcinoma (MTC) [14]. Factors such as high radiation exposure, Hashimoto's thyroiditis, and psychological and genetic predispositions can contribute to the onset of these cancers, while advances in detection technology contribute to the growing number of diagnosed cases. These conditions may subsequently lead to chronic health issues, including diabetes, irregular heart rhythms, and blood pressure fluctuations [15][16][17]. Although the number of cancer cells is a significant indicator of both invasiveness and poor prognosis in thyroid carcinoma, obtaining this measure is often time-consuming because cell appearance must be examined. The detection and quantification of cell nuclei are therefore considered alternative biomarkers for assessing cancer cell proliferation.

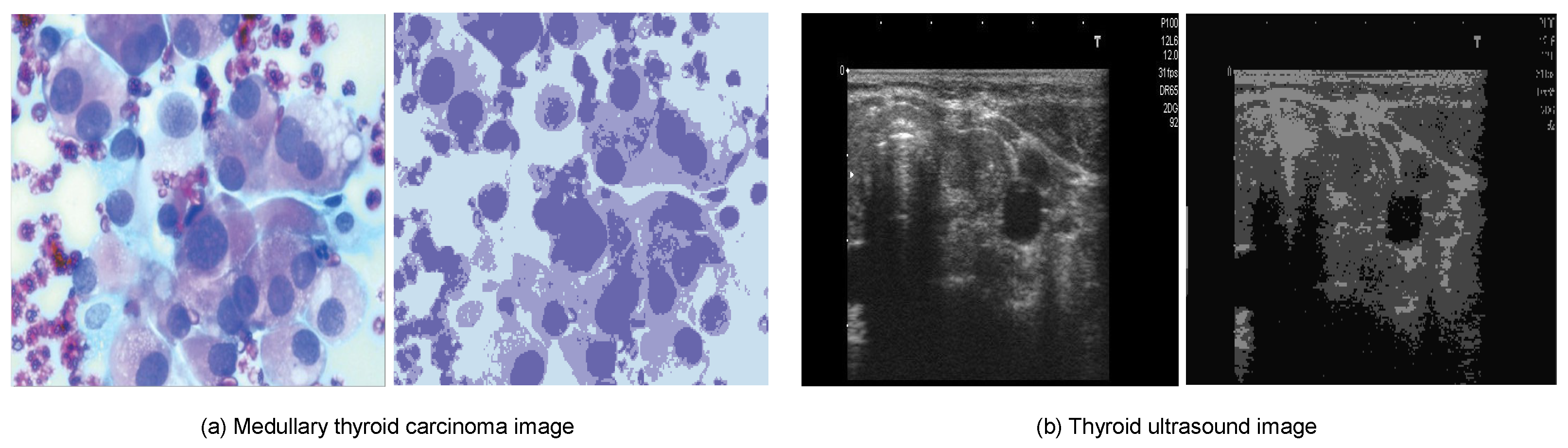

The use of computer-aided diagnosis (CAD) systems for analyzing thyroid cancer images has grown considerably in recent years. Known for improving diagnostic accuracy and reducing interpretation time, these systems have become invaluable tools in the field. Among these technologies, radiomics combined with ultrasonography (US) imaging has become widely accepted in clinical practice as a cost-effective, safe, simple, and practical diagnostic method. Endocrinologists frequently perform US scans in the 7–15 MHz range to identify thyroid cancer and evaluate its anatomical characteristics. The American College of Radiology has formulated a Thyroid Imaging, Reporting, and Data System (TI-RADS) that classifies thyroid nodules into six categories based on attributes such as composition, echogenicity, shape, size, margins, and echogenic foci. These categories range from normal (TI-RADS 1) to malignant (TI-RADS 6) [18][19][20]. Several freely accessible online tools are available for assessing these thyroid nodule features [21][22]. However, identifying and differentiating nodules remains challenging and relies largely on radiologists' personal experience and cognitive abilities. This is due to the subjective nature of visual image interpretation, the poor quality of captured images, and the similarities among US images of benign thyroid nodules, malignant thyroid nodules, and lymph nodes.
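The feature-based categorization can be made concrete with the point system described in the ACR white paper [18]: each feature contributes points, and the total maps to a risk level, from TR1 (benign) to TR5 (highly suspicious) in the ACR version; other TI-RADS variants use different category counts and labels. The minimal Python sketch below assumes the published ACR point values. The dictionary keys and function names are hypothetical, nodule size (which ACR TI-RADS uses for follow-up and FNA thresholds rather than for the level) is not scored, and it is an illustration, not a validated clinical calculator.

```python
# Points per feature value, following the ACR TI-RADS chart; the keys are
# hypothetical identifiers chosen for this sketch.
COMPOSITION = {"cystic": 0, "spongiform": 0, "mixed_cystic_solid": 1, "solid": 2}
ECHOGENICITY = {"anechoic": 0, "hyperechoic": 1, "isoechoic": 1,
                "hypoechoic": 2, "very_hypoechoic": 3}
SHAPE = {"wider_than_tall": 0, "taller_than_wide": 3}
MARGIN = {"smooth": 0, "ill_defined": 0, "lobulated": 2, "irregular": 2,
          "extrathyroidal_extension": 3}
# Echogenic foci are additive: each distinct type present adds its points.
ECHOGENIC_FOCI = {"none": 0, "large_comet_tail": 0, "macrocalcification": 1,
                  "peripheral_rim": 2, "punctate": 3}


def tirads_points(composition, echogenicity, shape, margin, foci):
    """Total points for one nodule; `foci` is an iterable of echogenic-foci types."""
    return (COMPOSITION[composition] + ECHOGENICITY[echogenicity] + SHAPE[shape]
            + MARGIN[margin] + sum(ECHOGENIC_FOCI[f] for f in set(foci)))


def tirads_level(points):
    """Map a point total to an ACR risk level."""
    if points <= 1:          # 0 points is TR1; grouping 1 point here is an assumption
        return "TR1"
    if points == 2:
        return "TR2"
    if points == 3:
        return "TR3"
    if points <= 6:
        return "TR4"
    return "TR5"             # 7 or more points


# Solid (2), hypoechoic (2), wider-than-tall (0), smooth (0), punctate foci (3)
pts = tirads_points("solid", "hypoechoic", "wider_than_tall", "smooth", ["punctate"])
print(pts, tirads_level(pts))   # 7 TR5
```

Keeping the point tables as plain dictionaries separates the published values from the scoring logic, so a different TI-RADS variant could be tried by swapping the tables and thresholds.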

Moreover, ultrasonography is often a time-intensive and stressful procedure, which can result in inaccurate diagnoses; misclassifications among normal, benign, malignant, and indeterminate cases are common [23][24][25][26][27][28]. A fine-needle aspiration biopsy (FNAB) is typically performed for a more precise diagnosis. However, FNAB can be uncomfortable for patients, a specialist's limited experience can lead to benign nodules being misclassified as malignant, and the procedure adds a financial burden [29][30] (see Figure 1b). Selecting discriminative features is the primary challenge in distinguishing benign from malignant nodules. Numerous studies have explored feature characterization in medical imaging for various diseases, including retinal disease [31][32], breast cancer [33][34][35][36][37], blood disorders [38][39], and thyroid cancer [40][41]. However, these methods remain insufficiently accurate for the reliable classification of thyroid nodules.

Incorporating AI technology plays a pivotal role in reducing subjectivity and improving the accuracy of pathological diagnoses for various intractable diseases, including those affecting the thyroid gland [42][43]. These gains come from improved interpretation of ultrasonography images and faster processing. Machine learning (ML) and deep learning (DL) have emerged as potential solutions for automating the classification of thyroid nodules in applications such as US, fine-needle aspiration (FNA), and thyroid surgery [44][45], a potential underscored in numerous studies [43][46][47][48][49][50]. Ongoing studies continue to examine this technology for cancer detection, where its effectiveness depends on the volume of available data and the precision of the classification process.

2. Example of Thyroid Cancer Detection Using AI

To illustrate how AI can be used to detect thyroid disease (TD), a simple example of TD classification is presented here. Pattern recognition is the process of training a neural network to assign the correct target classes to a set of input patterns; once trained, the network can classify new patterns. The same workflow could be applied to classifying thyroid nodules as benign, malignant, or normal from TI-RADS-based features, but the dataset used in this example labels thyroid function instead. The dataset (7200 samples) was selected from the UCI Machine Learning Repository [51] and can be used to create a neural network that classifies patients referred to a clinic as normal, hyperfunctioning, or subnormal functioning. The thyroid inputs (TI) and thyroid targets (TT) are defined as follows:

- TI: a 21 × 7200 matrix describing 7200 patients by 15 binary and 6 continuous attributes.

- TT: a 3 × 7200 matrix of 7200 class vectors indicating which of three classes each input is assigned to. Each class is represented by a one in row 1, 2, or 3: (1) normal, not hyperthyroid; (2) hyperfunctioning; (3) subnormal functioning.
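The layout of TI and TT, with one column per patient and one row per attribute or class, can be sketched in Python with NumPy. The helper names below are hypothetical; the sketch only shows how class numbers 1, 2, and 3 become the one-hot columns of a 3 × N target matrix such as TT, and how they are recovered.

```python
import numpy as np


def one_hot_targets(labels, n_classes=3):
    """Build an (n_classes x n_samples) target matrix in the layout of TT.

    `labels` holds class numbers 1..n_classes (here 1 = normal, 2 = hyperfunctioning,
    3 = subnormal functioning); column j gets a 1 in row labels[j] - 1 (0-based).
    """
    labels = np.asarray(labels)
    targets = np.zeros((n_classes, labels.size))
    targets[labels - 1, np.arange(labels.size)] = 1.0
    return targets


def labels_from_targets(targets):
    """Recover class numbers 1..n_classes from the one-hot columns."""
    return targets.argmax(axis=0) + 1


TT = one_hot_targets([1, 3, 2, 1])   # four hypothetical patients
print(TT.shape)                      # (3, 4)
print(TT[:, 1])                      # [0. 0. 1.]
print(labels_from_targets(TT))       # [1 3 2 1]
```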

The data were divided into 5040, 1080, and 1080 samples for training, validation, and testing, respectively (a 70/15/15 split). The network was trained to minimize the error between its outputs and the thyroid targets until it reached the performance goal; if the error on the validation set stopped decreasing, training was halted early so that the network would not overfit the training data. The test set, which was not used during training, was then used to estimate how well the network had learned. The model had 21 inputs, 10 neurons in its hidden layer, and 3 outputs. After training, the percent error was 5.337%, 7.407%, and 5.092% for training, validation, and testing, respectively. In total, the network correctly recognized 94.4% of the samples, corresponding to an overall error rate of 5.6%. The confusion matrix and ROC curves are illustrated in Figure 2.
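A minimal NumPy sketch of the kind of network described above: 21 inputs, 10 hidden units, and 3 softmax outputs, trained on a random 70/15/15 split and stopped early when the validation loss stops improving. Several details are assumptions not given in the source: the tanh hidden activation, the cross-entropy loss, plain full-batch gradient descent with a fixed learning rate (swapped in for whatever training algorithm was actually used), a patience of 10 epochs, and synthetic data in place of the UCI thyroid dataset. The percentages it prints therefore say nothing about the results reported above.

```python
import numpy as np

rng = np.random.default_rng(0)


def split_indices(n, train=0.70, val=0.15):
    """Randomly split n sample indices into training, validation, and test sets."""
    idx = rng.permutation(n)
    n_train, n_val = round(train * n), round(val * n)
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


def softmax(z):
    e = np.exp(z - z.max(axis=0, keepdims=True))  # subtract max for numerical stability
    return e / e.sum(axis=0, keepdims=True)


def forward(params, X):
    W1, b1, W2, b2 = params
    H = np.tanh(W1 @ X + b1)         # hidden layer activations, one column per sample
    return H, softmax(W2 @ H + b2)   # class probabilities, one column per sample


def split_error(params, X, T):
    """Percent of samples whose most probable class differs from the target class."""
    _, Y = forward(params, X)
    return 100.0 * np.mean(Y.argmax(axis=0) != T.argmax(axis=0))


def train(X, T, n_hidden=10, lr=0.5, max_epochs=1000, patience=10):
    """Full-batch gradient descent on cross-entropy with validation-based early stopping."""
    tr, va, te = split_indices(X.shape[1])
    Xtr, Ttr = X[:, tr], T[:, tr]
    params = [rng.normal(0, 0.1, (n_hidden, X.shape[0])), np.zeros((n_hidden, 1)),
              rng.normal(0, 0.1, (T.shape[0], n_hidden)), np.zeros((T.shape[0], 1))]
    best, best_val, bad_epochs = [p.copy() for p in params], np.inf, 0
    for _ in range(max_epochs):
        H, Y = forward(params, Xtr)
        dZ2 = (Y - Ttr) / Xtr.shape[1]              # d(mean cross-entropy)/d(output logits)
        dZ1 = (params[2].T @ dZ2) * (1 - H ** 2)    # back-propagate through tanh
        grads = [dZ1 @ Xtr.T, dZ1.sum(axis=1, keepdims=True),
                 dZ2 @ H.T, dZ2.sum(axis=1, keepdims=True)]
        params = [p - lr * g for p, g in zip(params, grads)]
        _, Yva = forward(params, X[:, va])
        val_loss = -np.mean(np.sum(T[:, va] * np.log(Yva + 1e-12), axis=0))
        if val_loss < best_val:                     # keep the best weights seen so far
            best, best_val, bad_epochs = [p.copy() for p in params], val_loss, 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:              # validation loss stopped improving
                break
    return best, {name: split_error(best, X[:, s], T[:, s])
                  for name, s in (("train", tr), ("validation", va), ("test", te))}


# Synthetic stand-in for TI (21 x 7200) and TT (3 x 7200); NOT the UCI thyroid data.
labels = rng.integers(0, 3, 7200)
X = rng.normal(0.0, 1.0, (21, 7200))
X[labels, np.arange(7200)] += 3.0   # class k shifts feature k, so the toy task is learnable
T = np.eye(3)[:, labels]            # one-hot targets, one column per sample
params, errors = train(X, T)
print(errors)                       # percent error per split
```

Returning the weights with the lowest validation loss, rather than the last ones, is what makes the validation set act as the stopping criterion; the test split is touched only once, after training.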

Figure 2. An example of the confusion matrix and ROC curves for thyroid disease classification.
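The reported percentages can be related to a confusion matrix, and the overall figure follows from pooling the three splits by sample count: (5.337 × 5040 + 7.407 × 1080 + 5.092 × 1080) / 7200 ≈ 5.61%, i.e., about 94.4% correctly classified. The Python sketch below, with hypothetical helper names, counts true versus predicted classes for column-per-sample target and output matrices such as TT and reproduces that pooled error.

```python
import numpy as np


def confusion_matrix(targets, outputs):
    """Count samples per (true class, predicted class) pair.

    `targets` and `outputs` are (n_classes x n_samples), one column per sample;
    the class is taken as the row with the largest value in each column.
    Rows of the result index the true class, columns the predicted class
    (some tools use the transposed orientation).
    """
    true, pred = targets.argmax(axis=0), outputs.argmax(axis=0)
    n = targets.shape[0]
    cm = np.zeros((n, n), dtype=int)
    np.add.at(cm, (true, pred), 1)   # increment one cell per sample
    return cm


def percent_error(cm):
    """Percentage of samples off the diagonal, i.e., misclassified."""
    return 100.0 * (1.0 - np.trace(cm) / cm.sum())


def pooled_error(split_errors, split_sizes):
    """Overall percent error as the sample-weighted mean of per-split errors."""
    return float(np.dot(split_errors, split_sizes) / np.sum(split_sizes))


# Figures reported in the text: training, validation, and test errors and sizes.
overall = pooled_error([5.337, 7.407, 5.092], [5040, 1080, 1080])
print(round(overall, 2), round(100 - overall, 2))   # 5.61 94.39
```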

Figure 3 illustrates thyroid segmentation in an ultrasound image using K-means, one of the most commonly used clustering techniques; three clusters were chosen for this example.

Figure 3. Example of thyroid segmentation based on the K-means method.
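A minimal NumPy sketch of intensity-based K-means segmentation with three clusters, in the spirit of Figure 3. It assumes a 2-D grayscale image stored as an array and clusters grey levels only, with no denoising or spatial and texture features; the function name, the quantile-based initialization, and the toy banded image are illustrative choices, not details from the source.

```python
import numpy as np


def kmeans_segment(image, k=3, n_iter=100):
    """Segment a grayscale image by clustering pixel intensities with K-means.

    Returns a label image (0 = darkest cluster, k - 1 = brightest) and the
    sorted cluster centers. Only grey levels are clustered; no spatial terms.
    """
    pixels = image.reshape(-1).astype(float)
    # Initialize centers at evenly spaced intensity quantiles (a deterministic choice).
    centers = np.quantile(pixels, (np.arange(k) + 0.5) / k)
    for _ in range(n_iter):
        # Assignment step: each pixel joins the nearest center.
        labels = np.abs(pixels[:, None] - centers[None, :]).argmin(axis=1)
        # Update step: each center moves to the mean of its pixels (unchanged if empty).
        new_centers = np.array([pixels[labels == j].mean() if np.any(labels == j)
                                else centers[j] for j in range(k)])
        converged = np.allclose(new_centers, centers)
        centers = new_centers
        if converged:
            break
    # Final assignment, then relabel clusters in order of increasing intensity.
    labels = np.abs(pixels[:, None] - centers[None, :]).argmin(axis=1)
    rank = np.empty(k, dtype=int)
    rank[np.argsort(centers)] = np.arange(k)
    return rank[labels].reshape(image.shape), np.sort(centers)


# Toy "image" with three intensity bands plus noise (not real ultrasound data).
rng = np.random.default_rng(1)
image = np.repeat([0.0, 100.0, 200.0], 10)[None, :].repeat(30, axis=0)
image += rng.normal(0.0, 5.0, image.shape)
segmentation, centers = kmeans_segment(image, k=3)
```

Relabeling by intensity makes the output stable across runs, so a given cluster index always refers to the same brightness range; which cluster corresponds to thyroid tissue still has to be decided per image.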

This entry is adapted from the peer-reviewed paper 10.3390/systems11100519

References

- Himeur, Y.; Al-Maadeed, S.; Varlamis, I.; Al-Maadeed, N.; Abualsaud, K.; Mohamed, A. Face mask detection in smart cities using deep and transfer learning: Lessons learned from the COVID-19 pandemic. Systems 2023, 11, 107.

- Himeur, Y.; Al-Maadeed, S.; Almaadeed, N.; Abualsaud, K.; Mohamed, A.; Khattab, T.; Elharrouss, O. Deep visual social distancing monitoring to combat COVID-19: A comprehensive survey. Sustain. Cities Soc. 2022, 85, 104064.

- Sohail, S.S.; Farhat, F.; Himeur, Y.; Nadeem, M.; Madsen, D.Ø.; Singh, Y.; Atalla, S.; Mansoor, W. Decoding ChatGPT: A Taxonomy of Existing Research, Current Challenges, and Possible Future Directions. J. King Saud Univ. Comput. Inf. Sci. 2023, 35, 101675.

- Himeur, Y.; Elnour, M.; Fadli, F.; Meskin, N.; Petri, I.; Rezgui, Y.; Bensaali, F.; Amira, A. AI-big data analytics for building automation and management systems: A survey, actual challenges and future perspectives. Artif. Intell. Rev. 2023, 56, 4929–5021.

- Calisto, F.M.; Nunes, N.; Nascimento, J.C. Modeling adoption of intelligent agents in medical imaging. Int. J. Hum. Comput. Stud. 2022, 168, 102922.

- Deng, Y.; Li, H.; Wang, M.; Li, N.; Tian, T.; Wu, Y.; Xu, P.; Yang, S.; Zhai, Z.; Zhou, L.; et al. Global burden of thyroid cancer from 1990 to 2017. JAMA Netw. Open 2020, 3, e208759.

- Hammouda, D.; Aoun, M.; Bouzerar, K.; Namaoui, M.; Rezzik, L.; Meguerba, O.; Belaidi, A.; Kherroubi, S. Registre des Tumeurs d’Alger; Ministere de la Sante et de la Population Institut National de Sante Publique: Paris, France, 2006.

- Abid, L. Le cancer de la thyroide en Algérie. In Guide de la Santé en Algérie: Actualité Pathologie; Santedz: Alger, Algeria, 2008.

- NIPH. Available online: http://www.insp.dz/index.php/Non-categorise/registre-des-tumeurs-d-alger.html (accessed on 17 January 2021).

- Siegel, R.L.; Miller, K.D.; Jemal, A. Cancer statistics, 2019. CA Cancer J. Clin. 2019, 69, 7–34.

- Hitu, L.; Gabora, K.; Bonci, E.A.; Piciu, A.; Hitu, A.C.; Ștefan, P.A.; Piciu, D. MicroRNA in Papillary Thyroid Carcinoma: A Systematic Review from 2018 to June 2020. Cancers 2020, 12, 3118.

- Castellana, M.; Piccardo, A.; Virili, C.; Scappaticcio, L.; Grani, G.; Durante, C.; Giovanella, L.; Trimboli, P. Can ultrasound systems for risk stratification of thyroid nodules identify follicular carcinoma? Cancer Cytopathol. 2020, 128, 250–259.

- Ferrari, S.M.; Elia, G.; Ragusa, F.; Ruffilli, I.; La Motta, C.; Paparo, S.R.; Patrizio, A.; Vita, R.; Benvenga, S.; Materazzi, G.; et al. Novel treatments for anaplastic thyroid carcinoma. Gland. Surg. 2020, 9, S28.

- Giovanella, L.; Treglia, G.; Iakovou, I.; Mihailovic, J.; Verburg, F.A.; Luster, M. EANM practice guideline for PET/CT imaging in medullary thyroid carcinoma. Eur. J. Nucl. Med. Mol. Imaging 2020, 47, 61–77.

- Carling, T.; Udelsman, R. Thyroid cancer. Annu. Rev. Med. 2014, 65, 125–137.

- Yang, R.; Zou, X.; Zeng, H.; Zhao, Y.; Ma, X. Comparison of Diagnostic Performance of Five Different Ultrasound TI-RADS Classification Guidelines for Thyroid Nodules. Front. Oncol. 2020, 10, 598225.

- Kobayashi, K.; Fujimoto, T.; Ota, H.; Hirokawa, M.; Yabuta, T.; Masuoka, H.; Fukushima, M.; Higashiyama, T.; Kihara, M.; Ito, Y.; et al. Calcifications in thyroid tumors on ultrasonography: Calcification types and relationship with histopathological type. Ultrasound Int. Open 2018, 4, E45.

- Tessler, F.N.; Middleton, W.D.; Grant, E.G.; Hoang, J.K.; Berland, L.L.; Teefey, S.A.; Cronan, J.J.; Beland, M.D.; Desser, T.S.; Frates, M.C.; et al. ACR thyroid imaging, reporting and data system (TI-RADS): White paper of the ACR TI-RADS committee. J. Am. Coll. Radiol. 2017, 14, 587–595.

- Tessler, F.N.; Middleton, W.D.; Grant, E.G. Thyroid imaging reporting and data system (TI-RADS): A user’s guide. Radiology 2018, 287, 29–36.

- Genomic Data Commons Data Portal. Available online: https://portal.gdc.cancer.gov/ (accessed on 10 January 2021).

- TI-RADS Calculator. Available online: http://tiradscalculator.com/ (accessed on 10 January 2021).

- AI TI-RADS Calculator. Available online: https://deckard.duhs.duke.edu/~ai-ti-rads/ (accessed on 10 January 2021).

- Schlumberger, M.; Tahara, M.; Wirth, L.J.; Robinson, B.; Brose, M.S.; Elisei, R.; Habra, M.A.; Newbold, K.; Shah, M.H.; Hoff, A.O.; et al. Lenvatinib versus placebo in radioiodine-refractory thyroid cancer. N. Engl. J. Med. 2015, 372, 621–630.

- Wettasinghe, M.C.; Rosairo, S.; Ratnatunga, N.; Wickramasinghe, N.D. Diagnostic accuracy of ultrasound characteristics in the identification of malignant thyroid nodules. BMC Res. Notes 2019, 12, 193.

- Nayak, R.; Nawar, N.; Webb, J.; Fatemi, M.; Alizad, A. Impact of imaging cross-section on visualization of thyroid microvessels using ultrasound: Pilot study. Sci. Rep. 2020, 10, 415.

- Singh Ospina, N.; Maraka, S.; Espinosa DeYcaza, A.; O’Keeffe, D.; Brito, J.P.; Gionfriddo, M.R.; Castro, M.R.; Morris, J.C.; Erwin, P.; Montori, V.M. Diagnostic accuracy of thyroid nodule growth to predict malignancy in thyroid nodules with benign cytology: Systematic review and meta-analysis. Clin. Endocrinol. 2016, 85, 122–131.

- Kumar, V.; Webb, J.; Gregory, A.; Meixner, D.D.; Knudsen, J.M.; Callstrom, M.; Fatemi, M.; Alizad, A. Automated segmentation of thyroid nodule, gland, and cystic components from ultrasound images using deep learning. IEEE Access 2020, 8, 63482–63496.

- Ma, J.; Wu, F.; Jiang, T.; Zhao, Q.; Kong, D. Ultrasound image-based thyroid nodule automatic segmentation using convolutional neural networks. Int. J. Comput. Assist. Radiol. Surg. 2017, 12, 1895–1910.

- Song, J.S.A.; Hart, R.D. Fine-needle aspiration biopsy of thyroid nodules: Determining when it is necessary. Can. Fam. Physician 2018, 64, 127.

- Hahn, S.Y.; Shin, J.H.; Oh, Y.L.; Park, K.W.; Lim, Y. Comparison between fine needle Aspiration and core needle Biopsy for the Diagnosis of thyroid Nodules: Effective Indications according to US Findings. Sci. Rep. 2020, 10, 4969.

- Ullah, H.; Saba, T.; Islam, N.; Abbas, N.; Rehman, A.; Mehmood, Z.; Anjum, A. An ensemble classification of exudates in color fundus images using an evolutionary algorithm based optimal features selection. Microsc. Res. Tech. 2019, 82, 361–372.

- Saba, T.; Bokhari, S.T.F.; Sharif, M.; Yasmin, M.; Raza, M. Fundus image classification methods for the detection of glaucoma: A review. Microsc. Res. Tech. 2018, 81, 1105–1121.

- Mughal, B.; Muhammad, N.; Sharif, M.; Rehman, A.; Saba, T. Removal of pectoral muscle based on topographic map and shape-shifting silhouette. BMC Cancer 2018, 18, 778.

- Morais, M.; Calisto, F.M.; Santiago, C.; Aleluia, C.; Nascimento, J.C. Classification of Breast Cancer in Mri with Multimodal Fusion. In Proceedings of the 2023 IEEE 20th International Symposium on Biomedical Imaging (ISBI), Cartagena de Indias, Colombia, 18–21 April 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–4.

- Diogo, P.; Morais, M.; Calisto, F.M.; Santiago, C.; Aleluia, C.; Nascimento, J.C. Weakly-Supervised Diagnosis and Detection of Breast Cancer Using Deep Multiple Instance Learning. In Proceedings of the 2023 IEEE 20th International Symposium on Biomedical Imaging (ISBI), Cartagena de Indias, Colombia, 18–21 April 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–4.

- Wang, X.; Zhang, J.; Yang, S.; Xiang, J.; Luo, F.; Wang, M.; Zhang, J.; Yang, W.; Huang, J.; Han, X. A generalizable and robust deep learning algorithm for mitosis detection in multicenter breast histopathological images. Med. Image Anal. 2023, 84, 102703.

- Mughal, B.; Sharif, M.; Muhammad, N.; Saba, T. A novel classification scheme to decline the mortality rate among women due to breast tumor. Microsc. Res. Tech. 2018, 81, 171–180.

- Abbas, N.; Saba, T.; Mehmood, Z.; Rehman, A.; Islam, N.; Ahmed, K.T. An automated nuclei segmentation of leukocytes from microscopic digital images. Pak. J. Pharm. Sci. 2019, 32, 2123–2138.

- Abbas, N.; Saba, T.; Rehman, A.; Mehmood, Z.; Javaid, N.; Tahir, M.; Khan, N.U.; Ahmed, K.T.; Shah, R. Plasmodium species aware based quantification of malaria parasitemia in light microscopy thin blood smear. Microsc. Res. Tech. 2019, 82, 1198–1214.

- Wang, Y.; Yue, W.; Li, X.; Liu, S.; Guo, L.; Xu, H.; Zhang, H.; Yang, G. Comparison study of radiomics and deep learning-based methods for thyroid nodules classification using ultrasound images. IEEE Access 2020, 8, 52010–52017.

- Qin, P.; Wu, K.; Hu, Y.; Zeng, J.; Chai, X. Diagnosis of benign and malignant thyroid nodules using combined conventional ultrasound and ultrasound elasticity imaging. IEEE J. Biomed. Health Inform. 2019, 24, 1028–1036.

- Wu, H.; Deng, Z.; Zhang, B.; Liu, Q.; Chen, J. Classifier model based on machine learning algorithms: Application to differential diagnosis of suspicious thyroid nodules via sonography. Am. J. Roentgenol. 2016, 207, 859–864.

- Zhang, B.; Tian, J.; Pei, S.; Chen, Y.; He, X.; Dong, Y.; Zhang, L.; Mo, X.; Huang, W.; Cong, S.; et al. Machine learning–assisted system for thyroid nodule diagnosis. Thyroid 2019, 29, 858–867.

- Sollini, M.; Cozzi, L.; Chiti, A.; Kirienko, M. Texture analysis and machine learning to characterize suspected thyroid nodules and differentiated thyroid cancer: Where do we stand? Eur. J. Radiol. 2018, 99, 1–8.

- Yang, C.Q.; Gardiner, L.; Wang, H.; Hueman, M.T.; Chen, D. Creating prognostic systems for well-differentiated thyroid cancer using machine learning. Front. Endocrinol. 2019, 10, 288.

- Abbad Ur Rehman, H.; Lin, C.Y.; Mushtaq, Z. Effective K-Nearest Neighbor Algorithms Performance Analysis of Thyroid Disease. J. Chin. Inst. Eng. 2021, 44, 77–87.

- Taylor, J.N.; Mochizuki, K.; Hashimoto, K.; Kumamoto, Y.; Harada, Y.; Fujita, K.; Komatsuzaki, T. High-resolution Raman microscopic detection of follicular thyroid cancer cells with unsupervised machine learning. J. Phys. Chem. B 2019, 123, 4358–4372.

- Chandio, J.A.; Mallah, G.A.; Shaikh, N.A. Decision Support System for Classification Medullary Thyroid Cancer. IEEE Access 2020, 8, 145216–145226.

- Lee, J.H.; Chai, Y.J. A deep-learning model to assist thyroid nodule diagnosis and management. Lancet Digit. Health 2021, 3, e410.

- Buda, M.; Wildman-Tobriner, B.; Hoang, J.K.; Thayer, D.; Tessler, F.N.; Middleton, W.D.; Mazurowski, M.A. Management of thyroid nodules seen on US images: Deep learning may match performance of radiologists. Radiology 2019, 292, 695–701.

- Murphy, P.M. UCI Repository of Machine Learning Databases. 1994. Available online: https://archive.ics.uci.edu/ (accessed on 1 March 2021).
