
Please note this is an old version of this entry, which may differ significantly from the current revision.

Skin cancer is considered a dangerous type of cancer with a high global mortality rate. Manual skin cancer diagnosis is challenging and time-consuming because of the complexity of the disease. Several new transfer learning and federated learning algorithms and techniques have been proposed for classifying melanoma and nonmelanoma skin cancer.

- transfer learning

- federated learning

- melanoma

- dermoscopy

1. Introduction

Skin cancer is the most common type of cancer. Clinical screening is performed first, followed by biopsy, histological tests, and dermoscopy to confirm the diagnosis [1]. Skin cancer develops when mutations in the DNA of skin cells disrupt their normal growth. Exposure to ultraviolet rays is considered the main cause of skin cancer; however, several other factors, such as a light skin tone, exposure to radiation and chemicals, severe skin injuries or burns, a weak immune system, old age, and smoking, also contribute to it [2]. According to data compiled by the WHO, cancer is a leading cause of death globally, and cancer cases are increasing rapidly, with one in six deaths attributable to the disease. In 2018, 18.1 million people were diagnosed with cancer globally, and approximately 9.6 million died from it. These figures are predicted to rise substantially by 2040, when approximately 29.4 million people are expected to be diagnosed with cancer [3]. The most frequently diagnosed cancers worldwide are stomach, lung, liver, skin, cervical, breast, and colorectal cancers [4]. Cancer is a severe and critical issue for all generations, regardless of social status or wealth. Early diagnosis and treatment can significantly reduce the number of cancer deaths [5], and researchers are therefore focusing on early diagnosis using artificial intelligence-based approaches [6]. Several classes of skin cancer are considered nonmelanoma cancers, including basal cell carcinoma (BCC), Merkel cell carcinoma (MCC), and squamous cell carcinoma (SCC). These nonmelanoma cancers are considered less aggressive and more treatable than melanoma [7].

Melanoma is the most malignant type of skin cancer because of its potential for metastasis, recurrence, and vascular invasion, and it also has a high misdiagnosis rate [8]. It is the 19th most common cancer in humans, with approximately 300,000 new cases reported in 2018. In 2019, 4740 males and 2490 females died from melanoma [9]. The American Cancer Society estimated in 2022 that about 99,780 people in the U.S. would be diagnosed with melanoma and that approximately 7650 would die from it [10]. The exact cause of melanoma is still unknown, but environmental and genetic factors and ultraviolet radiation are considered the primary causes of skin cancer. Melanoma originates in melanocytes, the skin cells that produce the dark pigment in hair, eyes, and skin [11]. Melanoma cases have been gradually increasing over the last few years. If the cancer is detected at an early stage, minor surgery can increase the chance of recovery. Dermoscopy is a popular non-invasive imaging technique widely used by dermatologists to evaluate pigmented skin lesions [12]. By magnifying the lesion surface, dermoscopy makes its structures more visible to the examining dermatologist [13,14]. However, the technique is effective only in the hands of trained, expert dermatologists, because it depends entirely on the physician's experience and visual acuity [15]. These challenges motivate researchers to develop new strategies and methods for diagnosing and visualizing melanoma and nonmelanoma skin cancer. Computer-aided diagnosis (CAD) systems have traditionally been applied for this purpose because their convenient, user-friendly procedure helps young and inexperienced dermatologists diagnose melanoma.
A proficient and experienced dermatologist can achieve 65% to 75% precision in classifying melanoma from dermoscopy photographs [16]. Automated melanoma diagnosis from medical images can support dermatologists in their clinical routine. These challenges have led research groups to focus on diagnosing melanoma with AI-based tools, and the use of artificial intelligence (AI) for skin cancer diagnosis has recently gained considerable attention.

2. Methods for the Detection of Melanoma and Nonmelanoma Skin Cancer (RQ1)

Several new algorithms and techniques based on transfer learning and federated learning have been proposed for classifying melanoma and nonmelanoma skin cancer. This section examines state-of-the-art methods of this kind.

2.1. Fully Convolutional Network (FCN)-Based Methods

Some studies used FCN-based methods to classify skin cancer. For example, Lequan et al. [34] proposed a two-stage approach for automated skin cancer recognition based on deep CNNs, in which a fully convolutional residual network (FCRN) segments the lesion and a deep residual network (DRN) classifies it. Residual learning is used to train both networks. The approach first produces a grade map of the skin lesion, from which the lesion region is cropped and resized; the cropped lesion patch is then passed on for melanoma classification. Similarly, Al-Masni [35] proposed an integrated two-level deep learning framework for segmenting and classifying multiple skin lesions. First, a full-resolution convolutional network (FRCN) is applied to dermoscopic images to segment the lesion boundaries. The segmented lesions are then fed into pretrained classifiers, such as DenseNet-201, Inception-ResNet-v2, ResNet-50, and Inception-v3, to differentiate between skin lesion types. The pre-segmentation phase enables these classifiers to learn lesion-specific features while ignoring the surrounding region.
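The crop-and-resize step between segmentation and classification can be illustrated with a short NumPy sketch. It assumes a binary lesion mask has already been produced by a segmentation network; the helper name `crop_lesion_patch`, the 10% margin, the 224 × 224 output size, and nearest-neighbour resizing are illustrative assumptions, not the exact settings used in [34] or [35].

```python
import numpy as np

def crop_lesion_patch(image, mask, out_size=(224, 224), margin=0.1):
    """Crop the lesion bounding box (plus a margin) from `image` using a
    binary segmentation `mask`, then resize with nearest-neighbour sampling.

    Hypothetical helper for illustration; the 224x224 output size is an
    assumption typical of ImageNet-pretrained classifiers.
    """
    rows = np.any(mask, axis=1)
    cols = np.any(mask, axis=0)
    if not rows.any():
        raise ValueError("mask contains no lesion pixels")
    r0, r1 = np.where(rows)[0][[0, -1]]
    c0, c1 = np.where(cols)[0][[0, -1]]

    # Enlarge the box by a relative margin so some border context is kept.
    dr = int(round((r1 - r0 + 1) * margin))
    dc = int(round((c1 - c0 + 1) * margin))
    h, w = mask.shape
    r0, r1 = max(r0 - dr, 0), min(r1 + dr, h - 1)
    c0, c1 = max(c0 - dc, 0), min(c1 + dc, w - 1)
    patch = image[r0:r1 + 1, c0:c1 + 1]

    # Nearest-neighbour resize: map each output pixel to a source pixel.
    out_h, out_w = out_size
    ri = (np.arange(out_h) * patch.shape[0] / out_h).astype(int)
    ci = (np.arange(out_w) * patch.shape[1] / out_w).astype(int)
    return patch[ri[:, None], ci[None, :]]
```

For example, calling `crop_lesion_patch(image, mask)` on a 100 × 120 RGB image returns a 224 × 224 × 3 patch covering the lesion, which would then be fed to the classification network. A production pipeline would more likely use an interpolating resize from an image library.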

Jayapriya and Jacob [36] designed an architecture consisting of two fully convolutional networks (FCNs) based on pretrained GoogLeNet and VGG-16 models. These hybrid pretrained networks extract more specific features and produce better segmentation output than an FCRN. The segmented lesion image is then passed to a DRN and a hand-crafted feature extractor, and an SVM classifier assigns the lesions to melanoma or nonmelanoma classes. Khan et al. [37] proposed a method for the multiclass localization and classification of lesion images based on Mask R-CNN and ResNet-50 with a feature pyramid network (FPN).

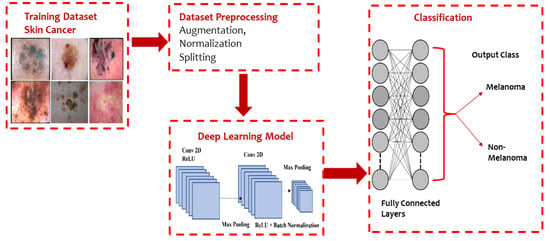

Al-Masni et al. [38] also presented an integrated model based on an FRCN and a ResNet-50 network. The FRCN segments the lesion boundaries in dermoscopy images, and the segmented lesions are passed to a pretrained ResNet-50 deep residual network that is fine-tuned to classify the various lesion types. The basic architecture of a CNN model used for classifying melanoma and nonmelanoma is presented in Figure 5.

Figure 5. Typical CNN architecture for melanoma and nonmelanoma cancer classification.

2.2. Hybrid Methods

Many studies used hybrid methods for the diagnosis of skin cancer. Kassem et al. [39] proposed an architecture that can accurately classify eight different kinds of skin lesion images, even when the classes are imbalanced. The method uses a pretrained GoogLeNet architecture with supplementary filters added to every layer to improve feature extraction and reduce background noise. The model's layers were modified in two ways for lesion classification, with the aim of also identifying outliers or unknown images. Performance improved when the weights of all layers were fine-tuned rather than only those of the replaced layers. Gavrilov et al. [40] used Inception v3, a neural network pretrained on a large image dataset. Miglani et al. [41] used transfer learning with EfficientNet-B0, a pretrained model built with a novel scaling method, to classify lesion images into various categories. Hosny et al. [42] developed a method based on a pretrained AlexNet and transfer learning to classify seven different kinds of lesions.

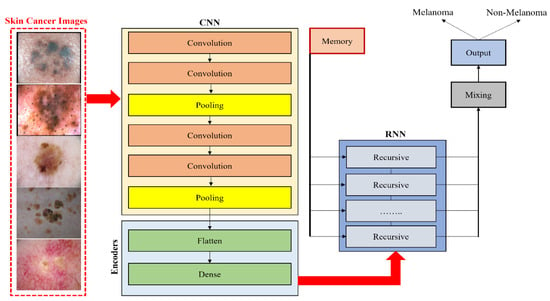

Esteva et al. [43] implemented a pretrained GoogLeNet Inception v3 classifier for two binary classification problems: benign nevi versus malignant melanomas, and benign seborrheic keratoses versus keratinocyte carcinomas. Majtner et al. [44] proposed a two-part method for melanoma detection that combines deep learning-based feature extraction with feature reduction by linear discriminant analysis (LDA). A pretrained AlexNet was used for feature extraction, and LDA was then employed to optimize the features, reducing the size of the feature set and improving classification precision. Ather et al. [45] proposed a multiclass classification framework for identifying and discriminating between different benign and malignant skin lesions. Mahbod et al. [46] used three deep models, namely ResNet-18, VGG16, and AlexNet, to classify three lesion classes: benign nevi, malignant melanoma, and seborrheic keratosis. Namozov et al. [47] proposed a deep method with adaptive piecewise linear (APL) activation units that achieves high melanoma recognition performance. Hosny et al. [48] proposed a deep CNN that, with image augmentation and fine-tuning, classifies color images of skin lesions into three types: melanoma, atypical nevus, and common nevus. To categorize these three types, the last layer of a pretrained AlexNet is modified. The technique works directly with any photographic or dermoscopic image and requires no preprocessing. Devansh et al. [49] developed an automated melanoma diagnosis technique designed for small-scale, extremely imbalanced skin lesion image datasets with image occlusions. Maron et al. [50] examined the brittleness of three pretrained CNNs (VGG16, ResNet50, and DenseNet121) and showed that minor changes to the input image, such as rotation or scaling, significantly affect their classifications. Rivera et al. [51] proposed a technique for the early detection of melanoma that runs on mobile phones or embedded devices and uses a pretrained MobileNet. Khan et al. [52] proposed a deep neural network model based on ResNet-50 and ResNet-101 with a kurtosis-controlled principal component analysis (KcPCA) approach. Khan et al. [53] implemented a CAD system based on Mask R-CNN and a DenseNet deep model for lesion detection and recognition. Georgakopoulos et al. [54] compared CNN models trained with and without pretraining. For the pretrained model, transfer learning was applied instead of randomly initializing the CNN weights, and the results show that this hybrid approach significantly improves classification. Kulhalli et al. [55] proposed a hierarchical classifier approach based on a CNN and transfer learning, in which a branched structure uses the pretrained InceptionV3 model for skin lesion classification. The structure of hybrid methods based on transfer learning classifiers is presented in Figure 6.

Figure 6. Hybrid CNN model with RNN for classifying melanoma and nonmelanoma skin disease.
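Fisher linear discriminant analysis, the reduction technique named for [44], can be sketched as follows for the two-class case (melanoma versus other). The deep features are assumed to have been extracted beforehand, for example from a layer of a pretrained AlexNet; synthetic vectors stand in for them in the test, and the function names and the small ridge term are illustrative assumptions rather than details from the source.

```python
import numpy as np

def fisher_lda_direction(X, y, ridge=1e-6):
    """Return the unit projection vector w that maximises between-class
    separation relative to within-class scatter, for labels y in {0, 1}.
    """
    X0, X1 = X[y == 0], X[y == 1]
    mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
    # Within-class scatter S_w: sum of the two per-class scatter matrices.
    S_w = (X0 - mu0).T @ (X0 - mu0) + (X1 - mu1).T @ (X1 - mu1)
    S_w += ridge * np.eye(X.shape[1])  # small ridge keeps S_w invertible
    w = np.linalg.solve(S_w, mu1 - mu0)
    return w / np.linalg.norm(w)

def lda_classify(X, w, threshold):
    """Project features onto w and threshold the resulting 1-D score."""
    return (X @ w > threshold).astype(int)
```

Because two-class LDA projects onto at most one direction (C − 1 directions in general for C classes), it shrinks a high-dimensional deep feature vector to a compact discriminative score, which is the kind of reduction described above.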

2.3. Ensemble Methods

Tahir et al. [27] introduced a CNN-based method named DSCC_Net for the classification of skin cancer. Three publicly accessible benchmark datasets, ISIC 2020, DermIS, and HAM10000, were used to evaluate its performance, and DSCC_Net was compared with six baseline deep learning methods. The researchers used the synthetic minority oversampling technique (SMOTE) to address underrepresented classes. DSCC_Net classified four distinct categories of skin cancer with an area under the curve (AUC) of 99.43%, an accuracy of 94.17%, a recall of 93.76%, a precision of 94.28%, and an F1-score, which combines precision and recall, of 93.93%.
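SMOTE creates new minority-class samples by interpolating between existing ones rather than duplicating them. The NumPy sketch below illustrates the basic interpolation on feature vectors; whether [27] applied SMOTE to images or to extracted features is not stated here, so the feature-space setting, the function name, and k = 5 are assumptions. In practice, a maintained implementation such as `SMOTE` from the `imbalanced-learn` package would normally be used.

```python
import numpy as np

def smote(X_min, n_new, k=5, rng=None):
    """Generate n_new synthetic minority samples in the style of SMOTE.

    Each synthetic point lies on the segment between a randomly chosen
    minority sample and one of its k nearest minority neighbours.
    """
    rng = np.random.default_rng(rng)
    n = len(X_min)
    if n < 2:
        raise ValueError("need at least two minority samples")
    k = min(k, n - 1)
    # Pairwise squared Euclidean distances among the minority samples.
    d2 = ((X_min[:, None, :] - X_min[None, :, :]) ** 2).sum(axis=-1)
    np.fill_diagonal(d2, np.inf)  # a sample is not its own neighbour
    neighbours = np.argsort(d2, axis=1)[:, :k]

    base = rng.integers(0, n, size=n_new)                        # anchor samples
    nb = neighbours[base, rng.integers(0, k, size=n_new)]        # chosen neighbours
    gap = rng.random((n_new, 1))                                  # interpolation factor in [0, 1)
    return X_min[base] + gap * (X_min[nb] - X_min[base])
```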

Karri et al. [56] developed a model based on two notable technical contributions. The first is a two-stage, domain-to-domain transfer learning scheme that combines model-level and data-level transfer learning, carried out by fine-tuning on two datasets, MoleMap and ImageNet. The second is nSknRSUNet, a deep learning network designed for skin lesion segmentation that uses large receptive fields and feature fusion to enhance spatial edge attention. The model's predictions were compared with real skin lesion images from two separate clinical settings, the MoleMap and HAM10000 datasets. With data-level transfer learning, the model attained a Dice Similarity Coefficient (DSC) of 94.63% and an accuracy of 99.12% on HAM10000, and a DSC of 93.63% and an accuracy of 97.01% on MoleMap.
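The DSC reported above measures the overlap between a predicted lesion mask P and a ground-truth mask T as DSC = 2|P ∩ T| / (|P| + |T|). A minimal NumPy sketch follows; pixel-wise accuracy is included for comparison on the assumption, not stated in the source, that the reported accuracy is computed per pixel.

```python
import numpy as np

def dice_coefficient(pred, target, eps=1e-8):
    """Dice similarity coefficient between two binary masks:
    DSC = 2 |P ∩ T| / (|P| + |T|)."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    intersection = np.logical_and(pred, target).sum()
    return 2.0 * intersection / (pred.sum() + target.sum() + eps)

def pixel_accuracy(pred, target):
    """Fraction of pixels whose predicted label matches the ground truth."""
    return float((np.asarray(pred, bool) == np.asarray(target, bool)).mean())
```

The DSC can be written as 2TP / (2TP + FP + FN), so it ignores true-negative background pixels. Because healthy skin usually occupies most of a dermoscopic image, pixel accuracy is boosted by those easy background pixels and tends to be higher than the DSC for the same mask.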

Several research studies used ensemble methods. Yu et al. [57] proposed a network ensemble strategy that combines deep convolutional descriptors for automated skin lesion detection, adopting pretrained ResNet50 and VGGNet models. Multiple CNNs are trained with a data augmentation technique designed around illuminant projection and color recalibration. The convolutional activation maps of each skin image are then extracted from each network, and local deep features are selected from the object-relevant region. Finally, a Fisher kernel encoding-based method combines these deep features into image representations, which an SVM uses to classify the skin lesions. Pal et al. [58] used an ensemble of three fine-tuned architectures, DenseNet-121, MobileNet-50, and ResNet50, to predict the disease class.

Alizadeh et al. [59] proposed an ensemble method based on two CNN architectures, a nine-layer CNN model and a pretrained VGG-19 model, combined with other classifiers. Milton [60] applied transfer learning with InceptionV4, SENet154, InceptionResNetV2, and PNASNet-5-Large, as well as an ensemble of all these architectures, to classify seven lesion classes. Chaturvedi et al. [61] used five pretrained CNN models (ResNeXt101, NASNet Large, InceptionResNetV2, InceptionV3, and Xception) and four ensemble models to find the best model for multiclass skin cancer classification. Amirreza et al. [62] proposed a method that ensembles deep features extracted from several pretrained models.

Le et al. [63] proposed an ensemble framework based on modified ResNet50 models with a focal loss function and class weights. Mahbod et al. [64] investigated how the resolution of dermoscopic images affects the classification performance of different fine-tuned CNNs in skin lesion analysis. They also presented a novel fusion approach that combines the outputs of multiple fine-tuned networks, such as DenseNet-121, ResNet-50, and ResNet-18, trained on dermoscopic images of various sizes. Nyiri and Kiss [65] proposed several novel ensembles of networks, such as VGG16, VGG19, ResNet50, Xception, InceptionV3, and DenseNet121, trained with differently preprocessed data and different hyperparameters to classify skin lesions. Bi et al. [66] implemented a CNN ensemble to classify dermoscopic images as nevi, seborrheic keratosis, or melanoma. Instead of training many CNNs, they fine-tuned a pretrained CNN in three ways: a multiclass ResNet for the three classes (ResNet multiclass); binary ResNets that separate melanoma, or seborrheic keratosis, from the other two lesion classes (ResNet binary); and an ensemble of the first two (ResNet ensemble) that produces the final results.
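The focal loss used in [63] rescales the cross-entropy so that well-classified examples contribute less, and class weights further up-weight rare lesion types. The NumPy sketch below shows the common multiclass form FL(p_t) = −α_t (1 − p_t)^γ log p_t; the default γ = 2 and the use of per-class weights as α_t are assumptions, not the settings reported by Le et al.

```python
import numpy as np

def focal_loss(probs, labels, gamma=2.0, alpha=None):
    """Mean multiclass focal loss: -alpha_t * (1 - p_t)**gamma * log(p_t).

    probs  : (N, C) softmax probabilities
    labels : (N,) integer class indices
    alpha  : optional (C,) per-class weights (assumed, e.g. inverse frequency)
    """
    probs = np.clip(probs, 1e-12, 1.0)           # avoid log(0)
    p_t = probs[np.arange(len(labels)), labels]  # probability of the true class
    weight = (1.0 - p_t) ** gamma                # down-weights easy samples
    if alpha is not None:
        weight = weight * np.asarray(alpha)[labels]
    return float(np.mean(-weight * np.log(p_t)))
```

With γ = 0 and no class weights the function reduces to ordinary cross-entropy, which makes a convenient sanity check; inverse class frequencies are one common choice for `alpha`.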

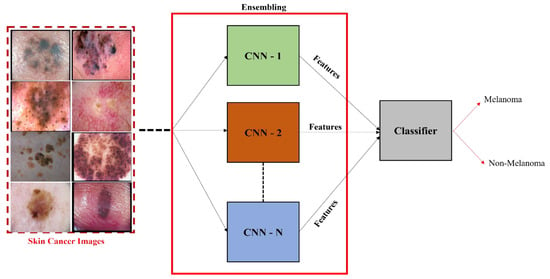

Wei et al. [67] proposed a lightweight ensemble CNN model for melanoma recognition based on MobileNet and DenseNet. Harangi et al. [68] showed that an ensemble of different CNNs improved on the accuracies of the individual models in classifying skin lesions as seborrheic keratosis, melanoma, or benign. They fused the output layers of pretrained GoogLeNet, ResNet, AlexNet, and VGGNet models, using the best-performing fusion-based methods to aggregate the pretrained models into one framework. Finally, the extracted deep features were classified based on a sum of maximal probabilities. An overview of ensembles of CNN-based models is depicted in Figure 7.

Figure 7. Ensemble CNN model for the classification of melanoma and nonmelanoma.
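Output-level fusion of the kind described above can be sketched with a few standard combination rules applied to each model's softmax probabilities. This is a generic illustration: the function name is hypothetical, and the "max" rule is only one possible reading of a fusion based on maximal probabilities, not a reproduction of the exact rule in [68].

```python
import numpy as np

def fuse_predictions(prob_list, rule="mean"):
    """Fuse per-model softmax outputs, each of shape (N, C), into N class labels.

    "mean": soft voting, averaging class probabilities over models
    "max" : score each class by the highest probability any model assigns it
    "vote": hard voting, majority over each model's arg-max (ties -> lowest index)
    """
    P = np.stack([np.asarray(p, dtype=float) for p in prob_list])  # (M, N, C)
    if rule == "mean":
        return P.mean(axis=0).argmax(axis=1)
    if rule == "max":
        return P.max(axis=0).argmax(axis=1)
    if rule == "vote":
        votes = P.argmax(axis=2)  # (M, N): predicted class per model
        n_classes = P.shape[2]
        counts = (votes[..., None] == np.arange(n_classes)).sum(axis=0)  # (N, C)
        return counts.argmax(axis=1)
    raise ValueError(f"unknown fusion rule: {rule}")
```

Soft voting keeps each model's confidence, whereas hard voting only counts decisions; which works better for a given set of CNNs is an empirical question.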

2.4. Federated Learning

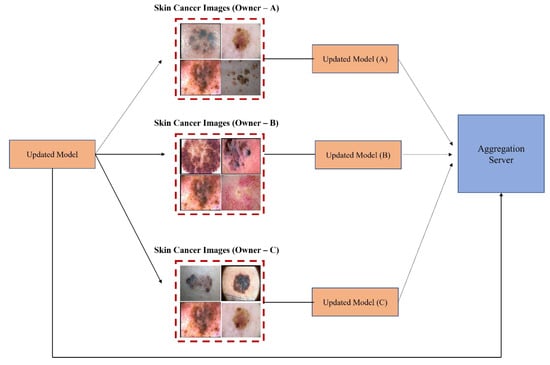

Recently, federated learning (FL) has been proposed to train models on decentralized data in a privacy-preserving manner. However, FL depends on labeled data on the client side, which are often unavailable and costly to obtain. To address this, Bdair et al. [31] proposed the FedPerl framework, an FL method for classifying skin lesions using a multisource dataset. This study also applied various methods to improve privacy, hide user identity, and minimize communication costs. Lan et al. [69] proposed MaOEA-IS, an FL-based approach that better addresses data privacy and fragmentation in skin cancer diagnosis. Hossen et al. [70] applied FL with a convolutional neural network to classify skin lesions in a custom skin lesion dataset while ensuring data security. Agbley et al. [71] proposed an FL model for melanoma detection that fuses lesion images with their corresponding clinical metadata while preserving privacy during training. Hashmani et al. [72] proposed an adaptive FL-based model that can learn new features; it consists of a global point (server) and intelligent local edges (dermoscopy), and it classifies skin lesion dermoscopy images and predicts their severity level. Bdair et al. [73] also proposed a semi-supervised FL framework, inspired by the peer learning (PL) approach from educational psychology and by ensemble averaging from committee machines, for lesion classification in dermoscopic skin images. A block diagram of FL for skin lesion image classification is illustrated in Figure 8, and Table 2 presents an overview of FL and transfer learning (TL) classifiers for skin disease classification.

Figure 8. Federated learning for skin image classification.
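The server-side aggregation at the heart of many FL systems, federated averaging (FedAvg), can be sketched in a few lines. This is a generic baseline shown under assumptions: model parameters are represented as lists of NumPy arrays, clients are weighted by their number of local images, and the local training step is omitted. Frameworks such as FedPerl [31] or the semi-supervised method of [73] add further mechanisms, such as peer learning, that this sketch does not model.

```python
import numpy as np

def fedavg(client_weights, client_sizes):
    """Federated averaging (FedAvg) of model parameters.

    client_weights : list over clients; each item is a list of NumPy arrays
                     (one array per layer, same shapes on every client)
    client_sizes   : number of local training images on each client
    Returns the global parameters as a size-weighted average of the clients'
    parameters; only parameters, never images, are sent to the server.
    """
    sizes = np.asarray(client_sizes, dtype=float)
    coeffs = sizes / sizes.sum()
    n_layers = len(client_weights[0])
    return [
        sum(c * w[layer] for c, w in zip(coeffs, client_weights))
        for layer in range(n_layers)
    ]
```

In each round, clients would train locally starting from the returned global parameters and send back only their updated weights, so raw dermoscopic images never leave the participating site.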

Table 2. Federated and transfer learning classifiers for the classification of melanoma.

| Ref | Training Algorithms | Architecture | Datasets | Image Modality |
|---|---|---|---|---|
| [17] | Hybrid deep CNN | DCNN | HAM10000, ISIC 2018 | Dermoscopy |
| [18] | SLDCNet, FrCN | DCNN | ISIC 2019 | Dermoscopy |
| [31] | FedPerl | FL | Multisource combined dataset | Dermoscopy |
| [42] | MaOEA-IS (federated learning) | FL | ISIC 2018 | Dermoscopy |
| [44] | AlexNet + LDA | CNN | ISIC Archive | Dermoscopy |
| [45] | ResNet-18, VGG16, AlexNet | DNN | ISIC 2016, ISIC 2017 | Dermoscopy |
| [47] | LeNet + adaptive piecewise linear function | CNN | ISIC 2018 | Dermoscopy |
| [48] | AlexNet | DNN | PH2 | Dermoscopy |
| [66] | DenseNet | DCNN | ISIC 2017, HAM10000 | Dermoscopy |
| [67] | MobileNet V1, DenseNet-121 | DCNN | ISIC 2016 | Dermoscopy |
| [68] | CNN | DCNN | Dermofit, MEDNODE | Dermoscopy |
| [69] | MaOEA | FSDM | HAM10000 | Dermoscopy |
| [70] | FL + CNN | CNN | Custom image dataset | Dermoscopy |
| [71] | FL + CNN | FL | Multisource dataset | Dermoscopy |
| [72] | Adaptive ensemble CNN with FL | FL | ISIC 2019 | Dermoscopy |
| [74] | Ensemble DCNN | DCNN | ISIC 2017, PH2 | Dermoscopy |
| [75] | Derma Net | CNN | ISIC 2017 | Dermoscopy |
| [76] | VGG-M, VGG-16 | DNN | ISIC 2016, Atlas | Dermoscopy |
| [77] | Ensemble CNN | CNN | HAM10000 | Dermoscopy |
| [78] | CNN | CNN | ISIC 2017, ISIC 2016, PH2 | Dermoscopy |

3. Performance Evaluation of Methods to Determine the Efficacy of Various Classification Algorithms for Melanoma and Nonmelanoma Cancer Using Clinical and Dermoscopic Images (RQ2)

The classification performance reported in the reviewed articles was assessed using evaluation metrics such as the true positive rate (TPR, or sensitivity), true negative rate (TNR, or specificity), positive predictive value (PPV, or precision), accuracy (ACC), and area under the ROC curve (AUC). The credibility and performance of each classification method were judged on these metrics. The performance of the proposed models on single, multiple, and combined datasets was evaluated, and the performance metrics are summarized in Table 3.
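These metrics follow the standard confusion-matrix definitions: TPR = TP/(TP + FN), TNR = TN/(TN + FP), PPV = TP/(TP + FP), and ACC = (TP + TN)/(TP + TN + FP + FN). The NumPy sketch below computes them for a binary melanoma-versus-other task, together with a rank-based AUC (the probability that a randomly chosen positive case is scored higher than a randomly chosen negative one). Encoding melanoma as label 1 and the function names are assumptions for illustration; the reviewed studies may have used multiclass or macro-averaged variants.

```python
import numpy as np

def binary_metrics(y_true, y_pred):
    """TPR, TNR, PPV and ACC from binary labels (1 = melanoma, 0 = other)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = np.sum((y_true == 1) & (y_pred == 1))
    tn = np.sum((y_true == 0) & (y_pred == 0))
    fp = np.sum((y_true == 0) & (y_pred == 1))
    fn = np.sum((y_true == 1) & (y_pred == 0))
    return {
        "TPR": tp / (tp + fn),  # sensitivity / recall
        "TNR": tn / (tn + fp),  # specificity
        "PPV": tp / (tp + fp),  # precision
        "ACC": (tp + tn) / len(y_true),
    }

def auc_score(y_true, scores):
    """ROC AUC as the probability that a random positive is scored above a
    random negative; ties count one half (Mann-Whitney formulation)."""
    y_true, scores = np.asarray(y_true), np.asarray(scores)
    pos, neg = scores[y_true == 1], scores[y_true == 0]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))
```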

3.1. Analyzing Performance on a Single Dataset

Damian et al. [79] proposed a CAD method for nonmelanoma and melanoma lesion detection and classification that fuses texture, color, shape, and deep learning features through mutual information (MI) metrics. Tested on the HAM10000 dataset, it performed competitively against other advanced methods. Adegun and Viriri [80] implemented a CAD framework consisting of a multi-scale encoder–decoder segmentation network based on a fully convolutional network, combined with a fully connected conditional random field (CRF) that refines skin lesion borders to generate precise soft segmentation maps, and a DenseNet architecture for the classification of lesion images. Nida et al. [81] implemented a deep regional CNN with fuzzy C-means (FCM) clustering for skin lesion segmentation and detection. Kaymak et al. [82] used four FCNs, namely FCN-8s, FCN-16s, FCN-32s, and FCN-AlexNet, for the automatic semantic segmentation of lesion images. Shan et al. [83] implemented a ∇N-Net architecture with a feature fusion method. These methods were all tested and evaluated on the ISIC 2018 benchmark dataset. Bakheet et al. [84] proposed a CAD method based on multilevel neural networks with improved backpropagation based on the Levenberg–Marquardt (LM) model and Gabor-based entropic features, and Balaji et al. [85] implemented a neural network based on the firefly algorithm and FCM. The performance of these classification methods was evaluated on the open-source PH2 dataset, which consists of only 200 lesion images: 40 melanoma, 80 atypical nevi, and 80 common nevi. Warsi et al. [86] proposed a novel multi-direction 3D CTF method for extracting features from images and employed a multilayer backpropagation neural network for classification.
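Fuzzy C-means, used for segmentation in [81] and [85], assigns every pixel a soft membership in each cluster instead of a hard label. The NumPy sketch below implements the standard FCM updates; clustering raw pixel values into two clusters (lesion and surrounding skin), the fuzzifier m = 2, and the stopping tolerance are illustrative assumptions, and the way [81] and [85] combine FCM with a CNN or with the firefly algorithm is not reproduced.

```python
import numpy as np

def fuzzy_c_means(X, c=2, m=2.0, n_iter=100, tol=1e-5, seed=0):
    """Fuzzy C-means clustering.

    X : (N, D) data, e.g. per-pixel colour values of a dermoscopic image
    c : number of clusters (e.g. lesion vs. healthy skin)
    m : fuzzifier (> 1); larger values give softer memberships
    Returns (centers of shape (c, D), memberships U of shape (N, c)).
    """
    rng = np.random.default_rng(seed)
    U = rng.random((X.shape[0], c))
    U /= U.sum(axis=1, keepdims=True)  # memberships of each point sum to 1
    for _ in range(n_iter):
        Um = U ** m
        # Centers are membership-weighted means of the data.
        centers = (Um.T @ X) / Um.sum(axis=0)[:, None]
        # Distances from every point to every center (floor avoids division by 0).
        d = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        d = np.fmax(d, 1e-12)
        # Standard FCM update: u_ik = 1 / sum_j (d_ik / d_ij) ** (2 / (m - 1)).
        ratio = (d[:, :, None] / d[:, None, :]) ** (2.0 / (m - 1.0))
        U_new = 1.0 / ratio.sum(axis=2)
        converged = np.abs(U_new - U).max() < tol
        U = U_new
        if converged:
            break
    return centers, U
```

To segment an image, one would reshape an H × W × 3 array to (H·W, 3), run `fuzzy_c_means`, and reshape `U.argmax(axis=1)` back to H × W; deciding which cluster is the lesion (for example, the darker center) is left to the caller.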

3.2. Performance Evaluation on Multiple Datasets

Xie et al. [87] proposed a mutual bootstrapping DCNN method based on coarse-SN, mask-CN, and enhanced-SN for simultaneous lesion image segmentation and classification, and validated it on the ISIC 2017 and PH2 datasets. Barata et al. [88] proposed a multi-task CNN with channel and spatial attention modules that performs a hierarchical diagnosis of lesion images, and evaluated it on the ISIC 2017 and ISIC 2018 datasets. Hosny et al. [89] implemented a method that uses region of interest (ROI) extraction, data augmentation, and modified GoogLeNet, ResNet-101, and AlexNet models; its performance was validated on the ISIC 2017, DermIS, DermQuest, and MED-NODE datasets. Filali et al. [90] used PH2 and ISIC 2017 to validate a method based on the fusion of texture, color, skeleton, and shape features with features from four pretrained CNNs. Hasan et al. [91] proposed a lightweight DSNet evaluated on PH2 and ISIC 2017. Saba et al. [92] used the PH2, ISIC 2017, and ISIC 2016 benchmark datasets to evaluate their contrast-enhanced deep CNN method, in which deep features were extracted from the average pooling (AP) and fully connected (FC) layers of a fine-tuned pretrained Inception V3 model.

3.3. Performance Evaluation on Combined Datasets

Javeria et al. [93] implemented an architecture that extracts deep features using AlexNet and VGG-16 and fuses them into a single feature vector for melanoma classification, with optimal features selected by principal component analysis (PCA). The model was assessed on a combined dataset of 7849 images from the ISIC 2016, ISIC 2017, and PH2 datasets. Hameed et al. [94] implemented an AlexNet-based method for multiclass, multilevel classification, in which the pretrained AlexNet was re-trained on a multisource dataset by fine-tuning. The method was validated using 3672 images gathered from online sources such as DermQuest, DermIS, DermNZ, the ISIC Image Challenge, and PH2. Zhang et al. [95] proposed an optimized CNN technique that uses a whale optimization method to improve CNN efficacy and evaluated it on a large combined DermIS and DermQuest dataset. Compared with other pretrained CNNs, the method gave the best results for melanoma diagnosis.
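The serial feature fusion and PCA-based selection described for [93] can be sketched in NumPy. The sketch assumes the AlexNet and VGG-16 features have already been extracted as two matrices with one row per image (random arrays stand in for them in the test); the function name, plain concatenation, and the number of retained components are illustrative assumptions, and the source's exact selection criterion is not reproduced.

```python
import numpy as np

def fuse_and_reduce(feats_a, feats_b, n_components):
    """Serially fuse two deep-feature matrices and keep the top principal components.

    feats_a, feats_b : (N, Da) and (N, Db) features of the same N images,
                       e.g. from two different pretrained CNNs
    Returns the (N, n_components) projected features and their
    explained-variance ratios.
    """
    fused = np.hstack([feats_a, feats_b])  # one concatenated vector per image
    centred = fused - fused.mean(axis=0)
    # PCA via SVD of the centred data matrix; rows of vt are principal axes,
    # ordered by decreasing singular value.
    _, s, vt = np.linalg.svd(centred, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)
    projected = centred @ vt[:n_components].T
    return projected, explained[:n_components]
```

The retained components are mutually uncorrelated and ordered by the variance they explain, so the explained-variance ratios offer one simple way to choose how many to keep.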

3.4. Performance Evaluation on a Smartphone Camera-Based Collected Dataset

Pacheco and Krohling [96] proposed an aggregation method that combines patient clinical information with pretrained models. The method was validated on the PAD dataset, which consists of images collected with different smartphone cameras, and improved balanced accuracy by approximately 7%.
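Balanced accuracy, the metric in which [96] reports its improvement, is the mean of the per-class recalls, so a minority class such as melanoma counts as much as a large benign class. A minimal NumPy sketch (the function name is an assumption):

```python
import numpy as np

def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall, so each class counts equally regardless of size."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    classes = np.unique(y_true)
    recalls = [np.mean(y_pred[y_true == c] == c) for c in classes]
    return float(np.mean(recalls))
```

For instance, a classifier that labels every image as benign in a set of eight benign and two melanoma cases has a plain accuracy of 0.8 but a balanced accuracy of only 0.5.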

Table 3. Performance evaluation of TL and FL classifiers (NM: not mentioned).

| Ref. | Dataset | Model | TPR | TNR | PPV | ACC | AUC |
|---|---|---|---|---|---|---|---|
| [18] | ISIC 2019 | SLDCNet, FrCN | 99% | 99.36% | NM | 99.92% | NM |
| [69] | ISIC 2018 | MaOEA-IS with FL | NM | NM | NM | 91% | 88.7% |
| [87] | ISIC 2018 | CKDNet | 96.7% | 90.4% | NM | 93.4% | NM |
| [88] | ISIC 2017 | CKDNet | 92.5% | 70% | NM | 88.1% | 90.5% |
| [97] | ISIC 2019 | GoogLeNet and transfer learning | 79.8% | 97% | 80.3% | 94.92% | NM |
| [98] | ISIC 2019 | ResNet-101, NASNet-Large | 88.46% | 88.24% | NM | 88.33% | NM |
| [99] | ISIC 2019 | Adaptive ensemble CNN with FL | 91% | NM | 90% | 89% | NM |
| [100] | ISIC 2018 | Ensemble GoogLeNet, Inceptionv3 | 45% | 97.2% | 67.5% | 88.2% | 91.3% |
| [101] | ISIC 2018 | ∇N-Net architecture | NM | NM | NM | 87% | NM |
| [102] | ISIC 2018 | Hybrid-CNN with DSNet | 86% | NM | 85% | NM | 97% |
| [103] | ISIC 2017 | FrCN | 78.9% | 96% | NM | 90.7% | NM |
| [104] | ISIC 2017 | Mutual bootstrapping DCNN | 72.7% | 91.5% | NM | 87.8% | 90.3% |
| [105] | ISIC 2017 | Ensemble CNN | NM | NM | NM | NM | 92.1% |
| [106] | ISIC 2017 | Inception-V3 | 94.5% | 98% | 95% | 94.8% | 98% |
| [107] | ISIC 2017 | DenseNet-161, ResNet-50 | 60.7% | 89.7% | NM | NM | 80.0% |
| [108] | ISIC 2017 | FC-DenseNet | 83.8% | 98.6% | NM | 93.71% | NM |
| [109] | ISIC 2017 | Lightweight DSNet | 83.6% | 93.9% | NM | 92.8% | NM |

4. Available Datasets for the Evaluation of Classification Methods for Melanoma and Nonmelanoma Skin Cancer (RQ3)

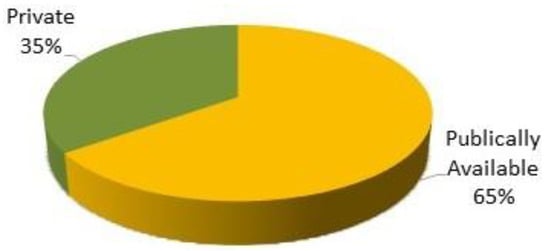

Numerous datasets are available for skin lesion classification. The literature shows that most of them are publicly available, while some are not publicly accessible. Figure 9 shows the proportion of public and private datasets.

Figure 9. Availability proportion of datasets.

4.1. Public Datasets

The public datasets discussed below are also known as benchmark datasets because of their wide use in melanoma detection research.

SIIM-ISIC 2020 challenge dataset: This dataset contains 33,126 dermoscopic images of different types, of which 584 are melanoma and the rest are nonmelanoma [29]. The images were collected at multiple centers from 2056 patients worldwide, include clinical contextual information, and are available in two formats, DICOM/JPEG and TIF.

ISIC 2019 challenge dataset: This dataset comprises 25,331 dermoscopic images of eight types and includes 4522 melanoma images, with the rest being nonmelanoma images [39,106].

ISIC 2018 challenge dataset: This dataset consists of 12,500 dermoscopic images of seven types: dermatofibroma, vascular lesions, actinic keratosis/Bowen's disease, BCC, seborrheic keratosis, nevi, and melanoma [110].

ISIC 2017 challenge dataset: This dataset contains 2000 dermoscopic images of three types, of which 374 are melanoma, 1372 are benign nevi, and 254 are seborrheic keratosis [99,101].

ISIC 2016 challenge dataset: This dataset has a collection of 900 images including 173 melanoma and 727 noncancerous, labeled as either malignant or benign [82].

PH2 dataset: This dermoscopic image database consists of 200 images, which contain 40 melanoma, 80 atypical nevi, and 80 common nevi, obtained from the “Pedro Hispano Clinic, Portugal Dermatology Service” [76,83,92].

HAM10000 dataset: This benchmark dataset is a large collection of multisource dermoscopic lesion images drawn from the ISIC 2018 grand challenge datasets. It contains 10,015 images of seven common types of pigmented skin lesions (MEL, VASC, AKIEC, NV, BCC, DF, and BKL) at a resolution of 600 × 450 pixels, including 1113 melanoma images [78,95,97].

MEDNODE dataset: This dataset contains 170 non-dermoscopic images of two types, 100 nevus images and 70 melanomas, from the digital image archive of the Department of Dermatology of the University Medical Center Groningen (UMCG). The dimensions of the clinical images range from 201 × 257 pixels to 3177 × 1333 pixels [111].

DermIS: The DermIS Digital Database is a European dermatology atlas for healthcare professionals. This image database consists of 206 RGB images, including 87 benign and 119 melanoma images, and provides extensive online lesion image information for skin cancer detection [112]. The images are labeled with two classes, nevus and melanoma, and the organization provides these classified images free of charge for academic purposes [113].

ISIC Archive: This online archive contains around 24,000 high-quality clinical and dermoscopic images of seven different types, including 2177 melanoma images. The growing archive is labeled manually [114].

DERM 7pt: This dataset is based on the seven-point skin lesion malignancy checklist. It comprises 1011 images, including 252 melanoma and 759 benign [115].

DermNet: The DermNet dataset is freely available, gathered and labeled by the DermNet Skin Disease Atlas, and has a collection of around 23,000 dermoscopic images, of which around 635 are melanoma. This dataset consists of 23 super-classes and 642 sub-classes of the disease [86,107,116].

DermQuest: The DermQuest database is an online medical atlas for educators and dermatologists. It provides 22,000 non-dermoscopic (clinical) images for analysis. These clinical images were reviewed and approved by renowned international editorial boards. The images in the dataset have only two labeled classes, nevus and melanoma. The organization provides these classified images free to use for academic purposes [116,117].

4.2. Private Datasets

DermNet NZ: The dermatology image library owned by the DermNet New Zealand Trust contains over 20,000 clinical images available for download and re-use [118]. It is frequently updated and provides information about the skin that can be accessed from any desktop or mobile web browser. High-definition, non-watermarked images are also available for purchase [108,117,119].

Dermofit Image Library: This dermoscopic image database contains 1300 high-quality images, including 526 melanomas. The Dermofit dataset covers 10 lesion classes: melanocytic nevus (mole), actinic keratosis, intraepithelial carcinoma, basal cell carcinoma, pyogenic granuloma, seborrheic keratosis, hemangioma, melanoma, dermatofibroma, and squamous cell carcinoma [120]. Obtaining the dataset requires a licensing agreement with a license fee of EUR 75 [90,101].

Interactive Dermoscopy Atlas: The Interactive Dermoscopy Atlas database consists of over 1000 clinical and dermoscopic images from patient visits over the past two decades [121]. It contains 270 melanomas, 681 unmarked lesions, and 49 seborrheic keratoses. The database is accessible for research purposes for a fee of EUR 250 [84,92].

4.3. Non-Listed/Non-Published Datasets

MoleMap Dataset: MoleMap is a New Zealand store-and-forward telemedicine service for diagnosing melanoma. The dataset contains 32,195 photographs of 14,754 lesions from 8882 patients, covering 15 disease groups, and was collected between 2003 and 2015. It includes both clinical and dermoscopic images of skin lesions, with image sizes ranging from 800 × 600 pixels to 1600 × 1200 pixels [122]. This dataset is available only upon special request [77,88].

Irma skin lesion dataset: This dataset comprises 747 dermoscopic images, including 560 benign and 187 melanoma lesions, with a resolution of 512 × 512 pixels. It is under third-party development and only available upon special request [88,120].

Skin-EDRA: The Skin-EDRA dataset consists of 1064 clinical and dermoscopic images with a resolution of 768 × 512 pixels. It is part of the EDRA CD-ROM, a resource compiled from two European university hospitals [66,77,123].

PAD dataset: The Federal University of Espírito Santo collected this dataset through its Dermatological Assistance Program (PAD) [124]. The dataset consists of 1612 images captured with various smartphone cameras at different resolutions and sizes. Each image is accompanied by clinical information, including the patient’s age, the lesion’s location on the body, and whether the lesion has recently grown, changed its pattern, itched, or bled. The dataset covers six types of skin lesions (MEL 67, ACK 543, BCC 442, NEV 196, SCC 149, and SEK 215) and is available only upon special request [57,60].
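Because the PAD class counts are given in full, the degree of class imbalance can be checked directly. The minimal sketch below computes class proportions, the ratio between the largest and smallest class, and inverse-frequency class weights. The weighting formula N / (K · n_c) is an assumption here (one common heuristic for weighting a training loss), not something prescribed for PAD, and the function names are hypothetical.

```python
# Per-class image counts for the PAD dataset, as reported above.
PAD_COUNTS = {"MEL": 67, "ACK": 543, "BCC": 442, "NEV": 196, "SCC": 149, "SEK": 215}


def class_proportions(counts):
    """Fraction of the dataset that each class represents."""
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


def imbalance_ratio(counts):
    """Size of the largest class divided by the size of the smallest class."""
    return max(counts.values()) / min(counts.values())


def inverse_frequency_weights(counts):
    """Hypothetical loss weights w_c = N / (K * n_c): rarer classes get larger weights."""
    total = sum(counts.values())
    k = len(counts)
    return {label: total / (k * n) for label, n in counts.items()}


if __name__ == "__main__":
    print(sum(PAD_COUNTS.values()))  # 1612, matching the reported dataset size
    for label, p in class_proportions(PAD_COUNTS).items():
        print(f"{label}: {p:.3f}")
    print(f"imbalance ratio: {imbalance_ratio(PAD_COUNTS):.2f}")
    print(inverse_frequency_weights(PAD_COUNTS))
```

With these counts, MEL makes up only about 4% of the images and ACK outnumbers it roughly eight to one, so MEL receives the largest weight (about 4.0) under this heuristic.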

The reviewed literature shows that, up to February 2023, publicly available datasets were preferred over private and non-listed ones and were the most frequently used by researchers to evaluate their proposed architectures.

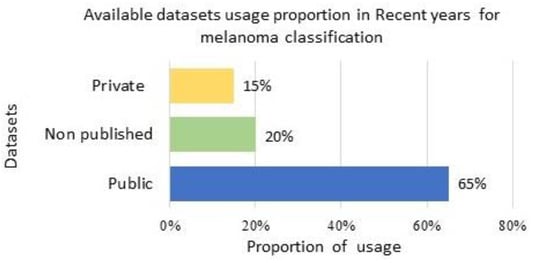

Figure 10 presents the recent trend in using available public, private, and non-listed datasets for melanoma classification.

Figure 10. Dataset usage proportion for method evaluation: proportion of public, non-listed, and private datasets used in recent years for melanoma classification.

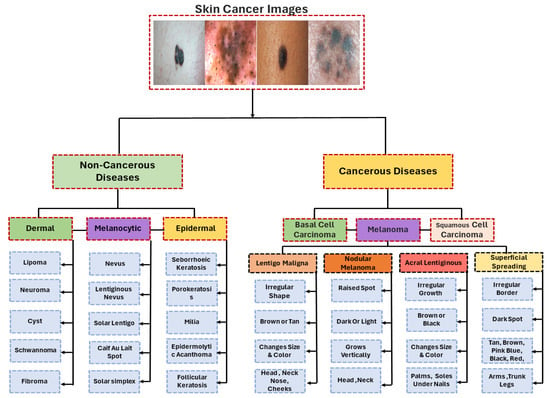

5. Taxonomy for Melanoma Diagnosis

Initially, the proposed taxonomy classifies lesion images into cancerous (malignant) and noncancerous (benign) diseases [125]. Most of the studies examined in this review applied transfer learning to the categorization of melanoma and nonmelanoma skin cancers. Squamous cell carcinoma and basal cell carcinoma are considered nonmelanoma cancers [126,127]. Melanoma is the most serious kind of skin cancer, and its four main subtypes are lentigo maligna melanoma, acral lentiginous melanoma, nodular melanoma, and superficial spreading melanoma. Once detected, these cancers are collectively referred to as malignant melanoma. Identifying the specific subtype requires analyzing the lesion and searching for characteristic patterns, and a model is trained to recognize the particular type of cancer [62,66,77,78].

Melanoma subtypes differ in appearance, size, structure, prevalence, and color. Lentigo maligna melanoma has an irregular shape, may be brown or tan, and varies in color and size, whereas nodular melanoma forms raised patches that can be dark or light and grows vertically. Acral lentiginous melanoma grows unevenly and varies in size and color, while superficial spreading melanoma is characterized by a black patch, an irregular border, and color variations. If a lesion is instead determined to be benign (noncancerous), it is divided into three primary categories: dermal, epidermal, or melanocytic [62,78]. These lesions can resemble melanoma in shape, but they are not cancerous and belong to the group of noncancerous diseases (Figure 11).
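Hierarchical taxonomies like this one are often encoded as a tree so that a fine-grained prediction can be mapped back to the top-level malignant/benign decision. The sketch below is a minimal, hypothetical encoding of the categories named in the text; the nested-dictionary layout and the function names `find_path` and `coarse_label` are illustrative assumptions, and the benign branch stops at the three categories because the text does not list their members.

```python
# Hypothetical nested-dict encoding of the taxonomy described above.
# Inner dicts are internal nodes; lists hold leaf labels.
TAXONOMY = {
    "malignant": {
        "melanoma": [
            "lentigo maligna melanoma",
            "acral lentiginous melanoma",
            "nodular melanoma",
            "superficial spreading melanoma",
        ],
        "nonmelanoma": ["basal cell carcinoma", "squamous cell carcinoma"],
    },
    # The text names only the three benign categories, so they are leaves here.
    "benign": ["dermal", "epidermal", "melanocytic"],
}


def find_path(tree, target, prefix=()):
    """Depth-first search: return the labels from the root down to `target`, or None."""
    if isinstance(tree, dict):
        for key, subtree in tree.items():
            if key == target:
                return prefix + (key,)
            found = find_path(subtree, target, prefix + (key,))
            if found is not None:
                return found
        return None
    # Otherwise `tree` is a list of leaf labels.
    return prefix + (target,) if target in tree else None


def coarse_label(fine_label):
    """Map any node of the taxonomy to its top-level malignant/benign class."""
    path = find_path(TAXONOMY, fine_label)
    if path is None:
        raise KeyError(f"unknown label: {fine_label}")
    return path[0]


if __name__ == "__main__":
    print(find_path(TAXONOMY, "nodular melanoma"))
    # ('malignant', 'melanoma', 'nodular melanoma')
    print(coarse_label("melanocytic"))  # benign
```

Keeping the hierarchy in one structure means a subtype classifier and a binary malignant/benign evaluation can share the same label definitions.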

Figure 11. Taxonomy for melanoma diagnosis.

Several metrics, such as precision, recall, and F1-score, are used to analyze the performance of methods. The Matthews Correlation Coefficient (MCC) provides a precise measure of classification performance because it takes all four values of the confusion matrix into account. MCC takes values between −1 and 1: a value of 1 represents perfect prediction, 0 indicates no improvement over random classification, and −1 represents complete disagreement between predictions and ground truth. The kappa statistic is a quantitative assessment of classification effectiveness that accounts for agreement occurring by chance. Its values also range from −1 to 1, where 1 represents complete agreement, 0 signifies no improvement over random chance, and −1 denotes complete disagreement. It compares the observed proportion of correct responses with the proportion expected by chance. The Jaccard index evaluates a model by measuring the agreement between its predicted outcomes and manually annotated examples, computed as the size of their intersection divided by the size of their union.
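The metrics above can all be computed from the four cells of a binary confusion matrix. The following minimal Python sketch does so, treating melanoma as the positive class. Some choices are assumptions rather than part of the text: the kappa statistic is taken to be Cohen's kappa, the Jaccard index is computed for the positive class as TP / (TP + FP + FN) (for segmentation masks the same ratio is taken over pixels), and returning 0.0 when a denominator is zero is a convention adopted here.

```python
import math


def binary_metrics(tp, fp, fn, tn):
    """Compute common evaluation metrics from a binary confusion matrix."""
    n = tp + fp + fn + tn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)

    # MCC uses all four cells; its range is [-1, 1].
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0

    # Cohen's kappa: observed agreement p_o versus agreement expected by chance p_e.
    p_o = (tp + tn) / n
    p_e = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / (n * n)
    kappa = (p_o - p_e) / (1 - p_e) if p_e != 1 else 0.0

    # Jaccard index of the positive class: |prediction ∩ truth| / |prediction ∪ truth|.
    jaccard = tp / (tp + fp + fn) if tp + fp + fn else 0.0

    return {"precision": precision, "recall": recall, "f1": f1,
            "mcc": mcc, "kappa": kappa, "jaccard": jaccard}


if __name__ == "__main__":
    # Illustrative counts only: 45 melanomas and 55 benign lesions.
    print(binary_metrics(tp=40, fp=10, fn=5, tn=45))
```

A perfect classifier yields 1.0 for MCC, kappa, and Jaccard, while one that gets every case wrong yields −1.0 for both MCC and kappa, matching the ranges described above.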

The MCC has several advantages over other metrics, including precision, confusion entropy, F1-score, and balanced precision. Its reliability on imbalanced datasets stems from the fact that it produces a high score only when a large proportion of both positive and negative instances are correctly classified.
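The imbalance argument can be illustrated with a small hypothetical test set of 91 benign and 9 melanoma images, classified by a model that labels almost everything as benign. If benign is treated as the positive class, accuracy and F1-score both look excellent while MCC stays low; swapping which class counts as positive changes F1 sharply but leaves MCC unchanged. The counts are invented for illustration, and the sketch defines the three metrics it needs so that it is self-contained.

```python
import math


def accuracy(tp, fp, fn, tn):
    return (tp + tn) / (tp + fp + fn + tn)


def f1_score(tp, fp, fn, tn):
    # F1 = 2TP / (2TP + FP + FN); it ignores true negatives entirely.
    return 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0


def mcc(tp, fp, fn, tn):
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0


# Hypothetical test set: 91 benign and 9 melanoma images.
# The classifier catches only 1 of the 9 melanomas and flags 1 benign lesion as melanoma.
benign_positive = dict(tp=90, fp=8, fn=1, tn=1)    # benign treated as the positive class
melanoma_positive = dict(tp=1, fp=1, fn=8, tn=90)  # same predictions, roles swapped

if __name__ == "__main__":
    for name, cm in [("benign as positive", benign_positive),
                     ("melanoma as positive", melanoma_positive)]:
        print(f"{name}: accuracy={accuracy(**cm):.3f} "
              f"F1={f1_score(**cm):.3f} MCC={mcc(**cm):.3f}")
```

Run as a script, this reports an accuracy of 0.910 in both cases and an F1-score of 0.952 versus 0.182, while MCC is about 0.205 either way, reflecting that only one melanoma was detected.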

This entry is adapted from the peer-reviewed paper 10.3390/s23208457
