Due to the success of artificial intelligence (AI) applications in the medical field over the past decade, concerns about the explainability of these systems have increased. The reliability requirements of black-box algorithms for making decisions affecting patients pose a challenge even beyond their accuracy. Recent advances in AI increasingly emphasize the necessity of integrating explainability into these systems. While most traditional AI methods and expert systems are inherently interpretable, the recent literature has focused primarily on explainability techniques for more complex models such as deep learning.

1. Classification of Explainability Approaches

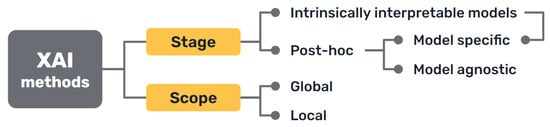

Several taxonomies have been proposed in the literature to classify XAI methods and approaches, depending on different criteria [40,41,42] (Figure 3).

Figure 3. Most common approaches to the classification of XAI methods.

First, there is a clear distinction between auxiliary techniques that aim to provide explanations for either the model’s prediction or its inner workings, which are commonly called post hoc explainability methods, and AI models that are intrinsically interpretable, either because of their simplicity, the features they use or because they have a straightforward structure that is readily understandable by humans.

Secondly, interpretability techniques can be distinguished by their scope: the explanations provided by an XAI approach can be local, meaning that they refer to particular predictions of the model, or global, if they try to describe the behaviour of the model as a whole.

Other differentiations can be made between interpretability techniques that are model specific, because they have requirements regarding the kind of data or algorithm used, and model agnostic methods, that are general and can be applied in any case. Intrinsically interpretable models are model specific by definition, but post hoc explainability methods can be generally seen as model agnostic, though some of them can have some requisites regarding the data or structure of the model.

More classifications can be made regarding how characteristics of the output explanations are displayed (textual, visual, rules, etc.), the type of input data required, the type of problem they can be applied to [42] or how they are produced [30].

2. Intrinsically Interpretable Models

Intrinsically interpretable models are those built using logical relations, statistical or probabilistic frameworks, and similar strategies that represent human-interpretable systems, since they use rules, relationships or probabilities assigned to known variables.

This approach to explainable AI, despite receiving less attention in recent years while the focus has been on DL, is historically the original one, and the perspective taken by knowledge-based systems.

2.1. Classical Medical Knowledge-Based Systems

Knowledge-based systems, commonly known as “expert systems”, are among the classical AI models that were first developed at the end of the 1960s. Explanations were sometimes introduced as a feature of these first rule-based expert systems by design, as they were needed not only by users, but also by developers, to troubleshoot their code during the design of these models. Thus, the importance of AI explainability has been discussed since the 1970s [44,45].

In medicine, many of these systems were developed as an aid for clinicians during the diagnosis of patients and treatment assignment [46]. It should be stressed that explanations for patient cases are, in many cases, not easily produced even by human medical professionals. The most widely known of these classical models was MYCIN [47], but many more based on causal, taxonomic, and other networks of semantic relations, such as CASNET, INTERNIST, the Present Illness Program, and others, were designed to support rules by models of underlying knowledge that explained the rules and drove the inferences in clinical decision-making [37,48,49]. Subsequently, the modelling of explanations was pursued explicitly for the MYCIN type of rule-based models [50]. The role of the explanation was frequently recognised as a major aspect of expert systems [51,52,53].

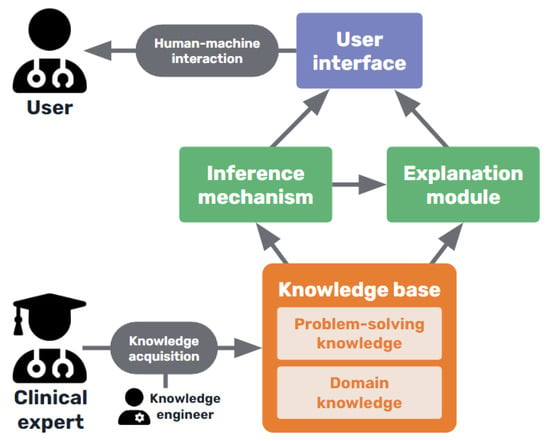

As shown in Figure 4, expert systems consist of a knowledge base containing the expertise captured from human experts in the field, usually in the form of rules, including both declarative or terminological knowledge and procedural knowledge of the domain [45]. This knowledge base is consulted by an inference algorithm when the user interacts with the system, and an explanation facility interacts with both the inference system and the knowledge base to construct the corresponding explaining statements [54].

Figure 4. Basic diagram of a medical knowledge-based expert system.

The explanations provided were pre-modelled [55] and usually consisted of tracing the rules used by the inference mechanism to arrive at the final decision and presenting them in an intelligible way to the user. Such an approach aimed to explain how and why the system produced a diagnosis [53] and, in some more sophisticated cases, even the causal evolution of the patient’s clinical status [56].
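The rule-tracing style of explanation described above can be illustrated with a minimal sketch. The rules, fact names, and function names below are hypothetical toy examples (they are not taken from MYCIN or any real clinical knowledge base); the sketch only shows how an inference engine can record the rules it fires so that an explanation facility can replay them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    conditions: frozenset
    conclusion: str


# Hypothetical toy knowledge base, for illustration only (not clinical advice).
RULES = [
    Rule("R1", frozenset({"fever", "cough"}), "respiratory_infection"),
    Rule("R2", frozenset({"respiratory_infection", "chest_pain"}), "suspect_pneumonia"),
]


def infer(facts, rules):
    """Forward chaining: fire rules until nothing new is derived, keeping a trace."""
    known = set(facts)
    trace = []
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conclusion not in known and rule.conditions <= known:
                known.add(rule.conclusion)
                trace.append(rule)
                changed = True
    return known, trace


def explain(conclusion, trace):
    """Answer 'how was this concluded?' by listing the fired rules that support it."""
    needed = {conclusion}
    lines = []
    for rule in reversed(trace):
        if rule.conclusion in needed:
            conditions = " AND ".join(sorted(rule.conditions))
            lines.append(f"{rule.name}: {conditions} -> {rule.conclusion}")
            needed |= rule.conditions
    return list(reversed(lines))


known, trace = infer({"fever", "cough", "chest_pain"}, RULES)
explanation = explain("suspect_pneumonia", trace)
for line in explanation:
    print(line)
```

Note that the explanation can only mention what the knowledge base contains, which mirrors the limitation discussed next.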

However, these explanations were limited by the knowledge base constructed by the system’s designers; all the justifications for knowledge had to be explicitly captured to produce specific explanations [53]. Knowledge acquisition (KA) and updating is a challenging task and this was not efficiently resolved, leading KA to become the bottleneck that resulted in the decline of interest in knowledge-based AI systems (as a main example of symbolic AI) in favour of data-based AI and ML systems.

2.2. Interpretable Machine Learning Models

As an alternative to knowledge-based systems, from the early days of medical decision making, statistical Bayesian, hypothesis-testing, and linear discriminant models were used as ML models that can be considered interpretable. They are based on the statistical relationships extracted from clinical databases, which allow formal probabilistic inferential methods to be applied. Ledley and Lusted proposed the Bayesian approach in their pioneering article in the journal Science in 1959 [57], with many of the alternatives first discussed in The Diagnostic Process conference [58]. Logistic regression is an effective statistical approach that can be used for classification and prediction. Generalised Linear Models (GLMs) [59] are also used in various problems in the literature [60,61], while Generalised Additive Models (GAMs), an extension of these, allow the modelling of non-linear relationships and are used for prediction in medical problems as well [62,63].
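To make concrete why logistic regression counts as interpretable, the following sketch shows how each coefficient can be read directly as an odds ratio and how a single prediction decomposes into per-feature contributions. The intercept, coefficients, and feature names are invented for illustration and do not come from any fitted clinical model.

```python
import math

# Hypothetical coefficients (log-odds scale); assumed values, not fitted to data.
INTERCEPT = -4.0
COEFFS = {"age_decades": 0.5, "smoker": 1.2}


def contributions(x):
    """Per-feature contribution to the log-odds for one patient."""
    return {name: COEFFS[name] * x[name] for name in COEFFS}


def predict_proba(x):
    """Probability of the positive class under the logistic model."""
    log_odds = INTERCEPT + sum(contributions(x).values())
    return 1.0 / (1.0 + math.exp(-log_odds))


def odds_ratios():
    """exp(coefficient): multiplicative change in the odds per unit increase."""
    return {name: math.exp(beta) for name, beta in COEFFS.items()}


patient = {"age_decades": 6, "smoker": 1}
probability = predict_proba(patient)
ratios = odds_ratios()
print(round(probability, 3), contributions(patient), ratios)
```

Under these assumed coefficients, being a smoker multiplies the odds of the outcome by exp(1.2), regardless of the other feature values; this global, closed-form reading is what black-box models lack.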

Decision trees are considered transparent models because of their hierarchical structure, which allows the easy visualisation of the logical processing of data in decision-making processes. Moreover, a set of rules can be extracted to formalise that interpretation. They can be used for classification in the medical context [64,65]; however, they sometimes show poor generalisation capabilities, so it is most common to use tree ensembles (like the random forest algorithm [66]) that show better performance, in combination with post hoc explainability methods, as they lose some interpretability [67,68,69,70].

The generalisation of formal models through Bayesian networks [71] has become popular for modelling medical prediction problems [72,73,74,75,76]. These networks represent conditional dependencies between variables in the form of a graph, so that evidence can be propagated through the network to update the diagnostic or prognostic states of a patient [77]. This reasoning process can be easily visualised.
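As a minimal illustration of evidence propagation, the sketch below updates the probability of a disease after two findings that are assumed to be conditionally independent given the disease (the simplest possible network: one parent node with two children). All probabilities are made-up values chosen for the example.

```python
def update(prior, p_finding_given_disease, p_finding_given_no_disease):
    """One application of Bayes' theorem for a binary disease variable."""
    numerator = p_finding_given_disease * prior
    evidence = numerator + p_finding_given_no_disease * (1.0 - prior)
    return numerator / evidence


# Assumed numbers, for illustration only.
prior = 0.01                                        # prevalence of the disease
after_test = update(prior, 0.90, 0.05)              # positive test: sensitivity 0.90, false-positive rate 0.05
after_symptom = update(after_test, 0.80, 0.20)      # symptom present in 80% of cases, 20% of non-cases

print(round(after_test, 4), round(after_symptom, 4))
```

Each intermediate posterior is itself an explanation step: a clinician can see how much each finding shifted the belief in the diagnosis.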

Interpretable models can be used by themselves, but another interesting strategy is using them in ensemble models. Ensemble models consist of combining several different ML methods to achieve a better performance and better interpretability than with a black-box model alone [78]. These approaches can also include these interpretable models in conjunction with DL models such as neural networks [79,80,81,82], as well as other post hoc explainability techniques [83,84]. However, they pose increasingly complex, and as yet unresolved, interpretation issues, as recently emphasised by Pearl [85].

2.3. Interpretation of Neural Network Architectures

Although neural networks (including DL models) cannot be fully included in the category of intrinsically interpretable models, their architectures are designed to resemble some of the simple neural modelling of brain function and are used heuristically to recognise images or perform different tasks, and some neural network architectures have been specifically designed to provide interpretability. The first type tries to mimic human decision-making where the decision is based on previously seen examples. In reference [86], a prototype learning design is presented to provide the interpretable samples associated with the different types of respiratory sounds (normal, crackle, and wheeze). This technique learns a set of prototypes in a latent space that are used to make a prediction. Moreover, it also allows for a new sample to be compared with the set of prototypes, identifying the most similar and decoding it to its original input representation. The architecture is based on the work in reference [87] and it intrinsically provides an automatic process to extract the input characteristics that are related to the associated prototype given that input.

The main motivation of other methods is to behave in a way more similar to how clinicians diagnose, and to provide explanations in the form of relevant features. Among this type, attention maps are widely used. In short, they extract the influence of a feature on the output for a given sample. They are based on the gradients of the learned model and, in [88], have been used to provide visual MRI explanations of liver lesions. For small datasets, it is even possible to include some kind of medical knowledge as structural constraint rules over the attention maps during the process design [89]. Moreover, the attention maps can also be applied at different scales, concatenating feature maps, as proposed in reference [90], which makes it possible to identify small structures in retinal images.

These approaches are specific to DL but, still, surrogate models or post hoc methods are applicable to add explainability.

3. Post Hoc Explainability Methods

The extension of the above approach to transparency came with the development of more complex data-based ML methods, such as support vector machines (SVMs), tree ensembles, and, of course, DL techniques. The latter have become popular due to their impressive performance on a huge variety of tasks, sometimes even surpassing human accuracy for concrete applications, but they unfortunately also entail deeper opacity than, for instance, classic statistical models, which can provide detailed explanations.

For this reason, different explainability methods have been proposed in order to shed light on the inner workings or algorithmic implementations used in these black-box-like AI models. Because they are implemented as added facilities to these models, executed either over the results or the finished models, they are known as post hoc methods, which produce post hoc explanations, as opposed to the approach of intrinsically interpretable models.

Many of the approaches included in this category, which are also currently the most widely used, as reported in the literature, are model agnostic. Post hoc model agnostic methods are so popular due to their convenience: they are quick and easy to set up, flexible, and well-established. Within this category, there are also some model specific post hoc techniques designed to work only for a particular type of model.

These are less flexible, but tend to be faster and sometimes more accurate due to their specificity, as they can access the model internals and can produce different types of explanations that might be more suitable for some cases [30].

Regardless of their range of application, post hoc methods can also be grouped on the basis of their functionalities. Combining the taxonomies proposed in references [11] and [67], we can broadly differentiate between explanations through simplification (surrogate models), feature relevance methods, visualisation techniques, and example-based explanations. In the following sections, these ideas will be presented as well as some of the most popular and representative methods belonging to each group.

3.1. Explanation by Simplification

One way to explain a black-box model is to use a simpler, intrinsically interpretable model for the task of explaining its behaviour.

One method that uses this idea, and one of the most widely employed in the literature, is LIME (Local Interpretable Model-agnostic Explanations) [91]. This method builds a simple linear surrogate model to explain each of the predictions of the learned black-box model. The input whose prediction is to be explained is locally perturbed, creating a new dataset that is used to build the explainable surrogate model. Instance-level explanations can help to reinforce trust in AI-assisted clinical diagnosis within a patient diagnosis workflow [92].
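The perturb-and-fit idea behind a local linear surrogate can be sketched as follows. This is a simplified illustration under stated assumptions, not the LIME library or its exact algorithm (which also uses interpretable input representations and feature selection): the black box is a made-up function, perturbations are Gaussian, samples are weighted by an exponential proximity kernel, and a weighted least-squares line is fitted. All function names and parameter values are hypothetical.

```python
import math
import random


def black_box(x):
    """Stand-in for an opaque model: nonlinear in x[0], linear in x[1]."""
    return x[0] ** 2 + 3.0 * x[1]


def solve(a, b):
    """Solve a small linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for i in reversed(range(n)):
        w[i] = (m[i][n] - sum(m[i][j] * w[j] for j in range(i + 1, n))) / m[i][i]
    return w


def local_surrogate(model, x0, n_samples=500, scale=0.1, width=0.25, seed=0):
    """Fit a proximity-weighted linear model around x0; returns [intercept, coefs...]."""
    rng = random.Random(seed)
    d = len(x0)
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x0]      # perturbed sample
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x0))
        weight = math.exp(-dist2 / width ** 2)              # closer samples count more
        row = [1.0] + [zi - xi for zi, xi in zip(z, x0)]   # features centred on x0
        y = model(z)
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    return solve(xtwx, xtwy)


coefs = local_surrogate(black_box, [1.0, 2.0])
print([round(c, 2) for c in coefs])
```

Around the point (1, 2), the fitted slopes should be close to the local sensitivities of the stand-in model (roughly 2 and 3), which is the kind of per-prediction explanation a local surrogate provides.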

Knowledge distillation is another technique included in this category. It was developed to compress neural networks for efficiency purposes, but it can also be used to construct a global surrogate interpretable model [93]. It consists of using the more complex black-box model as a “teacher” for a simpler model that learns to mimic its output scores. If the “student” model demonstrates sufficient empirical performance, a domain expert may even prefer to use it in place of the teacher model and LIME. The main rationale behind this type of modelling is the assumption that some potential noise and error in the training data may affect the training efficacy of simple models. The authors of reference [94] used knowledge distillation to create an interpretable model, achieving a strong prediction performance for ICU outcome prediction.
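A minimal sketch of the teacher-student idea follows, assuming a made-up one-dimensional teacher and a decision stump as the interpretable student. The student is trained on the teacher's soft scores rather than on ground-truth labels, and fidelity (agreement with the teacher) is then measured. None of the names or values come from reference [93] or [94].

```python
import math


def teacher_score(x):
    """Stand-in for a complex black-box model that outputs a soft score in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-(10.0 * (x - 0.5) + math.sin(8.0 * x))))


def fit_stump(xs, targets):
    """Depth-1 regression tree: choose the split that minimises squared error."""
    pairs = sorted(zip(xs, targets))
    best = None
    for i in range(1, len(pairs)):
        left = [t for _, t in pairs[:i]]
        right = [t for _, t in pairs[i:]]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = sum((t - left_mean) ** 2 for t in left) + sum((t - right_mean) ** 2 for t in right)
        if best is None or sse < best[0]:
            threshold = (pairs[i - 1][0] + pairs[i][0]) / 2.0
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]


# The student learns from the teacher's soft scores, not from true labels.
xs = [i / 99 for i in range(100)]
soft_targets = [teacher_score(x) for x in xs]
threshold, left_value, right_value = fit_stump(xs, soft_targets)


def student_score(x):
    """Interpretable student: a single human-readable threshold rule."""
    return left_value if x < threshold else right_value


# Fidelity: how often the student reproduces the teacher's decision at 0.5.
fidelity = sum(
    (teacher_score(x) >= 0.5) == (student_score(x) >= 0.5) for x in xs
) / len(xs)
print(f"if x < {threshold:.3f}: score {left_value:.2f}, else {right_value:.2f}; fidelity {fidelity:.2f}")
```

The resulting student is a single rule that a domain expert can read, and the fidelity figure indicates how far that rule can be trusted as a description of the teacher.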

Under this category, we could also include techniques that attempt to simplify the models by extracting knowledge in a more comprehensible way. For example, rule extraction methods try to approximate the decision-making process of a black-box model, such as a neural network, with a set of rules or decision trees. Some of the methods try decomposing the units of the model to extract these rules [95], while others keep treating the original model as a black box and use the outcomes to perform a rule search [96]. There are also combinations of both approaches [97].

3.2. Explanation Using Feature Relevance Methods

In the category of feature relevance methods, we can find many popular examples of explainability techniques. These approaches try to find the variables or features that are most relevant to the model’s predictions, that is, those that most influence the outcome in each case or in general.

The ancestry of these techniques can be found in both statistical and heuristic approaches dating back to the 1930s with Principal Component Analysis (PCA), which explains the weightings of features, or contributions to relevance, in terms of their contribution to inter- and intra-population patterns of multinomial variance and covariance [98]. These techniques were also shown to be central to both dimensionality reduction and its explanation in terms of information content for pattern recognition [99] and clinical diagnostic classification and prediction using subspace methods from atomic logic [100]. Later, related techniques for feature extraction by projection pursuit were developed and applied to clinical decision-making.

More recently, together with LIME (which could also be included in this group), SHAP (SHapley Additive exPlanations) has become one of the most widely used model-agnostic XAI techniques, and it is the main example of the category of feature relevance methods. It is based on concepts from game theory that make it possible to compute which features contribute the most to the outcomes of the black-box model by trying different permutations of feature sets [101]. SHAP explanations increase trust by helping to test prior knowledge and can also help to gain insights into new knowledge [102].
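The game-theoretic idea can be sketched by computing exact Shapley values through enumeration of all feature orderings. This is only feasible for a handful of features and is not how the SHAP library works in practice (it relies on approximations); the model, the instance, and the all-zero baseline used to represent "absent" features are assumptions made for the example.

```python
from itertools import permutations


def model(x):
    """Stand-in black box with an additive term and an interaction term."""
    return 2.0 * x[0] + x[1] * x[2]


def shapley_values(predict, x, baseline):
    """Average marginal contribution of each feature over all feature orderings."""
    n = len(x)
    phi = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)          # start with every feature "absent"
        previous = predict(current)
        for i in order:
            current[i] = x[i]             # switch feature i to its actual value
            value = predict(current)
            phi[i] += value - previous    # marginal contribution in this ordering
            previous = value
    return [p / len(orderings) for p in phi]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print(phi)
```

The attributions sum to the difference between the prediction for the instance and the prediction for the baseline, and the interaction between the second and third features is split evenly between them.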

Other well-known similar examples that measure the importance of different parts of the input by trying different changes are SA (Sensitivity Analysis) [103] and LRP (Layer-Wise Relevance Propagation) [104]. Deep Taylor Decomposition (an evolution of LRP) [105] and DeepLIFT [106] are other model-specific alternatives for neural networks that propagate the activation of neurons with respect to the inputs to compute feature importance.

3.3. Explanation by Visualisation Techniques

Some of the aforementioned methods can produce visual explanations in some cases. Still, some other methods directly visualise the inner workings of the models, like Grad-CAM [107], which helps to show the activation of the layers of a convolutional neural network. In addition, there are other techniques that visualise the inputs and outputs of a model and the relationship between them, such as PDP (Partial Dependence Plots) [83] and ICE (Individual Conditional Expectation) plots [108]. It is worth mentioning that visualisation can help to build explainable interfaces to interact with users, but it is complex to use them as an automatic step of the general explainability process.
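The relationship between ICE and PDP can be shown with a small sketch: an ICE curve varies one feature for a single instance while keeping its other features fixed, and the partial dependence curve is the average of the ICE curves. The model and the data below are toy assumptions; plotting is omitted and only the curve values are computed.

```python
def model(x):
    """Stand-in black box with an interaction between the two features."""
    return x[0] * x[1]


def ice_curves(predict, data, feature, grid):
    """One curve per instance: predictions as `feature` sweeps over `grid`."""
    curves = []
    for row in data:
        curve = []
        for value in grid:
            modified = list(row)
            modified[feature] = value     # overwrite only the feature of interest
            curve.append(predict(modified))
        curves.append(curve)
    return curves


def partial_dependence(curves):
    """PDP: point-wise average of the ICE curves."""
    return [sum(column) / len(column) for column in zip(*curves)]


data = [[1, 1], [2, 3]]
grid = [0, 1, 2]
ice = ice_curves(model, data, feature=0, grid=grid)
pdp = partial_dependence(ice)
print(ice, pdp)
```

Here the two ICE curves have different slopes because of the interaction, a heterogeneity that the averaged PDP curve hides.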

3.4. Explanations by Examples

Finally, another approach to produce explanations is to provide examples of other similar cases that help in understanding why one instance has been classified as one object or structure or another by the model, or instead, dissimilar instances (counterfactuals) that might provide insights as to why not.

For instance, MMD-critic [109] is an unsupervised algorithm that finds prototypes (the most representative instances of a class) as well as criticisms (instances that belong to a class but are not well represented by the prototypes). Another example is counterfactual explanations [110], which describe the minimum conditions that would lead to a different prediction by the model.
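A counterfactual explanation can be sketched as a search for the smallest change to an instance that flips the model's prediction. The brute-force grid search, the L1 cost, and the toy classifier below are assumptions made for illustration; they are not the method of reference [110].

```python
from itertools import product


def predict(x):
    """Stand-in classifier: class 1 when a weighted score reaches a threshold."""
    return 1 if 2 * x[0] + x[1] >= 10 else 0


def counterfactual(x, deltas=range(-3, 4)):
    """Smallest (L1) integer change to x that changes the predicted class."""
    original = predict(x)
    best = None
    for change in product(deltas, repeat=len(x)):
        candidate = [xi + di for xi, di in zip(x, change)]
        cost = sum(abs(di) for di in change)
        if predict(candidate) != original and (best is None or cost < best[0]):
            best = (cost, candidate)
    return None if best is None else best[1]


instance = [3, 2]
nearest = counterfactual(instance)
print(predict(instance), nearest, predict(nearest))
```

The result reads as "had the first feature been 4 instead of 3, the prediction would have been different", which is the contrastive form of explanation described above.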

4. Evaluation of Explainability

Despite the growing body of literature on different XAI methods, and the rising interest in the topics of interpretability, explainability, and transparency, there is still limited research on the field of formal evaluations and measurements for these issues [111]. Most studies just employ XAI techniques without providing any kind of quantitative evaluation or appraisal of whether the produced explanations are appropriate.

Developing formal metrics and a more systematic evaluation of different methods can be difficult because of the variety of the available techniques and the lack of consensus on the definition of interpretability [112]. Moreover, contrary to usual performance metrics, there is no ground truth when evaluating explanations of a black-box model [27,112]. However, this is a foundational work of great importance, as such evaluation metrics would help not only to assess the quality of explanations and somehow measure whether the goal of interpretability is met, but also to compare techniques and help standardise the different approaches, making it easier to select the most appropriate method for each case [113].

In short, there is a need for more robust metrics, standards, and methodologies that help data scientists and engineers to integrate interpretability in medical AI applications in a more detailed, verified, consistent, and comparable way, along the whole methodology, design, and algorithmic development process [114]. Nevertheless, in the few studies available on this topic, there are some common aspects that establish a starting point for further development, and there are some metrics, such as the robustness, consistency, comprehensibility, and importance of explanations.

A good and useful explanation for an AI model is one that is in accordance with human intuition and easy to understand [115]. To evaluate this, some qualitative and quantitative intuitions have already been proposed.

- On the one hand, qualitative intuitions include notions about the cognitive form, complexity, and structure of the explanation. For example, what are the basic units that compose the explanation and how many are there (more units mean more complexity), how are they related (rules or hierarchies might be more interpretable for humans), if any uncertainty measure is provided or not, and so on [111].

- On the other hand, quantitative intuitions are easier to formally measure and include, for example, notions like identity (for identical instances, explanations should be the same), stability (instances from the same class should have comparable explanations) or separability (distinct instances should have distinct explanations) [115,116]. The metrics based on these intuitions mathematically measure the similarity between explanations and instances as well as the agreement between the explainer and the black-box model.
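The three quantitative intuitions can be turned into simple scores, as in the sketch below. These are simplified formalisations written for illustration and are not necessarily the definitions used in references [115,116]; in particular, stability is approximated here by checking whether the nearest explanation belongs to an instance of the same class. The explainer is a toy attribution function for an assumed linear model.

```python
import math
from itertools import combinations

WEIGHTS = (2.0, -1.0)  # assumed linear model, for illustration only


def explainer(x):
    """Toy feature-attribution explanation: weight times feature value."""
    return tuple(w * v for w, v in zip(WEIGHTS, x))


def identity(explain, instances):
    """Share of instances that get the same explanation when explained twice."""
    return sum(explain(x) == explain(x) for x in instances) / len(instances)


def separability(explain, instances):
    """Share of pairs of distinct instances that get distinct explanations."""
    pairs = [(a, b) for a, b in combinations(instances, 2) if a != b]
    return sum(explain(a) != explain(b) for a, b in pairs) / len(pairs)


def stability(explain, instances, labels):
    """Share of instances whose closest explanation comes from the same class."""
    explanations = [explain(x) for x in instances]
    hits = 0
    for i, e in enumerate(explanations):
        others = (k for k in range(len(explanations)) if k != i)
        nearest = min(others, key=lambda k: math.dist(e, explanations[k]))
        hits += labels[i] == labels[nearest]
    return hits / len(explanations)


instances = [(1.0, 0.0), (1.1, 0.0), (0.0, 1.0), (0.0, 1.2)]
labels = [0, 0, 1, 1]
scores = (
    identity(explainer, instances),
    separability(explainer, instances),
    stability(explainer, instances, labels),
)
print(scores)
```

A deterministic explainer trivially satisfies identity; sampling-based explainers may not, which is one reason such checks are informative.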

Other options to evaluate XAI techniques include factors such as the time needed to output an explanation or the ability to detect bias in the data [115].

Another interesting strategy is to quantify the overlap between human intuitions (such as expert annotations) and the explanations obtained [117,118], or to use human ratings by experts on the topic [113,119,120,121].
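One common way to quantify such an overlap for visual explanations is the intersection over union (IoU) between a thresholded saliency map and an expert-annotated mask. The sketch below assumes this particular metric and a threshold of 0.5; the cited studies may use other overlap measures, and the arrays are toy values.

```python
def intersection_over_union(saliency, expert_mask, threshold=0.5):
    """IoU between pixels the explanation highlights and pixels the expert marked."""
    highlighted = {
        (r, c)
        for r, row in enumerate(saliency)
        for c, value in enumerate(row)
        if value >= threshold
    }
    annotated = {
        (r, c)
        for r, row in enumerate(expert_mask)
        for c, value in enumerate(row)
        if value
    }
    union = highlighted | annotated
    if not union:
        return 1.0  # nothing highlighted and nothing annotated: perfect agreement
    return len(highlighted & annotated) / len(union)


saliency = [[0.9, 0.2], [0.7, 0.1]]   # toy explanation heat map
expert_mask = [[1, 0], [0, 1]]        # toy expert annotation
iou = intersection_over_union(saliency, expert_mask)
print(round(iou, 3))
```

In this toy case, one pixel is shared out of three pixels marked by either source, giving an overlap of one third.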

There are also different options regarding the context in which these various metrics can be used. The evaluation of an XAI system can be made either in the context of the final target task with the help of domain experts, in simpler tasks, or using formal definitions [111]. Depending on the specific characteristics of the problem and available resources, different approaches and metrics can be chosen.

This entry is adapted from the peer-reviewed paper 10.3390/app131910778