Your browser does not fully support modern features. Please upgrade for a smoother experience.

Please note this is an old version of this entry, which may differ significantly from the current revision.

Accurate identification of lesions and their use across different medical institutions are the foundation and key to the clinical application of automatic diabetic retinopathy (DR) detection. Existing detection or segmentation methods can achieve acceptable results in DR lesion identification, but they strongly rely on a large number of fine-grained annotations that are not easily accessible and suffer severe performance degradation in the cross-domain application.

- diabetic retinopathy identification

- multiple instance learning

- supervised learning

1. Introduction

Diabetic retinopathy (DR) is one of the most common complications of diabetes and one of the leading causes of visual impairment in the working-age population. Fortunately, timely diagnosis can prevent further deterioration of the lesions, thus reducing the risk of blindness. During the diagnosis of DR, the ophthalmologist completes the comprehensive diagnosis by identifying the lesion attributes on the fundus image, such as microaneurysm (MA), hemorrhage (HE), exudate (EX), cotton wool spots (CWS), neovascularization (NV), and intraretinal microvascular abnormalities (IRMA). However, due to the difficulty in identifying certain lesions, this process can be time-consuming and labor-intensive. Automatic DR-aided diagnosis methods use deep learning models to extract features from the fundus image to complete the location of the lesions, and the results can be provided to ophthalmologists for further diagnosis. At the same time, with the maturity of automatic DR-assisted diagnosis technology, the requirements for deep learning models in clinical applications are also increasing. For example, it is expected to have the ability to be used across medical institutions. In conclusion, cross-domain localization of DR lesions is becoming a concern of both academia and industry.

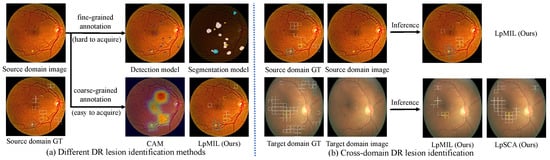

In recent years, with the development of deep learning, as shown in Figure 1a, several lesion identification models have been proposed to assist ophthalmologists in the diagnosis of DR. Models [1,2,3,4] trained with fine-grained annotations such as pixel-level annotations or bounding box annotations have been proposed and have achieved acceptable results in DR lesion identification. However, the application of these models is limited due to the time-consuming manual annotation. Therefore, some methods [5,6,7] attempt to accomplish both DR grading and lesion identification using only coarse-grained annotations such as grading labels or lesion attribute labels. However, due to the limited supervision provided by coarse-grained annotations, these methods tend to be biased on the most important lesion regions while ignoring trivial lesion information. In addition, in clinical applications, image quality and imaging performance vary due to the different image acquisition equipment used in different healthcare facilities, the direct application of models on other datasets will suffer huge performance losses (Figure 1b), which greatly limits the flexibility and scalability of these deep learning methods.

Figure 1. (a) Different DR lesion identification methods, including models trained with fine-grained annotations represented by detection models and segmentation models, and models trained with coarse-grained annotations represented by CAM and LpMIL. Using only coarse-grained annotations, our LpMIL not only achieves better lesion identification performance than CAM, but also achieves results that are competitive with detection and segmentation models. (b) Directly applying our LpMIL trained on the source domain to the target domain results in severe performance degradation, while our LpSCA improves cross-domain lesion identification performance through the semantic constrained adaptation method. “GT” denotes ground truth.

2. Related Work

2.1. Diabetic Retinopathy Lesion Identification

To complete automatic DR lesion identification, many DR lesion identification methods based on pixel-level or bounding box annotations have been proposed. Yang et al. [8] propose a two-stage Convolutional Neural Network (CNN) for DR grading and lesion detection, which uses the lesion detection results to assign different weights to the image patch to improve the performance of DR grading. Li et al. [9] adopt the object detection model to extract lesion features from fundus images for DR grading. Using a small number of pixel-level annotations, Foo et al. [10] propose a multi-task learning approach to simultaneously complete the tasks of DR grading and lesion segmentation. Zhou et al. [2] propose the FGADR dataset, on which DR lesion segmentation is performed. However, the application of these methods is limited due to the difficulty of obtaining fine-grained annotations. Therefore, researchers attempt to accomplish both DR grading and lesion identification using only coarse-grained grading labels. Wang et al. [5] utilize the attention map to highlight suspicious areas, and complete DR grading and lesion localization at the same time. Sun et al. [6] formulate lesion identification as a weakly supervised lesion localization problem through a transformer decoder, which jointly performs DR grading and lesion detection. Different from previous methods, we define DR lesion identification as a multi-label classification problem for patch-level lesion identification and use a cross-domain approach to enable the model to be used across healthcare institutions.

2.2. Multiple Instance Learning

Multiple instance learning has become a widely adopted weakly supervised learning method [11,12,13,14,15,16]. Many works have studied different pooling functions combined with instance embedding or instance prediction to accomplish bag-level prediction [17,18,19,20]. However, the pooling function itself cannot provide sufficient information, and supervision can only be retained at the bag level, which severely limits the effect of instance-level prediction. To address this problem, some approaches introduce artificial instance labels by specifying thresholds to provide both bag-level and instance-level supervision. Zhou et al. [18] use specified thresholds to directly assign positive or negative labels based on prediction scores, providing supervision for all instances. Morfi et al. [21] propose MMM loss for audio event detection. This loss function provides supervision for instances of extreme prediction scores according to the specified threshold and obtains bag level prediction through average pooling aggregation for bag level supervision. Seibold et al. [22] use a more customized way to create soft instance labels, flexibly providing supervision for all instances, and apply it to the pathological localization of chest radiographs pathologies.

To the best of our knowledge, these methods have not been applied to automatic DR detection.

2.3. Domain Adaptation

Domain adaptation is a subtask of transfer learning, which maps features of different domains to the same feature space, and utilizes the labels of the source domain to enhance the training of the target domain. The mainstream approach is to learn the domain invariant representation using adversarial training. DANN [23] pioneers this field by training a domain discriminator to distinguish the source domain from the target domain, and training a feature extractor to cheat the discriminator to align the features of the two domains. CDAN [24] utilizes the discrimination information predicted by the classifier to condition the adversarial model. GVB [25] improves adversarial training by building bridging layers between the generator and the discriminator. MetaAlign [26] treats domain alignment tasks and classification tasks as meta-training and meta-testing tasks in a meta-learning scheme for domain adaptation.

This entry is adapted from the peer-reviewed paper 10.3390/bioengineering10091100

This entry is offline, you can click here to edit this entry!