Inverse dynamics from motion capture is the most common technique for acquiring biomechanical kinetic data. However, this method is time-intensive, limited to a gait laboratory setting, and requires a large array of reflective markers to be attached to the body. A practical alternative must be developed to provide biomechanical information to high-bandwidth prosthesis control systems to enable predictive controllers. In this study, we applied deep learning to build dynamical system models capable of accurately estimating and predicting prosthetic ankle torque from inverse dynamics using only six input signals. We performed a hyperparameter optimization protocol that automatically selected the model architectures and learning parameters that resulted in the most accurate predictions. We show that the trained deep neural networks predict ankle torques one sample into the future with an average RMSE of 0.04 ± 0.02 Nm/kg, corresponding to 2.9 ± 1.6% of the ankle torque’s dynamic range. By comparison, a manually derived analytical regression model predicted ankle torques with an RMSE of 0.35 ± 0.53 Nm/kg, corresponding to 26.6 ± 40.9% of the ankle torque’s dynamic range. In addition, the deep neural networks predicted ankle torque values half a gait cycle into the future with an average decrease in performance of 1.7% of the ankle torque’s dynamic range when compared to the one-sample-ahead prediction. This application of deep learning provides an avenue towards the development of predictive control systems for powered limbs aimed at optimizing prosthetic ankle torque.
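The accuracy figures above are reported both as an RMSE in Nm/kg and as a percentage of the torque's dynamic range. The following is a minimal sketch of how such a normalized error could be computed. It assumes that "dynamic range" means the maximum minus the minimum of the measured torque, which the text does not define explicitly, and the sample values are made up for illustration rather than taken from the study.

```python
import math


def rmse(predicted, measured):
    """Root-mean-square error between two equal-length sequences."""
    if len(predicted) != len(measured) or not measured:
        raise ValueError("sequences must be non-empty and of equal length")
    squared = [(p - m) ** 2 for p, m in zip(predicted, measured)]
    return math.sqrt(sum(squared) / len(squared))


def rmse_percent_of_range(predicted, measured):
    """RMSE as a percentage of the measured signal's dynamic range.

    Assumption: dynamic range = max(measured) - min(measured).
    """
    dynamic_range = max(measured) - min(measured)
    if dynamic_range == 0:
        raise ValueError("measured signal has zero dynamic range")
    return 100.0 * rmse(predicted, measured) / dynamic_range


# Made-up torque samples in Nm/kg (illustrative only, not data from the study).
measured = [0.0, 0.2, 0.6, 1.2, 1.4, 0.8, 0.1]
predicted = [0.05, 0.15, 0.65, 1.15, 1.45, 0.75, 0.15]

error = rmse(predicted, measured)
percent = rmse_percent_of_range(predicted, measured)
print(f"RMSE = {error:.3f} Nm/kg ({percent:.1f}% of dynamic range)")
```

Normalizing by the dynamic range makes errors comparable across users whose peak torques differ.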

Introduction

Use of a practical model that maps from control commands and easily observed states to a controllable future state of a system, such as the torque about a powered ankle–foot prosthesis (PAFP), has the potential to improve the mobility of individuals with lower limb loss [1]. By simulating PAFP dynamics over a period of time for different control commands, a model can provide information to search for a sequence of control commands that achieve a desired prosthesis behavior, such as time-varying impedance parameters or terrain-specific angle-torque profiles. Real-time model predictive control (MPC) has been proposed to control assistive robotic devices during physical interaction with humans, allowing the robot to adapt its behavior [2,3]. Integrating MPC with real-time feedback from sensors into PAFP control schemes may enable the prosthesis to better adapt to the user’s movements, allowing for more natural and intuitive interactions between the user, device, and environment. A key benefit of this approach is the optimization of robot behavior over a finite time horizon by finding the optimal control inputs that minimize a cost function. This allows a controller to achieve a desired task, such as tracking a desired angle-torque profile while rejecting disturbances and minimizing energy consumption. Additionally, MPC can be designed to ensure the robot operates within the constraints of the system, such as joint limits and actuator saturation. For prosthetic applications, such constraints can be essential for safe and robust operation.

In terms of modeling PAFPs, control commands (e.g., motor current) can be mapped to motor torque for some devices including series elastic actuators. For parallel elastic actuators, the passive dynamics play a critical role in the total ankle torque (i.e., the total prosthetic ankle torque is the summation of the active and passive components). In addition to the passive elements of the prosthesis, there are other important factors, including variations in limb loading, shoe/keel mechanics, the dynamics of the other limbs and joints, and environmental conditions that can influence prosthetic ankle torque. Furthermore, even under steady-state walking conditions, stride-to-stride variations are inevitable, and it is important for prosthesis controllers to compensate for this variability [4].
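To make the active-plus-passive summation concrete, here is a small sketch of a parallel elastic actuator's total torque. It is an idealized illustration, not the analytical model used in the study: the lossless transmission, the linear spring, and every parameter name and value below are assumptions introduced for the example.

```python
def active_torque(motor_current_a, torque_constant_nm_per_a, gear_ratio):
    """Actuator torque, assuming an ideal lossless transmission (assumption)."""
    return motor_current_a * torque_constant_nm_per_a * gear_ratio


def passive_torque(ankle_angle_rad, spring_stiffness_nm_per_rad, neutral_angle_rad=0.0):
    """Torque of a linear parallel spring about its neutral angle (assumption)."""
    return spring_stiffness_nm_per_rad * (ankle_angle_rad - neutral_angle_rad)


def total_ankle_torque(motor_current_a, ankle_angle_rad,
                       torque_constant_nm_per_a=0.05,
                       gear_ratio=100.0,
                       spring_stiffness_nm_per_rad=300.0):
    """Total torque = active component + passive component.

    All default parameter values are hypothetical placeholders.
    """
    active = active_torque(motor_current_a, torque_constant_nm_per_a, gear_ratio)
    passive = passive_torque(ankle_angle_rad, spring_stiffness_nm_per_rad)
    return active + passive


# The same motor command produces different total torques at different ankle angles.
torque_small_angle = total_ankle_torque(motor_current_a=2.0, ankle_angle_rad=0.05)
torque_large_angle = total_ankle_torque(motor_current_a=2.0, ankle_angle_rad=0.10)
print(torque_small_angle, torque_large_angle)
```

Because the passive term depends on the user's ankle angle, the motor command alone does not determine the total torque, which is why the kinematic pattern adopted by the user matters for prediction.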

Developing a method to predict prosthetic torques could better allow a PAFP controller (e.g., model predictive controller) to adapt to these factors that are unaccounted for in modern prosthetic control strategies [5]. This paper investigates different predictive model architectures with the goal of accurately predicting ankle torque over both short (one sample period or 33 ms) and long (twenty sample periods or approximately half a gait cycle) time periods. The results demonstrate the capability of high-fidelity predictive models and how they could be used in future prosthetic control systems.
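The two prediction horizons can be illustrated by how training pairs might be cut from a recorded time series. This sketch assumes a sliding window of past samples as model input; the window length, the function name, and the synthetic data are hypothetical and not taken from the study. Only the 33 ms sample period and the horizons of 1 and 20 samples come from the text.

```python
SAMPLE_PERIOD_S = 0.033  # 33 ms sample period stated in the text


def make_supervised_pairs(features, torque, window, horizon):
    """Pair each window of past feature rows with the torque `horizon` samples ahead.

    features: list of per-sample feature vectors (one row per time step)
    torque:   list of torque values aligned with `features`
    window:   number of past samples fed to the model (hypothetical choice)
    horizon:  number of samples between the last input and the target
    """
    if len(features) != len(torque):
        raise ValueError("features and torque must have the same length")
    pairs = []
    last_start = len(features) - window - horizon
    for start in range(last_start + 1):
        end = start + window                 # index just past the last input sample
        target_index = end - 1 + horizon     # `horizon` samples after the last input
        pairs.append((features[start:end], torque[target_index]))
    return pairs


# Synthetic stand-in data: 30 time steps, six input signals per step.
features = [[float(t + i) for i in range(6)] for t in range(30)]
torque = [0.1 * t for t in range(30)]

short_pairs = make_supervised_pairs(features, torque, window=5, horizon=1)
long_pairs = make_supervised_pairs(features, torque, window=5, horizon=20)

print(len(short_pairs), "one-sample-ahead pairs")
print(len(long_pairs), "twenty-sample-ahead pairs,",
      f"{20 * SAMPLE_PERIOD_S:.2f} s ahead")
```

A longer horizon leaves fewer usable pairs per recording, since the last samples of a trial have no target far enough in the future.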

The Role of System Models for Robotic Controllers and the Need for Better Modeling Approaches

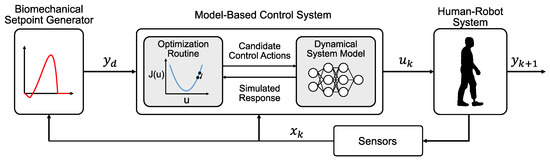

A dynamical model of a robot prosthesis system would enable the use of model-based control methods, which use forward predictions to adjust control commands that drive the system toward a desired behavior (Figure 1). Model-based controllers that provide a distribution of future states (e.g., prosthetic ankle torque profiles) and their costs (e.g., the error between desired ankle torque profile and the predicted torque profile) based on candidate control commands are, when presented with limited environmental interactions, typically more sample-efficient and converge (i.e., minimize cost) more quickly than model-free techniques [6,7]. This is because a model-based controller is able to leverage the system model to focus the control command search space [8]. Model-free control strategies require more time to minimize cost (e.g., require more walking trials to train the controller and achieve a desired torque profile). In some cases, a model-based control law can be used as an initialization for a model-free learner (e.g., [9]). The model-free learner can then fine-tune the control law to overcome any model uncertainties. Despite their promise, model-based control systems have not been implemented in robotic prosthesis control because modeling human-prosthesis dynamical behavior remains a challenge.

Figure 1. Block diagram of the architecture of a generic model-based prosthesis control system. A trajectory generator outputs a desired ankle torque $y_d$, which is then fed into an optimizer. The optimizer samples different possible control commands $u_k$ and conducts forward simulations based on system model predictions. The optimizer uses the results to determine which control command would achieve the closest one-sample ahead ankle torque response $y_{k+1}$ to the desired behavior. This control command is then sent to the human–robot system and the measurements of the system $x_k$ (e.g., loading and motion information from wearable sensors) are fed back to the optimizer for the next control sample.
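The optimizer step described in the Figure 1 caption can be sketched in a few lines. This is a minimal illustration under stated assumptions: `toy_system_model` is a hypothetical linear stand-in for a trained predictive model, the two-element state is invented for the example, and an evenly spaced candidate set takes the place of whatever sampling scheme a real optimizer would use.

```python
def toy_system_model(state, command):
    """Hypothetical stand-in for a learned model: predicts the next-sample torque.

    state:   (ankle_angle, load) measurements at sample k (invented features)
    command: candidate control command at sample k
    """
    ankle_angle, load = state
    return 0.8 * load + 2.0 * ankle_angle + 0.5 * command


def choose_command(model, state, desired_torque, candidates):
    """Return the candidate whose predicted torque is closest to the desired torque."""
    def cost(command):
        predicted = model(state, command)      # forward simulation, one sample ahead
        return (predicted - desired_torque) ** 2
    return min(candidates, key=cost)


# Candidate control commands: an evenly spaced set from -2.0 to 2.0.
candidates = [i * 0.1 for i in range(-20, 21)]

state_k = (0.1, 1.0)       # measurements fed back from the human-robot system
desired_torque = 1.5       # output of the trajectory generator

best_command = choose_command(toy_system_model, state_k, desired_torque, candidates)
predicted_torque = toy_system_model(state_k, best_command)
print(best_command, predicted_torque)
```

In a running controller this selection would repeat at every control sample with fresh measurements. A longer horizon would sum the cost over a sequence of predicted samples instead of a single one.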

Deep neural networks (DNNs) provide a promising modeling strategy for model-based control of prosthetic ankles and are state of the art in other challenging learning tasks, such as natural language processing [10] and computer vision [11], which also involve high-dimensional nonlinear relationships. Once a DNN is sufficiently trained to model the human–robot system, it can be deployed to a real-time prosthesis control system and map system state measurements to an output prediction (e.g., ankle torque response), all without explicit knowledge of the system’s physics.

The capability of DNNs to output long-term predictions presents another key advantage in the context of prosthetic control. In certain cases, modeling approaches based on physics or first principles can provide insight toward system input–output relationships. However, it is often challenging and time-consuming to characterize system behaviors using first principles for systems with nonstationary dynamics that are highly nonlinear and vary over time (e.g., a human-prosthesis system). When mathematical process models are not known, DNN architectures have demonstrated an ability to accurately predict long-term behaviors including robotic trajectory [12] and human behavior prediction [13]. If high-fidelity DNN models that predict human-prosthetic dynamics can be developed, then it would be possible to proactively adjust prosthesis control actions to achieve a desired long-term trajectory or prepare for gait transitions.

Current Data-Driven Approaches in Prosthetic Control

Most data-driven biomechatronics research up to this point has been used to build models that relate environmental, system, and user data to intent recognition [14,15,16] or gait phase estimation [17] rather than to build predictive regression models of human–robot dynamical systems (e.g., estimating joint kinetics that cannot be observed instantaneously). A predictive model that outputs these dynamics directly can inform controllers and improve their performance (e.g., adapt the control inputs to achieve a desired ankle torque response). In addition, a model-based prosthesis controller can utilize joint dynamics information even outside the laboratory where motion capture (MoCap) is not available and inverse dynamics computations are more challenging.

Some early studies used neural networks and electromyography signal inputs to predict ankle dynamics; however, their predictions were noisy and less accurate when compared to a muscle model [18,19]. One explanation is that their shallow neural network architecture did not take the dynamic spatial–temporal relationships of human–robot systems into account. More recently, studies have successfully used more advanced neural network classes such as recurrent neural networks (RNNs) [20] and attention-based long short-term memory networks (LSTMs) [21,22] to generate reference trajectories for prosthesis controllers based on able-bodied data. Others have used wearable sensors and machine learning models to predict joint moments for exoskeleton control, also using able-bodied data [23]. However, deep learning models have not been used to predict prosthesis joint dynamics directly for individuals with transtibial amputation.

Deep Learning Modeling Approach

The field of wearable robotics is increasingly incorporating deep learning to improve control systems and user adaptability [24]. Recent studies have applied deep learning for intuitive control of powered knee–ankle prostheses [25], estimating joint moments and ground reaction forces in various walking conditions [26], and predicting joint moments in real time for exoskeleton controllers [27,28]. This signifies a growing trend towards more responsive and adaptive assistive technologies, yet gaps remain in understanding the potential and limitations of deep learning methods for PAFP control.

The development of models that can predict ankle mechanics is a key milestone in implementing a model-based control strategy for prosthetic devices, and this paper investigates different model architectures for predicting prosthetic ankle torques. The models are trained and evaluated based on how well they can predict prosthetic ankle torque values from previously collected data. These methods use only a small number of model input features and do not rely on a full-body MoCap suit or a large array of electromyography sensors. Experimental data in the form of total prosthetic ankle torques from optical MoCap (target outputs) and system state data (predictor inputs) from optical MoCap, force plate measurements, and on-board sensors are used to train the predictive models.

The output of the models was chosen to be the forward predictions of the prosthetic ankle torque. These output targets were computed using the full-body MoCap marker set (Vicon) with inverse dynamics principles to derive the total torque value at the ankle based on the movements of all anatomical structures that affect the joint. The prosthetic ankle torque was chosen as the time series of interest because many robotic prosthesis control methods aim to achieve a desired ankle torque or deploy a mathematical formula based on ankle impedance (i.e., prescribing an assistive ankle torque based on changes in ankle position).

Three DNN architectures were developed and trained: (i) a simple multilayer feedforward network (FFN), which passes information in one direction [28]; (ii) a gated recurrent unit network (GRU), which includes feedback connections and gates used to keep track of long-term dependencies in the input sequences [29]; and (iii) a dual-stage attention-based gated recurrent unit network (DA-GRU), which shares the capabilities of the GRU and adds attention mechanisms that assign weights to the different elements of the input sequence based on their relevance to learning the given task [30]. Each DNN was trained to predict ankle torques from inverse dynamics a short time period (one sample) into the future. The one-sample-ahead prediction performances of the DNNs and an analytical model of the PAFP system, which was derived based on first principles, are then directly compared to PAFP torque computed from MoCap and inverse dynamics. Additionally, we assess the long-term predictive capabilities of neural networks that could realistically be applied to prosthetic control. Each DNN was trained to predict twenty samples, approximately half a gait cycle, into the future to compare each DNN’s ability to predict prosthetic ankle torques. Half a gait cycle was chosen, as it could enable a prosthesis controller to proactively respond to stride-to-stride variations or gait transitions.
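To show what the gates in a GRU do, the sketch below implements a single GRU update in plain Python and runs it over a short synthetic input sequence. It is illustrative only and is not the study's implementation: the weights are random and untrained, the hidden size and the linear readout are hypothetical, and the update uses one common convention (new state = (1 - z) * old state + z * candidate), whereas some libraries swap the roles of z and 1 - z. The six inputs mirror the six input signals mentioned in the abstract.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vector):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def affine(W, U, b, x, h):
    """Compute W x + U h + b element by element."""
    return [wx + uh + bi for wx, uh, bi in zip(matvec(W, x), matvec(U, h), b)]


def gru_step(x, h, p):
    """One GRU update: the gates decide how much of the old state to keep."""
    z = [sigmoid(a) for a in affine(p["Wz"], p["Uz"], p["bz"], x, h)]  # update gate
    r = [sigmoid(a) for a in affine(p["Wr"], p["Ur"], p["br"], x, h)]  # reset gate
    gated_h = [ri * hi for ri, hi in zip(r, h)]
    candidate = [math.tanh(a) for a in affine(p["Wh"], p["Uh"], p["bh"], x, gated_h)]
    return [(1.0 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, candidate)]


def random_matrix(rows, cols, rng, scale=0.1):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
N_INPUTS, N_HIDDEN = 6, 4   # six input signals; hidden size is a hypothetical choice

params = {}
for gate in "zrh":
    params["W" + gate] = random_matrix(N_HIDDEN, N_INPUTS, rng)
    params["U" + gate] = random_matrix(N_HIDDEN, N_HIDDEN, rng)
    params["b" + gate] = [0.0] * N_HIDDEN
readout = [rng.uniform(-0.1, 0.1) for _ in range(N_HIDDEN)]

# Synthetic input sequence: 10 time steps of six signals each.
sequence = [[math.sin(0.3 * t + i) for i in range(N_INPUTS)] for t in range(10)]

h = [0.0] * N_HIDDEN
for x in sequence:
    h = gru_step(x, h, params)

# Linear readout of the final hidden state (untrained, so the value is meaningless).
predicted_torque = sum(w * hi for w, hi in zip(readout, h))
print(predicted_torque)
```

An FFN would map a fixed input vector to the output without the recurrent state `h`, and a DA-GRU would additionally weight the input signals and the hidden states over time with learned attention scores before the readout.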

In summary, while most prosthetic applications have yet to incorporate predictive models, advanced feedback control systems stand to benefit significantly from them, especially for managing the complex human–robot interactions in prosthetic ankle–foot devices. One of the primary challenges in predicting prosthetic ankle torque stems from the fact that it is influenced by both the control action of the device and the variable kinematic pattern adopted by the user when using devices that incorporate parallel elastic elements. Traditional physics-based models struggle to account for these complexities, making them less effective for real-time control. The main contribution of this paper is the development and comparison of deep learning models that are specifically designed to predict this shared human–robot state, thus paving the way for more adaptive and intuitive prosthetic control systems in the future.

This entry is adapted from the peer-reviewed paper 10.3390/s23187712