

Spiking neural networks, often employed to bridge the gap between the machine learning and neuroscience fields, are considered a promising solution for resource-constrained applications. Because deploying spiking neural networks on traditional von Neumann architectures requires significant processing time and high power, neuromorphic hardware is typically created to execute them. The objective of neuromorphic devices is to mimic the distinctive functionalities of the human brain in terms of energy efficiency, computational power, and robust learning.

- neuromorphic computing

- artificial neural network

- natural language processing

1. Introduction

In recent years, the Artificial Neural Networks (ANNs) domain has witnessed a significant adoption of Deep Neural Networks (DNNs) across several fields, such as machine learning, computer vision, artificial intelligence, and natural language processing (NLP). DNNs are capable of accurately performing a wide range of tasks by training on massive datasets [1]. However, the energy consumption and computational cost of training on large datasets and of deploying the resulting applications have been treated as less important and have therefore been overlooked [2,3]. DNNs typically consume high power and require large data storage [4,5]. Despite significant advancements, ANNs have been unable to achieve the same level of energy efficiency and online learning ability as biological neural networks [6]. Drawing on brain-inspired computing, one potential solution to the issue of high power consumption is to use neuromorphic hardware with Spiking Neural Networks (SNNs). SNNs, often considered the third generation of neural networks, are emerging to bridge the gap between fields such as machine learning and neuroscience [7].

Unlike traditional neural networks, which rely on continuous-valued signals, SNNs work in continuous time [8]. In SNNs, the neurons communicate with each other using discrete electrical signals called spikes. Spikes model the behavior of neurons more accurately and in a more biologically plausible way than ANNs do, which makes SNNs more energy efficient and computationally powerful than ANNs [9]. The neuron models of ANNs and SNNs also differ. For instance, ANNs do not have any memory and use sigmoid, tanh, or rectified linear unit (ReLU) as computational units, whereas SNNs have memory and use non-differentiable neuron models. Typically, large-scale SNN models consume high power and require long execution times when executed on classical von Neumann architectures [10]. Hence, there is a need for high-speed and low-power hardware for executing large-scale SNN models. In this regard, existing neuromorphic platforms, such as SpiNNaker [11], Loihi [12], NeuroGrid [13], and IBM's TrueNorth [14], are expected to advance the applicability of large-scale SNNs in several emerging fields by offering energy-efficient, high-speed computational solutions. SNNs have functional similarities to biological neural networks, allowing them to embrace the sparsity and temporal coding found in biological systems [15]. However, SNNs are difficult to train because of their non-differentiable neuron models. In terms of speed performance, SNNs are inferior to DNNs. Nevertheless, due to their low-power traits, SNNs are considered more efficient than DNNs [6].

2. Neuromorphic Sentiment Analysis Using Spiking Neural Networks

2.1. Spiking Neural Networks (SNNs)

As stated in [16], SNNs are considered the third generation of neural networks; they communicate through sequences of discrete electrical events called “spikes”, each of which takes place at a point in time. SNN models are generally expressed in the form of differential equations [17]. The structure of a spiking neuron in an SNN model is similar to that of an ANN neuron; however, its behavior is different. SNNs are widely used in various applications, including brain–machine interfaces, event detection, forecasting, and decision making [18,19].
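To make the differential-equation view concrete, the following is a minimal sketch of a leaky integrate-and-fire (LIF) neuron integrated with the forward Euler method. The model choice, the function name `simulate_lif`, and all parameter values are illustrative assumptions rather than details taken from the cited works.

```python
def simulate_lif(input_current, dt=1.0, tau_m=10.0, v_rest=0.0,
                 v_thresh=1.0, v_reset=0.0, resistance=1.0):
    """Simulate one LIF neuron (all parameter values are illustrative).

    Integrates dV/dt = (-(V - v_rest) + R * I) / tau_m with forward Euler
    and emits a spike whenever V reaches v_thresh, then resets V.
    """
    v = v_rest
    spike_times = []
    trace = []
    for step, current in enumerate(input_current):
        # Leaky integration: the membrane potential carries state (memory)
        # from one time step to the next.
        v += dt * (-(v - v_rest) + resistance * current) / tau_m
        if v >= v_thresh:
            # Discrete event: record the spike time and reset the membrane.
            spike_times.append(step * dt)
            v = v_reset
        trace.append(v)
    return spike_times, trace


if __name__ == "__main__":
    strong, _ = simulate_lif([1.5] * 100)  # supra-threshold drive
    weak, _ = simulate_lif([0.5] * 100)    # sub-threshold drive
    print("spikes with strong input:", len(strong))
    print("spikes with weak input:", len(weak))
```

With a constant input above threshold the neuron fires periodically, while a sub-threshold input only charges the membrane and never produces a spike. The hard threshold is what makes the model non-differentiable.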

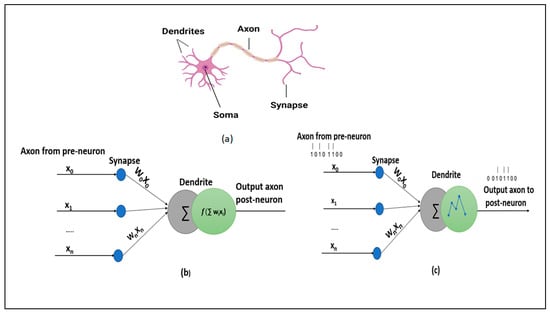

Spiking neuron models are distinguished by their biological plausibility and computational capabilities [20,21,22]. Typically, a spiking neuron model is selected based on specific user requirements. Figure 1 illustrates the schematics of a biological neural network, an ANN, and an SNN [17].

Figure 1. Schematic representations of (a) biological neural network, (b) artificial neural network, and (c) spiking neural network.

2.2. ANN to SNN Conversion

ANNs are used extensively for solving tasks in various fields, such as machine learning and artificial intelligence. Deep learning, in particular, develops large neural networks with millions of neurons that span up to thousands of layers. These large neural networks have proven effective at solving several complex tasks, including video classification and object detection and recognition; however, they require massive computational resources [32,33,34,35]. SNNs are developed mainly to address this challenge. SNNs perform similar tasks with fewer computational resources and lower energy consumption. In SNNs, all computations are event-driven and operations are sparse: computations and operations are performed only when there is a significant change in the input. Training a large SNN is typically difficult; thus, an alternative approach is to take a pre-trained ANN and convert it into an SNN [1]. Existing ANN-to-SNN conversion methods in the literature primarily focus on converting ReLU neurons to integrate-and-fire (IF) neurons.

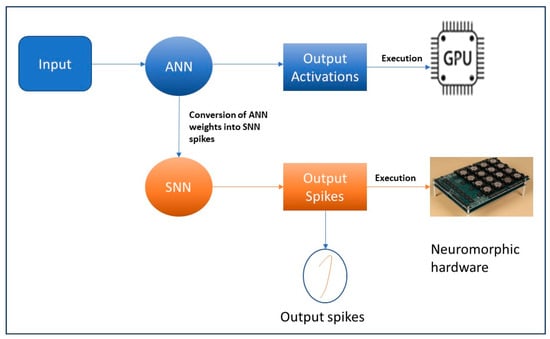

An overview of ANN-to-SNN conversion is illustrated in Figure 2. The conversion transfers the trained parameters of an ANN that uses ReLU activations to an SNN with an identical structure. This approach enables the SNN to achieve exceptional performance while requiring minimal computational resources. Initially, the ANN model is trained with the given inputs, and the weights are saved. Trained ANN models are typically executed on GPUs, as illustrated by the top modules in blue in Figure 2.

Figure 2. Overview of the ANN-to-SNN conversion.
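The intuition behind replacing ReLU units with IF neurons can be sketched with rate coding: when an IF neuron is driven by a constant input, its firing rate approximates the ReLU of that input. The sketch below assumes a threshold of 1.0 and a "reset by subtraction" rule. Both are illustrative choices, and the names `relu` and `if_neuron_rate` are hypothetical rather than part of any conversion toolkit.

```python
def relu(x):
    """Standard rectified linear unit."""
    return max(0.0, x)


def if_neuron_rate(input_value, timesteps=1000, threshold=1.0):
    """Firing rate (spikes per time step) of an IF neuron under constant input.

    Assumption: after a spike the threshold is subtracted from the membrane
    potential ("reset by subtraction") instead of resetting it to zero.
    """
    membrane = 0.0
    spikes = 0
    for _ in range(timesteps):
        membrane += input_value      # integrate the input; there is no leak
        if membrane >= threshold:    # fire once the threshold is reached
            spikes += 1
            membrane -= threshold
    return spikes / timesteps


if __name__ == "__main__":
    for x in (-0.5, 0.0, 0.25, 0.7):
        print(f"input={x:+.2f}  relu={relu(x):.3f}  "
              f"IF rate={if_neuron_rate(x):.3f}")
```

Negative inputs never reach the threshold, so the rate is zero, mirroring the flat part of ReLU. Inputs above the threshold saturate at one spike per time step in this sketch, which is one reason practical conversion schemes typically rescale weights or thresholds.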

2.3. Neuromorphic Hardware

Neuromorphic hardware for SNNs is categorized into analog, digital, and mixed-signal (analog/digital) designs [37]. Many neuromorphic hardware platforms with varying configurations have emerged to manage large-scale neural networks. Among these platforms, fully digital and mixed-signal hardware, such as IBM TrueNorth, NeuroGrid, BrainScaleS, Loihi, and SpiNNaker, is commonly used across several applications [38]. A detailed description of the neuromorphic hardware platforms can be found in [38].

Table 3 presents various characteristics of existing neuromorphic hardware platforms.

Table 3. Characteristics of existing neuromorphic hardware platforms.

| Platform | Technology (nm) | Electronics | Chip Area (mm²) | Neuron Model | On-Chip Learning | Neuron Number (Chip) | Synapse Model | Synapse Number (Chip) | Online Learning | Power |
|---|---|---|---|---|---|---|---|---|---|---|
| TrueNorth [9] | ASIC-CMOS 28 | Digital | 430 | LIF | No | 1 million | Binary 4 modulators | 256 M | No | 65 mW (per chip) |
| BrainScaleS [9] | ASIC-CMOS 180 | Analog/Digital | 50 | Adaptive exponential IF | No | 512 | Spiking 4-bit digital | 100 K | Yes | 2 kW per module (peak) |
| NeuroGrid [9] | ASIC-CMOS 180 | Analog/Digital | 168 | Adaptive quadratic IF | No | 65,000 | Shared dendrite | 100 M | Yes | 2.7 W |
| Loihi [9] | ASIC-CMOS 14 | Digital | 60 | LIF | Yes (with plasticity rule) | 131,000 | N/A | 126 M | Yes | 0.45 W |
| SpiNNaker [9] | ASIC-CMOS 130 | Digital | 102 | LIF, IZH, HH | Yes (synaptic plasticity rule) | 16,000 | Programmable | 16 M | Yes | 1 W (per chip) |

2.4. SpiNNaker

SpiNNaker was designed by the Advanced Processor Technologies (APT) Research Group in the Department of Computer Science at the University of Manchester [39]. It is composed of 57,600 processing nodes, each with 18 ARM9 processors (specifically ARM968) and 128 MB of mobile DDR SDRAM, totaling 1,036,800 cores and over 7 TB of RAM [40,41]. SpiNNaker is an architecture designed to simulate large-scale SNNs. The main component of the SpiNNaker system is the SpiNNaker chip, whose main focus is to provide the scalability and flexibility required to perform experiments with neuron models. Based on brain-inspired computing, the objective of the SpiNNaker system is to model the neural architecture of the human brain, which is made up of approximately 100 billion neurons connected by trillions of synapses [39]. The SpiNNaker machine is a collection of low-power processors, each of which can simulate/execute a small number of neurons and synapses in real time. All the processors are interconnected by a high-speed network [42], which allows them to communicate with each other while distributing the computation load of simulating a large neural network. The main advantage of the SpiNNaker system is its ability to simulate large-scale neural networks using an asynchronous communication scheme [40,43], which is essential for testing brain functions and developing new neural network applications in areas such as robotics, machine learning, and artificial intelligence. The SpiNNaker system is a notable development in the field of neural networks, with the potential to greatly advance the understanding of the brain and of how it processes information [44,45].
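The machine-level totals quoted above follow directly from the per-node figures. The short check below reproduces them; the constant names are illustrative, and the choice between decimal (TB) and binary (TiB) units is an assumption, since the text does not say which is meant.

```python
# Per-node figures quoted in the text.
NODES = 57_600
CORES_PER_NODE = 18
SDRAM_PER_NODE_MB = 128

total_cores = NODES * CORES_PER_NODE
total_sdram_mb = NODES * SDRAM_PER_NODE_MB

# The text does not state the unit convention, so both are shown.
total_sdram_tb = total_sdram_mb / 1_000_000   # decimal: 1 TB = 10^6 MB
total_sdram_tib = total_sdram_mb / 1024 ** 2  # binary: 1 TiB = 2^20 MiB

print(total_cores)                # 1036800
print(round(total_sdram_tb, 2))   # 7.37
print(round(total_sdram_tib, 2))  # 7.03
```

Under either convention the aggregate memory exceeds 7 TB, which is consistent with the stated figure.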

2.4.1. Architecture of SpiNNaker Chip

As stated in [45], the SpiNNaker chip has 18 cores coupled with an external RAM controller and a Network-on-Chip (NoC). Each core comprises an ARM968 processor, a direct memory access controller, a communications controller, a network interface controller, and other peripherals, including a timer [45]. Every core in the SpiNNaker chip runs at 200 MHz and executes the given application by simulating a group of neurons. Each core also has 96 kB of tightly coupled memory (TCM). To avoid contention, this TCM is split into two parts: 64 kB for data (DTCM) and 32 kB for instructions (ITCM). The DTCM holds application data, including zero-initialized data, the heap, the stack, and read/write data. Each chip in the SpiNNaker system has 128 MB of shared memory (SDRAM), which is directly accessible by all cores on the chip [45]. Access times vary significantly between these memories. Hence, the following should be considered when designing applications for the SpiNNaker system.

- Access to the DTCM is fast, at ≈5 ns/word, but the DTCM is accessible only to its local core.

- Access to the SDRAM goes through a bridge and is slow, at >100 ns/word. It can also lead to contention, since more than one core in the SpiNNaker chip may attempt to access the SDRAM at the same time.

- As a result, each core includes a direct memory access (DMA) controller, which enables efficient bulk transfer of data from the SDRAM to the DTCM. Although setting up a DMA transfer introduces a fixed overhead, the data are then transferred independently of the processor at ≈10 ns/word.
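The trade-off between direct SDRAM access and DMA can be estimated with a simple cost model built from the per-word figures above. The text does not give the fixed DMA setup overhead, so the value used in the example (900 ns) is purely hypothetical, and the direct SDRAM cost is taken at its quoted lower bound of 100 ns/word. All function names are illustrative.

```python
import math

# Per-word access costs quoted in the text, in nanoseconds.
DTCM_NS_PER_WORD = 5.0            # local tightly coupled data memory
SDRAM_DIRECT_NS_PER_WORD = 100.0  # lower bound: the text states ">100 ns/word"
DMA_NS_PER_WORD = 10.0            # bulk transfer from SDRAM to DTCM


def direct_sdram_time_ns(words):
    """Time for a core to read `words` words directly from SDRAM."""
    return words * SDRAM_DIRECT_NS_PER_WORD


def dma_time_ns(words, setup_overhead_ns):
    """Time for a DMA transfer: fixed setup overhead plus a per-word cost."""
    return setup_overhead_ns + words * DMA_NS_PER_WORD


def break_even_words(setup_overhead_ns):
    """Smallest transfer size at which DMA is no slower than direct access."""
    saving_per_word = SDRAM_DIRECT_NS_PER_WORD - DMA_NS_PER_WORD
    return math.ceil(setup_overhead_ns / saving_per_word)


if __name__ == "__main__":
    overhead = 900.0  # hypothetical setup overhead in ns, not from the text
    print("break-even size (words):", break_even_words(overhead))
    print("256 words via DMA (ns):", dma_time_ns(256, overhead))
    print("256 words direct (ns):", direct_sdram_time_ns(256))
```

Under this model, DMA pays off for all but very small transfers. In addition, the core is free to compute while the transfer is in progress, which the model does not capture.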

SpiNNaker is a large-scale parallel system comprising low-power, energy-efficient processors connected by a network. Each node in the network is responsible for simulating/executing a small number of neurons and synapses [44], and communicates with the other nodes to exchange information and distribute the computation load. Each node consists of processors (cores), memory, and I/O interfaces. Every node in the SpiNNaker architecture is constructed from one or more SpiNNaker boards, which are made up of SpiNNaker chips [11]. Currently, two production boards are available, SpiNN-3 and SpiNN-5, which have 4 and 48 chips, respectively.

2.4.2. Components of SpiNNaker System

The architecture of the SpiNNaker system consists of the following four major components [11].

- Processing nodes: the individual processors used to simulate/execute the behavior of artificial neurons and synapses.

- Interconnect fabric: a high-speed network that connects the processing nodes. It allows efficient communication between the nodes, as well as efficient load distribution across the network.

- Host machine: a separate master computer/processor used to configure and control the SpiNNaker system. The host machine constantly communicates with the processing nodes via the network interface and provides a user interface to set up and run the simulations.

- Software stack: a variety of software components that work together to enable the simulation/execution of neural networks on the SpiNNaker system. The stack includes the operating system running on the processing nodes, higher-level software libraries, and tools for configuring and running the simulations.

2.5. PyNN

In [46], the authors introduced PyNN, a Python interface used to define simulations once the SNN model has been created. The simulations are typically executed on the SpiNNaker machine via an event-driven operating system [46]. PyNN allows users to specify SNN simulations in a Python script and run them on a chosen simulator. NEST, NEURON, INI, Brian, and SpiNNaker are commonly used SNN simulators.

2.6. Sentiment Analysis Using Natural Language Processing

Sentiment analysis is a natural language processing (NLP) technique that is commonly used to identify, extract, and quantify subjective information from text data [47]. It is mainly used to analyze text and determine a sentiment score, which can range from −1 (very negative sentiment) to +1 (very positive sentiment), with 0 representing neutral sentiment [48]. Using deep-learning-based approaches to perform sentiment analysis in NLP is a popular research area. Sentiment analysis is widely employed across various fields, such as marketing, finance, and customer service [49]. It can also be used to analyze financial news and social media to predict stock prices or market trends [50]. However, with the ongoing increase in data sizes, novel and efficient sentiment analysis models are needed to manage and process the massive amount of data [51]. Although existing ANN models provide the required accuracy when classifying the data, they are not efficient in terms of energy consumption and speed performance [52].
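The score range described above can be illustrated with a deliberately simple lexicon-based scorer. This is a toy sketch for showing how a score in [−1, +1] can arise. It is not the neural approach discussed in this entry, and the word lists and the function name `sentiment_score` are hypothetical.

```python
# Tiny hypothetical lexicons, for illustration only.
POSITIVE_WORDS = {"good", "great", "excellent", "love"}
NEGATIVE_WORDS = {"bad", "poor", "terrible", "hate"}


def sentiment_score(text):
    """Return a score in [-1, 1]; 0.0 means neutral or no sentiment words."""
    # Lowercase each token and strip surrounding punctuation.
    words = [token.strip(".,!?;:").lower() for token in text.split()]
    positives = sum(word in POSITIVE_WORDS for word in words)
    negatives = sum(word in NEGATIVE_WORDS for word in words)
    total = positives + negatives
    if total == 0:
        return 0.0
    # Normalizing by the number of sentiment words keeps the score in [-1, 1].
    return (positives - negatives) / total


if __name__ == "__main__":
    for sentence in ("Great product, I love it!",
                     "Terrible support and poor quality.",
                     "Good screen but bad battery.",
                     "The box arrived on Tuesday."):
        print(f"{sentiment_score(sentence):+.1f}  {sentence}")
```

Learned models replace the fixed word lists with features and weights inferred from labeled data, but their output can be mapped to the same score range.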

This entry is adapted from the peer-reviewed paper 10.3390/s23187701
