Your browser does not fully support modern features. Please upgrade for a smoother experience.

Please note this is an old version of this entry, which may differ significantly from the current revision.

Image matting refers to precisely estimating the foreground opacity mattes from a given image. It is a necessary task in the computer vision field and has a broad set of applications in image or video editing. Semi-supervised learning (SSL) utilizes unlabeled data to improve feature representation given limited labeled data. Two main branches of methods have been proposed, consistency regularization and pseudo-labeling.

- image matting

- semi-supervised learning

- computer vision

- deep learning

- consistency regularization

- pseudo-labeling

- self-training

- multi-view training

1. Introduction

Image matting refers to precisely estimating the foreground opacity mattes from a given image. It is a necessary task in the computer vision field and has a broad set of applications in image or video editing. Mathematically, the observed image is modeled as a convex combination of the foreground and background as follows:

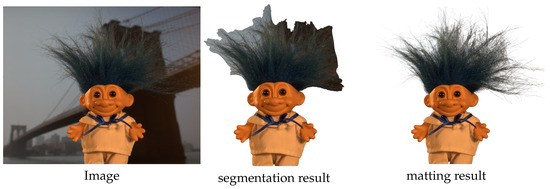

where 𝛼𝑖 denotes the opacity of the foreground at pixel i, and 𝐹𝑖 and 𝐵𝑖 are the foreground (FG) and background (BG) colors. Different from segmentation tasks which aim to identify the semantic scope of the object, image matting seeks to extract the smooth boundary and accurate opacity of the foreground object. As shown in Figure 1, semantic segmentation is a hard segmentation that identifies the category of each pixel, while image matting is a soft segmentation that predicts the proportion of each pixel that belongs to the foreground. It is a continuous prediction, representing the ratio as alpha matte.

Figure 1. Semantic segmentation and nature image matting.

The matting problem is highly ill-posed. When multiple foreground objects appear in a given image, the algorithm cannot determine the target object by itself, so previous work introduced a user-designed trimap to mark the target foreground region. Trimap indicates the rough area of the target foreground object and divides an image into three parts: known FG, known BG, and unknown transition region, with three colors: white, black, and gray. The gray region (unknown transition region) is the target region needed to be estimated in the matting problem. Therefore, the matting task is simplified as assigning alpha values on the range [0, 1] for each pixel in the unknown transition region.

2. Traditional Matting Methods

Traditional matting methods usually rely on low-level features such as color, texture, and brightness to separate foreground objects. Depending on the specific separation strategy, these traditional methods can be divided into two categories: sampling-based methods and propagation-based methods.

Sampling-based methods sample pixels in the known region and select the best foreground and background color pairs to estimate the alpha value of each pixel in the unknown region. There are various ways to sample pixel pairs and select appropriate color pairs. For example, Bayesian matting [1] uses the Gaussian Mixture Model (GMM) to learn the local color distribution and iteratively estimate alpha, foreground, and background using maximum likelihood criteria. Robust matting [2] samples a set of foreground–background pairs for each unknown pixel based on color similarity. Global matting [3] selects all pixels on the boundary of the known foreground and background regions to construct candidate pairs and finds the best color pairs.

Propagation-based methods establish connections between adjacent pixels, then use different optimization strategies to propagate alpha values from the known region to the unknown region. Non-local propagation-based methods establish connections between non-adjacent pixels. Closed-form matting [4] is a classic propagation-based method, which derives a cost function based on the local smoothness assumption and estimates the global optimal alpha value by solving a sparse linear equation system. Poisson matting [5] assumes the local smoothness of the input image, then utilizes the gradient field and Dirichlet boundary conditions to solve the Poisson equation and estimates the alpha value.

3. Deep Matting Methods

Due to deep learning networks’ solid high-level semantic comprehension ability and excellent performance, deep learning methods have become increasingly popular in image matting tasks.

In 2016, Cho et al. [6] proposed DCNN, which utilizes a convolutional neural network to optimize the matting result of closed-form matting [4] and KNN matting [7] with the assistance of the original trimap. Shen et al. [8] used a convolutional neural network to generate a trimap, then estimated alpha values by closed-form matting [4]. These works only used deep learning as optimization tools; no large-scale matting datasets were available for training. In 2017, Xu et al. [9] proposed Deep Image Matting (DIM), an end-to-end convolutional neural network matting method. It consists of two stages: a matting encoder–decoder network and a small refinement network. Specifically, the image and trimap are fed into the matting encoder–decoder; the refinement network then uses the original image as auxiliary information to optimize the output from the previous stage, generating a refined alpha matte. They first released a large-scale matting dataset, which became essential to developing deep matting methods.

Beginning with DIM, more and more deep learning methods were proposed and achieved good results. Sebastian et al. [10] proposed AlphaGAN, which applies generative adversarial networks (GAN) to image matting, and it contains two key components: a generator and a discriminator. The generator generates alpha mattes of given images, and the discriminator evaluates the quality of the alpha mattes generated by the generator and helps the generator to produce better results. Qiao et al. [11] utilized channel attention and spatial attention mechanism to fuse low-level and high-level features and make networks focus on valuable channels and spatial regions. Liu et al. [12] explored the semantic correlation between the trimap and image using a three-branch encoder. Park et al. [13] and Hu et al. [14] combined the latest Transformer [15] with image matting and achieved good results. In recent years, there were also some works focused on trimap-free matting [11], portrait matting [16], and background-based matting [17]. There has been no semi-supervised deep matting method yet.

4. Semi-Supervised Learning

Semi-supervised learning (SSL) utilizes unlabeled data to improve feature representation given limited labeled data. Two main branches of methods have been proposed in recent years, namely, consistency regularization and pseudo-labeling.

Consistency regularization is based on the cluster assumption, which states that the predicted result should not change significantly with minor perturbations because data points with different labels are separated in low-density regions. Given an unlabeled data point x and its perturbation 𝑥̂ , SSL aims to minimize the distance between the two outputs. Popular distance measures are mean square error (MSE), Kullback–Leibler divergence (KL), and Jensen–Shannon divergence (JS). Consistency regularization enforces the current optimized model to yield stable and consistent predictions under various perturbations on the same unlabeled data. VAT [18] applies adversarial perturbations to the output. The ΠΠ model [19] applies different data augmentations and dropouts to form perturbed samples and aligns them. Dual struent [20] uses two networks with different initializations to generate perturbed outputs for the same input and aligns the different network outputs. MixMatch [21] and ReMixMatch [22] use data interpolation to generate perturbations.

Pseudo-labeling can be roughly divided in two ways: self-training and multi-view training.

Self-training [23] is a packaging algorithm repeatedly using supervised methods in the training process of each round. It uses the labeled data to train the model in the first round. The trained model predicts pseudo-labels for the unlabeled samples, and the high-confidence unlabeled samples are added to the training set for the re-training. There is no limit to the total training round. The advantage of self-training is that it is simple, but its learning performance depends on the supervised method used internally, and the model can hardly correct its errors, which may lead to error accumulation. The performance of this method is closely related to error labeling correction strategies and high-confidence unlabeled sample screening strategies.

Multi-view training has more than one model in the training process; the unlabeled data and their pseudo-label generated by one model are used for another model in the next training round. Co-training [24] has two models 𝑚1 and 𝑚2 trained on different datasets. In each iteration, if either model considers its prediction result of sample X trustworthy, with confidence higher than the threshold 𝜏, then that model will generate a pseudo-label for sample X and add the training sample X to the training set of the other model. Tri-training [25] is similar to Co-training, except that, in Tri-training, the newly acquired unlabeled training instances of one model are jointly determined by two other models. Tri-training first bootstraps the labeled sample set for repeatable sampling to obtain three labeled training sets and then trains a classifier from each labeled training set. In the cooperative training process, the new unlabeled samples with pseudo-labels obtained by each model are jointly provided by the other two classifiers. Specifically, suppose two classifiers predict the same unlabeled sample and have the same output. In that case, the sample is considered to have higher confidence and is added to the labeled training set of the third classifier after generating the pseudo-label.

In addition to classification tasks, semi-supervised learning is widely used in semantic segmentation. In the semi-supervised semantic segmentation, CCT [26] enforces similar predictions under multiple perturbed embeddings. Lai proposed a method [27] that enforces similar predictions under two different contextual crops. CPS [28] enforces consistency between dual differently initialized models. Similar to FixMatch [29], PseudoSeg [30] adapts the weak-to-strong consistency to the segmentation scenario and further applies a calibration module to refine the pseudo-masks. ST++ [31] proposes an advanced self-training framework that performs selective re-training via prioritizing reliable unlabeled images based on holistic prediction-level stability.

This entry is adapted from the peer-reviewed paper 10.3390/app13158616

References

- Chuang, Y.Y.; Curless, B.; Salesin, D.H.; Szeliski, R. A bayesian approach to digital matting. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2001, Kauai, HI, USA, 8–14 December 2001; Volume 2, p. II.

- Wang, J.; Cohen, M.F. Optimized color sampling for robust matting. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

- He, K.; Rhemann, C.; Rother, C.; Tang, X.; Sun, J. A global sampling method for alpha matting. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 2049–2056.

- Levin, A.; Lischinski, D.; Weiss, Y. A closed-form solution to natural image matting. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 30, 228–242.

- Sun, J.; Jia, J.; Tang, C.K.; Shum, H.Y. Poisson matting. In Proceedings of the ACM SIGGRAPH 2004, Los Angeles, CA, USA, 8–12 August 2004; pp. 315–321.

- Cho, D.; Tai, Y.W.; Kweon, I. Natural image matting using deep convolutional neural networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Cham, Switzerland, 2016; pp. 626–643.

- Chen, Q.; Li, D.; Tang, C.K. KNN matting. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2175–2188.

- Shen, X.; Tao, X.; Gao, H.; Zhou, C.; Jia, J. Deep automatic portrait matting. In Proceedings of the Computer Vision—ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer: Cham, Switzerland, 2016; pp. 92–107.

- Xu, N.; Price, B.; Cohen, S.; Huang, T. Deep image matting. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2970–2979.

- Lutz, S.; Amplianitis, K.; Smolic, A. AlphaGAN: Generative adversarial networks for natural image matting. In Proceedings of the British Machine Vision Conference 2018, BMVC 2018, Newcastle, UK, 3–6 September 2018; BMVA Press: Durham, UK, 2018; p. 259.

- Qiao, Y.; Liu, Y.; Yang, X.; Zhou, D.; Xu, M.; Zhang, Q.; Wei, X. Attention-guided hierarchical structure aggregation for image matting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 13676–13685.

- Liu, Y.; Xie, J.; Shi, X.; Qiao, Y.; Huang, Y.; Tang, Y.; Yang, X. Tripartite Information Mining and Integration for Image Matting. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 7555–7564.

- Park, G.; Son, S.; Yoo, J.; Kim, S.; Kwak, N. Matteformer: Transformer-based image matting via prior-tokens. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11696–11706.

- Hu, L.; Kong, Y.; Li, J.; Li, X. Effective Local-Global Transformer for Natural Image Matting. IEEE Trans. Circuits Syst. Video Technol. 2023.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

- Zhang, Z.; Wang, Y.; Yang, J. Deep quantised portrait matting. IET Comput. Vis. 2020, 14, 339–349.

- Sengupta, S.; Jayaram, V.; Curless, B.; Seitz, S.M.; Kemelmacher-Shlizerman, I. Background matting: The world is your green screen. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2291–2300.

- Miyato, T.; Maeda, S.i.; Koyama, M.; Ishii, S. Virtual adversarial training: A regularization method for supervised and semi-supervised learning. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 1979–1993.

- Laine, S.; Aila, T. Temporal Ensembling for Semi-Supervised Learning. In Proceedings of the International Conference on Learning Representations, San Juan, PR, USA, 2–4 May 2016.

- Ke, Z.; Wang, D.; Yan, Q.; Ren, J.; Lau, R.W. Dual student: Breaking the limits of the teacher in semi-supervised learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6728–6736.

- Berthelot, D.; Carlini, N.; Goodfellow, I.; Papernot, N.; Oliver, A.; Raffel, C.A. Mixmatch: A holistic approach to semi-supervised learning. Adv. Neural Inf. Process. Syst. 2019, 32.

- Berthelot, D.; Carlini, N.; Cubuk, E.D.; Kurakin, A.; Sohn, K.; Zhang, H.; Raffel, C. ReMixMatch: Semi-Supervised Learning with Distribution Matching and Augmentation Anchoring. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

- Lee, D.H. Pseudo-label: The simple and efficient semi-supervised learning method for deep neural networks. Workshop Challenges Represent. Learn. ICML 2013, 3, 896.

- Blum, A.; Mitchell, T. Combining labeled and unlabeled data with co-training. In Proceedings of the Eleventh Annual Conference on Computational Learning Theory, Madison, WI, USA, 24–26 July 1998; pp. 92–100.

- Zhou, Z.H.; Li, M. Tri-training: Exploiting unlabeled data using three classifiers. IEEE Trans. Knowl. Data Eng. 2005, 17, 1529–1541.

- Ouali, Y.; Hudelot, C.; Tami, M. Semi-supervised semantic segmentation with cross-consistency training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12674–12684.

- Lai, X.; Tian, Z.; Jiang, L.; Liu, S.; Zhao, H.; Wang, L.; Jia, J. Semi-supervised semantic segmentation with directional context-aware consistency. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 1205–1214.

- Chen, X.; Yuan, Y.; Zeng, G.; Wang, J. Semi-supervised semantic segmentation with cross pseudo supervision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2613–2622.

- Sohn, K.; Berthelot, D.; Carlini, N.; Zhang, Z.; Zhang, H.; Raffel, C.A.; Cubuk, E.D.; Kurakin, A.; Li, C.L. Fixmatch: Simplifying semi-supervised learning with consistency and confidence. Adv. Neural Inf. Process. Syst. 2020, 33, 596–608.

- Zou, Y.; Zhang, Z.; Zhang, H.; Li, C.L.; Bian, X.; Huang, J.B.; Pfister, T. Pseudoseg: Designing pseudo labels for semantic segmentation. arXiv 2020, arXiv:2010.09713.

- Yang, L.; Zhuo, W.; Qi, L.; Shi, Y.; Gao, Y. St++: Make self-training work better for semi-supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4268–4277.

This entry is offline, you can click here to edit this entry!