

Eye tracking is a technique for detecting and measuring eye movements and characteristics. An eye tracker can sense a person’s gaze locations and eye features at a given sampling frequency.

- eye tracking

- gaze input

- museums and exhibitions

1. Introduction

Eye tracking is a technique for detecting and measuring eye movements and characteristics [1]. An eye tracker can sense a person’s gaze locations at a given sampling frequency. Knowing the gaze position over time makes it possible to identify fixations and saccades. Fixations, which typically last between 100 and 600 ms [2][3], are time periods during which the eyes remain almost still, with the gaze focused on a specific element of the scene. Saccades, on the other hand, normally last less than 100 ms [3]. They are very fast eye movements occurring between consecutive fixations, and their purpose is to relocate the gaze onto a different element of the visual scene.
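Fixations and saccades differ mainly in speed. A common way to separate them in raw gaze data is therefore a velocity-threshold classifier, often called I-VT. The following sketch is illustrative only and makes several assumptions. It expects samples already converted to degrees of visual angle and recorded at a constant sampling rate. The 30°/s threshold is a typical but arbitrary choice, and the 100 ms minimum duration echoes the lower bound quoted above. All names (`detect_fixations`, `Fixation`) are hypothetical.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Fixation:
    start_ms: float     # time of the first sample in the fixation
    duration_ms: float  # number of samples times the sampling interval
    x: float            # centroid, degrees of visual angle
    y: float


def detect_fixations(samples, rate_hz, velocity_threshold=30.0, min_fixation_ms=100.0):
    """Minimal I-VT sketch.

    samples: list of (x, y) gaze positions in degrees, recorded at rate_hz.
    Samples whose point-to-point speed is below velocity_threshold (deg/s)
    are fixation samples; consecutive ones are grouped, and groups shorter
    than min_fixation_ms are discarded.
    """
    dt_ms = 1000.0 / rate_hz
    fixations = []
    group = []  # indices of consecutive below-threshold samples

    def close_group():
        duration = len(group) * dt_ms
        if group and duration >= min_fixation_ms:
            xs = [samples[i][0] for i in group]
            ys = [samples[i][1] for i in group]
            fixations.append(Fixation(group[0] * dt_ms, duration,
                                      sum(xs) / len(xs), sum(ys) / len(ys)))

    for i, (x, y) in enumerate(samples):
        if i > 0:
            px, py = samples[i - 1]
            speed = hypot(x - px, y - py) / (dt_ms / 1000.0)  # deg/s
            if speed >= velocity_threshold:
                # Saccadic sample: it ends the current fixation candidate
                close_group()
                group = []
                continue
        group.append(i)
    close_group()
    return fixations
```

Consider 100 Hz data with 20 samples at (0°, 0°) followed by 15 samples at (10°, 0°). This yields two fixations, of 200 ms and 140 ms. The first sample after the jump is labeled as saccadic, a simplification that real detectors often refine.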

2. Eye Tracking Technology

Electro-oculography, scleral contact lens/search coil, photo-oculography, video-oculography and pupil center-corneal reflection are some of the eye-tracking technologies that have been developed over time [1].

Electro-oculography (EOG), one of the oldest methods for recording eye movements, measures electrical potential differences on the skin through small electrodes placed around the eyes [4]. It can record eye movements even when the eyes are closed, but it is generally more invasive, and less accurate and precise, than other approaches.

The scleral contact lens/search coil method is another early technique, in which small coils of wire are embedded in special contact lenses. The user’s head is then placed inside a magnetic field, which induces an electrical potential in the coils that allows estimation of eye position [5]. Although this technique has very high spatial and temporal resolution, it is also extremely invasive and uncomfortable. It is therefore used almost exclusively in physiological studies.

Photo- and video-oculography (POG and VOG) are generally video-based methods in which small cameras, incorporated into head-mounted devices, measure eye features such as pupil size, iris–sclera boundaries and any corneal reflections. These characteristics can be assessed either automatically or manually. However, these systems tend to be inaccurate and are mainly used for medical purposes [1].

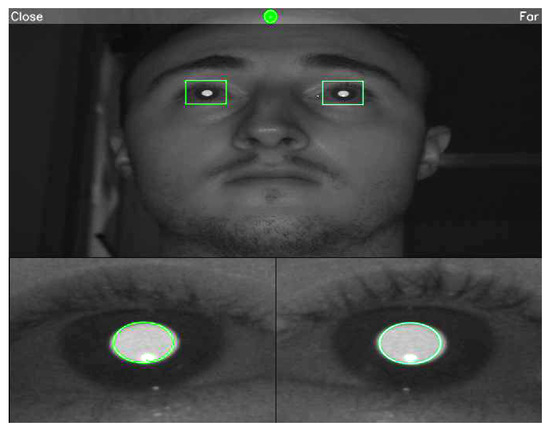

Pupil center-corneal reflection (PCCR) is the most widely used eye tracking technique today. Infrared (or near-infrared) light sources illuminate the eyes, and a camera detects the pupil and the reflections produced on the cornea (Figure 1). The position of the pupil center relative to these corneal reflections changes as the eye rotates, which allows the gaze direction to be determined [1]. Infrared light is employed because it is invisible to the user and also produces a better contrast between pupil and iris. The prices of these eye trackers range from a few hundred to tens of thousands of euros, depending on their accuracy and gaze sampling frequency. All the works analyzed in the present review employ this technology.

Figure 1. Example of eye detection with the Gazepoint GP3 HD eye tracker: above, the eyes detected within the face; below, pupil/corneal reflections.
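Turning the pupil–glint relationship into screen coordinates typically requires a calibration step, in which the user looks at a few known targets. The following sketch illustrates one way to do this under stated assumptions; it is not the procedure of any specific tracker, such as the GP3 HD. It assumes that the pupil-center-minus-glint vector (in camera pixels) has already been extracted from each image. It then fits an affine mapping to screen pixels by ordinary least squares. Commercial systems commonly use higher-order polynomials or 3D eye models instead, and all function names here are hypothetical.

```python
def solve_linear(m, b):
    """Solve the square system m x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    a = [list(row) + [bi] for row, bi in zip(m, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= factor * a[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):  # back substitution
        x[r] = (a[r][n] - sum(a[r][c] * x[c] for c in range(r + 1, n))) / a[r][r]
    return x


def fit_affine_calibration(vectors, targets):
    """Fit screen = c0 + c1*vx + c2*vy by least squares, separately for x and y.

    vectors: pupil-center-minus-glint vectors (camera pixels), one per target.
    targets: known calibration target positions (screen pixels).
    """
    rows = [(1.0, vx, vy) for vx, vy in vectors]  # design matrix A
    # Normal equations (A^T A) c = A^T t, solved once per screen axis
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    coeffs = []
    for axis in range(2):
        atb = [sum(r[i] * t[axis] for r, t in zip(rows, targets)) for i in range(3)]
        coeffs.append(solve_linear(ata, atb))
    return coeffs  # [[cx0, cx1, cx2], [cy0, cy1, cy2]]


def estimate_gaze(coeffs, vector):
    """Map one pupil-glint vector to an estimated (x, y) screen position."""
    vx, vy = vector
    return tuple(c[0] + c[1] * vx + c[2] * vy for c in coeffs)
```

Suppose the calibration uses nine points on a 3×3 grid whose vectors relate to the screen through an exact affine relation. In that case the fitted coefficients reproduce the relation up to floating-point error. Adding quadratic terms would only change the rows built in `fit_affine_calibration` and the size of the system passed to `solve_linear`.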

There are two main kinds of eye trackers: remote and wearable. Remote eye trackers (Figure 2, left) are normally non-intrusive devices, often small bars, positioned at the bottom of standard displays. They are currently the most widespread kind of eye tracker. Wearable eye trackers (Figure 2, right), on the other hand, are frequently used to study viewing behavior in real-world settings. Recent wearable eye trackers increasingly resemble ordinary glasses, which makes them much more comfortable than earlier models.

Figure 2. Examples of remote and wearable eye trackers: on the left, highlighted in red, a Tobii 4C remote device (by Tobii); on the right, a Pupil Core wearable device (by Pupil Labs).

Eye tracking technology has been applied in psychology [6], neuroscience [7], marketing [8], education [9], usability [10][11] and biometrics [12][13]. For instance, it can determine the user’s gaze path while looking at something (e.g., an image or a web page), or identify the screen regions that are inspected most frequently. When an eye tracker is used as an input tool (i.e., explicitly, for interactive purposes), gaze data must be evaluated in real time, so that the computer can respond to specific gaze behaviors [14]. Gaze input is also extremely beneficial as an assistive technology for people who are unable to use their hands. Several assistive solutions have been devised to date, including those for writing [15][16][17], surfing the Web [18][19] and playing music [20][21].
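A common way to find the screen regions that are inspected most often is to define areas of interest (AOIs) and aggregate the fixations falling inside each one. The following sketch is a minimal illustration under several assumptions. Fixations are taken as already detected and given as (x, y, duration) tuples in screen pixels. AOIs are assumed to be axis-aligned rectangles. The AOI names and function names are hypothetical.

```python
from collections import defaultdict


def aoi_statistics(fixations, aois):
    """Count fixations and sum fixation time per area of interest.

    fixations: iterable of (x, y, duration_ms) in screen pixels.
    aois: dict mapping an AOI name to a rectangle (left, top, right, bottom).
    Each fixation is assigned to the first AOI containing it; fixations
    outside every AOI are ignored.
    """
    counts = defaultdict(int)
    time_ms = defaultdict(float)
    for x, y, duration in fixations:
        for name, (left, top, right, bottom) in aois.items():
            if left <= x < right and top <= y < bottom:
                counts[name] += 1
                time_ms[name] += duration
                break
    return dict(counts), dict(time_ms)


def most_inspected(fixations, aois):
    """Return AOI names ordered by total fixation time, longest first."""
    _, time_ms = aoi_statistics(fixations, aois)
    return sorted(time_ms, key=time_ms.get, reverse=True)
```

Total fixation time and fixation count can rank regions differently. Reporting both is a simple way to distinguish a region glanced at many times from one read at length.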

Two common ways to provide gaze input are dwell time and gaze gestures. Dwell time, the most widely used approach, consists of fixating a target element (e.g., a button) for a predefined time (the dwell time), after which an action associated with that element is triggered. The duration of the dwell time can vary depending on the application. However, it should be long enough to avoid the so-called “Midas touch problem” [22], i.e., involuntary selections that occur when the user simply looks at the elements of an interface.
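The dwell-time mechanism can be sketched as a small state machine that is fed the interface element currently under the gaze. This is a minimal illustration, not taken from any of the cited systems. The 800 ms default is an arbitrary assumption, since suitable values depend on the application, and `DwellSelector` is a hypothetical name. After a selection, the gaze must leave the element before that element can trigger again, so a prolonged look does not fire repeated actions.

```python
class DwellSelector:
    """Minimal dwell-time selection sketch.

    Call update() with the element currently under the gaze (or None) and a
    timestamp in milliseconds. It returns the element once the gaze has rested
    on it continuously for dwell_ms, and None otherwise.
    """

    def __init__(self, dwell_ms=800.0):
        self.dwell_ms = dwell_ms
        self.current = None   # element currently looked at
        self.since_ms = None  # when the gaze entered it
        self.fired = False    # already selected during this visit?

    def update(self, target, now_ms):
        if target != self.current:
            # Gaze moved to another element (or to empty space): restart the timer
            self.current, self.since_ms, self.fired = target, now_ms, False
            return None
        if (target is not None and not self.fired
                and now_ms - self.since_ms >= self.dwell_ms):
            self.fired = True  # require the gaze to leave before re-triggering
            return target
        return None
```

Raising `dwell_ms` reduces involuntary selections at the cost of slower interaction. This trade-off is why the dwell time has to be tuned to the application.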

Gaze gestures are gaze paths that the user performs to trigger specific actions. This approach can be fast and is immune to the Midas touch problem, but it is generally less intuitive than dwell time: since the user needs to memorize a set of gaze gestures, the learning curve may be steep. For this reason, gaze gestures are recommended only for applications meant to be used repeatedly, such as writing systems (e.g., [23][24]).
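One simple way to recognize gaze gestures is to turn successive fixation positions into a string of stroke directions and look that string up in a gesture vocabulary. This sketch is only an illustration; it does not reproduce the technique of [23] or [24]. The 100-pixel minimum amplitude and the `GESTURES` table are hypothetical. Small movements are skipped so that natural jitter is not mistaken for a stroke.

```python
def strokes(points, min_amplitude=100.0):
    """Convert fixation positions (screen pixels, y grows downward) into a
    string of stroke directions 'L', 'R', 'U', 'D'."""
    if not points:
        return ""
    symbols = []
    anchor = points[0]  # where the current stroke starts
    for x, y in points[1:]:
        dx, dy = x - anchor[0], y - anchor[1]
        if max(abs(dx), abs(dy)) < min_amplitude:
            continue  # small movement (jitter, corrective saccade): ignore it
        if abs(dx) >= abs(dy):
            direction = "R" if dx > 0 else "L"
        else:
            direction = "D" if dy > 0 else "U"
        if not symbols or symbols[-1] != direction:
            symbols.append(direction)  # consecutive strokes in one direction merge
        anchor = (x, y)
    return "".join(symbols)


# Hypothetical gesture vocabulary: stroke sequence -> action name
GESTURES = {"RL": "next_page", "LR": "previous_page", "DRU": "select"}


def recognize(points, min_amplitude=100.0):
    """Return the action whose gesture matches the fixation sequence, or None."""
    return GESTURES.get(strokes(points, min_amplitude))
```

Gestures made of several distinct strokes are unlikely to occur during ordinary viewing. This is what makes the approach resistant to the Midas touch problem, but it is also why users must learn the vocabulary.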

Hybrid approaches that mix dwell time and gaze gestures (e.g., for interacting with video games [25]) have also been proposed, while other gaze input methods (such as those based on blinks [26] or smooth pursuit [17]) are currently less common.
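Smooth-pursuit input usually displays moving targets and selects the one whose trajectory the eyes are following. A widely used way to decide this is to compute the correlation between gaze and target coordinates over a short time window. It is shown here as an assumption, not as the method of [17]. In this sketch, a target matches only if both the x and y correlations reach a hypothetical threshold of 0.8. Targets should therefore move along paths that vary on both axes, such as circles; a target moving along one axis only would never match. Function names are hypothetical.

```python
import math


def pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if one is constant)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)


def pursuit_match(gaze, targets, threshold=0.8):
    """Return the moving target the gaze appears to follow, or None.

    gaze: (x, y) gaze samples over a short time window.
    targets: dict mapping a target name to its (x, y) positions at the same instants.
    A target qualifies only if gaze and target correlate at or above threshold
    on both axes; the best-scoring qualifying target is returned.
    """
    gaze_x = [p[0] for p in gaze]
    gaze_y = [p[1] for p in gaze]
    best, best_score = None, threshold
    for name, path in targets.items():
        score = min(pearson(gaze_x, [p[0] for p in path]),
                    pearson(gaze_y, [p[1] for p in path]))
        if score >= best_score:
            best, best_score = name, score
    return best
```

Correlation ignores constant offsets and scale factors, so a gaze trace that is systematically shifted from the target still matches it. This is one reason pursuit-based selection can work without prior calibration. Requiring both axes also matters: two circles traversed in opposite directions have identical x-coordinate patterns, and only the y-axis tells them apart.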

This entry is adapted from the peer-reviewed paper 10.3390/electronics12143064

References

- Duchowski, A.T. Eye Tracking Methodology: Theory and Practice, 3rd ed.; Springer International Publishing AG: Cham, Switzerland, 2017.

- Velichkovsky, B.M.; Dornhoefer, S.M.; Pannasch, S.; Unema, P.J. Visual Fixations and Level of Attentional Processing. In Proceedings of the ETRA 2000 Symposium on Eye Tracking Research & Applications, Palm Beach Gardens, FL, USA, 6–8 November 2000; ACM: New York, NY, USA, 2000; pp. 79–85.

- Robinson, D.A. The mechanics of human saccadic eye movement. J. Physiol. 1964, 174, 245–264.

- Shackel, B. Pilot study in electro-oculography. Br. J. Ophthalmol. 1960, 44, 89.

- Robinson, D.A. A Method of Measuring Eye Movement Using a Scleral Search Coil in a Magnetic Field. IEEE Trans. Bio-Med. Electron. 1963, 10, 137–145.

- Mele, M.L.; Federici, S. Gaze and eye-tracking solutions for psychological research. Cogn. Process. 2012, 13, 261–265.

- Popa, L.; Selejan, O.; Scott, A.; Mureşanu, D.F.; Balea, M.; Rafila, A. Reading beyond the glance: Eye tracking in neurosciences. Neurol. Sci. 2015, 36, 683–688.

- Wedel, M.; Pieters, R. Eye tracking for visual marketing. Found. Trends Mark. 2008, 1, 231–320.

- Cantoni, V.; Perez, C.J.; Porta, M.; Ricotti, S. Exploiting Eye Tracking in Advanced E-Learning Systems. In Proceedings of the CompSysTech ’12: 13th International Conference on Computer Systems and Technologies, Ruse, Bulgaria, 22–23 June 2012; Association for Computing Machinery: New York, NY, USA, 2012; pp. 376–383.

- Nielsen, J.; Pernice, K. Eyetracking Web Usability; New Riders Press: Thousand Oaks, CA, USA, 2009.

- Mosconi, M.; Porta, M.; Ravarelli, A. On-Line Newspapers and Multimedia Content: An Eye Tracking Study. In Proceedings of the SIGDOC ’08: 26th Annual ACM International Conference on Design of Communication, Lisbon, Portugal, 22–24 September 2008; Association for Computing Machinery: New York, NY, USA, 2008; pp. 55–64.

- Kasprowski, P.; Ober, J. Eye Movements in Biometrics. In Biometric Authentication; Maltoni, D., Jain, A.K., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; pp. 248–258.

- Porta, M.; Dondi, P.; Zangrandi, N.; Lombardi, L. Gaze-Based Biometrics From Free Observation of Moving Elements. IEEE Trans. Biom. Behav. Identity Sci. 2022, 4, 85–96.

- Duchowski, A.T. Gaze-based interaction: A 30 year retrospective. Comput. Graph. 2018, 73, 59–69.

- Majaranta, P.; Räihä, K.J. Text entry by gaze: Utilizing eye-tracking. In Text Entry Systems: Mobility, Accessibility, Universality; Morgan Kaufmann: San Francisco, CA, USA, 2007; pp. 175–187.

- Porta, M. A study on text entry methods based on eye gestures. J. Assist. Technol. 2015, 9, 48–67.

- Porta, M.; Dondi, P.; Pianetta, A.; Cantoni, V. SPEye: A Calibration-Free Gaze-Driven Text Entry Technique Based on Smooth Pursuit. IEEE Trans. Hum.-Mach. Syst. 2022, 52, 312–323.

- Kumar, C.; Menges, R.; Müller, D.; Staab, S. Chromium based framework to include gaze interaction in web browser. In Proceedings of the 26th International Conference on World Wide Web Companion, Geneva, Switzerland, 3–7 April 2017; pp. 219–223.

- Casarini, M.; Porta, M.; Dondi, P. A Gaze-Based Web Browser with Multiple Methods for Link Selection. In Proceedings of the ETRA ’20 Adjunct: ACM Symposium on Eye Tracking Research and Applications, Stuttgart, Germany, 2–5 June 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1–8.

- Davanzo, N.; Dondi, P.; Mosconi, M.; Porta, M. Playing Music with the Eyes through an Isomorphic Interface. In Proceedings of the COGAIN ’18: Workshop on Communication by Gaze Interaction, Warsaw, Poland, 15 June 2018; ACM: New York, NY, USA, 2018; pp. 5:1–5:5.

- Valencia, S.; Lamb, D.; Williams, S.; Kulkarni, H.S.; Paradiso, A.; Ringel Morris, M. Dueto: Accessible, Gaze-Operated Musical Expression. In Proceedings of the ASSETS ’19: 21st International ACM SIGACCESS Conference on Computers and Accessibility, Pittsburgh, PA, USA, 28–30 October 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 513–515.

- Jacob, R.J. Eye movement-based human-computer interaction techniques: Toward non-command interfaces. Adv. Hum.-Comput. Interact. 1993, 4, 151–190.

- Wobbrock, J.O.; Rubinstein, J.; Sawyer, M.W.; Duchowski, A.T. Longitudinal Evaluation of Discrete Consecutive Gaze Gestures for Text Entry. In Proceedings of the ETRA ’08: 2008 Symposium on Eye Tracking Research & Applications, Savannah, GA, USA, 26–28 March 2008; ACM: New York, NY, USA, 2008; pp. 11–18.

- Porta, M.; Turina, M. Eye-S: A Full-Screen Input Modality for Pure Eye-Based Communication. In Proceedings of the ETRA ’08: 2008 Symposium on Eye Tracking Research & Applications, Savannah, GA, USA, 26–28 March 2008; ACM: New York, NY, USA, 2008; pp. 27–34.

- Istance, H.; Bates, R.; Hyrskykari, A.; Vickers, S. Snap clutch, a moded approach to solving the Midas touch problem. In Proceedings of the 2008 Symposium on Eye Tracking Research & Applications, Savannah, GA, USA, 26–28 March 2008; pp. 221–228.

- Królak, A.; Strumiłło, P. Eye-blink detection system for human–computer interaction. Univ. Access Inf. Soc. 2012, 11, 409–419.
