
Please note this is an old version of this entry, which may differ significantly from the current revision.

Although high-spatial-resolution imaging techniques such as functional magnetic resonance imaging (fMRI) are widely used to map this complex network of multiple interactions, electroencephalographic (EEG) recordings offer high temporal resolution and are thus well suited to describing patterns of neural activation and connectivity that are both spatially distributed and temporally dynamic.

- EEG

- functional connectivity

- data-driven

- signal acquisition

- pre-processing

1. Introduction

The human brain has always fascinated researchers and neuroscientists. Its complexity lies in the combined spatially and temporally evolving activities that different cerebral networks carry out over three-dimensional space. These networks display distinct patterns of activity in a resting state or during task execution, but also interact with each other in various spatio-temporal modalities, being connected both by anatomical tracts and by functional associations [1]. In fact, to understand the mechanisms of perception, attention, and learning, and to manage neurological and mental diseases such as epilepsy, neurodegeneration, and depression, it is necessary to map the patterns of neural activation and connectivity that are both spatially distributed and temporally dynamic.

The analysis of the complex interactions between brain regions has been shaping the research field of connectomics [2], a neuro-scientific discipline that has become more and more renowned over the last few years [3]. The effort to map the human connectome, which consists of brain networks, their structural connections, and functional interactions [2], has given life to a number of different approaches, each with its own specifications and interpretations [4][5][6][7][8]. Some of these methods, such as covariance structural equation modeling [9] and the dynamic causal modeling [10][11], are based on the definition of an underlying structural and functional model of brain interactions. Conversely, some others, such as Granger causality [12], transfer entropy [13], directed coherence [14][15], partial directed coherence [16][17], and the directed transfer function [18], are data-driven and based on the statistical analysis of multivariate time series. Interestingly, while non-linear model-free and linear model-based approaches are apparently unrelated, as they look at different aspects of multivariate dynamics, they become clearly connected if some assumptions, such as the Gaussianity of the joint probability distribution of the variables drawn from the data [19][20], are met. Under these assumptions, connectivity measures such as Granger causality and transfer entropy, as well as coherence [21] and mutual information rate [22][23], can be mathematically related to each other. This equivalence forms the basis for a model-based frequency-specific interpretation of inherently model-free information-theoretic measures [24]. Furthermore, emerging trends, such as the development of high-order interaction measures, are coming up in the neurosciences to respond to the need for providing more exhaustive descriptions of brain-network interactions. 
These measures allow one to deal with multivariate representations of complex systems [25][26][27], showing their potential for disentangling physiological mechanisms involving more than two units or subsystems [28]. Additionally, more sophisticated tools, such as graph theory [29][30][31], are widely used to depict the functional structure of the brain intended as a whole complex network where neural units are highly interconnected with each other via different direct and indirect pathways.

Mapping the complexity of these interactions requires the use of high-resolution neuroimaging techniques. A number of brain mapping modalities have been used in recent decades to investigate the human connectome in different experimental conditions and physiological states [32][33][34], including functional magnetic resonance imaging (fMRI) [35][36][37][38], positron emission tomography, functional near-infrared spectroscopy, and electrophysiological methods such as electroencephalography (EEG), magnetoencephalography (MEG), and electrocorticography (ECoG) [14][39][40]. The best-known technique used so far in this context is fMRI, which maps the synchronized activity of spatially localized brain networks by detecting the changes in blood oxygenation and flow that occur in response to neuronal activity [41]. However, fMRI has limited temporal resolution and is therefore poorly suited to detecting short-lived events. These can instead be investigated by EEG, a low-cost, non-invasive technique that allows one to study the dynamic relations between the activity of cortical brain regions and provides information different from that provided by fMRI [38]. Exploited in a wide range of clinical and research applications [30][42][43][44], EEG has allowed researchers to identify the spatio-temporal patterns of neuronal electric activity over the scalp with increasing ease, thanks to advances in acquisition technologies such as high-density EEG systems [45][46] and their combination with other imaging modalities, robotics, or neurostimulation [47][48][49][50].

2. Brain Connectivity

Brain connectivity aims at describing the patterns of interaction within and between different brain regions. This description relies on the key concept of functional integration [51], which describes the coordinated activation of systems of neural ensembles distributed across different cortical areas, as opposed to functional segregation, which instead refers to the activation of specialized brain regions. Brain connectivity encompasses various modalities of interaction between brain networks, including structural connectivity (SC), functional connectivity (FC), and effective connectivity (EC).

SC is perhaps the most intuitive concept of connectivity in the brain. It can be understood as a representation of the brain fiber pathways that traverse broad regions and correspond with established anatomical understanding [52]. As such, SC can be regarded as a purely physical phenomenon.

Some studies suggest that the repertoire of cortical functional configurations reflects the underlying anatomical connections, as the functional interactions between different brain areas are thought to vary according to the density and structure of the connecting pathways [52][53][54][55][56][57][58][59]. This leads to the assumption that investigating the anatomical structure of a network, i.e., how the neurons are linked together, is an important prerequisite for discovering its function, i.e., how neurons interact together, synchronizing their dynamic activity. Moreover, according to the original definition proposed in [60], FC does not relate to any specific direction or structure of the brain. Instead, it is purely based on the probabilities of the observed neural responses. No conclusions were made about the type of relationship between two brain regions. The only comparison is established via the presence or absence of statistical dependence.

Conversely, EC was originally defined in terms of the directional influence that one neural unit exerts over another, thereby requiring the generation of a mechanistic model of the cause–effect relationships. In a nutshell, while FC was intended as an observable phenomenon quantified through measures of statistical dependencies, such as correlation and mutual information, EC was determined to explain the observed functional dependencies based on a model of directed causal influences [60].

In the last two decades, these concepts have been widely discussed and have evolved towards various interpretations [7][61][62][63][64]. EC can be assessed either from the signals directly (i.e., data-driven EC) or based on an underlying model specifying the causal pathways given anatomical and functional knowledge (i.e., EC is a combination of both SC and FC) [62][63]. The most exploited data-driven methods based on time-series analysis include adaptations of Granger causality [12][65], transfer entropy [13], partial directed coherence [16][17], and the directed transfer function [18], and are designed to identify the directed transfer of information between two brain regions. Conversely, mechanistic models of EC focus on either (i) the determination of the model parameters that align with observed correlation patterns in a given task, such as in the case of the covariance structural equation modeling [9] and dynamic causal modeling [10], or (ii) perturbational approaches to investigate the degree of causal influence between two brain regions [66].

3. Functional Connectivity: A Classification of Data-Driven Methods

3.1. Model-Based vs. Model-Free Connectivity Estimators

AR model-based data-driven approaches typically assume linear interactions between signals. Specifically, in a linear framework, coupling is traditionally investigated by means of spectral coherence, partial coherence [16][21][67], correlation coefficient, and partial correlation coefficient [21]. On the other hand, different measures have been introduced for studying causal interactions, such as directed transfer function [18], directed coherence [14][15], partial directed coherence [16][17], and Granger causality [12][68]. Conversely, more general approaches, such as mutual information [60][69] and transfer entropy [13][70], can investigate non-linear dependencies between the recorded signals, starting from the definition of entropy given by Shannon [69] and based on the estimation of probability distributions of the observed data. Importantly, under the Gaussian assumption [19], model-free and model-based measures converge and can be inferred from the linear parametric representation of multivariate vector autoregressive (VAR) models [12][20][24].

Constituting the most employed metrics, linear model-based approaches are sufficient for identifying the wide range of oscillatory interactions that take place under the hypothesis of oscillatory phase coupling [6]. Linear-model-based approaches allow the frequency domain representations of multiple interactions in terms of transfer functions, partial coherence, and partial power spectrum decomposition [6][21]. This feature is extremely helpful in the study of brain signals that usually exhibit oscillatory components in well-known frequency bands, resulting from the activity of neural circuits operating as a network [71].

3.2. Time-Domain vs. Frequency-Domain Connectivity Estimators

It is important to distinguish between time- and frequency-domain techniques, as the latter reveal connectivity mechanisms related to the brain rhythms that operate within specific frequency bands [21][39]. While approaches such as correlation, mutual information, Granger causality, and transfer entropy are linked to a time-domain representation of the data, others, such as coherence, directed transfer function, directed coherence, and partial directed coherence, assume that the acquired data are rich in individual rhythmic components and exploit frequency-domain representations of the investigated signals. Although this can be achieved through the application of non-parametric techniques (Fourier decomposition, wavelet analysis, Hilbert transformation after band-pass filtering [72]), parametric AR models have gained great popularity, allowing one to evaluate brain interactions within specific spectral bands with physiological meanings [21]. Furthermore, time-frequency analysis approaches, which simultaneously extract spectral and temporal information [73], have been extensively used to study changes in EEG connectivity in the time-frequency domain [74][75][76], and in combination with deep learning approaches for the automatic detection of schizophrenia [77] and K-nearest neighbor classifiers for monitoring the depth of anesthesia during surgery [78].

4. Functional Connectivity Estimation Approaches

4.1. Time-Domain Approaches

Several time-domain approaches devoted to the study of FC have been developed throughout the years. Although phase-synchronization measures, such as the phase locking value [79], and other model-free approaches [80] are still widely used in brain-connectivity analysis, linear methods are easier to use and sufficient to capture brain interactions taking place under the hypothesis that neuronal interactions are governed by oscillatory phase coupling [6].

In a linear framework, ergodicity, Gaussianity, and wide-sense stationarity (WSS) conditions are typically assumed for the acquired data, meaning that the analyzed signals are stochastic processes with Gaussian properties and preserve their statistical properties as a function of time. These assumptions are made, often implicitly, as prerequisites for the analysis, in order to assure that the linear description is exhaustive and the measures can be safely computed from a single realization of the analyzed process. Under these assumptions, the dynamic interactions between a realization of $M$ Gaussian stochastic processes (e.g., $M$ EEG signals recorded at different electrodes) can be studied in terms of time-lagged correlations. In the time domain, the analysis is performed via a linear parametric approach grounded on the classical vector AR (VAR) model description of a discrete-time, zero-mean, stationary multivariate stochastic Markov process, $S=[X_1 \cdots X_M]^{\top}$. Considering the time step $n$ as the current time, the dynamics of $S$ can be completely described by the VAR model [21][24]:

$$S_n = \sum_{k=1}^{p} A_k S_{n-k} + U_n \qquad (1)$$

where $S_n=[X_{1,n} \cdots X_{M,n}]^{\top}$ is the vector describing the present state of $S$, and $S_n^p=[S_{n-1}^{\top} \cdots S_{n-p}^{\top}]^{\top}$ describes its past states until lag $p$, which is the model order defining the maximum lag used to quantify interactions; $A_k$ is the $M \times M$ coefficient matrix quantifying the time-lagged interactions within and between the $M$ processes at lag $k$; and $U_n$ is the sample at time $n$ of $U$, an $M \times 1$ vector of uncorrelated white noise with an $M \times M$ covariance matrix $\Sigma=\mathrm{diag}(\sigma_{11}^2,\ldots,\sigma_{MM}^2)$. Multivariate methods based on VAR models as in (1) depend on the reliability of the fitted model, and especially on the model order. While model orders that are too low can provide inadequate representations of the signal, orders higher than strictly needed tend to overrepresent the oscillatory content of the process and drastically increase noise [81]. One should therefore pay attention to the procedure for selecting the optimum model order, which can be set according to different criteria, such as the Akaike information criterion (AIC) [82] or the Bayesian information criterion (BIC) [83].
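As an illustration of the model-fitting and order-selection steps described above, the following is a minimal sketch and not the method of any specific toolbox. It assumes ordinary least squares as the estimator and one common multivariate form of the AIC and BIC; the function names `fit_var` and `select_order` and the simulated coefficients are hypothetical.

```python
import numpy as np


def fit_var(data, p):
    """Least-squares fit of S_n = sum_k A_k S_{n-k} + U_n (assumed estimator).

    data: array of shape (N samples, M channels), assumed zero-mean.
    Returns A with shape (p, M, M) and the residual covariance (M, M).
    """
    N, M = data.shape
    # Row n of `past` holds [S_{n-1}, ..., S_{n-p}] side by side.
    past = np.hstack([data[p - k:N - k] for k in range(1, p + 1)])
    present = data[p:]
    coef, *_ = np.linalg.lstsq(past, present, rcond=None)  # shape (p*M, M)
    residuals = present - past @ coef
    sigma = residuals.T @ residuals / (N - p)
    # Block k of `coef` is A_k transposed, so transpose each block back.
    A = coef.reshape(p, M, M).transpose(0, 2, 1)
    return A, sigma


def select_order(data, max_p, criterion="bic"):
    """Return the order in 1..max_p minimizing AIC or BIC (one common form)."""
    N, M = data.shape
    scores = {}
    for p in range(1, max_p + 1):
        _, sigma = fit_var(data, p)
        n_eff = N - p
        penalty = 2.0 if criterion == "aic" else np.log(n_eff)
        scores[p] = np.log(np.linalg.det(sigma)) + penalty * p * M * M / n_eff
    return min(scores, key=scores.get), scores


# Toy check on a simulated 2-channel VAR(2) process (made-up coefficients).
rng = np.random.default_rng(0)
A_true = np.array([[[0.5, 0.0], [0.4, 0.3]],
                   [[-0.3, 0.0], [0.0, -0.2]]])
N = 5000
data = np.zeros((N, 2))
for n in range(2, N):
    data[n] = A_true[0] @ data[n - 1] + A_true[1] @ data[n - 2] + rng.standard_normal(2)

A_hat, sigma_hat = fit_var(data, p=2)
best_p, _ = select_order(data, max_p=6, criterion="bic")
```

On real EEG the data would first be de-meaned and checked for stationarity, and the selected order should be treated as a starting point rather than a definitive choice.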

It should be noted that, in multichannel recordings such as with EEG data, the analysis can be multivariate, which means taking all the channels into account and fitting a full VAR model, as in (1), or it can be done by considering each channel pair separately, which means fitting a bivariate AR model (2AR) in the form of (1) with M=2:

$$Z_n = \sum_{k=1}^{p} B_k Z_{n-k} + W_n \qquad (2)$$

where $Z=[X_i\; X_j]^{\top}$, $i,j=1,\ldots,M$ ($i \neq j$), is the bivariate process containing the investigated channel pair, with $Z_n=[X_{i,n}\; X_{j,n}]^{\top}$ and $Z_n^p=[Z_{n-1}^{\top} \cdots Z_{n-p}^{\top}]^{\top}$ describing, respectively, the present and $p$ past states of $Z$; $B_k$ is the $2 \times 2$ coefficient matrix quantifying the time-lagged interactions within and between the two processes at lag $k$, and $W_n$ is the sample at time $n$ of $W$, a $2 \times 1$ vector of uncorrelated white noises with $2 \times 2$ covariance matrix $\Lambda$. The pairwise (bivariate) approach typically provides more stable results, since it involves fitting fewer parameters, but it leads to a loss of information because only a pair of time series is taken into account [84]. Indeed, since there are various situations that provide significant estimates of connectivity in the absence of true interactions (e.g., cascade interactions or common inputs) [21][84], the core issue becomes whether the estimate of pairwise connectivity reflects a true direct connection between the two investigated signals or is the result of spurious dynamics between multiple time series. To answer this question, it is recommended to take into account the information from all channels when estimating the interaction terms between any pair of time series. Although it comes at the expense of increased model complexity and a more difficult model identification process, moving from a pairwise to a multivariate approach can significantly increase the accuracy of the reconstructed connectivity patterns. This allows one to distinguish direct from indirect interactions through the use of extended formulations obtained through partialization or conditioning procedures [16][21][85].

4.2. Frequency-Domain Approaches

To examine oscillatory neuronal interactions and identify the individual rhythmic components in the measured data, representations of connectivity in the frequency domain are often desirable. The transformation from the time domain to the frequency domain can be carried out by exploiting parametric (model-based) or non-parametric (model-free) approaches. Non-parametric signal-representation techniques are mostly based on the definition of the power spectral density (PSD) matrix of the process as the Fourier transform (FT) of $R_k$ (the correlation matrix of the process at lag $k$), on the wavelet transformation (WT) of data, or on Hilbert transformation following band-pass filtering [72]. In general, they bypass two issues: whether linear AR models can correctly interpret neurophysiological data, and how to select the optimum model order. The latter choice can be problematic, especially with brain data, because it strongly depends on the experimental task, the quality of the data and the model estimation technique [86]. However, the non-parametric spectral approach is somewhat less robust compared to parametric estimates, since it can be characterized by lower spectral resolution; e.g., it has been shown to be less efficient in discriminating epileptic occurrences in EEG data [87].

On the other hand, parametric approaches exploit the frequency-domain representation of the VAR model, in the multivariate (1) or in the bivariate (2) case, which means computing the model coefficients in the Z-domain and then evaluating the model transfer function $H(\omega)$ on the unit circle of the complex plane, where $\omega = 2\pi f / f_s$ is the normalized angular frequency and $f_s$ is the sampling frequency [21]. The $M \times M$ PSD matrix, denoted here as $P(\omega)$, can then be computed using spectral factorization as

$$P(\omega) = H(\omega)\,\Sigma\,H^{*}(\omega)$$

where * stands for the Hermitian transpose [21]; note that $\Sigma$ is replaced by $\Lambda$ in the case of (2), i.e., when $M=2$. It is worth noting that, while the frequency-domain descriptions ubiquitously used are based on the VAR model representation, their key element is actually the spectral factorization theorem reported above, and that approaches other than VAR models can be used to derive frequency-domain connectivity measures [88].
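The parametric route from VAR coefficients to spectra can be sketched as follows. This is an illustrative example only: it assumes the standard form of the transfer function, $H(\omega) = [I - \sum_{k} A_k e^{-j\omega k}]^{-1}$, which is not written out in the text above, and the function names and the VAR(1) coefficients are hypothetical.

```python
import numpy as np


def var_spectra(A, sigma, freqs, fs):
    """Transfer function and PSD matrix of a VAR model at the given frequencies.

    A: (p, M, M) coefficient matrices, sigma: (M, M) noise covariance,
    freqs: frequencies in Hz, fs: sampling frequency in Hz.
    Returns H and P, both of shape (len(freqs), M, M).
    """
    p, M, _ = A.shape
    lags = np.arange(1, p + 1)
    H = np.empty((len(freqs), M, M), dtype=complex)
    P = np.empty_like(H)
    for i, f in enumerate(freqs):
        omega = 2 * np.pi * f / fs  # normalized angular frequency
        # Assumed standard form: A(omega) = I - sum_k A_k exp(-j*omega*k)
        a_omega = np.eye(M) - np.tensordot(np.exp(-1j * omega * lags), A, axes=1)
        H[i] = np.linalg.inv(a_omega)
        P[i] = H[i] @ sigma @ H[i].conj().T  # spectral factorization
    return H, P


def coherence(P):
    """Magnitude-squared coherence |P_ij|^2 / (P_ii * P_jj) at each frequency."""
    power = np.real(np.einsum("fii->fi", P))
    return np.abs(P) ** 2 / (power[:, :, None] * power[:, None, :])


# Hypothetical VAR(1) in which channel 0 drives channel 1.
A = np.array([[[0.5, 0.0], [0.4, 0.3]]])
sigma = np.eye(2)
fs = 128.0
freqs = np.linspace(0.0, fs / 2, 65)
H, P = var_spectra(A, sigma, freqs, fs)
coh = coherence(P)
```

Coherence is symmetric and therefore does not reveal direction; directed measures such as the directed transfer function or partial directed coherence are built from the same ingredients but are not shown here.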

4.3. Information-Domain Approaches

The statistical dependencies among electrophysiological signals can be evaluated using information theory. Concepts of mutual information, mutual information rate, and information transfer are widely used to assess the information exchanged between two interdependent systems [60][69], the dynamic interdependence between two systems per unit of time [22][23], and the dynamic information transferred to the target from the other connected systems [13][70], respectively. The main advantage of these approaches lies in the fact that they are probabilistic and can thus be stated in a fully model-free formulation. On the other hand, their practical assessment in the information domain is not straightforward because it requires the estimation of high-dimensional probability distributions [89], which becomes more and more difficult as the number of network nodes increases in multichannel EEG recordings. Nevertheless, information-based metrics can also be expressed in terms of predictability improvement, such that their computation can rely on linear parametric AR models, where the concepts of prediction error and conditional entropy, Granger causality (GC) and information transfer, or total dependence (TD) and mutual information rate, have been linked to each other [19][20][90][91]. Indeed, it has been demonstrated that, under the hypothesis of Gaussianity, predictability improvement and information-based indexes are equivalent [19]. Based on the knowledge that stationary Gaussian processes are fully described in terms of linear regression models, a central result is that for Gaussian data, all the information measures can be computed straightforwardly from the variances of the innovation processes of full and restricted AR models [19].
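The last result can be made concrete with a small bivariate example. The sketch below assumes ordinary least squares fitting and uses the log-ratio of residual (innovation) variances of the restricted and full AR models as the Granger causality estimate; the helper names and the simulated coupling are hypothetical and serve only as an illustration.

```python
import numpy as np


def lagged(x, p):
    """Matrix whose row for time n holds [x_{n-1}, ..., x_{n-p}], n = p..N-1."""
    N = len(x)
    return np.column_stack([x[p - k:N - k] for k in range(1, p + 1)])


def residual_variance(target, regressors):
    """Variance of the least-squares prediction error of target."""
    coef, *_ = np.linalg.lstsq(regressors, target, rcond=None)
    return np.var(target - regressors @ coef)


def granger_causality(x_i, x_j, p):
    """GC from x_j to x_i: ln(restricted variance / full variance).

    Restricted model: x_i predicted from its own past only.
    Full model: x_i predicted from the past of both x_i and x_j.
    For Gaussian data, transfer entropy equals half of this value [19].
    """
    target = x_i[p:]
    restricted = residual_variance(target, lagged(x_i, p))
    full = residual_variance(target, np.hstack([lagged(x_i, p), lagged(x_j, p)]))
    return np.log(restricted / full)


# Simulated pair (made-up coefficients): x1 drives x2, not the other way round.
rng = np.random.default_rng(1)
N = 5000
x1 = np.zeros(N)
x2 = np.zeros(N)
for n in range(1, N):
    x1[n] = 0.5 * x1[n - 1] + rng.standard_normal()
    x2[n] = 0.3 * x2[n - 1] + 0.4 * x1[n - 1] + rng.standard_normal()

gc_1_to_2 = granger_causality(x2, x1, p=2)
gc_2_to_1 = granger_causality(x1, x2, p=2)
```

In this toy case `gc_1_to_2` is clearly positive while `gc_2_to_1` stays close to zero. A real analysis would add a statistical test (e.g., surrogate data) before interpreting any value as a connection.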

4.4. Other Connectivity Estimators

Brain connectivity can be estimated through a large number of analyses applied to EEG data. Multivariate time-series analysis has traditionally relied on the use of linear methods in the time and frequency domains. Nevertheless, these methods are insufficient for capturing non-linear features in signals, especially in neurophysiological data where non-linearity is a characteristic of neuronal activity [80]. This has driven the exploration of alternative techniques that are not limited by this constraint [80][92]. Moreover, the utilization of AR models with constant parameters, and the underlying hypotheses of Gaussianity and WSS of the data, can be key limitations when stationarity is not verified [93]. A number of approaches have been developed to overcome this issue, providing time-varying extensions of linear model-based connectivity estimators using adaptive AR models with time-resolved parameters, in which the AR parameters are functions of time [94][95][96].

5. EEG Acquisition and Pre-Processing

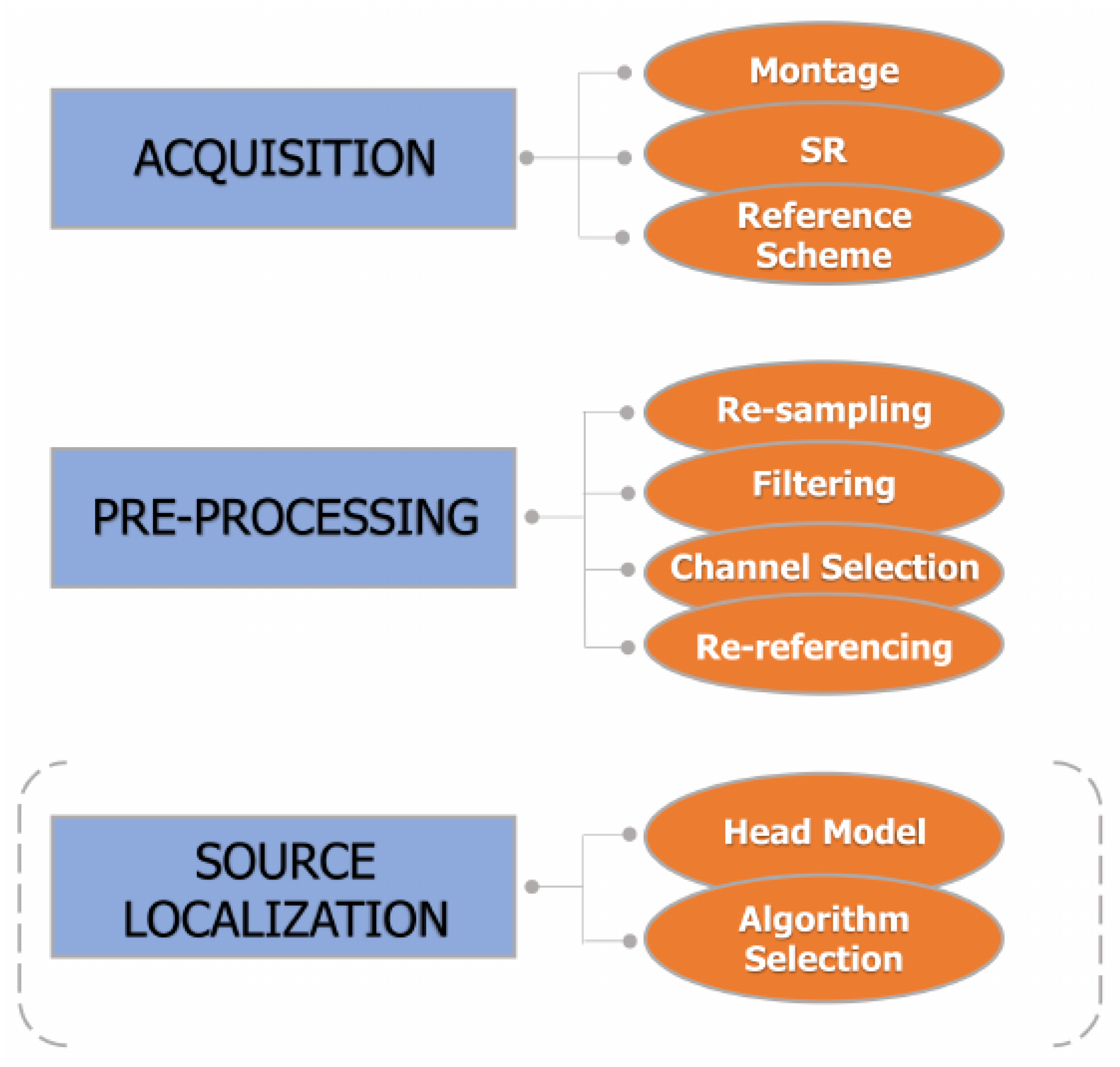

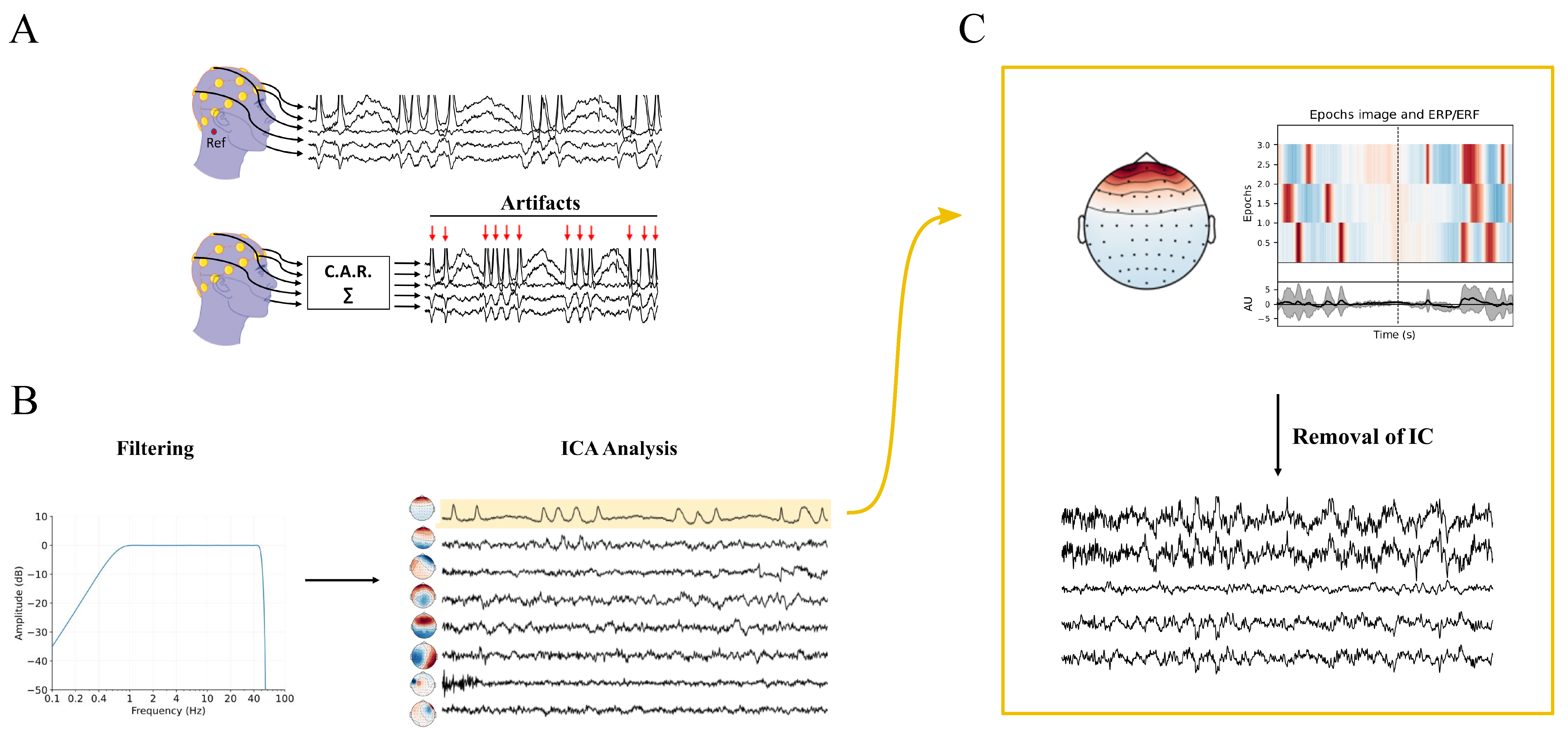

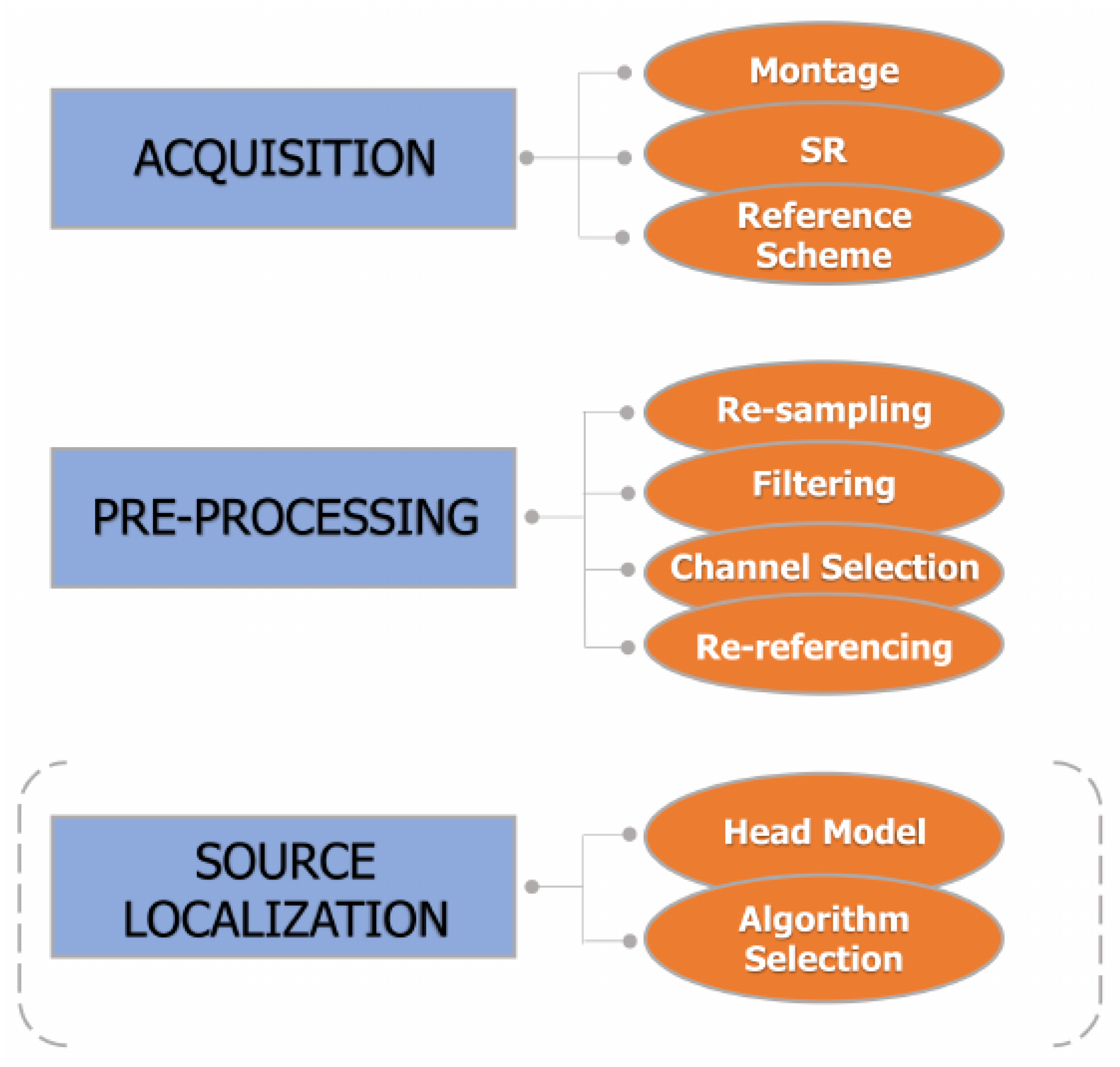

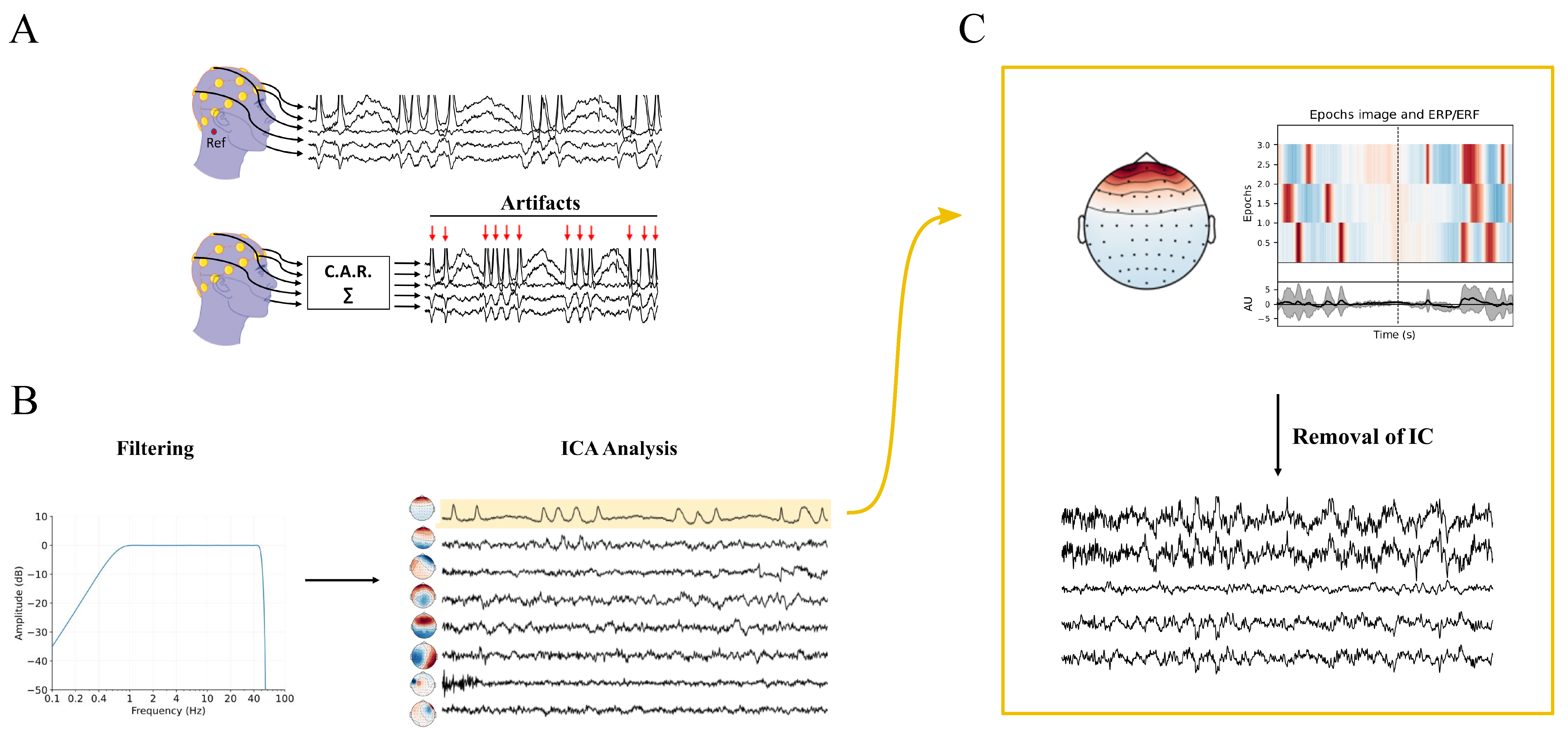

The acquisition and conditioning of the EEG signal represent two important aspects with effects on the entire subsequent processing chain. The main steps of acquisition and pre-processing are indicated in Figure 1. An example of application of the pre-processing pipeline to experimental EEG is shown in Figure 2.

Figure 1. Main steps in EEG acquisition and pre-processing. In general, source localization is not mandatory, as represented by the dashed round brackets.

Figure 2. Schematic representation of the pre-processing pipeline applied to EEG signals acquired on the scalp (s253 recording of subject 2, which can be found at https://eeglab.org/tutorials/10_Group_analysis/study_creation.html#description-of-the-5-subject-experiment-tutorial-data, accessed on 15 February 2023). (A) Unipolar EEG signals are acquired using a mastoid reference (Ref, in red). For clarity, only a limited number of the recorded signals, among the original 30 channels, are plotted. The average re-referencing process and the pre-processed signals are illustrated below. Notably, red arrows indicate clearly visible blinking artifacts. (B) The re-referenced signals are filtered using a 1–45 Hz zero-phase band-pass filter, followed by independent component analysis (ICA) to extract eight independent components (ICs), shown on the right. (C) The first IC, suspected to be an artifact, is analyzed, with a scalp-shaped heatmap assessing its localization in the frontal area and its temporal coincidence with the artifacts shown in panel (A). After removing the first IC, the cleaned signal is plotted at the bottom of the panel.

Sampling frequency, the number of electrodes, and their positioning each play an important role in assessing connectivity: by the Nyquist–Shannon sampling theorem [97], a sampling frequency that is too low cannot be used to analyze high-frequency bands, and the electrode density determines the accuracy and reliability of all further processing [40].

An EEG signal is a temporal sequence of electric potential values. The potential is measured with respect to a reference, which should be ideally the potential at a point at infinity (thus, with a stable zero value). In practice, an ideal reference cannot be found, and any specific choice will affect the evaluated connectivity [6][98]. Unfortunately, as for most of the FC analysis pipeline, there is no gold standard for referencing, and this is clearly a problem for cross-study comparability [99].

5.1. Resampling

Nowadays, EEG signals are usually acquired with a sampling rate (SR) of 128 Hz or higher, since this value is the lowest power of two that permits one to capture most of the information content of the EEG signal and a large part of the γ band. It is worth noting that high-frequency oscillations (HFOs), such as ripples (80–200 Hz) and fast ripples (200–500 Hz), will not be captured at SRs close to this minimum [100][101][102][103]. Moreover, some studies suggest that low SRs affect the correlation dimension and, in general, non-linear metrics [104][105].
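The constraint imposed by the sampling theorem can be checked with simple arithmetic: a band is fully representable only if its upper edge lies below half the sampling rate. The short sketch below uses the HFO band limits quoted above; the function name and the candidate sampling rates are hypothetical choices for illustration.

```python
# Band limits in Hz, taken from the text above.
BANDS_HZ = {"ripples": (80.0, 200.0), "fast ripples": (200.0, 500.0)}


def fully_captured(band, sampling_rate_hz):
    """True if the upper edge of the band lies below the Nyquist frequency."""
    nyquist_hz = sampling_rate_hz / 2.0
    return band[1] < nyquist_hz


# Which bands are representable at a few example sampling rates?
report = {
    sr: {name: fully_captured(band, sr) for name, band in BANDS_HZ.items()}
    for sr in (128.0, 512.0, 1024.0)
}
```

In practice the anti-aliasing filter of the amplifier needs some margin below the Nyquist frequency, so the usable bandwidth is somewhat narrower than this check suggests.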

5.2. Filtering and Artifact Rejection

Filtering EEG is a necessary step for the FC analysis pipeline, not only to extract the principal EEG frequency waves, but particularly to reduce the amounts of noise and artifacts present in the signal and to enhance its SNR. Research suggests the use of finite impulse response (FIR) causal filters that could be used also for real-time (RT) applications, or infinite impulse response (IIR) filters, which are less demanding in terms of filter orders but distort phase, unless they are applied with reverse filtering, thereby making the process non-causal and not applicable for RT applications. In general, if sharp cut-offs are not needed for the analysis, FIR filters are recommended, since they are always stable and easier to control [106].
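The phase distortion of a causal IIR filter, and its removal by reverse (forward-backward) filtering, can be illustrated with a toy example. The sketch below assumes a hand-written single-pole low-pass filter chosen only for brevity, not a filter recommended for EEG; the function names and the test signal are hypothetical. Library routines for forward-backward filtering, such as `scipy.signal.filtfilt`, follow the same principle.

```python
import numpy as np


def one_pole_lowpass(x, alpha):
    """Causal single-pole IIR filter: y[n] = alpha*x[n] + (1 - alpha)*y[n-1]."""
    y = np.empty(len(x), dtype=float)
    state = 0.0
    for n, sample in enumerate(x):
        state = alpha * sample + (1.0 - alpha) * state
        y[n] = state
    return y


def zero_phase_lowpass(x, alpha):
    """Forward-backward filtering: the second, time-reversed pass cancels the
    phase shift of the first one, at the cost of being non-causal."""
    forward = one_pole_lowpass(x, alpha)
    return one_pole_lowpass(forward[::-1], alpha)[::-1]


# A smooth bump centred on sample 500 stands in for an EEG transient.
t = np.arange(1000)
bump = np.exp(-0.5 * ((t - 500) / 20.0) ** 2)

causal_peak = int(np.argmax(one_pole_lowpass(bump, alpha=0.1)))
zero_phase_peak = int(np.argmax(zero_phase_lowpass(bump, alpha=0.1)))
```

The causal output peaks later than the input, whereas the forward-backward output peaks at the original sample. Because the backward pass needs future samples, this approach cannot be used in real-time applications.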

If one is interested in investigating FC in the γ band, electrical line noise at 50 or 60 Hz could be a problem, since it is not fully removable with a low-pass filter. Notch filters are basically band-stop filters with a very narrow transition band in the frequency domain, which in turn leads to an inevitable distortion of the signal in the time domain, such as smearing artifacts [106]. To avoid this problem, some alternatives have been developed. A discrete Fourier transform (DFT) filter subtracts from the signal an estimate of the interference, obtained by fitting the signal with a combination of a sine and a cosine at the interference frequency. It avoids potential distortions of components outside the power-line frequency, but it assumes that the power-line noise has constant power in the analyzed signal segment. As this hypothesis is not strictly verified in practice, it is recommended to apply the DFT technique to short data segments (1 s or less).
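The DFT filter described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the sine and cosine amplitudes are fitted by least squares within each segment, the line frequency is known exactly, and the function name `dft_filter` and the synthetic test signal are hypothetical.

```python
import numpy as np


def dft_filter(x, fs, line_freq=50.0, segment_s=1.0):
    """Remove a sinusoidal interference by segment-wise sine/cosine fitting.

    x: 1-D signal, fs: sampling rate in Hz, line_freq: interference frequency
    in Hz, segment_s: segment length in seconds (short, as the interference
    amplitude is assumed constant only within a segment).
    """
    x = np.asarray(x, dtype=float)
    cleaned = x.copy()
    seg_len = int(round(segment_s * fs))
    for start in range(0, len(x), seg_len):
        seg = x[start:start + seg_len]
        if len(seg) < 3:  # too short to fit two amplitudes meaningfully
            continue
        t = np.arange(start, start + len(seg)) / fs
        basis = np.column_stack([np.sin(2 * np.pi * line_freq * t),
                                 np.cos(2 * np.pi * line_freq * t)])
        amplitudes, *_ = np.linalg.lstsq(basis, seg, rcond=None)
        cleaned[start:start + len(seg)] = seg - basis @ amplitudes
    return cleaned


# Synthetic example: a 10 Hz rhythm contaminated by 50 Hz line noise.
fs = 250.0
t = np.arange(int(4 * fs)) / fs
rhythm = np.sin(2 * np.pi * 10.0 * t)
contaminated = rhythm + 2.0 * np.sin(2 * np.pi * 50.0 * t + 0.7)
cleaned = dft_filter(contaminated, fs, line_freq=50.0, segment_s=1.0)
```

In this idealized case the 10 Hz rhythm is recovered almost exactly, because both sinusoids complete an integer number of cycles in each segment. With real recordings, broadband activity near the line frequency leaks into the fit, which is one reason to keep segments short and the assumption of constant interference power in mind.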

Another proposed technique is CleanLine, a regression-based method that makes use of a sliding window and multitapers to transform data from the time domain to the frequency domain, thereby estimating the characteristics of the power-line noise signal with a regression model and subtracting it from the data [107]. This method eliminates only the deterministic line components, which is optimal, since the EEG signal is a stochastic process, but it may fail in the presence of strong non-stationary artifacts [108].

EEG signals are commonly corrupted by many types of artifacts, defined as any undesired signal that affects the EEG signal and does not originate from neural activity. The generators of these undesired signals can be physiological, such as ocular artifacts (OAs such as eye blinks, saccades, and rapid eye movements), head or facial movements, muscle contraction, or cardiac activity [109][110]. Power-line noise, electrode movements (due to improperly connected electrodes), and interference with other electrical instrumentation are non-physiological artifacts [111]. Artifact management is crucial in the analysis of connectivity. In fact, the presence of artifacts in multiple electrodes can result in an overestimation of brain connectivity, skewing the results [112][113].

The most effective way of dealing with artifacts is prevention during acquisition, so as to avoid their presence as much as possible, for example, by making acquisitions in a controlled environment, double-checking the correct positioning of electrodes, or instructing the patients to avoid blinking at certain moments of the acquisition. In fact, eye blinks are by far the most common source of artifacts in the EEG signal, especially in the frontal electrodes [114][115]. They appear as rapid, high-amplitude waveforms present in many channels, whose magnitudes decrease exponentially from frontal to occipital electrodes. Saccadic movements also produce artifacts, generating an electrooculographic (EOG) signal. Acquiring this signal concurrently with the EEG signal is known to be a great advantage in identifying and removing ocular artifacts, since vertical (VEOG), horizontal (HEOG), and radial (REOG) signals diffuse in different ways through the scalp [116].

Once the parts of the signals corrupted by the artifacts have been identified, it is still common practice to eliminate these portions, avoiding alterations on the signal that could lead to spurious connectivity. As for channel rejection, however, it is preferable to retain as much information as possible [117].

5.3. Bad Channel Identification, Rejection, and Interpolation

Some EEG channels may present a high number of artifacts (eye blinks, muscular noise, etc.) or noise due to bad electrode-scalp contact. In these cases, rejecting these channels could be an option. However, it is necessary to check that the deleted channels are not essential and that the remaining channels are sufficient to carry on the analysis, considering also that deleting channels results in a substantial loss of information that usually cannot be recovered. Some authors have discussed the criteria for the detection of bad channels and suggested considering the proportion of bad channels with respect to the total to assess the quality of the dataset (for example, imposing a maximum of 5%) [118].

Identification of bad channels can be performed visually or automatically. Visual inspection requires some experience with EEG signals to decide whether a channel is actually not recoverable and needs to be rejected [119][120], and this process is highly subjective. Automatic detection of bad channels can be performed in various ways. A channel correlation method computes the Pearson correlation between each pair of channels and flags the channels whose correlations diverge from the distribution observed across the other pairs. This method assumes high correlations between channels due to the volume-conduction effect typical of the EEG signal, which is discussed in detail in the source localization section. A high standard deviation of an EEG signal can indicate the presence of a large amount of noise, so by setting a proper threshold, the standard deviation can be a useful index for identifying bad channels.
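The two automatic criteria above can be sketched as follows. This is only an illustration: the robust z-score based on the median absolute deviation, the threshold values, the function names, and the synthetic data are all assumptions made for the example, not values recommended by the cited studies.

```python
import numpy as np


def bad_channels_by_std(eeg, z_thresh=5.0):
    """Flag channels whose standard deviation is unusually high.

    eeg: array of shape (channels, samples). The robust z-score (median and
    median absolute deviation across channels) is an assumed design choice.
    """
    sd = eeg.std(axis=1)
    med = np.median(sd)
    mad = np.median(np.abs(sd - med))
    z = (sd - med) / (1.4826 * mad)
    return np.flatnonzero(z > z_thresh)


def bad_channels_by_correlation(eeg, min_corr=0.4):
    """Flag channels that correlate poorly with the rest of the montage."""
    corr = np.corrcoef(eeg)
    np.fill_diagonal(corr, np.nan)  # ignore self-correlation
    typical = np.nanmedian(np.abs(corr), axis=1)
    return np.flatnonzero(typical < min_corr)


# Synthetic montage: 8 channels sharing a common component (a crude stand-in
# for volume conduction); channel 3 is replaced by high-amplitude noise.
rng = np.random.default_rng(2)
common = rng.standard_normal(2000)
eeg = common + 0.3 * rng.standard_normal((8, 2000))
eeg[3] = 10.0 * rng.standard_normal(2000)

bad_std = bad_channels_by_std(eeg)
bad_corr = bad_channels_by_correlation(eeg)
```

Both criteria flag channel 3 in this toy montage. On real data the thresholds need tuning, and flagged channels are usually confirmed by visual inspection before rejection or interpolation.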

5.4. Re-Referencing

As described in the previous section, many decisions are made during the acquisition of the signals; however, some of them are reversible. Re-referencing and down-sampling are among them. Re-referencing consists of changing the (common) reference of each EEG channel into another one by adding the same value to every channel (the value is fixed in space, i.e., common to all channels, but in general not constant in time).

Re-referencing with respect to a new reference channel can be performed by simply subtracting the new reference from each channel. In most cases, the common average reference (CAR) is considered to be a good choice, especially if the electrodes cover a large portion of the scalp, under the assumption that the algebraic sum of currents must be zero in the case of a uniform density of sources [121]. It consists of referencing all the electrode signals with respect to a virtual reference that is the arithmetic mean of all the channel signals. Due to charge conservation, the integral of the potential over a closed surface surrounding the neural sources is zero. However, the EEG channels give only a discrete sampling of a portion of that surface, so the virtual reference can be assumed to be only approximately zero. Nevertheless, it can be much better than using a single location as the reference, such as an EEG channel or the mastoid (both LM and LR), which have been shown to generate larger distortion than the average reference [112].
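Both re-referencing schemes reduce to a subtraction along the channel axis. The following minimal sketch assumes the data are stored as a (channels, samples) array; the function names and the synthetic signals are hypothetical.

```python
import numpy as np


def average_reference(eeg):
    """Common average reference: subtract the mean over channels at each sample."""
    return eeg - eeg.mean(axis=0, keepdims=True)


def rereference_to_channel(eeg, ref_idx):
    """Re-reference to one recorded channel by subtracting it from all channels."""
    return eeg - eeg[ref_idx]


# Synthetic recording (6 channels) and the same recording seen from a different
# original reference, i.e. with one time-varying signal added to every channel.
rng = np.random.default_rng(3)
eeg = rng.standard_normal((6, 500))
reference_shift = rng.standard_normal(500)
eeg_other_ref = eeg + reference_shift

car = average_reference(eeg)
car_other_ref = average_reference(eeg_other_ref)
to_channel_2 = rereference_to_channel(eeg, ref_idx=2)
```

The average-referenced data are identical for `eeg` and `eeg_other_ref`, which shows why the original reference choice is reversible: a value common to all channels, even if it varies in time, is removed by the subtraction.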

6. Source Connectivity Analysis

Measuring information dynamics from scalp EEG signals is not straightforward because of volume conduction, which can modify or obscure the patterns of information flow across EEG electrodes [122]. This effect is due to the electrical conductance of the skull, which acts as a medium that spreads neural activity in all directions; for this reason, it is also known as the field spread problem. The neural current sources are related to the electrical potential through the Poisson equation; the potential spreads across the scalp and can be measured by many electrodes, even those quite far from the original source. Scalp-level connectivity must therefore be interpreted with caution: the FC estimated between two electrodes could reflect the activation of a single brain region rather than two functionally connected regions. Even though this effect can be compensated for when working with scalp EEG signals [122][123], source signal reconstruction is often recommended, because the source-based network representation is considered a more accurate approximation of the real neural network structure [124].
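A toy simulation can make the single-source scenario concrete. In the sketch below, one simulated source is projected onto two electrodes with different gains plus independent sensor noise; the gains, the noise level, and the function name are arbitrary assumptions made for illustration and do not come from a realistic head model.

```python
import numpy as np

def simulate_two_electrodes(gains=(1.0, 0.6), noise_std=0.2,
                            n_samples=5000, seed=0):
    """Project ONE source onto two electrodes (hypothetical mixing weights)."""
    rng = np.random.default_rng(seed)
    source = rng.standard_normal(n_samples)
    noise = noise_std * rng.standard_normal((2, n_samples))
    # Each electrode sees a scaled copy of the same source plus its own noise.
    return np.asarray(gains)[:, None] * source + noise

electrodes = simulate_two_electrodes()
r = np.corrcoef(electrodes)[0, 1]
print(f"zero-lag correlation between the two electrodes: {r:.2f}")
```

Although only one region is active, the two electrode signals are strongly correlated, which a naive scalp-level analysis could misread as a functional connection between two distinct regions.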

The general pipeline followed by source localization algorithms is based on the following two-step loop [125]:

- Forward problem: definition of a set of sources and their characteristics and simulation of the signal that would be measured (i.e., the potential on the scalp) knowing the physical characteristics of the medium that makes it diffuse;

- Inverse problem: comparison of the signal generated by the head model with the actual measured EEG and adjustment of the parameters of the source model to make them as similar as possible.

The first part, also known as the estimation problem, can be carried out by defining a proper head model using boundary element models (BEMs) or finite element models (FEMs). The second part of the pipeline, also called the appraisal problem, is far from trivial and is one of the fundamental challenges in EEG analysis. It is formally an ill-posed inverse problem, in which an infinite number of source combinations can explain the acquired signal [126].
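The two steps and the non-uniqueness of the inverse solution can be illustrated with a linear toy model. The sketch below makes several assumptions that are not part of the text above: the head model is replaced by a random lead field matrix (a real one would come from a BEM or FEM head model), the sources are linearly mixed without noise, and the inverse step uses a Tikhonov-regularized minimum-norm estimate, which is only one of many possible inverse methods. All function names are hypothetical.

```python
import numpy as np

def forward(leadfield, sources):
    """Forward problem: scalp potentials produced by a given source vector."""
    return leadfield @ sources

def minimum_norm_inverse(leadfield, scalp, reg=1e-6):
    """Inverse problem (one possible choice): regularized minimum-norm
    estimate, s_hat = L^T (L L^T + reg * I)^(-1) x."""
    n_channels = leadfield.shape[0]
    gram = leadfield @ leadfield.T + reg * np.eye(n_channels)
    return leadfield.T @ np.linalg.solve(gram, scalp)

rng = np.random.default_rng(0)
n_channels, n_sources = 8, 50
leadfield = rng.standard_normal((n_channels, n_sources))  # stand-in head model

true_sources = np.zeros(n_sources)
true_sources[[4, 20]] = [1.0, -0.5]           # two active sources
scalp = forward(leadfield, true_sources)

estimate = minimum_norm_inverse(leadfield, scalp)

# The estimate reproduces the measured potentials ...
print(np.allclose(forward(leadfield, estimate), scalp, atol=1e-4))
# ... but it is not the true source configuration.
print(np.allclose(estimate, true_sources, atol=1e-4))

# Ill-posedness: any vector in the null space of the lead field can be added
# to the sources without changing the scalp potentials.
_, _, vt = np.linalg.svd(leadfield)
silent = vt[-1]                               # a null-space direction
print(np.allclose(forward(leadfield, true_sources + silent), scalp))
```

With more sources than electrodes, infinitely many source configurations explain the same measurement, so any inverse method has to add a prior or constraint (here, minimum energy) to select one of them.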

This entry is adapted from the peer-reviewed paper 10.3390/bioengineering10030372

References

- Sporns, O. Structure and function of complex brain networks. Dialogues Clin. Neurosci. 2013, 15, 247–262.

- Craddock, R.; Tungaraza, R.; Milham, M. Connectomics and new approaches for analyzing human brain functional connectivity. Gigascience 2015, 4, 13.

- Sporns, O.; Bassett, D. Editorial: New Trends in Connectomics. Netw. Neurosci. 2018, 2, 125–127.

- Wang, H.E.; Bénar, C.G.; Quilichini, P.P.; Friston, K.J.; Jirsa, V.K.; Bernard, C. A systematic framework for functional connectivity measures. Front. Neurosci. 2014, 8, 405.

- He, B.; Astolfi, L.; Valdés-Sosa, P.A.; Marinazzo, D.; Palva, S.O.; Bénar, C.G.; Michel, C.M.; Koenig, T. Electrophysiological Brain Connectivity: Theory and Implementation. IEEE Trans. Biomed. Eng. 2019, 66, 2115–2137.

- Bastos, A.M.; Schoffelen, J.M. A tutorial review of functional connectivity analysis methods and their interpretational pitfalls. Front. Syst. Neurosci. 2016, 9, 175.

- Cao, J.; Zhao, Y.; Shan, X.; Wei, H.L.; Guo, Y.; Chen, L.; Erkoyuncu, J.A.; Sarrigiannis, P.G. Brain functional and effective connectivity based on electroencephalography recordings: A review. Hum. Brain Mapp. 2022, 43, 860–879.

- Goodfellow, M.; Andrzejak, R.; Masoller, C.; Lehnertz, K. What Models and Tools can Contribute to a Better Understanding of Brain Activity? Front. Netw. Physiol. 2022, 2, 907995.

- McIntosh, A.; Gonzalez-Lima, F. Network interactions among limbic cortices, basal forebrain, and cerebellum differentiate a tone conditioned as a Pavlovian excitor or inhibitor: Fluorodeoxyglucose mapping and covariance structural modeling. J. Neurophysiol. 1994, 72, 1717–1733.

- Friston, K.J.; Harrison, L.; Penny, W. Dynamic causal modelling. Neuroimage 2003, 19, 1273–1302.

- David, O.; Kiebel, S.J.; Harrison, L.M.; Mattout, J.; Kilner, J.M.; Friston, K.J. Dynamic causal modeling of evoked responses in EEG and MEG. NeuroImage 2006, 30, 1255–1272.

- Geweke, J. Measurement of linear dependence and feedback between multiple time series. J. Am. Stat. Assoc. 1982, 77, 304–313.

- Schreiber, T. Measuring information transfer. Phys. Rev. Lett. 2000, 85, 461.

- Saito, Y.; Harashima, H. Tracking of information within multichannel EEG record causal analysis in eeg. In Recent Advances in and Data Processing; Yamaguchi, N., Fujisawa, K., Eds.; Elsevier: Amsterdam, The Netherlands, 1981.

- Baccalá, L.A.; Sameshima, K.; Ballester, G.; Valle, A.C.d.; Yoshimoto, C.E.; Timo-Iaria, C. Studying the interaction between brain via direct coherence and Granger causality. Appl. Signal Process 1998, 5, 40–48.

- Baccalá, L.A.; Sameshima, K. Partial directed coherence: A new concept in neural structure determination. Biol. Cybern. 2001, 84, 463–474.

- Sameshima, K.; Baccalá, L.A. Using partial directed coherence to describe neuronal ensemble interactions. J. Neurosci. Methods 1999, 94, 93–103.

- Kaminski, M.J.; Blinowska, K.J. A new method of the description of the information flow in the brain structures. Biol. Cybern. 1991, 65, 203–210.

- Barnett, L.; Barrett, A.B.; Seth, A.K. Granger causality and transfer entropy are equivalent for Gaussian variables. Phys. Rev. Lett. 2009, 103, 238701.

- Barrett, A.B.; Barnett, L.; Seth, A.K. Multivariate Granger causality and generalized variance. Phys. Rev. E 2010, 81, 041907.

- Faes, L.; Erla, S.; Nollo, G. Measuring connectivity in linear multivariate processes: Definitions, interpretation, and practical analysis. Comput. Math. Methods Med. 2012, 2012, 140513.

- Gelfand, I.M.; IAglom, A. Calculation of the Amount of Information about a Random Function Contained in Another Such Function; American Mathematical Society Providence: Providence, RI, USA, 1959.

- Duncan, T.E. On the calculation of mutual information. SIAM J. Appl. Math. 1970, 19, 215–220.

- Chicharro, D. On the spectral formulation of Granger causality. Biol. Cybern. 2011, 105, 331–347.

- Rosas, F.E.; Mediano, P.A.; Gastpar, M.; Jensen, H.J. Quantifying high-order interdependencies via multivariate extensions of the mutual information. Phys. Rev. E 2019, 100, 032305.

- Stramaglia, S.; Scagliarini, T.; Daniels, B.C.; Marinazzo, D. Quantifying dynamical high-order interdependencies from the o-information: An application to neural spiking dynamics. Front. Physiol. 2021, 11, 595736.

- Faes, L.; Mijatovic, G.; Antonacci, Y.; Pernice, R.; Barà, C.; Sparacino, L.; Sammartino, M.; Porta, A.; Marinazzo, D.; Stramaglia, S. A New Framework for the Time-and Frequency-Domain Assessment of High-Order Interactions in Networks of Random Processes. IEEE Trans. Signal Process. 2022, 70, 5766–5777.

- Scagliarini, T.; Nuzzi, D.; Antonacci, Y.; Faes, L.; Rosas, F.E.; Marinazzo, D.; Stramaglia, S. Gradients of O-information: Low-order descriptors of high-order dependencies. Phys. Rev. Res. 2023, 5, 013025.

- Bassett, D.S.; Bullmore, E. Small-World Brain Networks. Neuroscientist 2006, 12, 512–523.

- Stam, C.J.; Reijneveld, J.C. Graph theoretical analysis of complex networks in the brain. Nonlinear Biomed. Phys. 2007, 1, 3.

- Bullmore, E.; Sporns, O. Complex brain networks: Graph theoretical analysis of structural and functional systems. Nat. Rev. Neurosci. 2009, 10, 186–198.

- Toga, A.W.; Mazziotta, J.C. Brain Mapping: The Systems; Gulf Professional Publishing: Houston, TX, USA, 2000; Volume 2.

- Toga, A.W.; Mazziotta, J.C. Brain Mapping: The Methods; Academic Press: Cambridge, MA, USA, 2002; Volume 1.

- Dale, A.M.; Halgren, E. Spatiotemporal mapping of brain activity by integration of multiple imaging modalities. Curr. Opin. Neurobiol. 2001, 11, 202–208.

- Achard, S.; Bullmore, E. Efficiency and cost of economical brain functional networks. PLoS Comput. Biol. 2007, 3, 0174–0183.

- Park, C.H.; Chang, W.H.; Ohn, S.H.; Kim, S.T.; Bang, O.Y.; Pascual-Leone, A.; Kim, Y.H. Longitudinal changes of resting-state functional connectivity during motor recovery after stroke. Stroke 2011, 42, 1357–1362.

- Ding, L.; Shou, G.; Cha, Y.H.; Sweeney, J.A.; Yuan, H. Brain-wide neural co-activations in resting human. NeuroImage 2022, 260, 119461.

- Rizkallah, J.; Amoud, H.; Fraschini, M.; Wendling, F.; Hassan, M. Exploring the Correlation Between M/EEG Source–Space and fMRI Networks at Rest. Brain Topogr. 2020, 33, 151–160.

- Astolfi, L.; Cincotti, F.; Mattia, D.; Marciani, M.G.; Baccala, L.A.; de Vico Fallani, F.; Salinari, S.; Ursino, M.; Zavaglia, M.; Ding, L.; et al. Comparison of different cortical connectivity estimators for high-resolution EEG recordings. Hum. Brain Mapp. 2007, 28, 143–157.

- Rolle, C.E.; Narayan, M.; Wu, W.; Toll, R.; Johnson, N.; Caudle, T.; Yan, M.; El-Said, D.; Watts, M.; Eisenberg, M.; et al. Functional connectivity using high density EEG shows competitive reliability and agreement across test/retest sessions. J. Neurosci. Methods 2022, 367, 109424.

- Gore, J.C. Principles and practice of functional MRI of the human brain. J. Clin. Investig. 2003, 112, 4–9.

- Friston, K.J.; Frith, C.D. Schizophrenia: A disconnection syndrome. Clin. Neurosci. 1995, 3, 89–97.

- Frantzidis, C.A.; Vivas, A.B.; Tsolaki, A.; Klados, M.A.; Tsolaki, M.; Bamidis, P.D. Functional disorganization of small-world brain networks in mild Alzheimer’s Disease and amnestic Mild Cognitive Impairment: An EEG study using Relative Wavelet Entropy (RWE). Front. Aging Neurosci. 2014, 6, 224.

- Fogelson, N.; Li, L.; Li, Y.; Fernandez-del Olmo, M.; Santos-Garcia, D.; Peled, A. Functional connectivity abnormalities during contextual processing in schizophrenia and in Parkinson’s disease. Brain Cogn. 2013, 82, 243–253.

- Holmes, M.D. Dense array EEG: Methodology and new hypothesis on epilepsy syndromes. Epilepsia 2008, 49, 3–14.

- Kleffner-Canucci, K.; Luu, P.; Naleway, J.; Tucker, D.M. A novel hydrogel electrolyte extender for rapid application of EEG sensors and extended recordings. J. Neurosci. Methods 2012, 206, 83–87.

- Lebedev, M.A.; Nicolelis, M.A. Brain–machine interfaces: Past, present and future. Trends Neurosci. 2006, 29, 536–546.

- Debener, S.; Ullsperger, M.; Siegel, M.; Engel, A.K. Single-trial EEG–fMRI reveals the dynamics of cognitive function. Trends Cogn. Sci. 2006, 10, 558–563.

- Wolpaw, J.R.; Wolpaw, E.W. Brain-computer interfaces: Something new under the sun. In Brain-Computer Interfaces: Principles and Practice; Oxford University Press: Oxford, UK, 2012.

- Bestmann, S.; Feredoes, E. Combined neurostimulation and neuroimaging in cognitive neuroscience: Past, present, and future. Ann. N. Y. Acad. Sci. 2013, 1296, 11–30.

- Friston, K.J. Modalities, modes, and models in functional neuroimaging. Science 2009, 326, 399–403.

- Koch, M.A.; Norris, D.G.; Hund-Georgiadis, M. An investigation of functional and anatomical connectivity using magnetic resonance imaging. Neuroimage 2002, 16, 241–250.

- Damoiseaux, J.S.; Rombouts, S.; Barkhof, F.; Scheltens, P.; Stam, C.J.; Smith, S.M.; Beckmann, C.F. Consistent resting-state networks across healthy subjects. Proc. Natl. Acad. Sci. USA 2006, 103, 13848–13853.

- Passingham, R.E.; Stephan, K.E.; Kötter, R. The anatomical basis of functional localization in the cortex. Nat. Rev. Neurosci. 2002, 3, 606–616.

- Mantini, D.; Perrucci, M.G.; Del Gratta, C.; Romani, G.L.; Corbetta, M. Electrophysiological signatures of resting state networks in the human brain. Proc. Natl. Acad. Sci. USA 2007, 104, 13170–13175.

- Cohen, A.L.; Fair, D.A.; Dosenbach, N.U.; Miezin, F.M.; Dierker, D.; Van Essen, D.C.; Schlaggar, B.L.; Petersen, S.E. Defining functional areas in individual human brains using resting functional connectivity MRI. Neuroimage 2008, 41, 45–57.

- Rykhlevskaia, E.; Gratton, G.; Fabiani, M. Combining structural and functional neuroimaging data for studying brain connectivity: A review. Psychophysiology 2008, 45, 173–187.

- Hagmann, P.; Cammoun, L.; Gigandet, X.; Meuli, R.; Honey, C.J.; Wedeen, V.J.; Sporns, O. Mapping the structural core of human cerebral cortex. PLoS Biol. 2008, 6, e159.

- Honey, C.J.; Sporns, O.; Cammoun, L.; Gigandet, X.; Thiran, J.P.; Meuli, R.; Hagmann, P. Predicting human resting-state functional connectivity from structural connectivity. Proc. Natl. Acad. Sci. USA 2009, 106, 2035–2040.

- Friston, K.J. Functional and effective connectivity in neuroimaging: A synthesis. Hum. Brain Mapp. 1994, 2, 56–78.

- Friston, K.; Moran, R.; Seth, A.K. Analysing connectivity with Granger causality and dynamic causal modelling. Curr. Opin. Neurobiol. 2013, 23, 172–178.

- Friston, K.J. Functional and Effective Connectivity: A Review. Brain Connect. 2011, 1, 13–36.

- Sakkalis, V. Review of advanced techniques for the estimation of brain connectivity measured with EEG/MEG. Comput. Biol. Med. 2011, 41, 1110–1117.

- Goldenberg, D.; Galván, A. The use of functional and effective connectivity techniques to understand the developing brain. Dev. Cogn. Neurosci. 2015, 12, 155–164.

- Granger, C.W. Investigating causal relations by econometric models and cross-spectral methods. Econom. J. Econom. Soc. 1969, 37, 424–438.

- Tononi, G.; Sporns, O. Measuring information integration. BMC Neurosci. 2003, 4, 1–20.

- Brillinger, D.R.; Bryant, H.L.; Segundo, J.P. Identification of synaptic interactions. Biol. Cybern. 1976, 22, 213–228.

- Geweke, J.F. Measures of conditional linear dependence and feedback between time series. J. Am. Stat. Assoc. 1984, 79, 907–915.

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

- Wibral, M.; Vicente, R.; Lizier, J.T. Directed Information Measures in Neuroscience; Springer: Berlin/Heidelberg, Germany, 2014; Volume 724.

- Muresan, R.C.; Jurjut, O.F.; Moca, V.V.; Singer, W.; Nikolic, D. The oscillation score: An efficient method for estimating oscillation strength in neuronal activity. J. Neurophysiol. 2008, 99, 1333–1353.

- von Sachs, R. Nonparametric spectral analysis of multivariate time series. Annu. Rev. Stat. Its Appl. 2020, 7, 361–386.

- Sankari, Z.; Adeli, H.; Adeli, A. Wavelet coherence model for diagnosis of Alzheimer disease. Clin. EEG Neurosci. 2012, 43, 268–278.

- Chen, J.L.; Ros, T.; Gruzelier, J.H. Dynamic changes of ICA-derived EEG functional connectivity in the resting state. Hum. Brain Mapp. 2013, 34, 852–868.

- Ieracitano, C.; Duun-Henriksen, J.; Mammone, N.; La Foresta, F.; Morabito, F.C. Wavelet coherence-based clustering of EEG signals to estimate the brain connectivity in absence epileptic patients. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1297–1304.

- Lachaux, J.P.; Lutz, A.; Rudrauf, D.; Cosmelli, D.; Le Van Quyen, M.; Martinerie, J.; Varela, F. Estimating the time-course of coherence between single-trial brain signals: An introduction to wavelet coherence. Neurophysiol. Clin. Neurophysiol. 2002, 32, 157–174.

- Khare, S.K.; Bajaj, V.; Acharya, U.R. SchizoNET: A robust and accurate Margenau-Hill time-frequency distribution based deep neural network model for schizophrenia detection using EEG signals. Physiol. Meas. 2023, 44, 035005.

- Mousavi, S.M.; Asgharzadeh-Bonab, A.; Ranjbarzadeh, R. Time-frequency analysis of EEG signals and GLCM features for depth of anesthesia monitoring. Comput. Intell. Neurosci. 2021, 2021, 8430565.

- Lachaux, J.P.; Rodriguez, E.; Martinerie, J.; Varela, F.J. Measuring phase synchrony in brain signals. Hum. Brain Mapp. 1999, 8, 194–208.

- Pereda, E.; Quiroga, R.Q.; Bhattacharya, J. Nonlinear multivariate analysis of neurophysiological signals. Prog. Neurobiol. 2005, 77, 1–37.

- Xu, B.G.; Song, A.G. Pattern recognition of motor imagery EEG using wavelet transform. J. Biomed. Sci. Eng. 2008, 1, 64.

- Akaike, H. A new look at statistical model identification. IEEE Trans. Autom. Control 1974, 19, 716–723.

- Schwarz, G. Estimating the dimension of a model. Ann. Stat. 1978, 6, 461–464.

- Sanchez-Romero, R.; Cole, M.W. Combining multiple functional connectivity methods to improve causal inferences. J. Cogn. Neurosci. 2021, 33, 180–194.

- Ding, M.; Chen, Y.; Bressler, S.L. Granger causality: Basic theory and application to neuroscience. In Handbook of Time Series Analysis: Recent Theoretical Developments and Applications; Wiley: Weinheim, Germany, 2006; pp. 437–460.

- Barnett, L.; Seth, A.K. Behaviour of Granger Causality under Filtering: Theoretical Invariance and Practical Application. J. Neurosci. Methods 2011, 201, 404–419.

- Sakkalis, V.; Cassar, T.; Zervakis, M.; Camilleri, K.P.; Fabri, S.G.; Bigan, C.; Karakonstantaki, E.; Micheloyannis, S. Parametric and nonparametric EEG analysis for the evaluation of EEG activity in young children with controlled epilepsy. Comput. Intell. Neurosci. 2008, 2008, 462593.

- Baccalá, L.A.; Sameshima, K. Partial Directed Coherence and the Vector Autoregressive Modelling Myth and a Caveat. Front. Netw. Physiol. 2022, 2, 13.

- Ash, R.B. Information Theory; Courier Corporation: Chelmsford, MA, USA, 2012.

- Porta, A.; Faes, L. Wiener–Granger causality in network physiology with applications to cardiovascular control and neuroscience. Proc. IEEE 2015, 104, 282–309.

- Faes, L.; Marinazzo, D.; Jurysta, F.; Nollo, G. Linear and non-linear brain–heart and brain–brain interactions during sleep. Physiol. Meas. 2015, 36, 683.

- Stam, C.J. Nonlinear dynamical analysis of EEG and MEG: Review of an emerging field. Clin. Neurophysiol. 2005, 116, 2266–2301.

- Leistritz, L.; Schiecke, K.; Astolfi, L.; Witte, H. Time-variant modeling of brain processes. Proc. IEEE 2015, 104, 262–281.

- Milde, T.; Leistritz, L.; Astolfi, L.; Miltner, W.H.; Weiss, T.; Babiloni, F.; Witte, H. A new Kalman filter approach for the estimation of high-dimensional time-variant multivariate AR models and its application in analysis of laser-evoked brain potentials. Neuroimage 2010, 50, 960–969.

- Wilke, C.; Ding, L.; He, B. Estimation of time-varying connectivity patterns through the use of an adaptive directed transfer function. IEEE Trans. Biomed. Eng. 2008, 55, 2557–2564.

- Astolfi, L.; Cincotti, F.; Mattia, D.; Fallani, F.D.V.; Tocci, A.; Colosimo, A.; Salinari, S.; Marciani, M.G.; Hesse, W.; Witte, H.; et al. Tracking the time-varying cortical connectivity patterns by adaptive multivariate estimators. IEEE Trans. Biomed. Eng. 2008, 55, 902–913.

- Shannon, C. Communication in the Presence of Noise. Proc. IRE 1949, 37, 10–21.

- Geselowitz, D. The zero of potential. IEEE Eng. Med. Biol. Mag. 1998, 17, 128–136.

- Kayser, J.; Tenke, C.E. In Search of the Rosetta Stone for Scalp EEG: Converging on Reference-Free Techniques. Clin. Neurophysiol. 2010, 121, 1973–1975.

- Quyen, M.L.V.; Staba, R.; Bragin, A.; Dickson, C.; Valderrama, M.; Fried, I.; Engel, J. Large-Scale Microelectrode Recordings of High-Frequency Gamma Oscillations in Human Cortex during Sleep. J. Neurosci. 2010, 30, 7770–7782.

- Jacobs, J.; Staba, R.; Asano, E.; Otsubo, H.; Wu, J.; Zijlmans, M.; Mohamed, I.; Kahane, P.; Dubeau, F.; Navarro, V.; et al. High-Frequency Oscillations (HFOs) in Clinical Epilepsy. Prog. Neurobiol. 2012, 98, 302–315.

- Grosmark, A.D.; Buzsáki, G. Diversity in Neural Firing Dynamics Supports Both Rigid and Learned Hippocampal Sequences. Science 2016, 351, 1440–1443.

- Gliske, S.V.; Irwin, Z.T.; Chestek, C.; Stacey, W.C. Effect of Sampling Rate and Filter Settings on High Frequency Oscillation Detections. Clin. Neurophysiol. Off. J. Int. Fed. Clin. Neurophysiol. 2016, 127, 3042–3050.

- Bolea, J.; Pueyo, E.; Orini, M.; Bailón, R. Influence of Heart Rate in Non-linear HRV Indices as a Sampling Rate Effect Evaluated on Supine and Standing. Front. Physiol. 2016, 7, 501.

- Jing, H.; Takigawa, M. Low Sampling Rate Induces High Correlation Dimension on Electroencephalograms from Healthy Subjects. Psychiatry Clin. Neurosci. 2000, 54, 407–412.

- Widmann, A.; Schröger, E.; Maess, B. Digital Filter Design for Electrophysiological Data–a Practical Approach. J. Neurosci. Methods 2015, 250, 34–46.

- Mullen, T. Cleanline Tool. 2012. Available online: https://www.nitrc.org/projects/cleanline/ (accessed on 10 December 2022).

- Bigdely-Shamlo, N.; Mullen, T.; Kothe, C.; Su, K.M.; Robbins, K.A. The PREP Pipeline: Standardized Preprocessing for Large-Scale EEG Analysis. Front. Neuroinform. 2015, 9, 16.

- Phadikar, S.; Sinha, N.; Ghosh, R.; Ghaderpour, E. Automatic muscle artifacts identification and removal from single-channel eeg using wavelet transform with meta-heuristically optimized non-local means filter. Sensors 2022, 22, 2948.

- Ghosh, R.; Phadikar, S.; Deb, N.; Sinha Sr, N.; Das, P.; Ghaderpour, E. Automatic Eye-blink and Muscular Artifact Detection and Removal from EEG Signals using k-Nearest Neighbour Classifier and Long Short-Term Memory Networks. IEEE Sens. J. 2023, 23, 5422–5436.

- da Cruz, J.R.; Chicherov, V.; Herzog, M.H.; Figueiredo, P. An Automatic Pre-Processing Pipeline for EEG Analysis (APP) Based on Robust Statistics. Clin. Neurophysiol. Off. J. Int. Fed. Clin. Neurophysiol. 2018, 129, 1427–1437.

- Miljevic, A.; Bailey, N.W.; Vila-Rodriguez, F.; Herring, S.E.; Fitzgerald, P.B. Electroencephalographic Connectivity: A Fundamental Guide and Checklist for Optimal Study Design and Evaluation. Biol. Psychiatry Cogn. Neurosci. Neuroimaging 2022, 7, 546–554.

- Zaveri, H.P.; Duckrow, R.B.; Spencer, S.S. The effect of a scalp reference signal on coherence measurements of intracranial electroencephalograms. Clin. Neurophysiol. 2000, 111, 1293–1299.

- Croft, R.; Barry, R. Removal of Ocular Artifact from the EEG: A Review. Neurophysiol. Clin. Neurophysiol. 2000, 30, 5–19.

- Wallstrom, G.L.; Kass, R.E.; Miller, A.; Cohn, J.F.; Fox, N.A. Automatic Correction of Ocular Artifacts in the EEG: A Comparison of Regression-Based and Component-Based Methods. Int. J. Psychophysiol. 2004, 53, 105–119.

- Romero, S.; Mañanas, M.A.; Barbanoj, M.J. A Comparative Study of Automatic Techniques for Ocular Artifact Reduction in Spontaneous EEG Signals Based on Clinical Target Variables: A Simulation Case. Comput. Biol. Med. 2008, 38, 348–360.

- Sörnmo, L.; Laguna, P. Bioelectrical Signal Processing in Cardiac and Neurological Applications; Elsevier: Amsterdam, The Netherlands; Academic Press: Boca Raton, FL, USA, 2005.

- Picton, T.; Bentin, S.; Berg, P.; Donchin, E.; Hillyard, S.; Johnson JR., R.; Miller, G.; Ritter, W.; Ruchkin, D.; Rugg, M.; et al. Guidelines for Using Human Event-Related Potentials to Study Cognition: Recording Standards and Publication Criteria. Psychophysiology 2000, 37, 127–152.

- Van der Meer, M.; Tewarie, P.; Schoonheim, M.; Douw, L.; Barkhof, F.; Polman, C.; Stam, C.; Hillebrand, A. Cognition in MS Correlates with Resting-State Oscillatory Brain Activity: An Explorative MEG Source-Space Study. NeuroImage Clin. 2013, 2, 727–734.

- Dominguez, L.G.; Radhu, N.; Farzan, F.; Daskalakis, Z.J. Characterizing Long Interval Cortical Inhibition over the Time-Frequency Domain. PLoS ONE 2014, 9, e92354.

- Re-Referencing. Available online: https://eeglab.org/tutorials/ConceptsGuide/rereferencing_background.html (accessed on 10 December 2022).

- Faes, L.; Marinazzo, D.; Nollo, G.; Porta, A. An information-theoretic framework to map the spatiotemporal dynamics of the scalp electroencephalogram. IEEE Trans. Biomed. Eng. 2016, 63, 2488–2496.

- Vicente, R.; Wibral, M.; Lindner, M.; Pipa, G. Transfer entropy—A model-free measure of effective connectivity for the neurosciences. J. Comput. Neurosci. 2011, 30, 45–67.

- Lai, M.; Demuru, M.; Hillebrand, A.; Fraschini, M. A comparison between scalp-and source-reconstructed EEG networks. Sci. Rep. 2018, 8, 12269.

- Jatoi, M.A.; Kamel, N.; Malik, A.S.; Faye, I.; Begum, T. A Survey of Methods Used for Source Localization Using EEG Signals. Biomed. Signal Process. Control 2014, 11, 42–52.

- Snieder, R.; Trampert, J. Inverse Problems in Geophysics. In Wavefield Inversion; Wirgin, A., Ed.; Springer: Vienna, Austria, 1999; pp. 119–190.
